Amazon: Machine-Generated Content Adds to Overhead Costs

July 7, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-10.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

“Amazon Has a Big Problem As AI-Generated Books Flood Kindle Unlimited” makes it clear that Amazon is going to have to re-think how it runs its self-publishing operation and figure out how to deal with machine-generated books from “respected” publishers.

The author of the article is expressing concern about ChatGPT-type outputs being assembled into electronic books. That concern is focused on Amazon and its ageing, arthritic Kindle eBook business. With text-to-voice tools, I suppose one should think about Audible audiobooks spit out by software. The culprit, however, may be Amazon itself. Paying a person to read a book for seven hours without screwing up, and making sure the sound is acceptable when the reader has a stuffed nose, can be pricey.

A senior Amazon executive thinks to herself, “How can I fix this fake content stuff? I should really update my LinkedIn profile too.” Will the lucky executive charged with fixing the problem identified in the article be allowed to eliminate revenue? Yep, get going on the LinkedIn profile first. Tackle the fake stuff later.

The write up points out:

the mass uploading of AI-generated books could be used to facilitate click-farming, where ‘bots’ click through a book automatically, generating royalties from Amazon Kindle Unlimited, which pays authors by the amount of pages that are read in an eBook.
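The click-farm arithmetic is simple because Kindle Unlimited pays by pages read. Here is a minimal Python sketch of why bots paging through junk books could pay. Every number in it is a hypothetical placeholder: Amazon's actual per-page rate varies, and the book, bot, and page counts are made up for illustration.

```python
def estimated_payout(books: int, bots: int, pages_per_read: int, rate_per_page: float) -> float:
    """Hypothetical royalty if each bot 'reads' every page of every book once."""
    pages_read = books * bots * pages_per_read
    return pages_read * rate_per_page


# All inputs are made-up placeholders, NOT Amazon's published figures.
HYPOTHETICAL_RATE = 0.004  # dollars per page read (assumption)
payout = estimated_payout(books=100, bots=50, pages_per_read=200, rate_per_page=HYPOTHETICAL_RATE)
print(f"${payout:,.2f}")
```

The point is the multiplication: payout grows with books times bots times pages, so cheap machine-generated volume plus automated "reading" scales nicely for the bad actor.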

And what’s Amazon doing about this quasi-fake content? The article reports:

It [Amazon] didn’t explicitly state that it was making an effort specifically to address the apparent spam-like persistent uploading of nonsensical and incoherent AI-generated books.

Then, the article raises the issues of “quality” and “authenticity.” I am not sure what these two glory words mean. My impression is that a machine-generated book is not as good as one crafted by a subject matter expert or motivated human author. If I am right, the editors at TechRadar are apparently oblivious to the idea of using XML-structured content and a MarkLogic-type tool to slice and dice content. Then the components are assembled into a reference book. I want to point out that this method has been in use by professional publishers for a number of years. Because I signed a confidentiality agreement, I am not able to identify this outfit. But I still recall the buzz of excitement that rippled through one officers’ meeting at this outfit when those listening to a presentation realized [a] humanoids could be terminated and a reduced staff could produce more books and [b] the guts of the technology was a database, a technology mostly understood by those with a few technical conferences under their belt. Yippy! No one had to learn anything. Just calculate the financial benefit of dumping humans and figure out how to expense the contractors who could format content from a hovel in a Myanmar-type of low-cost location. At night, the executives dreamed about their bonuses for hitting their financial targets and how to start RIF’ing editorial staff, subject matter experts, and assorted specialists who doodled with front matter, footnotes, and fonts.

Net net: There is no fix. The write up illustrates the lack of understanding about how large sections of the information industry use technology and the established procedures for dealing with cost-saving opportunities. Quality means more revenue from decisions. Authenticity is a marketing job. Amazon has a content problem and has to gear up its tools and business procedures to cope with machine-generated content, whether in product reviews or eBooks.

Stephen E Arnold, July 7, 2023

Pricing Smart Software: Buy Now Because Prices Are Going Up in 18 hours 46 Minutes and Nine Seconds, Eight Seconds, Seven…

July 7, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-6.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I ignore most of the apps, cloud, and hybrid products and services infused with artificial intelligence. As one wit observed, AI means artificial ignorance. What I find interesting are the pricing models used by some of the firms. I want to direct your attention to Sheeter.ai. The service lets one say in natural language something like “Calculate the median of A:Z rows.” The system then spits out the Excel formula, which can be pasted into a cell. The Sheeter.ai formula works in Google Sheets too because Google wants to watch Microsoft Excel shrivel and die a painful death. The benefits of the approach are similar to services which convert plain-language requests into well-formed SQL code (in theory). Will the dynamic duo of Google and Microsoft implement a similar feature in their spreadsheets? Of course, but Sheeter.ai is betting its approach is better.

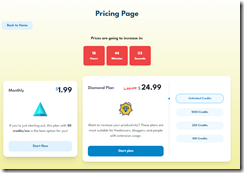

The innovation for which Sheeter.ai deserves a pat on the back is its approach to pricing. The screenshot makes clear that the price one sees on the screen at a particular point in time is going to go up. A countdown timer helps boost user anxiety about price.

I was disappointed that the graphics did not include a variant of James Bond (the super spy) chained to an explosive device, with Bond, James Bond, using his brain to deactivate the timer. Obviously he succeeds, because there has been half a century of Bond, James Bond, films. He survives every time.

Will other AI-infused products and services implement anxiety patterns to induce people to provide their name, email, and credit card? It seems in line with the direction in which online and AI businesses are moving. Right, Mr. Bond. Nine, eight, seven….

Stephen E Arnold, July 7, 2023

Googzilla Annoyed: No Longer to Stomp Around Scaring People

July 6, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-7.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

“Sweden Orders Four Companies to Stop Using Google Tool” reports that the Swedish government “has ordered four companies to stop using a Google tool that measures and analyzed Web traffic.” The idea informing the Swedish decision is to control the rapacious creature’s desire for “personal data.” Is the lovable Googzilla slurping data and allegedly violating privacy? I have no idea.

In this MidJourney visual confection, it appears that a Tyrannosaurus Rex named Googzilla is watching children. Is Googzilla displaying abnormal and possibly illegal behavior, particularly with regard to personal data?

The write up states:

The IMY said it considers the data sent to Google Analytics in the United States by the four companies to be personal data and that “the technical security measures that the companies have taken are not sufficient to ensure a level of protection that essentially corresponds to that guaranteed within the EU…”

Net net: Sweden is not afraid of the Google. Will other countries try their hand at influencing the lovable beastie?

Stephen E Arnold, July 6, 2023

Google and Its Use of the Word “Public”: A Clever and Revenue-Generating Policy Edit

July 6, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-9.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

If one has the cash, one can purchase user-generated data from more than 500 data publishers in the US. Some of these outfits are unknown. When a liberal Wall Street Journal reporter learns about Venntel or one of these outfits, outrage ensues. I am not going to explain how data from a user finds its way into the hands of a commercial data aggregator or database publisher. Why not Google it? Let me know how helpful that research will be.

Why are these outfits important? The reasons include:

- Direct from app information obtained when a clueless mobile user accepts the Terms of Use. Do you hear the slurping sounds?

- Organizations with financial data and savvy data wranglers who cross-correlate data from multiple sources.

- Outfits which assemble real-time or near-real-time user location data. How useful are those data in identifying military locations with a population of individuals who exercise wearing helpful heart and step monitoring devices?

Navigate to “Google’s Updated Privacy Policy States It Can Use Public Data to Train its AI Models.” The write up does not make clear what “public data” are. My hunch is that the Google is not exceptionally helpful with its definitions of important “obvious” concepts. The disconnect is the point of the policy change. Public data or third-party data can be purchased, licensed, used on a cloud service like an Oracle-like BlueKai clone, or obtained as part of a commercial deal with everyone’s favorite online service LexisNexis or one of its units.

A big advertiser demonstrates joy after reading about Google’s detailed prospect targeting reports. Dossiers of big buck buyers are available to those relying on Google for online text and video sales and marketing. The image of this happy media buyer is from the elves at MidJourney.

The write up states with typical Silicon Valley “real” news flair:

By updating its policy, it’s letting people know and making it clear that anything they publicly post online could be used to train Bard, its future versions and any other generative AI product Google develops.

Okay. “the weekend” mentioned in the write up is the 4th of July weekend. Is this a hot news or a slow news time? If you picked “hot”, you are respectfully wrong.

Now back to “public.” Think in terms of Google’s licensing third-party data, cross correlating those data with its log data generated by users, and any proprietary data obtained by Google’s Android or Chrome software, Gmail, its office apps, and any other data which a user clicking one of those “Agree” boxes cheerfully mouses through.

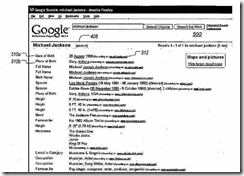

The idea appears in Google patent US7774328 B2. What’s interesting is that this granted patent does not include a quite helpful figure from the patent application US 2007/0198481. Here’s the 16-year-old figure. The subject is Michael Jackson. The text is difficult to read (write your Congressman or Senator to complain). The output is a machine-generated dossier about the pop star. Note that it includes aliases. Other useful data are in the report. The granted patent presents more vanilla versions of the dossier generator, however.

The use of “public” data may enhance the type of dossier or other meaty report about a person. How about a map showing the travels of a person prior to providing a geo-fence about an individual’s location on a specific day and time? Useful for some applications? If these “inventions” are real, then the potential use cases are interesting. Will advertisers be interested? Probably. Can you think of other use cases? I can.

The cited article focuses on AI. I think that more substantive use cases fit nicely with the shift in “policy” for public data. Have you asked yourself, “What will Mandiant professionals find interesting in cross-correlated data?”

Stephen E Arnold, July 6, 2023

Quantum Seeks Succor Amidst the AI Tsunami

July 5, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-5.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Imagine the heartbreak of a quantum wizard in the midst of the artificial intelligence tsunami. What can a “just around the corner” technology do to avoid being washed down the drain? The answer is public relations, media coverage, fascinating announcements. And what companies are practicing this dark art of outputting words instead of fully functional, ready-to-use solutions?

Give up?

I suggest that Google and IBM are the dominant players. Imagine an online ad outfit and a consulting firm with mainframes working overtime to make quantum computing exciting again. Frankly I am surprised that Intel has not climbed on its technology stallion and ridden Horse Ridge or Horse whatever into PR Land. But, hey, one has to take what one’s newsfeed delivers. The first 48 hours of July 2023 produced two interesting items.

The first is “Supercomputer Makes Calculations in Blink of an Eye That Take Rivals 47 Years.” The write up is about the Alphabet Google YouTube construct and asserts:

While the 2019 machine had 53 qubits, the building blocks of quantum computers, the next generation device has 70. Adding more qubits improves a quantum computer’s power exponentially, meaning the new machine is 241 million times more powerful than the 2019 machine. The researchers said it would take Frontier, the world’s leading supercomputer, 6.18 seconds to match a calculation from Google’s 53-qubit computer from 2019. In comparison, it would take 47.2 years to match its latest one. The researchers also claim that their latest quantum computer is more powerful than demonstrations from a Chinese lab which is seen as a leader in the field.
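The quoted numbers can be checked with a few lines of Python. In this sketch, which assumes a 365.25-day year, the 241 million figure matches the ratio of the two Frontier simulation times (47.2 years versus 6.18 seconds). Counting qubits alone gives a different number: going from 53 to 70 qubits grows the 2^n state-vector dimension by 2^17, or 131,072. So the 241 million appears to come from the timing estimates rather than from qubit arithmetic.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumes a Julian year


def speedup_ratio(classical_years: float, baseline_seconds: float) -> float:
    """Ratio of two classical simulation times, both expressed in seconds."""
    return classical_years * SECONDS_PER_YEAR / baseline_seconds


def state_space_growth(old_qubits: int, new_qubits: int) -> int:
    """Factor by which the 2**n state-vector dimension grows."""
    return 2 ** (new_qubits - old_qubits)


ratio = speedup_ratio(47.2, 6.18)
print(f"Frontier time ratio: {ratio:,.0f}")  # about 241 million
print(f"2**(70 - 53) = {state_space_growth(53, 70):,}")  # 131,072
```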

Can one see this fantastic machine which is 241 million times more powerful than the 2019 machine? Well, one can see a paper which talks about the machine. That is good enough for the Yahoo real news report. What do the Chinese, who have been kicked to the side of the Information Superhighway, say? Are you joking? That would be work. Writing about a Google paper and calling around is sufficient.

If you want to explore the source of this revelation, navigate to “Phase Transition in Random Circuit Sampling.” The paper, which has more than 175 authors, is available from ArXiv.org at https://arxiv.org/abs/2304.11119. The list of authors does not appear in the PDF until page 37, and only about 80 appear on the ArXiv abstract page. I scanned the list of authors and I did not see Jeff Dean’s name. Dr. Dean is/was a Big Dog at the Google but …

Just to make darned sure that Google’s Quantum Supremacy is recognized, the organizations paddling the AGY marketing stream include NASA, NIST, Harvard, and more than a dozen computing Merlins. So there! (Does AGY have an inferiority complex?)

The second quantum goody is the write up “IBM Unlocks Quantum Utility With its 127-Qubit “Eagle” Quantum Processing Unit.” The write up reports as actual factual IBM’s superior leapfrogging quantum innovation; to wit, coping with noise and knowing if the results are accurate. The article says via a quote from an expert:

The crux of the work is that we can now use all 127 of Eagle’s qubits to run a pretty sizable and deep circuit — and the numbers come out correct

The write up explains:

The work done by IBM here has already had impact on the company’s [IBM’s] roadmap – ZNE has that appealing quality of making better qubits out of those we already can control within a Quantum Processing Unit (QPU). It’s almost as if we had a megahertz increase – more performance (less noise) without any additional logic. We can be sure these lessons are being considered and implemented wherever possible on the road to a “million + qubits”.
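ZNE in the quote stands for zero-noise extrapolation: run the same circuit at deliberately amplified noise levels, measure an expectation value at each level, and extrapolate the trend back to zero noise. Below is a minimal Python sketch of the extrapolation step only. It assumes made-up measurements (not IBM’s data) and uses Richardson-style polynomial extrapolation, one common ZNE choice; the write up does not say how IBM amplifies the noise or which fit it uses.

```python
from typing import Sequence


def zero_noise_extrapolate(scales: Sequence[float], values: Sequence[float]) -> float:
    """Evaluate the polynomial through the (scale, value) points at scale = 0.

    Uses the Lagrange form of the interpolating polynomial, i.e.
    Richardson-style extrapolation to the zero-noise limit.
    """
    if len(scales) != len(values) or not scales:
        raise ValueError("need matching, non-empty scale and value lists")
    estimate = 0.0
    for i, (x_i, y_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, x_j in enumerate(scales):
            if j != i:
                weight *= (0.0 - x_j) / (x_i - x_j)
        estimate += weight * y_i
    return estimate


# Hypothetical expectation values measured at noise scale factors 1x, 2x, 3x.
noise_scales = [1.0, 2.0, 3.0]
measured = [0.70, 0.60, 0.50]
print(round(zero_noise_extrapolate(noise_scales, measured), 6))  # 0.8
```

The appeal the quote describes is visible here: no extra hardware, just more circuit runs and some arithmetic to estimate what a quieter processor would have reported.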

Can one access this new IBM approach? Well, there is this article and a chart.

Which quantum innovation is the more significant? In terms of putting the technology in one’s laptop, not much. Perhaps one can use the system via the cloud? Some may be able to get outputs… with permission of course.

But which is the PR winner? In my opinion, the Google wins because it presents a description of a concept with more authors. IBM, get your marketing in gear. By the way, what’s going on with the Red Hat dust-up? Quantum news releases won’t make that open source hassle go away. And, Google, the quantum stuff and the legion of authors is unlikely to impress European regulators.

And why make quantum noises before a US national holiday? My hunch is that quantum is perfect holiday fodder. My question, “When will the burgers be done?”

Stephen E Arnold, July 5, 2023

Step 1: Test AI Writing Stuff. Step 2: Terminate Humanoids. Will Outrage Prevent the Inevitable?

July 5, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-2.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I am fascinated by the information (allegedly actual factual) in “Gizmodo and Kotaku Staff Furious After Owner Announces Move to AI Content.” Part of my interest is the subtitle:

God, this is gonna be such a f***ing nightmare.

Ah, for whom, pray tell. Probably not for the owners, who may see a pot of gold at the end of the smart software rainbow; for example, Costs Minus Humans Minus Health Care Minus HR Minus Miscellaneous Humanoid costs like latte makers, office space, and salaries / bonuses. What do these produce? More money (value) for the lucky most senior managers and selected stakeholders. Humanoids lose; software wins.

A humanoid writer sits at desk and wonders if the smart software will become a pet rock or a creature let loose to ruin her life by those who want a better payoff.

For the humanoids, it is hasta la vista. Assume the quality is worse? Then the analysis requires quantifying “worse.” Software will be cheaper over a time interval, expensive humans lose. Quality is like love and ethics. Money matters; quality becomes good enough.

Will fury, outrage, or protests make a difference? Nope.

The write up points out:

“AI content will not replace my work — but it will devalue it, place undue burden on editors, destroy the credibility of my outlet, and further frustrate our audience,” Gizmodo journalist Lin Codega tweeted in response to the news. “AI in any form, only undermines our mission, demoralizes our reporters, and degrades our audience’s trust.” “Hey! This sucks!” tweeted Kotaku writer Zack Zwiezen. “Please retweet and yell at G/O Media about this! Thanks.”

Much to the delight of her significant others, the “f***ing nightmare” is from the creative, imaginative humanoid Ashley Feinberg.

An ideal candidate for early replacement by a software system and a list of stop words.

Stephen E Arnold, July 5, 2023

Learning Means Effort, Attention, and Discipline. No, We Have AI, or AI Has Us

July 4, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-8.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

My newsfeed of headlines produced a three-year young essay titled “How to Learn Better in the Digital Age.” The date on the document is November 2020. (Have you noticed how rarely a specific date appears on a document?)

MidJourney provided this illustration of me doing math homework with both hands in 1952. I was fatter and definitely uglier than the representation in the picture. I want to point out: [a] no mobile phone, [b] no calculator, [c] no radio or TV, [d] no computer, and [e] no mathy father breathing down my neck. (He was busy handling the finances of a weapons manufacturer which dabbled in metal coat hangers.) Was homework hard? Nope, just part of the routine in Campinas, Brazil, and the thrilling Calvert Course.

The write up contains a simile which does not speak to me; namely, the functioning of the human brain is emulated to some degree in smart software. I am not in that dog fight. I don’t care because I am a dinobaby.

The important statement in the essay, in my opinion, is this one:

… we need to engage with what we encounter if we wish to absorb it long term. In a smartphone-driven society, real engagement, beyond the share or like or retweet, got fundamentally difficult – or, put another way, not engaging got fundamentally easier. Passive browsing is addictive: the whole information supply chain is optimized for time spent in-app, not for retention and proactivity.

I marvel at the examples of a failure to learn. United Airlines strands people. The CEO has a fix: Take a private jet. Clerks in convenience stores cannot make change even when the cash register displays the amount to return to the customer. Yeah, figuring out pennies, dimes, and quarters is a tough one. New and expensive autos near where I live sit on the side of the road awaiting a tow truck from the Land Rover- or Maserati-type dealer. The local hospital has been unable to verify appointments and allegedly find some X-ray images eight weeks after a cyber attack on an insecure system. Hip, HIPAA hooray, Hip HIPAA hooray. I have a basket of other examples, and I would wager $1.00 US that you have one or two to contribute. But why? The effects of poor thinking, reading, math, and writing skills are abundant.

Observations:

- AI will take over routine functions because humans are less intelligent and diligent than when I was a fat, slow-learning student. AI is fast and good enough.

- People today will not be able to identify or find information to validate or invalidate an output from a smart system; therefore, those who are intellectually elite will have their hands on machines that direct behavior, money, and power.

- Institutions, staffed by employees who look forward to a coffee break more than working hard, will gladly license the smart workflow revolution.

Exciting times coming. I am delighted I am a dinobaby and not a third-grade student juggling a mobile, an Xbox, an iPad, and a new M2 Air. I was okay with a paper and pencil. I just wanted to finish my homework and get the best grade I could.

Stephen E Arnold, July

Academics and Ethics: We Can Make It Up, Right?

July 4, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-4.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Bogus academic studies were already a troubling issue. Now generative text and image algorithms are turbocharging the problem. Nature describes how in “AI Intensifies Fight Against ‘Paper Mills’ That Churn Out Fake Research.” Writer Layal Liverpool states:

“Generative AI tools, including chatbots such as ChatGPT and image-generating software, provide new ways of producing paper-mill content, which could prove particularly difficult to detect. These were among the challenges discussed by research-integrity experts at a summit on 24 May, which focused on the paper-mill problem. ‘The capacity of paper mills to generate increasingly plausible raw data is just going to be skyrocketing with AI,’ says Jennifer Byrne, a molecular biologist and publication-integrity researcher at New South Wales Health Pathology and the University of Sydney in Australia. ‘I have seen fake microscopy images that were just generated by AI,’ says Jana Christopher, an image-data-integrity analyst at the publisher FEBS Press in Heidelberg, Germany. But being able to prove beyond suspicion that images are AI-generated remains a challenge, she says. Language-generating AI tools such as ChatGPT pose a similar problem. ‘As soon as you have something that can show that something’s generated by ChatGPT, there’ll be some other tool to scramble that,’ says Christopher.”

Researchers and integrity analysts at the summit brainstormed ideas to combat the growing problem and plan to publish an action plan “soon.” In a related issue, attendees agreed AI can be a legitimate writing aid but considered certain requirements, like watermarking AI-generated text and providing access to raw data.

Post-docs and graduate students make up data. MidJourney captures the camaraderie of 21st-century whiz kids rather well. A shared experience is meaningful.

Naturally, such decrees would take time to implement. Meanwhile, readers of academic journals should up their levels of skepticism considerably.

But tenure and grant money are more important than — what’s that concept? — ethical behavior for some.

Cynthia Murrell, July 4, 2023

NSO Group Restructuring Keeps Pegasus Aloft

July 4, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-3.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

The NSO Group has been under fire from critics for the continuing deployment of its infamous Pegasus spyware. The company, however, might more resemble a different mythological creature: Since its creditors pulled their support, NSO appears to be rising from the ashes.

Pegasus continues to fly. Can it monitor some of the people who have mobile phones? Not in ancient Greece. Other places? I don’t know. MidJourney’s creative powers do not shed light on this question.

The Register reports, “Pegasus-Pusher NSO Gets New Owner Keen on the Commercial Spyware Biz.” Reporter Jessica Lyons Hardcastle writes:

“Spyware maker NSO Group has a new ringleader, as the notorious biz seeks to revamp its image amid new reports that the company’s Pegasus malware is targeting yet more human rights advocates and journalists. Once installed on a victim’s device, Pegasus can, among other things, secretly snoop on that person’s calls, messages, and other activities, and access their phone’s camera without permission. This has led to government sanctions against NSO and a massive lawsuit from Meta, which the Supreme Court allowed to proceed in January. The Israeli company’s creditors, Credit Suisse and Senate Investment Group, foreclosed on NSO earlier this year, according to the Wall Street Journal, which broke that story the other day. Essentially, we’re told, NSO’s lenders forced the biz into a restructure and change of ownership after it ran into various government ban lists and ensuing financial difficulties. The new owner is a Luxembourg-based holding firm called Dufresne Holdings controlled by NSO co-founder Omri Lavie, according to the newspaper report. Corporate filings now list Dufresne Holdings as the sole shareholder of NSO parent company NorthPole.”

President Biden’s executive order notwithstanding, Hardcastle notes governments’ responses to spyware have been tepid at best. For example, she tells us, the EU opened an inquiry after spyware was found on phones associated with politicians, government officials, and civil society groups. The result? The launch of an organization to study the issue. Ah, bureaucracy! Meanwhile, Pegasus continues to soar.

Cynthia Murrell, July 4, 2023

Crackdown on Fake Reviews: That Is a Hoot!

July 3, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-1.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “The FTC Wants to Put a Ban on Fake Reviews.” My first reaction was, “Shouldn’t the ever-so-confident Verge poobah have insisted on the word ‘impose’; specifically, ‘The FTC wants to impose a ban on fake reviews’ or maybe ‘The FTC wants to rein in fake reviews’?” But who cares? The Verge is the digital New York Times and go-to source of “real” Silicon Valley type news.

The write up states:

If you, too, are so very tired of not knowing which reviews to trust on the internet, we may eventually get some peace of mind. That’s because the Federal Trade Commission now wants to penalize companies for engaging in shady review practices. Under the terms of a new rule proposed by the FTC, businesses could face fines for buying fake reviews — to the tune of up to $50,000 for each time a customer sees one.
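The “each time a customer sees one” clause is what gives the proposed penalty teeth: exposure scales with views, not with the number of fake reviews purchased. A back-of-the-envelope Python sketch, assuming the $50,000 figure works as a per-view ceiling as the write up describes and using hypothetical view counts. Actual penalties would depend on the final rule and on enforcement.

```python
MAX_FINE_PER_VIEW = 50_000  # dollars; the "up to" ceiling cited in the write up


def max_exposure(views_per_review: list[int]) -> int:
    """Upper-bound penalty: every view of every fake review fined at the ceiling."""
    return sum(views_per_review) * MAX_FINE_PER_VIEW


# Hypothetical: three purchased reviews seen 120, 45, and 10 times.
print(f"${max_exposure([120, 45, 10]):,}")  # $8,750,000
```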

For more than 30 years, I worked with an individual named Robert David Steele, who was an interesting figure in the intelligence world. He wrote and posted on Amazon more than 5,000 reviews. He wrote these himself, often in down times with me between meetings. One morning at breakfast in The Hague, Steele was writing at the table and knocked over his orange juice. He said, “Give me your napkin.” He used it to jot down a note; I sopped up the orange juice.

“That’s a hoot,” says a person who wrote a product review to make a competitor’s offering look bad. A $50,000 fine. Legal eagles take flight. The laughing man is an image flowing from the creative engine at MidJourney.

He wrote what I call humanoid reviews.

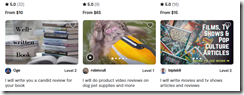

Now reviews of any type are readily available. Here’s an example from Fiverr.com, an Israel-based outfit with gig workers from many countries and free time on their hands:

How many of these reviews will be written by a humanoid? How many will be spat out via a ChatGPT-type system?

What about reviews written by someone with a bone to pick? The reviews are shaded so that the product or the book or whatever is presented in a questionable way? Did Mr. Steele write a review of an intelligence-related book and point out that the author was misinformed about the “real” intel world?

Several observations:

- Who or what is going to identify fake reviews?

- What’s the difference between a Fiverr-type review and a review written by a humanoid motivated by doing good or making the author or product look bad?

- As machine-generated text improves, how will software written to identify machine-generated reviews keep up with advances in the machine-generating software itself?

Net net: External editorial and ethical controls may be impractical. In my opinion, a failure of ethical controls within social structures creates a greenhouse in which fakery, baloney, misinformation, and corrupted content thrive. In this context, who cares about the headline? It too is a reflection of the pickle barrel in which we soak.

Stephen E Arnold, July 3, 2023