Selling AI with Scare Tactics

June 6, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Ah, another article with more assertions to make workers feel they must adopt the smart software that threatens their livelihoods. AI automation firm UiPath describes “3 Common Barriers to AI Adoption and How to Overcome Them.” Before marketing director Michael Robinson gets to those barriers, he tries to motivate readers who might be on the fence about AI. He writes:

“There’s a growing consensus about the need for businesses to embrace AI. McKinsey estimated that generative AI could add between $2.6 to $4.4 trillion in value annually, and Deloitte’s ’State of AI in the Enterprise’ report found that 94% of surveyed executives ‘agree that AI will transform their industry over the next five years.’ The technology is here, it’s powerful, and innovators are finding new use cases for it every day. But despite its strategic importance, many companies are struggling to make progress on their AI agendas. Indeed, in that same report, Deloitte estimated that 74% of companies weren’t capturing sufficient value from their AI initiatives. Nevertheless, companies sitting on the sidelines can’t afford to wait any longer. As reported by Bain & Company, a ‘larger wedge’ is being driven ‘between those organizations that have a plan [for AI] and those that don’t—amplifying advantage and placing early adopters into stronger positions.’”

Oh, no! What can the laggards do? Fret not, the article outlines the biggest hurdles: lack of a roadmap, limited in-house expertise, and security or privacy concerns. Curious readers can see the post for details about each. As it happens, software like UiPath’s can help businesses clear every one. What a coincidence.

Cynthia Murrell, June 6, 2024

The Leak: One Nothing Burger, Please

June 5, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Everywhere I look I see write ups about the great Google leak. One example is the poohbah publication The Verge and its story “The Biggest Findings in the Google Search Leak.” From the git-go there is information which reveals something many people know about the Google. It does not explain what it does or its intentions. It just does stuff and then fancy dances around what the company is actually doing. How long has this been going on? Since the litigation about Google’s inspiring encounter with the Yahoo, Overture, GoTo pay-to-play advertising model. In one of my monographs about Google I created this illustration to explain how the Google technology works.

Here’s what I wrote in Google: The Calculating Predator (Infonortics, UK, 2007):

Like a skilled magician, a good stage presence and a bit of misdirection focus attention where Google wants it.

The “leak” is fodder for search engine optimization professionals who unwittingly make the case for just buying advertising. But the leak delivers one useful insight: Google does not tell what it does in plain English. Some call it prevarication; I call it part of the overall strategy of the firm. The philosophy is one manifestation of the idea that “users” don’t need to know anything. “Users” are there to allow Google to sell advertising, broker advertising, and automate advertising. Period. This is the ethos of the high school science club which knows everything. Obviously.

The cited article revealing the biggest findings offers these insights. Please, sit down. I don’t want to be responsible for causing anyone bodily harm.

First snippet:

Google spokespeople have repeatedly denied that user clicks factor into ranking websites, for example — but the leaked documents make note of several types of clicks users make and indicate they feed into ranking pages in search. Testimony from the antitrust suit by the US Department of Justice previously revealed a ranking factor called Navboost that uses searchers’ clicks to elevate content in search.

Are you still breathing? Yep, Google pays attention to clicks. Yes, that’s one of the pay-to-play requirements: Show data to advertisers and get those SEO people acting as an advertising pre-sales service. When SEO fails, buy ads. Yep, earth shattering.

An actual expert in online search examines the information from the “leak” and realizes the data for what they are: Out of context information from a mysterious source. Thanks MidJourney. Other smart services could not deliver a nothing burger. Yours is good enough.

How about this stunning insight:

Google Search representatives have said that they don’t use anything from Chrome for ranking, but the leaked documents suggest that may not be true.

Why would Google spend money to build a surveillance enabled software system? For fun? No, not for fun. Browsers funnel data back to a command-and-control center. The data are analyzed and nuggets used to generate revenue from advertising. This is a surprise. Microsoft got in trouble for browser bundling, but since the Microsoft legal dust up, regulators have taken a kinder, gentler approach to the Google.

Are there more big findings?

Yes, we now know what a digital nothing burger looks like. We already knew what falsehoods look like. SEO professionals are shocked. What’s that say for the unwitting Google pre-advertising purchase advocates?

Stephen E Arnold, June 5, 2024

Publication Founded by a Googler Cheers for Google AI Search

June 5, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

To understand the “rah rah” portion of this article, you need to know the backstory behind Search Engine Land, a news site about search and other technology. It was founded by Danny Sullivan, who pushed the SEO bandwagon. He did this because he was angling for a job at Google, he succeeded, and now he’s the point person for SEO.

Another press release touting the popularity of Google search dropped: “Google CEO Says AI Overviews Are Increasing Search Usage.” The author, Danny Goodwin, remains skeptical about Google’s spiked popularity due to AI, despite the bias of Search Engine Land’s founder.

During the Q1 2024 Alphabet earnings call, Google/Alphabet CEO Sundar Pichai said that the search engine’s generative AI has been used for billions of queries and there are plans to develop the feature further. Pichai said positive things about AI, including that it increased user engagement, could answer more complex questions, and would create opportunities for monetization.

Goodwin wrote:

“All signs continue to indicate that Google is continuing its slow evolution toward a Search Generative Experience. I’m skeptical about user satisfaction increasing, considering what an unimpressive product AI overviews and SGE continues to be. But I’m not the average Google user – and this was an earnings call, where Pichai has mastered the art of using a lot of words to say a whole lot of nothing.”

AI is the next evolution of search and Google is heading the parade, but the technology still has tons of bugs. Who founded the publication? A Googler. Of course there is no interaction between the online ad outfit and an SEO mouthpiece. Un-uh. No way.

Whitney Grace, June 5, 2024

Google Demos Its Reliability

June 5, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Migrate everything to the cloud, they said. It is perfectly safe, we were told. And yet, “Google Cloud Accidentally Deletes $125 Billion Pension Fund’s Online Account,” reports Cyber Security News. Writer Dhivya reports a mistake in the setup process was to blame for the blunder. If it were not for a third-party backup, UniSuper’s profile might never have been recovered. We learn:

“A major mistake in setup caused Google Cloud and UniSuper to delete the financial service provider’s private cloud account. This event has caused many to worry about the security and dependability of cloud services, especially for big financial companies. The outage started in the blue, and UniSuper’s 620,000 members had no idea what was happening with their retirement funds.”

As it turns out, the funds themselves were just fine. But investors were understandably upset when they could not view updates. Together, the CEOs of Google Cloud and UniSuper dined on crow. Dhivya writes:

“According to the Guardian reports, the CEOs of UniSuper and Google Cloud, Peter Chun and Thomas Kurian, apologized for the failure together in a statement, which is not often done. … ‘UniSuper’s Private Cloud subscription was ultimately terminated due to an unexpected sequence of events that began with an inadvertent misconfiguration during provisioning,’ the two sources stated. ‘Google Cloud CEO Thomas Kurian has confirmed that the disruption was caused by an unprecedented sequence of events.’ ‘This is a one-time event that has never happened with any of Google Cloud’s clients around the world.’ ‘This really shouldn’t have happened,’ it said.”

At least everyone can agree on that. We are told UniSuper had two different backups, but they were also affected by the snafu. It was the backups kept by “another service provider” that allowed the hundreds of virtual machines, databases, and apps that made up UniSuper’s private cloud environment to be recovered. Eventually. The CEOs emphasized the herculean effort it took both Google Cloud and UniSuper technicians to make it happen. We hope they were well-paid. Naturally, both companies pledge to keep this mistake from happening again. Great! But what about the next unprecedented, one-time screwup?

Let this be a reminder to us all: back up the data! Frequently and redundantly. One never knows when that practice will save the day.

Cynthia Murrell, June 5, 2024

Does Google Follow Its Own Product Gameplan?

June 5, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

If I were to answer the question based on Google’s AI summaries, I would say, “Nope.” The latest joke added to the Sundar & Prabhakar Comedy Show is the one about pizza. Here’s the joke if I recall it correctly.

Sundar: Yo, Prabhakar, how do you keep cheese from slipping off a hot pizza?

Prabhakar: I don’t know. Please, tell me, oh gifted one.

Sundar: You have your cook mix it with non-toxic glue, faithful colleague.

Prabhakar: [Laughing loudly]. That’s a good one, luminescent soul.

Did Google muff the bunny with its high-profile smart software feature? To answer the question, I looked to the ever-objective Fast Company online publication. I found a write up which appears to provide some helpful information. The article is called “Conduct Stellar User Research Even Faster with This Google Ventures Formula.” Google has game plans for creating MVPs or minimum viable products.

The confident comedians look concerned when someone in the audience throws a large tomato at the well-paid performers. Thanks, MSFT. Working on security or the AI PC today?

Let’s look at what one Google partner reveals as the equivalent of the formula for Coca-Cola or McDonald’s recipe for Big Mac sauce.

Here’s the game winning touchdown razzle dazzle:

- Use a bullseye customer sprint. The idea is to get five “customers” and show them three prototypes. Listen for pros and cons. Then debrief together in a “watch party.”

- Conduct sprints early. The idea is to get this feedback before “a team invests a lot of time, money, or reputational risk into building, launching, and marketing an MVP” (that’s a minimum viable product, not necessarily a good or needed product, I think).

- Keep research bite size. “Avoid heavy-duty research overkill” is the way I interpret the Google speak. The idea is that massive research projects are not desirable. They are work. Nibble, don’t gobble, I assume.

- Keep the process simple. Keep the prototypes simple. Get those interviews. That’s fun. Plus, there is the “watch party”, remember?

Okay, now let’s think about what Google suggests are outliers or fiddled AI results. Why is Google AI telling people to eat a rock a day?

The “bullseye” baloney is bull output for sure. I am on reasonably firm ground because in Paris the Sundar & Prabhakar Comedy Act showed incorrect outputs from Google’s AI system. Then Google invented about a dozen variations on the theme of a scrambled egg at Google I/O. Now Google is faced with its AI system telling people dogs own hotels. No, some dogs live in hotels. Some dogs deliver outputs in hotels. Dogs do not own hotels unless it is in a crazy virtual reality headset created by Apple or Meta.

The write up uses the word “stellar” to describe this MVP product stuff. The reality is that Googlers are creating work for themselves. Listening to “customers” who know little about AI or anything other than buy ad-get traffic. The “stellar” part of the title is like the “quantum supremacy” horse feather assertion the company crafted.

Smart software, when trained and managed, can do some useful things. However, the bullseye and quantum supremacy stuff is capable of producing social media memes, concern among some stakeholders, and evidence that Google cannot do anything useful at this time.

Maybe the company will get its act together? When it does, I will check out the next Sundar & Prabhakar Comedy Act. Maybe some of the jokes will work? Let’s hope they are more effective than the bull’s-eye method. (Sorry. I had to fix up the spelling, Google.)

Stephen E Arnold, June 5, 2024

Allegations of Personal Data Flows from X.com to Au10tix

June 4, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I work from my dinobaby lair in rural Kentucky. What the heck do I know about Hod HaSharon, Israel? The answer is, “Not much.” However, I read an online article called “Elon Musk Now Requiring All X Users Who Get Paid to Send Their Personal ID Details to Israeli Intelligence-Linked Corporation.” I am not sure if the statements in the write up are accurate. I want to highlight some items from the write up because I have not seen information about this interesting identity verification process in my other feeds. This could be the second most covered news item in the last week or two. Number one goes to Google’s telling people to eat a rock a day and its weird “not our fault” explanation of its quantumly supreme technology.

Here’s what I carried away from this X to Au10tix write up. (A side note: Intel outfits like obscure names. In this case, Au10tix is a cute conversion of the word authentic to a unique string of characters. Aw ten tix. Get it?)

Yes, indeed. There is an outfit called Au10tix, and it is based about 60 miles north of Jerusalem, not in the intelware capital of the world Tel Aviv. The company, according to the cited write up, has a deal with Elon Musk’s X.com. The write up asserts:

X now requires new users who wish to monetize their accounts to verify their identification with a company known as Au10tix. While creator verification is not unusual for online platforms, Elon Musk’s latest move has drawn intense criticism because of Au10tix’s strong ties to Israeli intelligence. Even people who have no problem sharing their personal information with X need to be aware that the company they are using for verification is connected to the Israeli government. Au10tix was founded by members of the elite Israeli intelligence units Shin Bet and Unit 8200.

Sounds scary. But that’s the point of the article. I would like to remind you, gentle reader, that Israel’s vaunted intelligence systems failed as recently as October 2023. That event was described to me by one of the country’s former intelligence professionals as “our 9/11.” Well, maybe. I think it made clear that the intelware does not work as advertised in some situations. I don’t have first-hand information about Au10tix, but I would suggest some caution before engaging in flights of fancy.

The write up presents as actual factual information:

The executive director of the Israel-based Palestinian digital rights organization 7amleh, Nadim Nashif, told the Middle East Eye: “The concept of verifying user accounts is indeed essential in suppressing fake accounts and maintaining a trustworthy online environment. However, the approach chosen by X, in collaboration with the Israeli identity intelligence company Au10tix, raises significant concerns. “Au10tix is located in Israel and both have a well-documented history of military surveillance and intelligence gathering… this association raises questions about the potential implications for user privacy and data security.” Independent journalist Antony Loewenstein said he was worried that the verification process could normalize Israeli surveillance technology.

What the write up did not provide is significant detail. The write up reports:

Au10tix has also created identity verification systems for border controls and airports and formed commercial partnerships with companies such as Uber, PayPal and Google.

My team’s research into online gaming found suggestions that the estimable 888 Holdings may have a relationship with Au10tix. The company pops up in some of our research into facial recognition verification. The Israeli gig work outfit Fiverr.com seems to be familiar with the technology as well. I want to point out that one of the Fiverr gig workers based in the UK reported to me that she was no longer “recognized” by the Fiverr.com system. Yeah, October 2023 style intelware.

Who operates the company? Heading back into my files, I spotted a few names. These individuals may no longer be involved in the company, but several names remind me of individuals who have been active in the intelware game for a few years:

- Ron Atzmon: Chairman (Unit 8200 which was not on the ball in October 2023 it seems)

- Ilan Maytal: Chief Data Officer

- Omer Kamhi: Chief Information Security Officer

- Erez Hershkovitz: Chief Financial Officer (formerly of the very interesting intel-related outfit Voyager Labs, a company about which the Brennan Center has a tidy collection of information related to the LAPD)

The company’s technology is available in the Azure Marketplace. That description identifies three core functions of Au10tix’ systems:

- Identity verification. Allegedly the system has real-time identity verification. Hmm. I wonder why it took quite a bit of time to figure out who did what in October 2023. That question is probably unfair because it appears no patrols or systems “saw” what was taking place. But, I should not nit pick. The Azure service includes a “regulatory toolbox including disclaimer, parental consent, voice and video consent, and more.” That disclaimer seems helpful.

- Biometrics verification. Again, this is an interesting assertion. As imagery of the October 2023 event emerged I asked myself, “How did those ID to selfie, selfie to selfie, and selfie to token matches work?” Answer: Ask the families of those killed.

- Data screening and monitoring. The system can “identify potential risks and negative news associated with individuals or entities.” That might be helpful in building automated profiles of individuals by companies licensing the technology. I wonder if this capability can be hooked to other Israeli spyware systems to provide a particularly helpful, real-time profile of a person of interest?

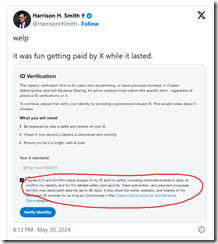

Let’s assume the write up is accurate and X.com is licensing the technology. X.com — according to “Au10tix Is an Israeli Company and Part of a Group Launched by Members of Israel’s Domestic Intelligence Agency, Shin Bet” — now includes this

The circled segment of the social media post says:

I agree to X and Au10tix using images of my ID and my selfie, including extracted biometric data to confirm my identity and for X’s related safety and security, fraud prevention, and payment purposes. Au10tix may store such data for up to 30 days. X may store full name, address, and hashes of my document ID number for as long as I participate in the Creator Subscription or Ads Revenue Share program.

This dinobaby followed the October 2023 event with shock and surprise. The dinobaby has long been a champion of Israel’s intelware capabilities, and I have done some small projects for firms which I am not authorized to identify. Now I am skeptical and more critical. What if X’s identity service is compromised? What if the servers are breached and the data exfiltrated? What if the system does not work and downstream financial fraud is enabled by X’s push beyond short text messaging? Much intelware is little more than glorified and old-fashioned search and retrieval.

Does Mr. Musk or other commercial purchasers of intelware know about cracks and fissures in intelware systems which allowed the October 2023 event to be undetected until live-fire reports arrived? This tie up is interesting and is worth monitoring.

Stephen E Arnold, June 4, 2024

Telegram May Play a Larger Role In Future Of War And Education

June 4, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Telegram is an essential tool for the future of crime. The Dark Web is still a hotbed of criminal activity, but as authorities crack down on it the bad actors need somewhere else to go. Stephen Arnold, Erik Arnold, et al. wrote a white paper titled E2EE: The Telegram Platform about how Telegram is replacing the Dark Web. Telegram is a Dubai-based company with nefarious ties to Russia. The app offers data transfer for streaming audio and video, robust functions, and administrative tools. It’s being used to do everything from stealing people’s personal information to being an anti-US information platform.

The white paper details how Telegram is used to steal credit, gift, debit, and other card information. The process is called “carding” and a simple Google search reveals where stolen card information can be bought. The team specifically investigated Altenens.is, a paywalled website for buying stolen information. It’s been removed from the Internet only to reappear again.

Altenens.is hosts forums, a chat, and places to advertise products and services related to the website’s theme. Users are required to download and register with Telegram, because it offers encryption services for financial transactions. Altenens.is is only one of the main ways Telegram is used for bad acts:

“The Telegram service today is multi-faceted. One can argue that Telegram is a next-generation social network. Plus, it is a file transfer and rich media distribution service too. A bad actor can collect money from another Telegram user and then stream data or a video to an individual or a group. In the Altenen case example, the buyer of stolen credit cards gets a file with some carding data and the malware payload. The transaction takes place within Telegram. Its lax or hit-and-miss moderation method allows alleged illegal activity on the platform. ”

Telegram is becoming more advanced with its own cryptocurrency and abilities to mask and avoid third-party monitors. It’s used as a tool for war propaganda, but it’s also used to evade authoritarian governments who want to control information. It’s interesting and warrants monitoring. If you work in an enforcement agency or a unit of the US government, you can request a copy of the white paper by writing benkent2020 @ yahoo dot com. Please, mention Beyond Search in your request. We do need to know your organization and area of interest.

Whitney Grace, June 4, 2024

Encryption Battles Continue

June 4, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Privacy protections are great—unless you are law-enforcement attempting to trace a bad actor. India has tried to make it easier to enforce its laws by forcing messaging apps to track each message back to its source. That is challenging for a platform with encryption baked in, as Rest of World reports in, “WhatsApp Gives India an Ultimatum on Encryption.” Writer Russell Brandom tells us:

“IT rules passed by India in 2021 require services like WhatsApp to maintain ‘traceability’ for all messages, allowing authorities to follow forwarded messages to the ‘first originator’ of the text. In a Delhi High Court proceeding last Thursday, WhatsApp said it would be forced to leave the country if the court required traceability, as doing so would mean breaking end-to-end encryption. It’s a common stance for encrypted chat services generally, and WhatsApp has made this threat before — most notably in a protracted legal fight in Brazil that resulted in intermittent bans. But as the Indian government expands its powers over online speech, the threat of a full-scale ban is closer than it’s been in years.”

And that could be a problem for a lot of people. We also learn:

“WhatsApp is used by more than half a billion people in India — not just as a chat app, but as a doctor’s office, a campaigning tool, and the backbone of countless small businesses and service jobs. There’s no clear competitor to fill its shoes, so if the app is shut down in India, much of the digital infrastructure of the nation would simply disappear. Being forced out of the country would be bad for WhatsApp, but it would be disastrous for everyday Indians.”

Yes, that sounds bad. For the Electronic Frontier Foundation, it gets worse: The civil liberties organization insists the regulation would violate privacy and free expression for all users, not just suspected criminals.

To be fair, WhatsApp has done a few things to limit harmful content. It has placed limits on message forwarding and has boosted its spam and disinformation reporting systems. Still, there is only so much it can do when enforcement relies on user reports. To do more would require violating the platform’s hallmark: its end-to-end encryption. Even if WhatsApp wins this round, Brandom notes, the issue is likely to come up again when and if the Bharatiya Janata Party does well in the current elections.

Cynthia Murrell, June 4, 2024

Lunch at a Big Time Publisher: Humble Pie and Sour Words

June 4, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Years ago I did some work for a big time New York City publisher. The firm employed people who used words like “fungible” and “synergy” when talking with me. I took the time to read an article with this title: “So Much for Peer Review — Wiley Shuts Down 19 Science Journals and Retracts 11,000 Gobbledygook Papers.” Was this the staid, conservative, and big vocabulary publisher?

Yep.

The essay is little more than a wrapper for a Wall Street Journal story with the title “Flood of Fake Science Forces Multiple Journal Closures Tainted by Fraud.” I quite like that title, particularly the operative word “fraud.” What in the world is going on?

The write up explains:

Wiley — a mega publisher of science articles has admitted that 19 journals are so worthless, thanks to potential fraud, that they have to close them down. And the industry is now developing AI tools to catch the AI fakes (makes you feel all warm inside?)

A group of publishing executives becomes the focal point of a Midtown lunch in an upscale restaurant. The titans of publishing are complaining about the taste of humble pie and use secret NYAC gestures to express their disapproval. Thanks, MSFT Copilot. Your security expertise may warrant a special banquet too.

The information in the cited article contains some tasty nuggets which complement humble pie in my opinion; for instance:

- The shut down of the junk food publications has required two years. If Sillycon Valley outfits can fire thousands via email or Zoom, “Why are those uptown shoes being dragged?” I asked myself.

- Other high-end publishers have been doing the same thing. Sadly there are no names.

- The bogus papers included something called an “AI gobbledygook sandwich.” Interesting. Human reviewers who are experts could not recognize the vernacular of academic and research fraudsters.

- Some in Australia think that the credibility of universities might be compromised. Oh, come now. Just because the president of Stanford had to search for his future elsewhere after some intellectual fancy dancing and the head of the Harvard ethics department demonstrated allegedly sci-fi ethics in published research, what’s the problem? Don’t students just get As and Bs? Professors are engaged in research, chasing consulting gigs, and ginning up grant money. Actual research? Oh, come now.

- Academic journals are or were a $30 billion industry.

Observations are warranted:

- In today’s datasphere, I am not surprised. Scams, frauds, and cheats seem to be as common as ants at a picnic. A cultural shift has occurred. Cheating has become the norm.

- Will the online databases, produced by some professional publishers and commercial database companies, be updated to remove or at least flag the baloney? Probably not. That costs money. Spending money is not a modern publishing CEO’s favorite activity. (Hence the two-year draw down of the fake information at the publishing house identified in the cited write up.)

- How many people have died or been put out of work because of specious research data? I am not holding my breath for the peer reviewed journals to provide this information.

Net net: Humiliating and a shame. Quite a cultural mismatch between what some publishers say and what the firm allegedly ordered from the deli. I thought the outfit had a knowledge-based reason to tell me that it takes the high road. It seems that on that road, there are places where a bad humble pie is served.

Stephen E Arnold, June 4, 2024

AI Will Not Definitely, Certainly, Absolutely Not Take Some Jobs. Whew. That Is News

June 3, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Outfits like McKinsey & Co. are kicking the tires of smart software. Some bright young sprouts I have heard arrive with a penchant for AI systems to create summaries and output basic information on a subject the youthful masters of the universe do not know. Will consulting services firms, publishers, and customer service outfits embrace smart software? The answer is, “You bet your bippy.”

“Why?” Answer: Potential cost savings. Humanoids require vacations, health care, bonuses, pension contributions (ho ho ho), and an old-fashioned and inefficient five-day work week.

Cost reductions over time, cost controls in real time, and more consistent outputs mean that as long as smart software is good enough, the technologies will go through organizations with more efficiency than Union General William T. Sherman led some 60,000 soldiers on a 285-mile march from Atlanta to Savannah, Georgia. Thanks, MSFT Copilot. Working on security today?

Software is allegedly better, faster, and cheaper. Software, particularly AI, may not be better, faster, or cheaper. But once someone is fired, the enthusiasm to return to the fold may be diminished. Often the response is a semi-amusing and often negative video posted on social media.

“Here’s Why AI Probably Isn’t Coming for Your Job Anytime Soon” disagrees with my fairly conservative prediction that consulting, publishing, and some service outfits will be undergoing what I call “humanoid erosion” and “AI accretion.” The write up asserts:

We live in an age of hyper specialization. This is a trend that’s been evolving for centuries. In his seminal work, The Wealth of Nations (written within months of the signing of the Declaration of Independence), Adam Smith observed that economic growth was primarily driven by specialization and division of labor. And specialization has been a hallmark of computing technology since its inception. Until now. Artificial intelligence (AI) has begun to alter, even reverse, this evolution.

Okay, Econ 101. Wonderful. But… and there are some buts, of course. The write up says:

But the direction is clear. While society is moving toward ever more specialization, AI is moving in the opposite direction and attempting to replicate our greatest evolutionary advantage—adaptability.

Yikes. I am not sure that AI is going in any direction. Senior managers are going toward reducing costs. “Good enough,” not excellence, is the high-water mark today.

Here’s another “but”:

But could AI take over the bulk of legal work or is there an underlying thread of creativity and judgment of the type only speculative super AI could hope to tackle? Put another way, where do we draw the line between general and specific tasks we perform? How good is AI at analyzing the merits of a case or determining the usefulness of a specific document and how it fits into a plausible legal argument? For now, I would argue, we are not even close.

I don’t remember much about economics. In fact, I only think about economics in terms of reducing costs and having more money for myself. Good old Adam wrote:

Wherever there is great property there is great inequality. For one very rich man, there must be at least five hundred poor, and the affluence of the few supposes the indigence of the many.

When it comes to AI, inequality is baked in. The companies that are competing fiercely to dominate the core technology are not into equality. Neither are the senior managers who want to reduce costs associated with publishing, writing consulting reports based on business school baloney, or reviewing documents hunting for nuggets useful in a trial. AI is going into these and similar knowledge professions. Most of those knowledge workers will have an opportunity to find their future elsewhere. But what about in-take professionals in hospitals? What about dispatchers at trucking companies? What about government citizen service jobs? Sorry. Software is coming. Companies are developing orchestrator software to allow smart software to function across multiple related and inter-related tasks. Isn’t that what most work in many organizations is?

Here’s another test question from Econ 101:

Discuss the meaning of “It was not by gold or by silver, but by labor, that all wealth of the world was originally purchased.” Give examples of how smart software will replace labor and generate more money for those who own the rights to digital gold or silver.

Send me your blue book answers within 24 hours. You must write in legible cursive. You are not permitted to use artificial intelligence in any form to answer this question which counts for 95 percent of your grade in Economics 102: Work in the Age of AI.

Stephen E Arnold, June 3, 2024