Price Fixing Is Price Fixing with or without AI

June 3, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Small-time landlords, such as mom-and-pop owners who invested in property for retirement, shouldn’t be compared to large corporate landlords. The corporate landlords, however, give them all a bad name. Why? Because of actions like price fixing. ProPublica details how politicians are fighting against the bad act: “We Found That Landlords Could Be Using Algorithms To Fix Rent Prices. Now Lawmakers Want To Make The Practice Illegal.”

RealPage sells software built on AI algorithms that collect rent data and recommend how much landlords should charge. Lawmakers want to ban AI-based price fixing so landlords won’t become cartels that coordinate pricing. RealPage and its allies defend the software, while lawmakers have introduced a bill to ban it.

The FTC also states that AI-based real estate software has problems: “Price Fixing By Algorithm Is Still Price Fixing.” The FTC isn’t against technology. They’re against technology being used as a tool to cheat consumers:

“Meanwhile, landlords increasingly use algorithms to determine their prices, with landlords reportedly using software like “RENTMaximizer” and similar products to determine rents for tens of millions of apartments across the country. Efforts to fight collusion are even more critical given private equity-backed consolidation among landlords and property management companies. The considerable leverage these firms already have over their renters is only exacerbated by potential algorithmic price collusion. Algorithms that recommend prices to numerous competing landlords threaten to remove renters’ ability to vote with their feet and comparison-shop for the best apartment deal around.”

This is an example of how to use AI for evil. The problem isn’t the tool; it’s the humans using it.

Whitney Grace, June 3, 2024

Spot a Psyop Lately?

June 3, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Psyops, or psychological operations, are also known as psychological warfare. The term is defined as actions used to weaken an enemy’s morale. Psyops can range from a simple propaganda poster to a powerful government campaign. According to Annalee Newitz on her Hypothesis Buttondown blog, psyops are everywhere and she explains: “How To Recognize A Psyop In Three Easy Steps.”

Newitz smartly condenses the history of American psyops into a paragraph: it’s a mixture of pulp fiction tropes, advertising techniques, and pop psychology. In the twentieth century, the US military harnessed these techniques to craft messages meant to hurt, demean, and distract people. Unlike weapons, psyops can be avoided with a little bit of critical thinking.

The first step is to pay attention when people claim something is “anti-American.” The term “anti-American” can be interpreted in many ways, but it comes down to media saying one group of people (defined by nationality, skin color, sexual orientation, etc.) is against the American way of life.

The second step is to watch for lies with hints of truth. Newitz advises reading psychological warfare military manuals and uses the example of leaflets the Japanese dropped on US soldiers in the Philippines. The leaflets warned the soldiers about venomous snakes in jungles and were signed “US Army.” Soldiers were told the leaflets were false, but the leaflets made them believe there were cover-ups:

“Psyops-level lies are designed to destabilize an enemy, to make them doubt themselves and their compatriots, and to convince them that their country’s institutions are untrustworthy. When psyops enter culture wars, you start to see lies structured like this snake “warning.” They don’t just misrepresent a specific situation; they aim to undermine an entire system of beliefs.”

The third step is the easiest to recognize and the most extreme: you can’t communicate with anyone who says you should be dead. Anyone who believes you should be dead is beyond rational thought. Her advice is to ignore it and not engage.

Another way to recognize psyops tactics is to question everything. Thinking isn’t difficult, but thinking critically takes practice.

Whitney Grace, June 3, 2024

So AI Is — Maybe, Just Maybe — Not the Economic Big Kahuna?

June 3, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I find it amusing how AI has become the go-to marketing word. I suppose if I were desperate, lacking an income, unsure about what will sell, and a follow-the-hyperbole-type person I would shout, “AI.” Instead I vocalize, “Ai-Yai-Ai” emulating the tones of a Central American death whistle. Yep, “Ai-Yai-AI.”

Thanks, MSFT Copilot. A harbinger? Good enough.

I read “MIT Professor Hoses Down Predictions AI Will Put a Rocket under the Economy.” I won’t comment upon the fog of distrust which I discern around Big Name Universities, nor will I focus my adjustable Walgreen’s spectacles on MIT’s fancy dancing with the quite interesting and decidedly non-academic Jeffrey Epstein. Nope. Forget those two factoids.

The write up reports:

…Daron Acemoglu, professor of economics at Massachusetts Institute of Technology, argues that predictions AI will improve productivity and boost wages in a “blue-collar bonanza” are overly optimistic.

The good professor is rowing against the marketing current. According to the article, the good professor identifies some wild and crazy forecasts. One of these is from an investment bank whose clients are unlikely to be what some one percenters perceive as non-masters of the universe.

That’s interesting. But it pales in comparison to the information in “Few People Are Using ChatGPT and Other AI Tools Regularly, Study Suggests.” (I love suggestive studies!) That write up reports about a study involving Thomson Reuters, the “trust” outfit:

Carried out by the Reuters Institute and Oxford University and involving 6,000 respondents from the U.S., U.K., France, Denmark, Japan, and Argentina, the researchers found that OpenAI’s ChatGPT is by far the most widely used generative-AI tool and is two or three times more widespread than the next most widely used products — Google Gemini and Microsoft Copilot. But despite all the hype surrounding generative AI over the last 18 months, only 1% of those surveyed are using ChatGPT on a daily basis in Japan, 2% in France and the UK, and 7% in the U.S. The study also found that between 19% and 30% of the respondents haven’t even heard of any of the most popular generative AI tools, and while many of those surveyed have tried using at least one generative-AI product, only a very small minority are, at the current time, regular users deploying them for a variety of tasks.

My hunch is that these contrarians want clicks. Well, the tactic worked for me. However, how many of those in AI-Land will take note? My thought is that these anti-AI findings are likely to be ignored until some of the Big Money folks lose their cash. Then the voices of negativity will be heard.

Several observations:

- The economics of AI seem similar to some early online ventures like Pets.com, not “all” mind you, just some

- Expertise in AI may not guarantee a job at a high-flying techno-feudalist outfit

- The difficulties Google appears to be having suggest that the road to AI-Land on the information superhighway may have some potholes. (If Google cannot pull AI off, how can Bob’s Trucking Company armed with Microsoft Word with Copilot?)

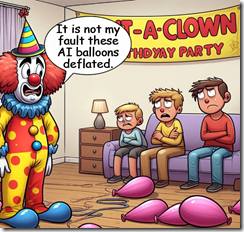

Net net: It will be interesting to monitor the frequency of “AI balloon deflating” analyses.

Stephen E Arnold, June 3, 2024
