Another Big Consulting Firm Does Smart Software… Sort Of

September 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Will programmers and developers become targets for prosecution when flaws cripple vital computer systems? That may be a good idea because pointing to the “algorithm” as the cause of a problem does not seem to reduce the number of bugs, glitches, and unintended consequences of software. A write up which itself may be a blend of human and smart software suggests change is afoot.

Thanks, MSFT Copilot. Good enough.

“Judge Rules $400 Million Algorithmic System Illegally Denied Thousands of People’s Medicaid Benefits” reports that software crafted by the services firm Deloitte did not work as the State of Tennessee assumed. Yep, assume. A very interesting word.

The article explains:

The TennCare Connect system—built by Deloitte and other contractors for more than $400 million—is supposed to analyze income and health information to automatically determine eligibility for benefits program applicants. But in practice, the system often doesn’t load the appropriate data, assigns beneficiaries to the wrong households, and makes incorrect eligibility determinations, according to the decision from Middle District of Tennessee Judge Waverly Crenshaw Jr.

At one time, Deloitte was an accounting firm. Then it became a consulting outfit a bit like McKinsey. Well, a lot like that firm and other blue-chip consulting outfits. In its current manifestation, Deloitte is into technology, programming, and smart software. Well, maybe the software is smart but the programmers and the quality control seem to be riding in a different school bus from some other firms’ technical professionals.

The write up points out:

Deloitte was a major beneficiary of the nationwide modernization effort, winning contracts to build automated eligibility systems in more than 20 states, including Tennessee and Texas. Advocacy groups have asked the Federal Trade Commission to investigate Deloitte’s practices in Texas, where they say thousands of residents are similarly being inappropriately denied life-saving benefits by the company’s faulty systems.

In 2016, Cathy O’Neil published Weapons of Math Destruction. Her book had a number of interesting examples of what goes wrong when careless people make assumptions about numerical recipes. If she does another book, she may include this Deloitte case.

Several observations:

- The management methods used to create these smart systems require scrutiny. The downstream consequences are harmful.

- The developers and programmers can be fired, but processes for remediating problems when something unexpected surfaces must be part of the work process.

- Less informed users and more smart software strike me as a combustible mixture. When a system ignites, the impacts may reverberate in other smart systems. What entity is going to fix the problem and accept responsibility? The answer is, “No one” unless there are significant consequences.

The State of Tennessee’s experience makes clear that a “brand name,” slick talk, an air of confidence, and possibly ill-informed managers can do harm. McKinsey’s opioid misstep was bad. Now imagine that type of thinking in the form of a fast, indifferent, and flawed “system.” Firing a 25-year-old is not the solution.

Stephen E Arnold, September 3, 2024

Consensus: A Gen AI Search Fed on Research, not the Wild Wild Web

September 3, 2024

How does one make an AI search tool that is actually reliable? Maybe start by supplying it with only peer-reviewed papers instead of the whole Internet. Fast Company sings the praises of Consensus in, “Google Who? This New Service Actually Gets AI Search Right.” Writer JR Raphael begins by describing why most AI-powered search engines, including Google, are terrible:

“The problem with most generative AI search services, at the simplest possible level, is that they have no idea what they’re even telling you. By their very nature, the systems that power services like ChatGPT and Gemini simply look at patterns in language without understanding the actual context. And since they include all sorts of random internet rubbish within their source materials, you never know if or how much you can actually trust the info they give you.”

Yep, that pretty much sums it up. So, like us, Raphael was skeptical when he learned of yet another attempt to bring generative AI to search. Once he tried the easy-to-use Consensus, however, he was convinced. He writes:

“In the blink of an eye, Consensus will consult over 200 million scientific research papers and then serve up an ocean of answers for you—with clear context, citations, and even a simple ‘consensus meter’ to show you how much the results vary (because here in the real world, not everything has a simple black-and-white answer!). You can dig deeper into any individual result, too, with helpful features like summarized overviews as well as on-the-fly analyses of each cited study’s quality. Some questions will inevitably result in answers that are more complex than others, but the service does a decent job of trying to simplify as much as possible and put its info into plain English. Consensus provides helpful context on the reliability of every report it mentions.”

See the post for more on using the web-based app, including a few screenshots. Raphael notes that, if one does not have a specific question in mind, the site has long lists of its top answers for curious users to explore. The basic service is free to search with no query cap, but its creators hope to entice us with an $8.99/month premium plan. Of course, this service is not going to help with every type of search. But if the subject is worthy of academic research, Consensus should have the (correct) answers.

Cynthia Murrell, September 3, 2024

Elastic N.V. Faces a New Search Challenge

September 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Elastic N.V. and Shay Banon are what I call search survivors. Gone are Autonomy (mostly), Delphis, Exalead, Fast Search & Transfer (mostly), Vivisimo, and dozens upon dozens of companies who sought to put an organization’s information at an employee’s fingertips. The marketing lingo of these and other now-defunct enterprise search vendors is surprisingly timely. One can copy and paste chunks of Autonomy’s white papers into the “OpenAI ChatGPT search is coming” articles, and few would notice that the assertions and even the word choice were more than 40 years old.

Elastic N.V. survived. It rose from a failed search system called Compass. Elastic N.V. recycled the Lucene libraries, released the open source Elasticsearch, and did an IPO. Some people made a lot of money. The question is, “Will that continue?”

I noted the Silicon Angle article “Elastic Shares Plunge 25% on Lower Revenue Projections Amid Slower Customer Commitments.” That write up says:

In its earnings release, Chief Executive Officer Ash Kulkarni started positively, noting that the results in the quarter were solid and outperformed previous guidance, but then comes the catch and the reason why Elastic stock is down so heavily after hours. “We had a slower start to the year with the volume of customer commitments impacted by segmentation changes that we made at the beginning of the year, which are taking longer than expected to settle,” Kulkarni wrote. “We have been taking steps to address this, but it will impact our revenue this year.” With that warning, Elastic said that it expects fiscal second-quarter adjusted earnings per share of 37 to 39 cents on revenue of $353 million to $355 million. The earnings per share forecast was ahead of the 34 cents expected by analysts, but revenue fell short of an expected $360.8 million. It was a similar story for Elastic’s full-year outlook, with the company forecasting earnings per share of $1.52 to $1.56 on revenue of $1.436 billion to $1.444 billion. The earnings per share outlook was ahead of an expected $1.42, but like the second quarter outlook, revenue fell short, as analysts had expected $1.478 billion.

Elastic N.V. makes money via services and for-fee extras. I want to point out that the $300 million or so revenue numbers are good. Elastic N.V. has figured out a business model that has avoided the usual enterprise search pitfalls: [a] fiddling the books, [b] hunting for a buyer as customers complain about problems with the search software, [c] financiers raging about cash burn and lousy revenue, [d] government investigators poking around for tax and other financial irregularities, [e] running costs beyond the reach of the licensee, or [f] a system that simply does not search or retrieve what the user wanted or expected.

Elastic N.V. and its management team may have a challenge to overcome. Thanks, OpenAI; the MSFT Copilot thing crashed today.

So what’s the fix?

A partial answer appears in the Elastic N.V. blog post titled “Elasticsearch Is Open Source, Again.” The company states:

The tl;dr is that we will be adding AGPL as another license option next to ELv2 and SSPL in the coming weeks. We never stopped believing and behaving like an open source community after we changed the license. But being able to use the term Open Source, by using AGPL, an OSI approved license, removes any questions, or fud, people might have.

Without slogging through the confusion among what Elastic N.V. sells, the open source version of Elasticsearch, the dust-up with Amazon over its really original approach to search inspired by Elasticsearch, Lucid Imagination’s innovation, and the creaking edifice of A9, I will simply note that Elastic N.V. has released Elasticsearch under an additional open source license. I think that means one can use the software without paying Elastic N.V. until additional services are needed. In my experience, most enterprise search systems, regardless of how they are explained, need the “owner” of the system to lend a hand. Contrary to the belief that smart software can do enterprise search right now, there are some hurdles to get over.

Will “going open source again” work?

Let me offer several observations based on my experience with enterprise search and retrieval which reaches back to the days of punch cards and systems which used wooden rods to “pull” cards with a wanted tag (index term):

- When an enterprise search system loses revenue momentum, the fix is to acquire companies in an adjacent search space and use that revenue to bolster the sales prospects for upsells.

- The company with the downturn gilds the lily and seeks a buyer. One example was the sale of Exalead to Dassault Systèmes, which calculated it was more economical to buy a vendor than to keep paying its then-current supplier. I think that supplier was Autonomy, but I am not sure. Fast Search & Transfer pulled off this type of “exit” while some of the company’s activities were under scrutiny.

- The search vendor can pivot from doing “search” and morph into a business intelligence system. (By the way, that did not work for Grok.)

- The company disappears. One example is Entopia. Poof. Gone.

I hope Elastic N.V. thrives. I hope the “new” open source play works. Search, whether the enterprise or Web variety, is far from a solved problem. People believe they have the answer. Others believe them and license the “new” solution. The reality is that finding information is a difficult challenge. Let’s hope the “downturn” and “negativism” go away.

Stephen E Arnold, September 2, 2024

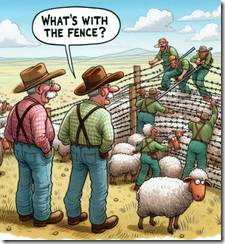

Social Media Cowboys, the Ranges Are Getting Fences

September 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Several recent developments suggest that the wide open and free ranges are being fenced in. How can I justify this statement, pardner? Easy. Check out these recent developments:

- Pavel Durov, the founder of Telegram, was arrested on Saturday, August 24, 2024, at Le Bourget airport near Paris.

- TikTok will stand trial for the harms to children caused by the “algorithm.”

- Brazil has put up barbed wire to keep Twitter (now X.com) out of the country.

I am not the smartest dinobaby in the rest home, but even I can figure out that governments are taking action after decades of thinking about more weighty matters than the safety of children, the problems social media causes for parents and teachers, and the importance of taking immediate and direct action against those breaking laws.

A couple of social media ranchers are wondering about the actions of some judicial officials. Thanks, MSFT Copilot. Good enough like most software today.

Several questions seem to be warranted.

First, the actions are uncoordinated. Brazil, France, and the US have reached conclusions about different social media companies and acted without consulting one another. How quickly will other countries consider their particular situation and reach similar conclusions about free range technology outfits?

Second, why have legal authorities and legislators in many countries failed to recognize the issues radiating from social media and related technology operators? Was it the novelty of technology? Was it a lack of technology savvy? Was it moral or financial considerations?

Third, how will the harms be remediated? Is it enough to block a service or change penalties for certain companies?

I am personally not moved by those who say speech must be free and unfettered. Sorry. The obvious harms outweigh that self-serving statement from those who are mesmerized by the online world or paid to hold that idea and promote it. I understand that a percentage of students will become high achievers with or without traditional reading, writing, and arithmetic. However, my concern is the other 95 percent of students. Structured learning is necessary for a society to function. That’s why there is education.

I don’t have any big ideas about ameliorating the obvious damage done by social media. I am a dinobaby and largely untouched by TikTok-type videos or Facebook-type pressures. I am, however, delighted to be able to cite three examples of long overdue action by Brazilian, French, and US officials. Will some of these Wild West digital cowboys end up in jail? I might support that, pardner.

Stephen E Arnold, September 2, 2024

Google Claims It Fixed Gemini’s “Degenerate” People

September 2, 2024

History revision is a problem. It’s been a problem for…well…since the start of recorded history. The Internet and mass media are infamous for being incorrect about historical facts, but image-generating AI, like Google’s Gemini, is even worse. TechCrunch explains what Google did to correct its inaccurate algorithm: “Google Says It’s Fixed Gemini’s People-Generating Feature.”

Google released Gemini in December 2023, then in February 2024 paused its ability to generate images of people after critics called the results too “woke,” “politically incorrect,” and “historically inaccurate.” The worst of Gemini’s offending actions came when, for example, it was asked to depict an ethnically diverse Roman legion and complied, which fit the woke DEI agenda. When it was asked to make an equally ethnically diverse Zulu warrior army, however, Gemini returned only brown-skinned people. The latter is historically accurate. Apparently Google doesn’t want to offend western ethnic minorities and, of course, Europe (where light-skinned pink people originate) was ethnically diverse centuries ago.

Everything was A-OK until someone invoked Godwin’s Law by asking Gemini to generate (degenerate [sic]) an image of Nazis. Gemini returned an ethnically diverse picture with all types of Nazis, not the historically accurate light-skinned Germans native to Europe.

Google claims it fixed Gemini, and the fix took way longer than planned. The people-generation feature is available only on paid Gemini plans. How does Google plan to make its AI people less degenerative? Here’s how:

“According to the company, Imagen 3, the latest image-generating model built into Gemini, contains mitigations to make the people images Gemini produces more ‘fair.’ For example, Imagen 3 was trained on AI-generated captions designed to ‘improve the variety and diversity of concepts associated with images in [its] training data,’ according to a technical paper shared with TechCrunch. And the model’s training data was filtered for ‘safety,’ plus ‘review[ed] … with consideration to fairness issues,’ claims Google…. ‘We’ve significantly reduced the potential for undesirable responses through extensive internal and external red-teaming testing, collaborating with independent experts to ensure ongoing improvement,’ the spokesperson continued. ‘Our focus has been on rigorously testing people generation before turning it back on.’”

Google will eventually make it work and the company is smart to limit Gemini’s usage to paid subscriptions. Limiting the user pool means Google can better control the chatbot and (if need be) turn it off. It will work until bad actors learn how to abuse the chatbot again for their own sheets and giggles.

Whitney Grace, September 2, 2024