No Llama 3 for EU

July 31, 2024

Frustrated with European regulators, Meta is ready to take its AI ball and go home. Axios reveals, “Scoop: Meta Won’t Offer Future Multimodal AI Models in EU.” Reporter Ina Fried writes:

“Meta will withhold its next multimodal AI model — and future ones — from customers in the European Union because of what it says is a lack of clarity from regulators there, Axios has learned. Why it matters: The move sets up a showdown between Meta and EU regulators and highlights a growing willingness among U.S. tech giants to withhold products from European customers. State of play: ‘We will release a multimodal Llama model over the coming months, but not in the EU due to the unpredictable nature of the European regulatory environment,’ Meta said in a statement to Axios.”

So there. And Meta is not the only firm petulant in the face of privacy regulations. Apple recently made a similar declaration. So governments may not be able to regulate AI, but AI outfits can try to regulate governments. Seems legit. The EU’s stance is that Llama 3 may not feed on European users’ Facebook and Instagram posts. Does Meta hope FOMO will make the EU back down? We learn:

“Meta plans to incorporate the new multimodal models, which are able to reason across video, audio, images and text, in a wide range of products, including smartphones and its Meta Ray-Ban smart glasses. Meta says its decision also means that European companies will not be able to use the multimodal models even though they are being released under an open license. It could also prevent companies outside of the EU from offering products and services in Europe that make use of the new multimodal models. The company is also planning to release a larger, text-only version of its Llama 3 model soon. That will be made available for customers and companies in the EU, Meta said.”

The company insists EU user data is crucial to be sure its European products accurately reflect the region’s terminology and culture. Sure. That is almost a plausible excuse.

Cynthia Murrell, July 31, 2024

Meta and China: Yeah, Unauthorized Use of Llama. Meh

November 8, 2024

This post is the work of a dinobaby. If there is art, accept the reality of our using smart art generators. We view it as a form of amusement.

That open source smart software, you remember, makes everything computer- and information-centric so much better. One open source champion laboring as a marketer told me, “Open source means no more contractual handcuffs, the ability to make changes without a hassle, and evidence of the community.”

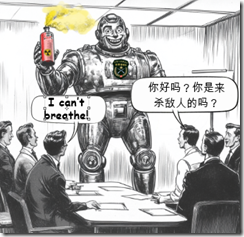

An AI-powered robot enters a meeting. One savvy executive asks in Chinese, “How are you? Are you here to kill the enemy?” Another executive, seated closer to the gas emitted from a canister marked with hazardous materials warnings, gasps, “I can’t breathe!” Thanks, Midjourney. Good enough.

How did those assertions work for China? If I can believe the “trusted” outputs of the “real” news outfit Reuters, just super cool. According to “Exclusive: Chinese Researchers Develop AI Model for Military Use on Back of Meta’s Llama,” those engaging folk of the Middle Kingdom:

… have used Meta’s publicly available Llama model to develop an AI tool for potential military applications, according to three academic papers and analysts.

Now that’s community!

The write up wobbles through some words about the alleged Chinese efforts and adds:

Meta has embraced the open release of many of its AI models, including Llama. It imposes restrictions on their use, including a requirement that services with more than 700 million users seek a license from the company. Its terms also prohibit use of the models for “military, warfare, nuclear industries or applications, espionage” and other activities subject to U.S. defense export controls, as well as for the development of weapons and content intended to “incite and promote violence”. However, because Meta’s models are public, the company has limited ways of enforcing those provisions.

In the spirit of such comments as “Senator, thank you for that question,” a Meta (aka Facebook) wizard allegedly said:

“That’s a drop in the ocean compared to most of these models (that) are trained with trillions of tokens so … it really makes me question what do they actually achieve here in terms of different capabilities,” said Joelle Pineau, a vice president of AI Research at Meta and a professor of computer science at McGill University in Canada.

My interpretation of the insight? Hey, that’s okay.

As readers of this blog know, I am not too keen on making certain information public. Unlike some outfits’ essays, Beyond Search tries to address topics without providing information of a sensitive nature. For example, search and retrieval is a hard problem. Big whoop.

But posting what I would term sensitive information as usable software for anyone to download and use strikes me as something which must be considered in a larger context; for example, a bad actor downloading an allegedly harmless penetration testing utility of the Metasploit-ilk. Could a bad actor use these types of software to compromise a commercial or government system? The answer is, “Duh, absolutely.”

Meta’s founder of the super helpful Facebook wants to bring people together. Community. Kumbaya. Sharing.

That has been the lubricant for amassing power, fame, and money… Oh, also a big gold necklace similar to the ones I saw labeled “Pharaoh jewelry.”

Observations:

- Meta (Facebook) does open source for one reason: To blunt initiatives from its perceived competitors and to position itself to make money.

- Users of Meta’s properties are only data inputters and action points; that is, they are instrumentals.

- Bad actors love that open source software. They download it. They study it. They repurpose it to help the bad actors achieve their goals.

Did Meta include a kill switch in its open source software? Oh, sure. Meta is far-sighted, concerned with misuse of its innovations, and super duper worried about what an adversary of the US might do with that technology. On the bright side, if negotiations are required, the head of Meta (Facebook) allegedly speaks Chinese. Is that a benefit? He could talk with the weaponized robot dispensing biological warfare agents.

Stephen E Arnold, November 8, 2024

Llama Beans? Is That the LLM from Zuckbook?

August 4, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

We love open-source projects. Camelids that masquerade as such, not so much. According to The Register, “Meta Can Call Llama 2 Open Source as Much as It Likes, but That Doesn’t Mean It Is.” The company asserts its new large language model is open source because it is freely available for research and (some) commercial use. Are Zuckerberg and his team of Meta marketers fuzzy on the definition of open source? Writer Steven J. Vaughan-Nichols builds his case with quotes from several open source authorities. First up:

“As Erica Brescia, a managing director at RedPoint, the open source-friendly venture capital firm, asked: ‘Can someone please explain to me how Meta and Microsoft can justify calling Llama 2 open source if it doesn’t actually use an OSI [Open Source Initiative]-approved license or comply with the OSD [Open Source Definition]? Are they intentionally challenging the definition of OSS [Open Source Software]?’”

Maybe they are trying. After all, open source is good for business. And being open to crowd-sourced improvements does help the product. However, as the post continues:

“The devil is in the details when it comes to open source. And there, Meta, with its Llama 2 Community License Agreement, falls on its face. As The Register noted earlier, the community agreement forbids the use of Llama 2 to train other language models; and if the technology is used in an app or service with more than 700 million monthly users, a special license is required from Meta. It’s also not on the Open Source Initiative’s list of open source licenses.”

Next, we learn OSI’s executive director Stefano Maffulli directly states Llama 2 does not meet his organization’s definition of open source. The write-up quotes him:

“While I’m happy that Meta is pushing the bar of available access to powerful AI systems, I’m concerned about the confusion by some who celebrate Llama 2 as being open source: if it were, it wouldn’t have any restrictions on commercial use (points 5 and 6 of the Open Source Definition). As it is, the terms Meta has applied only allow some commercial use. The keyword is some.”

Maffulli further clarifies Meta’s license specifically states Amazon, Google, Microsoft, Bytedance, Alibaba, and any startup that grows too much may not use the LLM. Such a restriction is a no-no in actual open source projects. Finally, Software Freedom Conservancy executive Karen Sandler observes:

“It looks like Meta is trying to push a license that has some trappings of an open source license but, in fact, has the opposite result. Additionally, the Acceptable Use Policy, which the license requires adherence to, lists prohibited behaviors that are very expansively written and could be very subjectively applied.”

Perhaps most egregious for Sandler is the absence of a public drafting or comment process for the Llama 2 license. Llamas are not particularly speedy creatures.

Cynthia Murrell, August 4, 2023

Stanford: Llama Hallucinating at the Dollar Store

March 21, 2023

Editor’s Note: This essay is the work of a real, and still alive, dinobaby. No smart software involved with the exception of the addled llama.

What happens when folks at Stanford University use the output of OpenAI to create another generative system? First, a blog article appears; for example, “Stanford’s Alpaca Shows That OpenAI May Have a Problem.” Second, I am waiting for legal eagles to take flight. Some may already be aloft and circling.

A hallucinating llama which confused grazing on other wizards’ work with munching on mushrooms. The art was a creation of ScribbledDiffusion.com. The smart software suggests the llama is having a hallucination.

What’s happening?

The model trained from OWW or Other Wizards’ Work mostly works. The gotcha is that using OWW without any silly worrying about copyrights was cheap. According to the write up, the total (excluding wizards’ time) was $600.

The article pinpoints the issue:

Alignment researcher Eliezer Yudkowsky summarizes the problem this poses for companies like OpenAI: “If you allow any sufficiently wide-ranging access to your AI model, even by paid API, you’re giving away your business crown jewels to competitors that can then nearly-clone your model without all the hard work you did to build up your own fine-tuning dataset.” What can OpenAI do about that? Not much, says Yudkowsky: “If you successfully enforce a restriction against commercializing an imitation trained on your I/O – a legal prospect that’s never been tested, at this point – that means the competing checkpoints go up on BitTorrent.”

I love the rapid rise in smart software uptake and now the snappy shift to commoditization. The VCs counting on big smart software payoffs may want to think about why the llama in the illustration looks as if synapses are forming new, low cost connections. Low cost as in really cheap, I think.

Stephen E Arnold, March 21, 2023

Does a LLamA Bite? No, But It Can Be Snarky

February 28, 2023

Everyone in Harrod’s Creek knows the name Yann LeCun. The general view is that when it comes to smart software, this wizard wrote or helped write the book. I spotted a tweet thread “LLaMA Is a New *Open-Source*, High-Performance Large Language Model from Meta AI – FAIR.” The link to the Facebook research paper “LLaMA: Open and Efficient Foundation Language Models” explains the innovation for smart software enthusiasts. In a nutshell, the Zuck approach is bigger, faster, and trained without using data not available to everyone. Also, it does not require Googzilla scale hardware for some applications.

That’s the first tip off that the technical paper has a snarky sparkle. Exactly what data have been used to train Google and other large language models? The implicit idea is that when the legal eagles flock to sue for copyright-violating actions, the Zuckers will allegedly be flying in clean air.

Here are a few other snarkifications I spotted:

- Use small models trained on more data. The idea is that others train big Googzilla sized models on data, some of which is not publicly available.

- The Zuck approach uses an “efficient implementation of the causal multi-head attention operator.” The idea is that the Zuck method is more efficient; therefore, better.

- In testing performance, the results are all over the place. The reason? The method for determining performance is not very good. Okay, still Meta is better. The implication is that one should trust Facebook. Okay. That’s scientific.

- And cheaper? Sure. There will be fewer legal fees to deal with pesky legal challenges about fair use.

What’s my take? Another open source tool will lead to applications built on top of the Zuckbook’s approach.

Now the developers and users will have to decide if the LLamA can bite. Does Facebook have its wizardly head in the Azure clouds? Will the Sages of Amazon take note?

Tough questions. At first glance, llamas have other means of defending themselves. Teeth may not be needed. Yes, that’s snarky.

Stephen E Arnold, February 28, 2023

How Does Smart Software Interpret a School Test

January 29, 2025

A blog post from an authentic dinobaby. He’s old; he’s in the sticks; and he is deeply skeptical.

I spotted an article titled “‘Is This Question Easy or Difficult to You?’: This LSAT Reading Comprehension Question Is Breaking Brains.” Click bait? Absolutely.

Here’s the text to figure out:

Physical education should teach people to pursue healthy, active lifestyles as they grow older. But the focus on competitive sports in most schools causes most of the less competitive students to turn away from sports. Having learned to think of themselves as unathletic, they do not exercise enough to stay healthy.

Imagine you are sitting in a hot, crowded examination room. No one wants to be there. You have to choose one of the following solutions.

[a] Physical education should include noncompetitive activities.

[b] Competition causes most students to turn away from sports.

[c] People who are talented at competitive physical endeavors exercise regularly.

[d] The mental aspects of exercise are as important as the physical ones.

[e] Children should be taught the dangers of a sedentary lifestyle.

Okay, what did you select?

Well, the “correct” answer is [a], Physical education should include noncompetitive activities.

Now how did some of the LLMs or smart software do?

ChatGPT o1 settled on [a].

Claude Sonnet 3.5 spit out a page of text but did conclude that the correct answer was [a].

Gemini 1.5 Pro concluded that [a] was correct.

Llama 3.2 90B output two sentences and the correct answer, [a].

Will students use large language models for school work, tests, and real life?

Yep. Will students question or doubt the outputs? Nope.

Are the LLMs “good enough”?

Yep.

Stephen E Arnold, January 29, 2025

The Many Faces of Zuckbook

March 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

As evidenced by his business decisions, Mark Zuckerberg seems to be a complicated fellow. A couple of recent articles illustrate the contrast: On one hand is his commitment to support open source software, an apparently benevolent position. On the other, Meta is once again in the crosshairs of EU privacy advocates for what they insist is its disregard for the law.

First, we turn to a section of VentureBeat’s piece, “Inside Meta’s AI Strategy: Zuckerberg Stresses Compute, Open Source, and Training Data.” In it, reporter Sharon Goldman shares highlights from Meta’s Q4 2023 earnings call. She emphasizes Zuckerberg’s continued commitment to open source software, specifically AI software Llama 3 and PyTorch. He touts these products as keys to “innovation across the industry.” Sounds great. But he also states:

“Efficiency improvements and lowering the compute costs also benefit everyone including us. Second, open source software often becomes an industry standard, and when companies standardize on building with our stack, that then becomes easier to integrate new innovations into our products.”

Ah, there it is.

Our next item was apparently meant to be sneaky, but who did Meta think it was fooling? The Register reports, “Meta’s Pay-or-Consent Model Hides ‘Massive Illegal Data Processing Ops’: Lawsuit.” Meta is attempting to “comply” with the EU’s privacy regulations by making users pay to opt in to them. That is not what regulators had in mind. We learn:

“Those of us with aunties on FB or friends on Instagram were asked to say yes to data processing for the purpose of advertising – to ‘choose to continue to use Facebook and Instagram with ads’ – or to pay up for a ‘subscription service with no ads on Facebook and Instagram.’ Meta, of course, made the changes in an attempt to comply with EU law. But privacy rights folks weren’t happy about it from the get-go, with privacy advocacy group noyb (None Of Your Business), for example, sarcastically claiming Meta was proposing you pay it in order to enjoy your fundamental rights under EU law. The group already challenged Meta’s move in November, arguing EU law requires consent for data processing to be given freely, rather than to be offered as an alternative to a fee. Noyb also filed a lawsuit in January this year in which it objected to the inability of users to ‘freely’ withdraw data processing consent they’d already given to Facebook or Instagram.”

And now eight European Consumer Organization (BEUC) members have filed new complaints, insisting Meta’s pay-or-consent tactic violates the European General Data Protection Regulation (GDPR). While that may seem obvious to some, Meta insists it is in compliance with the law. Because of course it does.

Cynthia Murrell, March 29, 2024

What Happens When Understanding Technology Is Shallow? Weakness

February 14, 2025

Yep, a dinobaby wrote this blog post. Replace me with a subscription service or a contract worker from Fiverr. See if I care.

I like this question. Even more satisfying is that a big name seems to have answered it. I refer to an essay by Gary Marcus, “The Race for ‘AI Supremacy’ Is Over — at Least for Now.”

Here’s the key passage in my opinion:

China caught up so quickly for many reasons. One that deserves Congressional investigation was Meta’s decision to open source their LLMs. (The question that Congress should ask is, how pivotal was that decision in China’s ability to catch up? Would we still have a lead if they hadn’t done that? Deepseek reportedly got its start in LLMs retraining Meta’s Llama model.) Putting so many eggs in Altman’s basket, as the White House did last week and others have before, may also prove to be a mistake in hindsight. … The reporter Ryan Grim wrote yesterday about how the US government (with the notable exception of Lina Khan) has repeatedly screwed up by placating big companies and doing too little to foster independent innovation

The write up is quite good. What’s missing, in my opinion, is the linkage of a probe to determine how a technology innovation released as a not-so-stealthy open source project can affect the US financial markets. The result was satisfying to the Chinese planners.

Also, the write up does not put the probe or “foray” in a strategic context. China wants to make certain its simple message “China smart, US dumb” gets into the world’s communication channels. That worked quite well.

Finally, the write up does not point out that the US approach to AI has given China an opportunity to demonstrate that it can borrow and refine with aplomb.

Net net: I think China is doing Shein and Temu in the AI and smart software sector.

Stephen E Arnold, February 14, 2025

Debbie Downer Says, No AI Payoff Until 2026

December 27, 2024

Holiday greetings from the Financial Review. Its story “Wall Street Needs to Prepare for an AI Winter” is a joyous description of what’s coming down the Information Highway. The uplifting article sings:

shovelling more and more data into larger models will only go so far when it comes to creating “intelligent” capabilities, and we’ve just about arrived at that point. Even if more data were the answer, those companies that indiscriminately vacuumed up material from any source they could find are starting to struggle to acquire enough new information to feed the machine.

Translating to rural Kentucky speak: “We been shoveling in the horse stall and ain’t found the nag yet.”

The flickering light bulb has apparently illuminated the idea that smart software is expensive to develop, train, optimize, run, market, and defend against allegations of copyright infringement.

To add to the profit shadow, Debbie Downer’s cousin compared OpenAI to Visa. The idea in “OpenAI Is Visa” is that Sam AI-Man’s company is working overtime to preserve its lead in AI and become a monopoly before competitors figure out how to knock off OpenAI. The write up says:

Either way, Visa and OpenAI seem to agree on one thing: that “competition is for losers.”

To add to the uncertainty about US AI “dominance,” VentureBeat reports:

DeepSeek-V3, ultra-large open-source AI, outperforms Llama and Qwen on launch.

Does that suggest that the squabbling, mud-wrestling US firms can be body slammed by more agile Chinese AI grapplers? Who knows. However, in a series of tweets, DeepSeek suggested that its “cost” was less than $6 million. The idea is that what Chinese electric car pricing is doing to some EV manufacturers, China’s AI will do to US AI. Better and faster? I don’t know, but that “cheaper” angle will resonate with those asked to pump cash into the Big Dogs of US AI.

In January 2023, many were struck by the wonders of smart software. Will the same festive atmosphere prevail in 2025?

Stephen E Arnold, December 27, 2024

China Smart, US Dumb: LLMs Bad, MoEs Good

November 21, 2024

Okay, an “MoE” is an alternative to LLMs. An “MoE” is a mixture of experts. An LLM is a one-trick pony starting to wheeze.

Google, Apple, Amazon, GitHub, OpenAI, Facebook, and other organizations are at the top of the list when people think about AI innovations. We forget about other countries and universities experimenting with the technology. Tencent is a China-based technology conglomerate located in Shenzhen, and it is the world’s largest video game company when equity investments are considered. Tencent is also the developer of Hunyuan-Large, which the company describes as the largest open-source MoE.

According to Tencent, LLMs (large language models) are things of the past. LLMs served their purpose to advance AI technology, but Tencent realized that it was necessary to optimize resource consumption while simultaneously maintaining high performance. That’s when the company turned to what it sees as the next evolution of LLMs: MoE, or mixture-of-experts, models.

Cornell University’s open-access science archive posted this paper on the MoE: “Hunyuan-Large: An Open-Source MoE Model With 52 Billion Activated Parameters By Tencent” and the abstract explains it is a doozy of a model:

“In this paper, we introduce Hunyuan-Large, which is currently the largest open-source Transformer-based mixture of experts model, with a total of 389 billion parameters and 52 billion activation parameters, capable of handling up to 256K tokens. We conduct a thorough evaluation of Hunyuan-Large’s superior performance across various benchmarks including language understanding and generation, logical reasoning, mathematical problem-solving, coding, long-context, and aggregated tasks, where it outperforms LLama3.1-70B and exhibits comparable performance when compared to the significantly larger LLama3.1-405B model. Key practice of Hunyuan-Large include large-scale synthetic data that is orders larger than in previous literature, a mixed expert routing strategy, a key-value cache compression technique, and an expert-specific learning rate strategy. Additionally, we also investigate the scaling laws and learning rate schedule of mixture of experts models, providing valuable insights and guidance for future model development and optimization. The code and checkpoints of Hunyuan-Large are released to facilitate future innovations and applications.”
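For readers wondering how a model can have 389 billion parameters but only 52 billion “activated” ones, here is a minimal sketch of generic top-k expert routing. It is not Tencent’s “mixed expert routing strategy,” whose details the abstract does not give. The function names, the toy experts, and the gate scores are all made up for illustration. The point is only that a gate picks a few experts per input, so most of the parameters sit idle on any single pass.

```python
import math


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights.

    Hypothetical helper: a generic top-k gate, not Hunyuan-Large's router.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k):
    """Run only the selected experts and blend their outputs."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


def activated_fraction(total_params, activated_params):
    """Share of the parameters that do work for one input."""
    return activated_params / total_params


if __name__ == "__main__":
    # Four toy "experts"; each one just scales its input.
    experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
    gate_scores = [0.1, 2.0, 0.3, 1.5]  # illustrative numbers only
    print(route_top_k(gate_scores, k=2))
    print(moe_forward(1.0, experts, gate_scores, k=2))
    # Figures from the abstract: 52 billion activated of 389 billion total.
    print(round(activated_fraction(389e9, 52e9), 3))
```

Using the abstract’s own numbers, roughly 13 percent of the parameters are in play for a given token. That is the resource saving the MoE crowd is selling.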

Tencent has released Hunyuan-Large as an open source project, so other AI developers can use the technology! The well-known companies will definitely be experimenting with Hunyuan-Large. Is there an ulterior motive? Sure. Money, prestige, and power are at stake in the AI global game.

Whitney Grace, November 21, 2024