Eric Schmidt, Truth Teller at Stanford University, Bastion of Ethical Behavior

August 26, 2024

![Green dinosaur icon](https://arnoldit.com/wordpress/wp-content/uploads/2024/08/green-dino_thumb_thumb_thumb_thumb_t1_thumb_thumb_thumb.gif) This essay is the work of a dumb dinobaby. No smart software required.

I spotted some of the quotes in assorted online posts about Eric Schmidt’s talk / interview at Stanford University. I wanted to share a transcript of the remarks. You can find the ASCII transcript on GitHub at this link. For those interested in how Silicon Valley concepts influence one’s view of appropriate behavior, this talk is a gem. Is it at the level of the Confessions of St. Augustine? Well, the content is darned close in my opinion. Students of Google’s decision making past and present may find some guideposts. Aspiring “leadership” type people may well find tips and tricks.

Stephen E Arnold, August 26, 2024

Meta Leadership: Thank you for That Question

August 26, 2024

Who needs the Dark Web when one has Facebook? We learn from The Hill, “Lawmakers Press Meta Over Illicit Drug Advertising Concerns.” Writer Sarah Fortinsky pulls highlights from the open letter a group of House representatives sent directly to Mark Zuckerberg. The rebuke follows a March report from The Wall Street Journal that Meta was under investigation for “facilitating the sale of illicit drugs.” Since that report, the lawmakers lament, Meta has continued to run such ads. We learn:

“The Tech Transparency Project recently reported that it found more than 450 advertisements on those platforms that sell pharmaceuticals and other drugs in the last several months. ‘Meta appears to have continued to shirk its social responsibility and defy its own community guidelines. Protecting users online, especially children and teenagers, is one of our top priorities,’ the lawmakers wrote in their letter, which was signed by 19 lawmakers. ‘We are continuously concerned that Meta is not up to the task and this dereliction of duty needs to be addressed,’ they continued. Meta uses artificial intelligence to moderate content, but the Journal reported the company’s tools have not managed to detect the drug advertisements that bypass the system.”

The bipartisan representatives did not shy from accusing Meta of dragging its heels because it profits off these illicit ad campaigns:

“The lawmakers said it was ‘particularly egregious’ that the advertisements were ‘approved and monetized by Meta.’ … The lawmakers noted Meta repeatedly pushes back against their efforts to establish greater data privacy protections for users and makes the argument ‘that we would drastically disrupt this personalization you are providing,’ the lawmakers wrote. ‘If this personalization you are providing is pushing advertisements of illicit drugs to vulnerable Americans, then it is difficult for us to believe that you are not complicit in the trafficking of illicit drugs,’ they added.”

The letter includes a list of questions for Meta. There is a request for data on how many of these ads the company has discovered itself and how many it missed that were discovered by third parties. It also asks about the ad review process, how much money Meta has made off these ads, what measures are in place to guard against them, and how minors have interacted with them. The legislators also ask how Meta uses personal data to target these ads, a secret the company will surely resist disclosing. The letter gives Zuckerberg until September 6 to respond.

Cynthia Murrell, August 26, 2024

Threat. What Threat? Google Does Not Behave Improperly. No No No.

August 21, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Amazing write up from a true poohbah publication: “Google Threatened Tech Influencers Unless They Preferred the Pixel.” Even more amazing is the Googley response: “We missed the mark?”

Thanks, MSFT Copilot. Good enough.

Let’s think about this.

The poohbah publication reports:

A Pixel 9 review agreement required influencers to showcase the Pixel over competitors or have their relationship terminated. Google now says the language ‘missed the mark.’

What?

I thought Google was working overtime to build relationships and develop trust. I thought Google was characterized unfairly as a monopolist. I thought Google had some of that good old “Don’t be evil” DNA.

These misconceptions demonstrate how out of touch a dinobaby like me can be.

The write up points out:

The Verge has independently confirmed screenshots of the clause in this year’s Team Pixel agreement for the new Pixel phones, which various influencers began posting on X and Threads last night. The agreement tells participants they’re “expected to feature the Google Pixel device in place of any competitor mobile devices.” It also notes that “if it appears other brands are being preferred over the Pixel, we will need to cease the relationship between the brand and the creator.” The link to the form appears to have since been shut down.

Does that sound like a threat? As a dinobaby and non-influencer, I think the Google is just trying to prevent miscreants like those people posting information about Russia’s special operation from misinterpreting the Pixel gadgets. Look. Google was caught off guard and flipped into Code Red or whatever. Now the Gemini smart software is making virtually everyone’s life online better.

I think the Google is trying to be “honest.” The term, like the word “ethical,” can house many meanings. Consequently, non-Googley phones, thoughts, ideas, and hallucinations are not permitted. Otherwise what? The write up explains:

Those terms certainly caused confusion online, with some assuming such terms apply to all product reviewers. However, that isn’t the case. Google’s official Pixel review program for publications like The Verge requires no such stipulations. (And, to be clear, The Verge would never accept such terms, in accordance with our ethics policy.)

The poohbah publication has ethics. That’s super.

Here are the “last words” in the article about this issue that missed the mark:

Influencer is a broad term that encompasses all sorts of creators. Many influencers adhere to strict ethical standards, but many do not. The problem is there are no guidelines to follow and limited disclosure to help consumers if what they’re reading or watching was paid for in some way. The FTC is taking some steps to curtail fake and misleading reviews online, but as it stands right now, it can be hard for the average person to spot a genuine review from marketing. The Team Pixel program didn’t create this mess, but it is a sobering reflection of the murky state of online reviews.

Why would big outfits appear to threaten people? There are no consequences. And most people don’t care. Threats are enervating. There’s probably a course at Stanford University on the subject.

Net net: This is new behavior? Nope. It is characteristic of a largely unregulated outfit with lots of money which, at the present time, feels threatened. Why not do what’s necessary to remain wonderful, loved, and trusted? Or else!

Stephen E Arnold, August 21, 2024

A Xoogler Reveals Why Silicon Valley Is So Trusted, Loved, and Respected

August 20, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Amazing as it seems, a Xoogler — in this case, the former adult at Google — is spilling the deepest, darkest secrets of success. The slick executive gave a talk at Stanford University. Was the talk a deep fake? I heard that the video was online and then disappeared. Then I spotted a link to a video which purported to be the “real” Ex-Google CEO’s banned interview. It may or may not be at this link because… Google’s policies about censorship are mysterious to me.

Which persona is real: The hard edged executive or the bad actor in the mirror? Thanks, MSFT Copilot. How is the security effort going?

Let’s cut to the chase. I noted the Wall Street Journal’s story “Eric Schmidt Walks Back Claim Google Is Behind on AI Because of Remote Work.” Someone more alert than I noticed an interesting comment; to wit:

If TikTok is banned, here’s what I propose each and every one of you do: Say to your LLM the following: “Make me a copy of TikTok, steal all the users, steal all the music, put my preferences in it, produce this program in the next 30 seconds, release it, and in one hour, if it’s not viral, do something different along the same lines.” That’s the command. Boom, boom, boom, boom. So, in the example that I gave of the TikTok competitor — and by the way, I was not arguing that you should illegally steal everybody’s music — what you would do if you’re a Silicon Valley entrepreneur, which hopefully all of you will be, is if it took off, then you’d hire a whole bunch of lawyers to go clean the mess up, right? But if nobody uses your product, it doesn’t matter that you stole all the content.

And do not quote me.

I want to point out that this snip comes from the Slashdot post from Msmash on August 16, 2024.

Several points dug into my dinobaby brain:

- Granting an interview, letting it be captured on video, and trying to explain away the remarks strikes me as a little wild and frisky. Years ago, this same Googler was allegedly annoyed when an online publication revealed facts about him located via Google.

- Remaining in the news cycle in the midst of a political campaign, a “special operation” in Russia, and the wake of the Department of Justice’s monopoly decision is interesting. Those comments, like the allegedly accurate one quoted above, put the interest in the Google on some people’s radar. Legal eagles, are your sensing devices beeping?

- The entitled behavior of saying one thing to students and then mansplaining that the ideas were not reflective of the inner self is an illustration of behavior my mother would have found objectionable. I listened to my mother. To whom does the Xoogler listen?

Net net: Stanford’s president was allegedly making up information and he subsequently resigned. Now a guest lecturer explains that it is okay to act in what some might call an illegal manner. What are those students learning? I would assert that it is not ethical behavior.

Stephen E Arnold, August 20, 2024

Telegram Rolled Over for Russia. Has Google YouTube Become a Circus Animal Too?

August 19, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Most of the people with whom I interact do not know that Telegram apparently took steps to filter content which the Kremlin deemed unsuitable for Russian citizens. Information reaching me in late April 2024 asserted that Ukrainian government units were no longer able to use Telegram Messenger functions to disseminate information to Telegram users in Russia about Mr. Putin’s “special operation.” Telegram has made a big deal about its commitment to free speech, and it has taken a very, very light touch with censoring content and transactions on its basic and “secret” messaging service. Then, at the end of April 2024, Mr. Pavel Durov flipped, apparently in response to either a request or a threat from someone in Russia. The change in direction for a messaging app with 900 million users is a peanut compared to Meta WhatsApp’s two billion or so. But when free speech becomes obeisance, I take note.

I have been tracking Russian YouTubers because I have found that some of the videos provide useful insights into the impact of the “special operation” on prices, the attitudes of young people, imagery about the condition of housing, information about day-to-day banking matters, and the demeanor of people in the background of some YouTube, TikTok, Rutube, and Kick videos.

I want to mention that Alphabet Google YouTube a couple of years ago took action to suspend Russian state channels from earning advertising revenue from the Google “we pay you” platform. Facebook and the “old” Twitter did this as well. I have heard that Google and YouTube leadership understood that Ukraine wanted those “propaganda channels” blocked. The online advertising giant complied. About 9,000 channels were demonetized or made difficult to find (to be fair, finding certain information on YouTube is not an easy task). Now Russia has convinced Google to respond to its wishes.

So what? To most people, this is not important. Just block the “bad” content. Get on with life.

I watched a video called “Demonetized! Update and the Future.” The presenter is a former business manager who turned to YouTube to document his view of Russian political, business, and social events. The gentleman — allegedly named “Konstantin” — worked in the US. He returned to Russia and then moved to Uzbekistan. His YouTube channel is (was) titled Inside Russia.

The video “Demonetized! Update and the Future” caught my attention. Please note that the video may be unavailable when you read this blog post. “Demonetization” is Google-speak for cutting off advertising revenue, both to Google itself and to the creator.

Several other Russian vloggers producing English-language content about Russia, the Land of Putin on the Fritz, have expressed concern about their vlogging since Russia slowed down YouTube bandwidth, making some content unwatchable. Others have taken steps to avoid problems; for example, creators Svetlana, Niki, and Nfkrz have left Russia. Others are keeping a low profile.

This raises questions about the management approach in a large and mostly unregulated American high-technology company. According to Inside Russia’s owner Konstantin, YouTube offered no explanation for the demonetization of the channel. Konstantin asserts that YouTube is not providing information to him about its unilateral action. My hunch is that he does not want to come out and say, “The Kremlin pressured an American company to cut off my information about the impact of the ‘special operation’ on Russia.”

Several observations:

- I have heard but not verified that Apple has cooperated with the Kremlin’s wish for certain content to be blocked so that it does not quickly reach Russian citizens. It is unclear what has caused the US companies to knuckle under. My initial thought was, “Money.” These outfits want to obtain revenues from Russia and its federation, hoping to avoid a permanent ban when the “special operation” ends. The inducements (and I am speculating) might also have a kinetic component. That occurs when a person falls out of a third story window and then impacts the ground. Yes, falling out of windows can happen.

- I surmise that the vloggers who are “demonetized” are probably on a list. These individuals and their families are likely to have a tough time getting a Russian government job, a visa, or a passport. The list may have the address for the individual who is generating unacceptable-to-the-Kremlin content. (There is a Google Map for Uzbekistan’s suburb where Konstantin may be living.)

- It is possible that YouTube is doing nothing other than letting its “algorithm” make decisions. Demonetizing Russian YouTubers is nothing more than an unintended consequence of no material significance.

- Does YouTube deserve some attention because its mostly anything-goes approach to content seems to be malleable? For example, I can find information about how to steal a commercial software program owned by a German company via the YouTube search box. Why is this crime not filtered? Is a fellow talking about the “special operation” subject to a different set of rules?

Screen shot of suggested terms for the prompt “Magix Vegas Pro 21 crack” taken on August 16, 2024, at 2:24 pm US Eastern.

I have seen some interesting corporate actions in my 80 years. But the idea that a country, not particularly friendly to the U.S. at this time, can cause an American company to take what appears to be a specific step designed to curtail information flow is remarkable. Perhaps when Alphabet executives explain to Congress the filtering of certain American news related to events linked to the current presidential campaign, more information will be made available?

If Konstantin’s allegations about demonetization are accurate, what’s next on Alphabet, Google, and YouTube’s to-do list for information snuffing or information cleansing?

Stephen E Arnold, August 18, 2024

An Ed Critique That Pans the Sundar & Prabhakar Comedy Act

August 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read Ed.

Ed refers to Edward Zitron, the thinker behind Where’s Your Ed At. The write up which caught my attention is “Monopoly Money.” I think that Ed’s one-liners will not be incorporated into the Sundar & Prabhakar comedy act. The flubbed live demos are knee slappers, but Ed’s write up is nipping at the heels of the latest Googley gaffe.

Young people are keen observers of certain high-technology companies. What happens if one of the giants becomes virtual and moves to a Dubai-type location? Who has jurisdiction? Regulatory enforcement delayed means big high-tech outfits are more portable than old-fashioned monopolies. Thanks, MSFT Copilot. Big industrial images are clearly a core competency you have.

Ed’s focus is on the legal decision which concluded that the online advertising company is a monopoly in “general text advertising.” The essay states:

The ruling precisely explains how Google managed to limit competition and choice in the search and ad markets. Documents obtained through discovery revealed the eye-watering amounts Google paid to Samsung ($8 billion over four years) and Apple ($20 billion in 2022 alone) to remain the default search engine on their devices, as well as Mozilla (around $500 million a year), which (despite being an organization that I genuinely admire, and that does a lot of cool stuff technologically) is largely dependent on Google’s cash to remain afloat.

Ed notes:

Monopolies are a big part of why everything feels like it stopped working.

Ed is on to something. The large technology outfits in the US control online. But one of the downstream consequences of what I call the Silicon Valley way or the Googley approach to business is that other industries and market sectors have watched how modern monopolies work. The result is that concentration of power has not been a regulatory priority. The role of data aggregation has been ignored. As a result, outfits like Kroger (a grocery company) are trying to apply Googley tactics to vegetables.

Ed points out:

Remember when “inflation” raised prices everywhere? It’s because the increasingly-dwindling amount of competition in many consumer goods companies allowed them to all raise their prices, gouging consumers in a way that should have had someone sent to jail rather than make $19 million for bleeding Americans dry. It’s also much, much easier for a tech company to establish one, because they often do so nestled in their own platforms, making them a little harder to pull apart. One can easily say “if you own all the grocery stores in an area that means you can control prices of groceries,” but it’s a little harder to point at the problem with the tech industry, because said monopolies are new, and different, yet mostly come down to owning, on some level, both the customer and those selling to the customer.

Blue chip consulting firms flip this comment around. The points Ed makes are the recommendations and tactics the would-be monopolists convert to action plans. My reaction is, “Thanks, Silicon Valley. Nice contribution to society.”

Ed then gets to artificial intelligence, definitely a hot topic. He notes:

Monopolies are inherently anti-consumer and anti-innovation, and the big push toward generative AI is a blatant attempt to create another monopoly — the dominance of Large Language Models owned by Microsoft, Amazon, Google and Meta. While this might seem like a competitive marketplace, because these models all require incredibly large amounts of cloud compute and cash to both train and maintain, most companies can’t really compete at scale.

Bingo.

I noted this Ed comment about AI too:

This is the ideal situation for a monopolist — you pay them money for a service and it runs without you knowing how it does so, which in turn means that you have no way of building your own version. This master plan only falls apart when the “thing” that needs to be trained using hardware that they monopolize doesn’t actually provide the business returns that they need to justify its existence.

Ed then makes a comment which will cause some stakeholders to take a breath:

As I’ve written before, big tech has run out of hyper-growth markets to sell into, leaving them with further iterations of whatever products they’re selling you today, which is a huge problem when big tech is only really built to rest on its laurels. Apple, Microsoft and Amazon have at least been smart enough to not totally destroy their own products, but Meta and Google have done the opposite, using every opportunity to squeeze as much revenue out of every corner, making escape difficult for the customer and impossible for those selling to them. And without something new — and no, generative AI is not the answer — they really don’t have a way to keep growing, and in the case of Meta and Google, may not have a way to sustain their companies past the next decade. These companies are not built to compete because they don’t have to, and if they’re ever faced with a force that requires them to do good stuff that people like or win a customer’s love, I’m not sure they even know what that looks like.

Viewed from a Googley point of view, these high-technology outfits are doing what is logical. That’s why the Google advertisement for itself troubled people. The person writing for his child willfully used smart software. The fellow embodied a logical solution to the knotty problem of feelings and appropriate behavior.

Ed suggests several remedies for the Google issue. These make sense, but the next step for Google will be an appeal. Appeals take time. US government officials change. The appetite to fight legions of well resourced lawyers can wane. The decision reveals some interesting insights into the behavior of Google. The problem now is how to alter that behavior without causing significant market disruption. Google is really big, and changes can have difficult-to-predict consequences.

The essay concludes:

I personally cannot leave Google Docs or Gmail without a significant upheaval to my workflow — is a way that they reinforce their monopolies. So start deleting sh*t. Do it now. Think deeply about what it is you really need — be it the accounts you have and the services you need — and take action. They’re not scared of you, and they should be.

Interesting stance.

Several observations:

- Appeals take time. Time favors outfits that lose antitrust cases.

- Google can adapt and morph. The size and scale equip the Google in ways not fathomable to those outside Google.

- Google is not Standard Oil. Google is like AT&T. That breakup resulted in reconsolidation into two big Baby Bells and one outside player. So a shattered Google may just reassemble itself. The fancy word for this is emergent.

Ed hits some good points. My view is that the Google fumbles forward putting the Sundar & Prabhakar Comedy Act in every city the digital wagon can reach.

Stephen E Arnold, August 16, 2024

Pragmatic AI: Individualized Monitoring

August 15, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

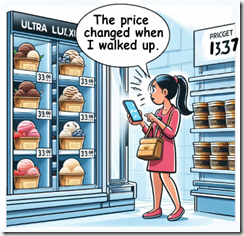

In June 2024 at the TechnoSecurity & Digital Forensics conference, one of the cyber investigators asked me, “What are some practical uses of AI in law enforcement?” I told the person that I would send him a summary of my earlier lecture called “AI for LE.” He said, “Thanks, but what should I watch to see some AI in action?” I told him to pay attention to the Kroger pricing methods. I had heard that Kroger was experimenting with altering prices based on certain signals. For example, if a Kroger is located in a certain zip code, then the Kroger stores in that specific area would use dynamic pricing. The idea is similar to Coca-Cola’s tests of a vending machine that charged more when the weather was hot. In the Kroger example, a hot day would trigger a change in the price of a frozen dessert. He replied, “Kroger?” I said, “Yes, Kroger is experimenting with AI in order to detect specific behaviors and modify prices to reflect those signals.” What Kroger is doing will be coming to law enforcement and intelligence operations. Smart software monitors the behavior of a prisoner, for example, and automatically notifies an investigator when a certain signal is received. I recall mentioning that smart software, signals, and behavior change or direct action will become key components of a cyber investigator’s tool kit. He said, laughing, “Kroger. Interesting.”

Thanks, MSFT Copilot. Good enough.

I learned that Kroger’s surveillance concept is no longer just a rumor discussed at a neighborhood get-together. “‘Corporate Greed Is Out of Control’: Warren Slams Kroger’s AI Pricing Scheme” reveals that elected officials and probably some consumer protection officials may be aware of the company’s plans for smart software. The write up reports:

Warren (D-Mass.) was joined by Sen. Bob Casey (D-Pa.) on Wednesday in writing a letter to the chairman and CEO of the Kroger Company, Rodney McMullen, raising concerns about how the company’s collaboration with AI company IntelligenceNode could result in both privacy violations and worsened inequality as customers are forced to pay more based on personal data Kroger gathers about them “to determine how much price hiking [they] can tolerate.” As the senators wrote, the chain first introduced dynamic pricing in 2018 and expanded to 500 of its nearly 3,000 stores last year. The company has partnered with Microsoft to develop an Electronic Shelving Label (ESL) system known as Enhanced Display for Grocery Environment (EDGE), using a digital tag to display prices in stores so that employees can change prices throughout the day with the click of a button.

My view is that AI orchestration will allow additional features and functions. Some of these may be appropriate for use in policeware and intelware systems. Kroger makes an effort to get individuals to sign up for a discount card. Also, Kroger wants users to install the Kroger app. The idea is that discounts or other incentives may be “awarded” to the customer who takes advantage of the services.

However, I am speculating that AI orchestration will allow Kroger to implement a chain of actions like this:

- Customer with a mobile phone enters the store

- The store “acknowledges” the customer

- The customer’s spending profile is accessed

- The customer is “known” to purchase upscale branded ice cream

- The price for that item automatically changes as the customer approaches the display

- The system records the item bar code and the customer ID number

- At checkout, the customer is charged the higher price.
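The chain above is my speculation, not a description of any system Kroger has disclosed. To show how few moving parts such a chain needs, here is a minimal Python sketch. Everything in it is hypothetical: the device-to-loyalty-account registry, the purchase-count threshold of 3, the 10 percent markup, and the function names are assumptions for illustration, not anything drawn from Kroger, IntelligenceNode, or the EDGE shelf-label system.

```python
from dataclasses import dataclass, field


@dataclass
class Shopper:
    customer_id: str
    # Hypothetical spending profile: product category -> past purchase count.
    purchase_history: dict = field(default_factory=dict)


@dataclass
class ShelfItem:
    barcode: str
    category: str
    base_price: float


# Steps 1-2: the store "acknowledges" a phone it can link to a loyalty account.
# The device IDs and this registry are invented for illustration.
LOYALTY_REGISTRY = {
    "phone-123": Shopper("cust-42", {"premium_ice_cream": 5}),
}


def acknowledge(device_id):
    """Return the known shopper for a device, or None for an anonymous visitor."""
    return LOYALTY_REGISTRY.get(device_id)


def personalized_price(shopper, item, threshold=3, markup=0.10):
    """Steps 3-5: raise the shelf-label price if the profile shows the shopper
    habitually buys this category. Threshold and markup are assumptions."""
    if shopper and shopper.purchase_history.get(item.category, 0) >= threshold:
        return round(item.base_price * (1 + markup), 2)
    return item.base_price


def checkout(shopper, items, ledger):
    """Steps 6-7: record (barcode, customer ID, price) and charge the displayed price."""
    total = 0.0
    customer_id = shopper.customer_id if shopper else "anonymous"
    for item in items:
        price = personalized_price(shopper, item)
        ledger.append((item.barcode, customer_id, price))
        total += price
    return round(total, 2)


# Usage: the "known" ice cream buyer pays more than an anonymous shopper.
ice_cream = ShelfItem("0001", "premium_ice_cream", 6.00)
ledger = []
print(checkout(acknowledge("phone-123"), [ice_cream], ledger))  # 6.6
print(checkout(acknowledge("phone-999"), [ice_cream], ledger))  # 6.0
```

The point of the sketch is that the "smart" part is a profile lookup plus a rule; the same pattern of identify, look up, act, and log is what a cyber investigator's tool would use to flag a person of interest instead of repricing ice cream.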

Is this type of AI orchestration possible? Yes. Is it practical for a grocery store to deploy? Yes, because Kroger uses third parties to provide its systems and technical capabilities for many applications.

How does this apply to law enforcement? Kroger’s use of individualized tracking may provide some ideas for cyber investigators.

As large firms use their resources to deploy state-of-the-art technology to boost sales, know the customer, and adjust prices at the individual shopper level, the benefits of smart software become increasingly visible. Some specialized software systems lag behind commercial systems. Among the reasons are budget constraints and the often complicated procurement processes.

But what happens at the grocery store is going to become a standard function in many specialized software systems. These will range from security monitoring systems which can follow a person of interest in a specific area to automatically updating a person of interest’s location on a geographic information module.

If you are interested in watching smart software and individualized “smart” actions, just pay attention at Kroger or a similar retail outfit.

Stephen E Arnold, August 15, 2024

Meta Shovels Assurances. Will Australia Like the Output?

August 14, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I came across a news story which I found quite interesting. Even though I am a dinobaby, I am a father and a grandfather. I used to take pictures when my son and daughter were young. I used Kodak film, printed the pictures my wife wanted, and tossed the rest. Pretty dull. Some parents have sportier ideas. I want to point out that some ideas do not appeal to me. Others make me uncomfortable.

How do you think I reacted to the information in “Parents Still Selling Revealing Content of Their Kids on Instagram, Despite Meta’s Promises to Ban the Practice”? The main idea in the write up seems to be:

The ABC [Australian Broadcasting Corporation] has found almost 50 Instagram accounts that allow subscribers to pay for exclusive content of children or teenagers, some of which is sexualized. Meta had vowed to clamp down on the practice but said it was taking time to "fully roll out" its new policy. Advocates say the accounts represent an "extreme" form of child exploitation.

If I understand the title of the article and this series of statements, I take away these messages:

- Instagram contains “revealing content” of young people

- Meta, the Zuck’s new name for the old-timey Facebook, WhatsApp, and Instagram services, said it would take steps to curtail posting of this type of content. The statement, the ABC seems to imply, was similar to other Silicon Valley-inspired assertions: a combination of self-serving assurances followed by generating as much revenue as possible, because some companies face zero consequences.

- Meta seems to create a greenhouse for what the ABC calls “child exploitation.”

I hope I captured the intent of the news story’s main idea.

I noted this passage:

Sarah Adams, an online child safety advocate who goes by the name Mom.Uncharted, said it was clear Meta had lost control of child accounts.

How did Meta respond to the ABC inquiry? Check this:

"The new policy is in effect as of early April and we are taking action on adult-run accounts that primarily post content focused on children whenever we become aware of them," a Meta spokesperson said in a statement. "As with any new policy, enforcement can take time to fully roll out."

That seems plausible. How long has Meta hosted questionable content? I remember 20 years ago. “We are taking action” is a wonderfully proactive statement. Plus, combatting child exploitation is one of those tasks where “enforcement can take time.”

Got it.

Stephen E Arnold, August 14, 2024

Apple Does Not Just Take Money from Google

August 12, 2024

In an apparent snub to Nvidia, reports MacRumors, “Apple Used Google Tensor Chips to Develop Apple Intelligence.” The decision to go with Google’s TPUv5p chips over Nvidia’s hardware is surprising, since Nvidia has been dominating the AI processor market. (Though some suggest that will soon change.) Citing Apple’s paper on the subject, writer Hartley Charlton reveals:

“The paper reveals that Apple utilized 2,048 of Google’s TPUv5p chips to build AI models and 8,192 TPUv4 processors for server AI models. The research paper does not mention Nvidia explicitly, but the absence of any reference to Nvidia’s hardware in the description of Apple’s AI infrastructure is telling and this omission suggests a deliberate choice to favor Google’s technology. The decision is noteworthy given Nvidia’s dominance in the AI processor market and since Apple very rarely discloses its hardware choices for development purposes. Nvidia’s GPUs are highly sought after for AI applications due to their performance and efficiency. Unlike Nvidia, which sells its chips and systems as standalone products, Google provides access to its TPUs through cloud services. Customers using Google’s TPUs have to develop their software within Google’s ecosystem, which offers integrated tools and services to streamline the development and deployment of AI models. In the paper, Apple’s engineers explain that the TPUs allowed them to train large, sophisticated AI models efficiently. They describe how Google’s TPUs are organized into large clusters, enabling the processing power necessary for training Apple’s AI models.”

Over the next two years, Apple says, it plans to spend $5 billion on AI server enhancements. The paper gives a nod to ethics, promising no private user data is used to train its AI models. Instead, it uses publicly available web data and licensed content, curated to protect user privacy. That is good. Now what about the astronomical power and water consumption? Apple has no reassuring words for us there. Is it because Apple is paying Google, not just taking money from Google?

Cynthia Murrell, August 12, 2024

Curating Content: Not Really and Maybe Not at All

August 5, 2024

This essay is the work of a dumb humanoid. No smart software required.

Most people assume that if software is downloaded from an official “store” or from a “trusted” online Web search system, malware is not part of the deal. Vendors bandy about the word “trust” at the same time wizards in the back office are filtering, selecting, and setting up mechanisms to sell advertising to anyone who has money.

Advertising sales professionals are the epitome of professionalism. Google the word “trust”. You will find many references to these skilled individuals. Thanks, MSFT Copilot. Good enough.

Are these statements accurate? Because I love the high-tech outfits, my personal view is that online users today have these characteristics:

- Deep knowledge about nefarious methods

- The time to verify each content object is not malware

- A keen interest in sustaining the perception that the Internet is a clean, well-lit place. (Sorry, Mr. Hemingway, “lighted” will get you a points deduction in some grammarians’ fantasy world.)

I read “Google Ads Spread Mac Malware Disguised As Popular Browser.” My world is shattered. Is an alleged monopoly fostering malware? Is the dominant force in online advertising unable to verify that its advertisers are dealing from the top of the digital card deck? Is Google incapable of behaving in a responsible manner? I have to sit down. What a shock to my dinobaby system.

The write up alleges:

Google Ads are mostly harmless, but if you see one promoting a particular web browser, avoid clicking. Security researchers have discovered new malware for Mac devices that steals passwords, cryptocurrency wallets and other sensitive data. It masquerades as Arc, a new browser that recently gained popularity due to its unconventional user experience.

My assumption is that Google’s AI and human monitors would be paying close attention to a browser that seeks to challenge Google’s Chrome browser. Could I be incorrect? Obviously, if the write up is accurate, I am. Be still my heart.

The write up continues:

The Mac malware posing as a Google ad is called Poseidon, according to researchers at Malwarebytes. When clicking the “more information” option next to the ad, it shows it was purchased by an entity called Coles & Co, an advertiser identity Google claims to have verified. Google verifies every entity that wants to advertise on its platform. In Google’s own words, this process aims “to provide a safe and trustworthy ad ecosystem for users and to comply with emerging regulations.” However, there seems to be some lapse in the verification process if advertisers can openly distribute malware to users. Though it is Google’s job to do everything it can to block bad ads, sometimes bad actors can temporarily evade their detection.

But the malware apparently exists and the ads are the vector. What’s the fix? Google is already doing its typical A Number One Quantumly Supreme Job. Well, the fix is you, the user.

You are sufficiently skilled to detect, understand, and avoid such online trickery, right?

Stephen E Arnold, August 5, 2024