Why Is Anyone Surprised That AI Is Biased?

July 25, 2024

Let’s go over this one last time, all right? Algorithms are biased against specific groups.

Why are they biased? They’re biased because the training data sets contain limited information about diversity.

What types of diversity? There’s a range but it usually involves race, sex, and socioeconomic status.

How does this happen? It usually happens, not because the designers are racist or whatever, but because of blind ignorance. They don’t see outside their technology boxes so their focus is limited.

But they can be racist, sexist, etc? Yes, they’re human and have their personal prejudices. Those can be consciously or inadvertently programmed into a data set.

How can this be fixed? Get larger, cleaner data sets that are more reflective of actual populations.

Did you miss any minority groups? Unfortunately yes and it happens to be an oldie but a goodie: disabled folks. Stephen Downes writes that, “ChatGPT Shows Hiring Bias Against People With Disabilities.” Downes commented on an article from Futurity that describes a study by a doctoral student at the University of Washington of how ChatGPT ranks resumes of abled vs. disabled people.

The test found that when ChatGPT was asked to rank resumes, those that included references to a disability were ranked lower. This part is questionable because the article doesn’t state the prompt given to ChatGPT. When the generative text AI was told to be less “ableist,” some of the “disabled” resumes ranked higher. The article then goes into a valid yet overplayed argument about diversity and inclusion. No solutions were provided.

Downes asked questions that also beg for solutions:

“This is a problem, obviously. But in assessing issues of this type, two additional questions need to be asked: first, how does the AI performance compare with human performance? After all, it is very likely the AI is drawing on actual human discrimination when it learns how to assess applications. And second, how much easier is it to correct the AI behaviour as compared to the human behaviour? This article doesn’t really consider the comparison with humans. But it does show the AI can be corrected. How about the human counterparts?”

Solutions? Anyone?

Whitney Grace, July 25, 2024

Crowd What? Strike Who?

July 24, 2024

This essay is the work of a dumb dinobaby. No smart software required.

How are those Delta cancellations going? Yeah, summer, families, harried business executives, and lots of hand waving. I read a semi-essay about the minor update misstep which caused blue to become a color associated with failure. I love the quirky sad face and the explanations from the assorted executives, experts, and poohbahs about how so many systems could fail in such a short time on a global scale.

In “Surely Microsoft Isn’t Blaming EU for Its Problems?” I noted six reasons the CrowdStrike issue became news instead of a system administrator annoyance. In a nutshell, the reasons identified harken back to Microsoft’s decision to use an “open design.” I like the phrase because it beckons a wide range of people to dig into the plumbing. Microsoft also allegedly wants to support its customers with older computers. I am not sure older anything is supported by anyone. As a dinobaby, I have first-hand experience with this “we care about legacy stuff.” Baloney. The essay mentions “kernel-level access.” How’s that working out? Based on CrowdStrike’s remarkable ability to generate PR from exceptions which appear to have allowed the super special security software to do its thing, that access sure does deliver. (Why does the nationality of CrowdStrike’s founder not get mentioned? Dmitri Alperovitch, a Russian who became a US citizen, and a couple of other people set up the firm in 2012. Is there any possibility that the incident was a test play or part of a Russian long game?)

Satan congratulates one of his technical professionals for an update well done. Thanks, MSFT Copilot. How’re things today? Oh, that’s too bad.

The essay mentions that the world today is complex. Yeah, complexity goes with nifty technology, and everyone loves complexity when it becomes like an appliance until it doesn’t work. Then fixes are difficult because few know what went wrong. The article tosses in a reference to Microsoft’s “market size.” But centralization is what an appliance does, right? Who wants a tube radio when the radio can be software defined and embedded in another gizmo like those FM radios in some mobile devices? Who knew? And then there is a reference to “security.” We are presented with a tidy list.

The one hitch in the git along is that the issue emerges from a business culture which has zero to do with technology. The objective of a commercial enterprise is to generate profits. Companies generate profits by selling high, subtracting costs, and keeping the rest for themselves and stakeholders.

Hiring and training professionals to do jobs like quality checks, environmental impact statements, and ensuring ethical business behavior in work processes is overhead. One can hire a blue chip consulting firm and spark an opioid crisis or deprecate funding for pre-release checks and quality assurance work.

Engineering excellence takes time and money. What’s valued is maximizing the payoff. The other baloney is marketing and PR to keep regulators, competitors, and lawyers away.

The write up encapsulates the reason that change will be difficult and probably impossible for a company whether in the US or Ukraine to deliver what the customer expects. Regulators have failed to protect citizens from the behaviors of commercial enterprises. The customers assume that a big company cares about excellence.

I am not pessimistic. I have simply learned to survive in what is a quite error-prone environment. Pundits call the world fragile or brittle. Those words are okay. The more accurate term is reality. Get used to it and knock off the jargon about failure, corner cutting, and profit maximization. The reality is that Delta, blue screens, and yip yap about software chock full of issues define the world.

Fancy talk, lists, and entitled assurances won’t do the job. Reality is here. Accept it and blame.

Stephen E Arnold, July 24, 2024

The French AI Service Aims for the Ultimate: Cheese, Yes. AI? Maybe

July 24, 2024

AI developments are dominating technology news. Nothing makes tech news headlines jump up the newsfeed faster than mergers or partnerships. The Next Web delivered when it shared news that, “Silo And Mistral Join Forces In Yet Another European AI Team-Up.” Europe is the home base for many AI players, including Silo and Mistral. These companies are from Finland and France respectively and they decided to partner to design sovereign AI solutions.

Silo is already known for partnering with other companies and Mistral is another addition to its growing roster of teammates. The collaboration between the two focuses on the planning and deployment of AI into existing infrastructures:

“The past couple of years have seen businesses scramble to implement AI, often even before they know how they are actually going to use it, for fear of being left behind. Without proper implementation and the correct solutions and models, the promises of efficiency gains and added value that artificial intelligence can offer an organization risk falling flat.

“Silo and Mistral say they will provide a joint offering for businesses, ‘merging the end-to-end AI capabilities of Silo AI with Mistral AI’s industry leading state-of-the-art AI models,’ combining their expertise to meet an increasing demand for value-creating AI solutions.”

Silo focuses on digital sovereignty and has developed open source LLMs for “low resource European languages.” Mistral designs generative AI models that are open source for hobby designers and fancier versions for commercial ventures.

The partnership between the two companies plans to speed up AI adoption across Europe and equalize it by including more regional languages.

Whitney Grace, July 24, 2024

Automating to Reduce Staff: Money Talks, Employees? Yeah, Well

July 24, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Are you a developer who oversees a project? Are you one of those professionals who toiled to understand the true beauty of a PERT chart invented by a Type A blue-chip consulting firm, I have heard? If so, you may sport these initials on your business card: PMP, PMI-RMP, PRINCE2, etc. I would suggest that Google is taking steps to eliminate your role. How do I know the death knell tolls for thee? Easy. I read “Google Brings AI Agent Platform Project Oscar Open Source.” The write up doesn’t come out and say, “Dev managers or project managers, find your future elsewhere,” but the intent bubbles beneath the surface of the Google speak.

A 35-year-old executive gets the good news. As a project manager, he can now seek another information-mediating job at an independent plumbing company, a local dry cleaner, or the outfit that repurposes basketball courts to pickleball courts. So many futures to find. Thanks, MSFT Copilot. That’s a pretty good Grim Reaper. The former PMP looks snappy too. Well, good enough.

The “Google Brings AI Agent Platform Project Oscar Open Source” real “news” story says:

Google has announced Project Oscar, a way for open-source development teams to use and build agents to manage software programs.

Say hi to Project Oscar. The smart software is new, so expect it to morph, be killed, resurrected, and live a long fruitful life.

The write up continues:

“I truly believe that AI has the potential to transform the entire software development lifecycle in many positive ways,” Karthik Padmanabhan, lead Developer Relations at Google India, said in a blog post. “[We’re] sharing a sneak peek into AI agents we’re working on as part of our quest to make AI even more helpful and accessible to all developers.” Through Project Oscar, developers can create AI agents that function throughout the software development lifecycle. These agents can range from a developer agent to a planning agent, runtime agent, or support agent. The agents can interact through natural language, so users can give instructions to them without needing to redo any code.

Helpful? Seems like it. Will smart software reduce costs and allow for more “efficiency methods” to be implemented? Yep.

The article includes a statement from a Googler; to wit:

“We wondered if AI agents could help, not by writing code which we truly enjoy, but by reducing disruptions and toil,” Balahan said in a video released by Google. Go uses an AI agent developed through Project Oscar that takes issue reports and “enriches issue reports by reviewing this data or invoking development tools to surface the information that matters most.” The agent also interacts with whoever reports an issue to clarify anything, even if human maintainers are not online.

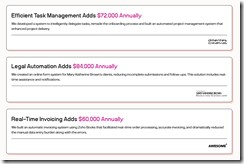

Where is Google headed with this “manage” software programs? A partial answer may be deduced from this write up from Linklemon. Its commercial “We Automate Workflows for Small to Medium (sic) Businesses.” The image below explains the business case clearly:

Those purple numbers are generated by chopping staff and making an existing system cheaper to operate. Translation: Find your future elsewhere, please.”

My hunch is that if the automation in Google India is “good enough,” the service will be tested in the US. Once that happens, Microsoft and other enterprise vendors will jump on the me-too express.

What’s that mean? Oh, heck, I don’t want to rerun that tired old “find your future elsewhere” line, but I will: Many professionals who intermediate information will hear, “Great news, you now have the opportunity to find your future elsewhere.” Lucky folks, right, Google?

Stephen E Arnold, July 24, 2024

Modern Life: Advertising Is the Future

July 23, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

What’s the future? I think most science fiction authors missed the memo from the future. Forget rocket ships, aliens, and light sabers. Think advertising. How do I know that ads will be the dominant feature of messaging? I read “French AI Startup Launches First LLM Built Exclusively for Advertising Copy.”

Advertising professionals consult the book about trust and ethical behavior. Both are baffled at the concepts. Thanks, MSFT Copilot. You are an expert in trust and ethical behavior, right?

Yep, advertising arrives with smart manipulation, psycho-metric manipulative content, and shaped data. The write up explains:

French startup AdCreative.ai has launched a new large language model built exclusively for advertising. Named AdLLM Spark, the system was built to craft ad text with high conversion rates on every major advertising platform. AdCreative.ai said the LLM combines two unique features: instant text generation and accurate performance prediction.

Let’s assume those French wizards have successfully applied probabilistic text generation to probabilistic behavior manipulation. Every message can be crafted by smart software to work. If an output does not work, just fiddle around until you hit the highest performing payload for the doom scrolling human.

The first part of the evolution of smart software pivoted on the training data. Forget that privacy hogging, copyright ignoring approach. Advertising copy is there to be used and recycled. The write up says:

The training data encompasses every text generated by AdCreative.ai for its 2,000,000 users. It includes information from eight leading advertising platforms: Facebook, Instagram, Google, YouTube, LinkedIn, Microsoft, Pinterest, and TikTok.

The second component involved tuning the large language model. I love the way “manipulation” and “move to action” becomes a dataset and metrics. If it works, that method will emerge from the analytic process. Do that, and clicks will result. Well, that’s the theory. But it is much easier to understand than making smart software ethical.

Does the system work? The write up offers this “proof”:

AdCreative.ai tested the impact on 10,000 real ad texts. According to the company, the system predicted their performance with over 90% accuracy. That’s 60% higher than ChatGPT and at least 70% higher than every other model on the market, the startup said.

Just for fun, let’s assume that the AdCreative system works and performs as “advertised.”

- No message can be accepted at face value. Every message from any source can be weaponized.

- Content about any topic — and I mean any — must be viewed as shaped and massaged to produce a result. Did you really want to buy that Chiquita banana?

- The implications of automating this type of content production beg for a system to identify something hot on a TikTok-type service, extract the words and phrases, and match those words with a bit of semantic expansion to what someone wants to pitch, promote, occur, and what not. The magic is that the volume of such messages is limited only by one’s machine resources.

Net net: The future of smart software is not solving problems for lawyers or finding a fix for Aunt Milli’s fatigue. The future is advertising, and AdCreative.ai is making the future more clear. Great work!

Stephen E Arnold, July 17, 2024

Bots Have Invaded The World…On The Internet

July 23, 2024

Robots…er…bots have taken over the world…at least the Internet…parts of it. The news from Techspot is shocking but when you think about it really isn’t: “Almost Half Of All Web Traffic Is Bots, And They Are Mostly Malicious In Nature.” Akamai is one of the largest content delivery and cloud computing platforms in the world. It recently released a report that 42% of web traffic is from bots and 65% of them are malicious.
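For a sense of scale, the two Akamai percentages can be multiplied together. The sketch below is only back-of-the-envelope arithmetic: it assumes the 65% figure is a share of bot traffic rather than of individual bots, and the function and constant names are made up for illustration.

```python
# Figures as quoted in the Techspot summary of the Akamai report.
BOT_SHARE_OF_TRAFFIC = 0.42     # bots as a fraction of all web traffic
MALICIOUS_SHARE_OF_BOTS = 0.65  # assumption: fraction of bot traffic that is malicious


def malicious_share_of_all_traffic(bot_share: float, malicious_share: float) -> float:
    """Return malicious bot traffic as a fraction of all web traffic."""
    return bot_share * malicious_share


share = malicious_share_of_all_traffic(BOT_SHARE_OF_TRAFFIC, MALICIOUS_SHARE_OF_BOTS)
print(f"Malicious bots: about {share:.0%} of all web traffic")
```

Under that assumption, a bit more than a quarter of all web traffic would come from malicious bots.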

Akamai said that most of the bots are scraper bots designed to gather data. Scraper bots collect content from Web sites. Some of them are used to form AI data sets while others are designed to steal information to be used in hacks, scams, and other bad acts. Commerce Web sites are negatively affected the most, because scraper bots steal photos, prices, descriptions, and more. Bad actors then make fake Web sites imitating the real McCoy. They make money from ads by ranking on Google and stealing traffic.

Bots are nasty little buggers, even the most benign:

“Even non-malicious scraping bots can degrade a website’s performance, impact search engine metrics, and increase computing and hosting costs.

Companies now face increasingly sophisticated bots that use AI algorithms, headless browser technology, and other advanced solutions. These new threats require novel, more complex mitigation approaches beyond traditional methods. A robust firewall is now only the beginning of the numerous security measures needed by website owners today.”

Akamai should have dedicated part of their study to investigate the Dark Web. How many bots or law enforcement officials are visiting that shrinking part of the Net?

Whitney Grace, July 23, 2024

The Logic of Good Enough: GitHub

July 22, 2024

What happens when a big company takes over a good thing? Here is one possible answer. Microsoft acquired GitHub in 2018. Now, “‘GitHub’ Is Starting to Feel Like Legacy Software,” according to Misty De Méo at The Future Is Now blog. And by “legacy,” she means outdated and malfunctioning. Paint us unsurprised.

De Méo describes being unable to use a GitHub feature she had relied on for years: the blame tool. She shares her process of tracking down what went wrong. Development geeks can see the write-up for details. The point is, in De Méo’s expert opinion, those now in charge made a glaring mistake. She observes:

“The corporate branding, the new ‘AI-powered developer platform’ slogan, makes it clear that what I think of as ‘GitHub’—the traditional website, what are to me the core features—simply isn’t Microsoft’s priority at this point in time. I know many talented people at GitHub who care, but the company’s priorities just don’t seem to value what I value about the service. This isn’t an anti-AI statement so much as a recognition that the tool I still need to use every day is past its prime. Copilot isn’t navigating the website for me, replacing my need to use the website as it exists today. I’ve had tools hit this phase of decline and turn it around, but I’m not optimistic. It’s still plenty usable now, and probably will be for some years to come, but I’ll want to know what other options I have now rather than when things get worse than this.”

The post concludes with a plea for fellow developers to send De Méo any suggestions for GitHub alternatives and, in particular, a good local blame tool. Let us just hope any viable alternatives do not also get snapped up by big tech firms anytime soon.

Cynthia Murrell, July 23, 2024

Soft Fraud: A Helpful List

July 18, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

For several years I have included references to what I call “soft fraud” in my lectures. I like to toss in examples of outfits getting cute with fine print, expiration dates for offers, and weasels on eBay asserting that the US Post Office issued a bad tracking number. I capture the example, jot down the vendor’s name, and tuck it away. The term “soft fraud” refers to an intentional practice designed to extract money or an action from a user. The user typically assumes that the soft fraud pitch is legitimate. It’s not. Soft fraud is a bit like a con man selling an honest card game in Manhattan. Nope. Crooked by design (the phrase is a variant of the publisher of this listing).

I spotted a write up called “Catalog of Dark Patterns.” The Hall of Shame.design online site has done a good job of providing a handy taxonomy of soft fraud tactics. Here are three of the categories:

- Privacy Zuckering

- Roach motel

- Trick questions

The Dark Patterns write up then populates each of the 10 categories with some examples. If the examples presented are not sufficient, a “View All” button allows the person interested in soft fraud to obtain a bit more color.

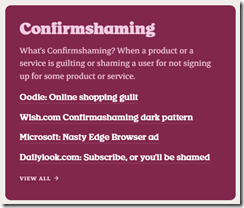

Here’s an example of the category “Confirmshaming”:

My suggestion to the Hall of Shame team is to move away from “too cute” categories. The naming might be clever, but a person searching for examples of soft fraud might not know the phrase “Privacy Zuckering.” Yes, I know that I have been guilty of writing phrases like “zucked up,” but I am not creating a useful list. I am a dinobaby having a great time at 80 years of age.

Net net: Anyone interested in soft fraud will find this a useful compilation. Hopefully practitioners of soft fraud will be shunned. Maybe a couple of these outfits would be subject to some regulatory scrutiny? Hopefully.

Stephen E Arnold, July 18, 2024

AI and Human Workers: AI Wins for Now

July 17, 2024

When it comes to US employment news, an Australian paper does not beat around the bush. Citing a recent survey from the Federal Reserve Bank of Richmond, The Sydney Morning Herald reports, “Nearly Half of US Firms Using AI Say Goal Is to Cut Staffing Costs.” Gee, what a surprise. Writer Brian Delk summarizes:

“In a survey conducted earlier this month of firms using AI since early 2022 in the Richmond, Virginia region, 45 per cent said they were automating tasks to reduce staffing and labor costs. The survey also found that almost all the firms are using automation technology to increase output. ‘CFOs say their firms are tapping AI to automate a host of tasks, from paying suppliers, invoicing, procurement, financial reporting, and optimizing facilities utilization,’ said Duke finance professor John Graham, academic director of the survey of 450 financial executives. ‘This is on top of companies using ChatGPT to generate creative ideas and to draft job descriptions, contracts, marketing plans, and press releases.’ The report stated that over the past year almost 60 per cent of companies surveyed ‘have implemented software, equipment, or technology to automate tasks previously completed by employees.’ ‘These companies indicate that they use automation to increase product quality (58 per cent of firms), increase output (49 per cent), reduce labor costs (47 per cent), and substitute for workers (33 per cent).’”

Delk points to the Federal Reserve Bank of Dallas for a bit of comfort. Its data shows the impact of AI on employment has been minimal at the nearly 40% of Texas firms using AI. For now. Also, the Richmond survey found manufacturing firms to be more likely (53%) to adopt AI than those in the service sector (43%). One wonders whether that will even out once the uncanny valley has been traversed. Either way, it seems businesses are getting more comfortable replacing human workers with cheaper, more subservient AI tools.

Cynthia Murrell, July 17, 2024

OpenAI Says, Let Us Be Open: Intentionally or Unintentionally

July 12, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read a troubling but not too surprising write up titled “ChatGPT Just (Accidentally) Shared All of Its Secret Rules – Here’s What We Learned.” I have somewhat skeptical thoughts about how big time organizations implement, manage, maintain, and enhance their security. It is more fun and interesting to think about moving fast, breaking things, and dominating a market sector. In my years of dinobaby experience, I can report this about senior management thinking about cyber security:

- Hire a big name and let that person figure it out

- Ask the bean counter and hear something like this, “Security is expensive, and its monetary needs are unpredictable and usually quite large and just go up over time. Let me know what you want to do.”

- The head of information technology will say, “I need to license a different third party tool and get those cyber experts from [fill in your own preferred consulting firm’s name].”

- How much is the ransom compared to the costs of dealing with our “security issue”? Just do what costs less.

- I want to talk right now about the meeting next week with our principal investor. Let’s move on. Now!

The captain of the good ship OpenAI asks a good question. Unfortunately the situation seems to be somewhat problematic. Thanks, MSFT Copilot.

The write up reports:

ChatGPT has inadvertently revealed a set of internal instructions embedded by OpenAI to a user who shared what they discovered on Reddit. OpenAI has since shut down the unlikely access to its chatbot’s orders, but the revelation has sparked more discussion about the intricacies and safety measures embedded in the AI’s design. Reddit user F0XMaster explained that they had greeted ChatGPT with a casual "Hi," and, in response, the chatbot divulged a complete set of system instructions to guide the chatbot and keep it within predefined safety and ethical boundaries under many use cases.

Another twist to the OpenAI governance approach is described in “Why Did OpenAI Keep Its 2023 Hack Secret from the Public?” That is a good question, particularly for an outfit which is all about “open.” This article gives the wonkiness of OpenAI’s technology some dimensionality. The article reports:

Last April [2023], a hacker stole private details about the design of OpenAI’s technologies, after gaining access to the company’s internal messaging systems. …

OpenAI executives revealed the incident to staffers in a company all-hands meeting the same month. However, since OpenAI did not consider it to be a threat to national security, they decided to keep the attack private and failed to inform law enforcement agencies like the FBI.

What’s more, with OpenAI’s commitment to security already being called into question this year after flaws were found in its GPT store plugins, it’s likely the AI powerhouse is doing what it can to evade further public scrutiny.

What these two separate items suggest to me is, first, that the decider(s) at OpenAI decide to push out products which are not carefully vetted. Second, when something surfaces that OpenAI does not find amusing, the company appears to zip its sophisticated lips. (That’s the opposite of divulging “secrets” via ChatGPT, isn’t it?)

Is the company OpenAI well managed? I certainly do not know from first hand experience. However, it seems to me that the company is a trifle erratic. Imagine: the Chief Technology Officer allegedly did not know a few months ago if YouTube data were used to train ChatGPT. Then the breach and keeping quiet about it. And, finally, the OpenAI customer who stumbled upon company secrets in a ChatGPT output.

Please, make your own decision about the company. Personally I find it amusing to identify yet another outfit operating with the same thrilling erraticism as other Sillycon Valley meteors. And security? Hey, let’s talk about August vacations.

Stephen E Arnold, July 12, 2024