Telegram Has a Plumber and a Pinger But Few Know

January 12, 2026

While reviewing research notes, the author of the “Telegram Labyrinth” spotted an interesting connection between Telegram and a firm with links to the Kremlin. A now-deleted Reuters report alleged that Telegram utilizes infrastructure linked to the FSB. The provider is Global Network Management (GNM), owned by Vladimir Vedeneev, a former Russian Space Force member with a Russian security clearance. Vedeneev’s relationship with Pavel Durov dates back to the VKontakte era, and he reportedly provided the networking foundation for Telegram in 2013.

Vedeneev maintains access to Telegram’s servers and possessed signatory authority as both CEO and CFO for Telegram. He also controls Electrotelecom, a firm servicing Russian security agencies. While Durov promises user privacy, Vedeneev’s firms provides a point of access. If exercised, Russia’s government agencies could legally harvest metadata via deep packet inspection. Registered in Antigua and Barbuda, the legal set up of GNM provides a possible work-around for EU and US sanctions on some Telegram-centric activities. GNM operates with opaque points of presence globally, raising questions about its partnerships with Google, Cloudflare, and others.

Stephen E Arnold hypothesizes that Telegram and GNM are tightly coupled, with Durov championing privacy while his partner facilitates state surveillance access. Political backing likely protects this classic “man in the middle” operation possible. If you want to read the complete article in Telegram Notes, click this link.

Kent Maxwell, January 12, 2026

OpenCode: A Little Helper for Good Guys and the Obverse

January 7, 2026

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I gave a couple of talks late in 2025 to cyber investigators in one of those square, fly-over states. In those talks, I showed code examples. Some were crafted by the people on my team and a complete Telegram app was coded by Anthropic Claude. Yeah, the smart software needed some help. Telegram bots are not something lots of Claude users whip up. But the system worked.

Here I am reading the OpenCode “privacy” document. I look pretty good when Venice.ai converts me from an old dinobaby to a young dinobaby.

One of my team called my attention to OpenCode. The idea is to make it easier than ever for a good actor or a not-so-good actor to create scripts, bots, and functional applications. OpenCode is an example of what I call “meta ai”; that is, the OpenCode service is a wrapper that allows a lot of annoying large language model operations, library chasing, and just finding stuff to take place under one roof. If you are a more hip cat than I, you would probably say that OpenCode is a milestone on the way to a point and click dashboard to make writing goodware and malware easier and more reliable than previously possible. I will let you ponder the implications of this statement.

According to the organization:

OpenCode is an open source agent that helps you write and run code with any AI model. It’s available as a terminal-based interface, desktop app, or IDE extension.

The sounds as if an AI contributed to the sentences. But that’s to be expected. The organization is OpenCode.ai.

The organization says:

OpenCode comes with a set of free models that you can use without creating an account. Aside from these, you can use any of the popular coding models by creating a Zen account. While we encourage users to use Zen*, OpenCode also works with all popular providers such as OpenAI, Anthropic, xAI etc. You can even connect your local models. [*Zen gives you access to a handpicked set of AI models that OpenCode has tested and benchmarked specifically for coding agents. No need to worry about inconsistent performance and quality across providers, use validated models that work.]

The system is open source. As of January 7, 2026, it is free to use. You will have to cough up money if you want to use OpenCode with the for fee smart software.

The OpenCode Web site makes a big deal about privacy. You can find about 10,000 words explaining what the developers of the system bundle in their “privacy” write up. It is a good idea to read the statement. It includes some interesting features; for example:

- Accepting the privacy agreement allows home buyers to be data recipients

- Fuzzy and possibly contradictory statements about user data sales

- Continued use of the service means you accept terms which can be changed.

I won’t speculate on how a useful service needs a long and somewhat challenging “privacy” statement. But this is 2026. I still can’t figure out why home buyers are involved, but I am a clueless dinobaby. Remember there are good actors and the other type too.

Stephen E Arnold, January 7, 2026

Mid Tier Consulting Firm Labels AI As a Chaos Agent.

December 5, 2025

![green-dino_thumb_thumb[3] green-dino_thumb_thumb[3]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb3_thumb-1.gif) Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

A mid tier consulting firm (Forrester) calls smart software a chaos agent. Is the company telling BAIT (big AI tech) firms not to hire them for consulting projects? I am a dinobaby. When I worked at a once big time blue chip outfit, labeling something that is easy to sell a problem was not a standard practice. But what do I know? I am a dinobaby.

The write up in the content marketing-type publication is not exactly a sales pitch. Could it be a new type of article? Perhaps it is an example of contrarianism and a desire to make sure people know that smart software is an expensive boondoggle? I noted a couple of interesting statements in “Forrester: Gen AI Is a Chaos Agent, Models Are Wrong 60% of the Time.”

Sixty percent is, even with my failing math skills, is more than half of something. I think the idea is that smart software is stupid, and it gets an F for failure. Let’s look at a couple of statements from the write up:

Forrester says, gen AI has become that predator in the hands of attackers: The one that never tires or sleeps and executes at scale. “In Jaws, the shark acts as the chaos agent,” Forrester principal analyst Allie Mellen told attendees at the IT consultancy firm’s 2025 Security and Risk Summit. “We have a chaos agent of our own today… And that chaos agent is generative AI.”

This is news?

How about this statement?

Of the many studies Mellen cited in her keynote, one of the most damning is based on research conducted by the Tow Center for Digital Journalism at Columbia University, which analyzed eight different AI models, including ChatGPT and Gemini. The researchers found that overall, models were wrong 60% of the time; their combined performance led to more failed queries than accurate ones.

I think it is fair to conclude that Forrester is not thrilled with smart software. I don’t know if the firm uses AI or just reads about AI, but its stance is crystal clear. Need proof? A Forrester wizard recycled research that says “specialized enterprise agents all showed systemic patterns of failure. Top performers completed only 24% of tasks autonomously.

Okay, that means today’s AI gets an F. How do the disappointed parents at BAIT outfits cope with Claude, Gemini, and Copilot getting sent to a specialized school? My hunch is that the leadership in BAIT firms will ignore the criticism, invest in data centers, and look for consultants not affiliated with an outfit that dumps trash at their headquarters.

Forrester trots out a solution of course. The firm does sell time and expertise. What’s interesting is that Venture Beat rolled out some truisms about smart software, including buzzwords like red team and machine speed.

Net net: AI will be wrong most of the time. AI will be used by bad actors to compromise organizations. AI gets an F; threat actors find that AI delivers a slam dunk A. Okay, which is it? I know. It’s marketing.

Stephen E Arnold, December 5, 2025

From the Ostrich Watch Desk: A Signal for Secure Messaging?

December 4, 2025

![green-dino_thumb_thumb[1] green-dino_thumb_thumb[1]](https://www.arnoldit.com/wordpress/wp-content/uploads/2025/11/green-dino_thumb_thumb1_thumb.gif) Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

A dinobaby is not supposed to fraternize with ostriches. These two toed birds can run. It may be time for those cyber security folks who say, “Signal is secure to run away from that broad statement.” Perhaps something like sort of secure if the information presented by the “please, please, please, send us money” newspaper company. (Tip to the Guardian leadership. There are ways to generate revenue some of which I shared in a meeting about a decade ago.)

Listening, verifying, and thinking critically are skills many professionals may want to apply to routine meetings about secure services. Thanks, Venice.ai. Good enough.

The write up from the “please, please, please, donate” outfit is “The FBI Spied on a Signal Group Chat of Immigration Activists, Records Reveal.” The subtitle makes clear that I have to mind the length of my quotes and emphasize that absolutely no one knows about this characteristic of super secret software developed by super quirky professionals working in the not-so-quirky US of A today.

The write up states:

The FBI spied on a private Signal group chat of immigrants’ rights activists who were organizing “courtwatch” efforts in New York City this spring, law enforcement records shared with the Guardian indicate.

How surprised is the Guardian? The article includes this statement, which I interpret as the Guardian’s way of saying, “You Yanks are violating privacy.” Judge for yourself:

Spencer Reynolds, a civil liberties advocate and former senior intelligence counsel with the DHS, said the FBI report was part of a pattern of the US government criminalizing free speech activities.

Several observations are warranted:

- To the cyber security vice president who told me, “Signal is secure.” The Guardian article might say, “Ooops.” When I explained it was not, he made a Three Stooges’ sound and cancel cultured me.

- When appropriate resources are focused on a system created by a human or a couple of humans, that system can be reverse engineered. Did you know Android users can drop content on an iPhone user’s device. What about those how-tos explaining the insecurity of certain locks on YouTube? Yeah. Security.

- Quirky and open source are not enough, and quirky will become less suitable as open source succumbs to corporatism and agentic software automates looking for tricks to gain access. Plus, those after-the-fact “fixes” are usually like putting on a raincoat after the storm. Security enhancement is like going to the closest big box store for some fast drying glue.

One final comment. I gave a lecture about secure messaging a couple of years ago for a US government outfit. One topic was a state of the art messaging service. Although a close hold, a series of patents held by entities in Virginia disclosed some of the important parts of the system and explained in a way lawyers found just wonderful a novel way to avoid Signal-type problems. The technology is in use in some parts of the US government. Better methods for securing messages exist. Open source, cheap, and easy remains popular.

Will I reveal the name of this firm, provide the patent numbers in this blog, and present my diagram showing how the system works? Nope.

PS to the leadership of the Guardian. My recollection is that your colleagues did not know how to listen when I ran down several options for making money online. Your present path may lead to some tense moments at budget review time. Am I right?

Stephen E Arnold, December 4, 2025

Microsoft Demonstrates Its Commitment to Security. Right, Copilot

December 4, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I read on November 20, 2025, an article titled “Critics Scoff after Microsoft Warns AI Feature Can Infect Machines and Pilfer Data.” My immediate reaction was, “So what’s new?” I put the write up aside. I had to run an errand, so I grabbed the print out of this Ars Technica story in case I had to wait for the shop to hunt down my dead lawn mower.

A hacking club in Moscow celebrates Microsoft’s decision to enable agents in Windows. The group seems quite happy despite sanctions, food shortages, and the special operation. Thanks, MidJourney. Good enough.

I worked through the short write up and spotted a couple of useful (if true) factoids. It may turn out that the information in this Ars Technica write up provide insight about Microsoft’s approach to security. If I am correct, threat actors, assorted money laundering outfits, and run-of-the-mill state actors will be celebrating. If I am wrong, rest easy. Cyber security firms will have no problem blocking threats — for a small fee of course.

The write up points to what the article calls a “warning” from Microsoft on November 18, 2025. The report says:

an experimental AI agent integrated into Windows can infect devices and pilfer sensitive user data

Yep, Ars Technica then puts a cherry on top with this passage:

Microsoft introduced Copilot Actions, a new set of “experimental agentic features” that, when enabled, perform “everyday tasks like organizing files, scheduling meetings, or sending emails,” and provide “an active digital collaborator that can carry out complex tasks for you to enhance efficiency and productivity.”

But don’t worry. Users can use these Copilot actions:

if you understand the security implications.

Wow, that’s great. We know from the psycho-pop best seller Thinking Fast and Slow that more than 80 percent of people cannot figure out how much a ball costs if the total is $1.10 and the ball costs one dollar more. Also, Microsoft knows that most Windows users do not disable defaults. I think that even Microsoft knows that turning on agentic magic by default is not a great idea.

Nevertheless, this means that agents combined with large language models are sparking celebrations among the less trustworthy sectors of those who ignore laws and social behavior conventions. Agentic Windows is the new theme part for online crime.

Should you worry? I will let you decipher this statement allegedly from Microsoft. Make up your own mind, please:

“As these capabilities are introduced, AI models still face functional limitations in terms of how they behave and occasionally may hallucinate and produce unexpected outputs,” Microsoft said. “Additionally, agentic AI applications introduce novel security risks, such as cross-prompt injection (XPIA), where malicious content embedded in UI elements or documents can override agent instructions, leading to unintended actions like data exfiltration or malware installation.”

I thought this sub head in the article exuded poetic craft:

Like macros on Marvel superhero crack

The article reports:

Microsoft’s warning, one critic said, amounts to little more than a CYA (short for cover your ass), a legal maneuver that attempts to shield a party from liability. “Microsoft (like the rest of the industry) has no idea how to stop prompt injection or hallucinations, which makes it fundamentally unfit for almost anything serious,” critic Reed Mideke said. “The solution? Shift liability to the user. Just like every LLM chatbot has a ‘oh by the way, if you use this for anything important be sure to verify the answers” disclaimer, never mind that you wouldn’t need the chatbot in the first place if you knew the answer.”

Several observations are warranted:

- How about that commitment to security after SolarWinds? Yeah, I bet Microsoft forgot that.

- Microsoft is doing what is necessary to avoid the issues that arise when the Board of Directors has a macho moment and asks whoever is the Top Dog at the time, “What about the money spent on data centers and AI technology? You know, How are you going to recoup those losses?

- Microsoft is not asking its users about agentic AI. Microsoft has decided that the future of Microsoft is to make AI the next big thing. Why? Microsoft is an alpha in a world filled with lesser creatures. The answer? Google.

Net net: This Ars Technica article makes crystal clear that security is not top of mind among Softies. Hey, when’s the next party?

Stephen E Arnold, December 4, 2025

AI Agents and Blockchain-Anchored Exploits:

November 20, 2025

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

In October 2025, Google published “New Group on the Block: UNC5142 Leverages EtherHiding to Distribute Malware,” which generated significant attention across cybersecurity publications, including Barracuda’s cybersecurity blog. While the EtherHiding technique was originally documented in Guard.io’s 2023 report, Google’s analysis focused specifically on its alleged deployment by a nation-state actor. The methodology itself shares similarities with earlier exploits: the 2016 CryptoHost attack also utilized malware concealed within compressed files. This layered obfuscation approach resembles matryoshka (Russian nesting dolls) and incorporates elements of steganography—the practice of hiding information within seemingly innocuous messages.Recent analyses emphasize the core technique: exploiting smart contracts, immutable blockchains, and malware delivery mechanisms. However, an important underlying theme emerges from Google’s examination of UNC5142’s methodology—the increasing role of automation. Modern malware campaigns already leverage spam modules for phishing distribution, routing obfuscation to mask server locations, and bots that harvest user credentials.

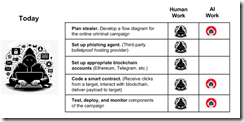

With rapid advances in agentic AI systems, the trajectory toward fully automated malware development becomes increasingly apparent. Currently, exploits still require threat actors to manually execute fundamental development tasks, including coding blockchain-enabled smart contracts that evade detection.During a recent presentation to law enforcement, attorneys, and intelligence professionals, I outlined the current manual requirements for blockchain-based exploits. Threat actors must currently complete standard programming project tasks: [a] Define operational objectives; [b] Map data flows and code architecture; [c] Establish necessary accounts, including blockchain and smart contract access; [d] Develop and test code modules; and [e] Deploy, monitor, and optimize the distributed application (dApp).

The diagrams from my lecture series on 21st-century cybercrime illustrate what I believe requires urgent attention: the timeline for when AI agents can automate these tasks. While I acknowledge my specific timeline may require refinement, the fundamental concern remains valid—this technological convergence will significantly accelerate cybercrime capabilities. I welcome feedback and constructive criticism on this analysis.

The diagram above illustrates how contemporary threat actors can leverage AI tools to automate as many as one half of the tasks required for a Vibe Blockchain Exploit (VBE). However, successful execution still demands either a highly skilled individual operator or the ability to recruit, coordinate, and manage a specialized team. Large-scale cyber operations remain resource-intensive endeavors. AI tools are increasingly accessible and often available at no cost. Not surprisingly, AI is a standard components in the threat actor’s arsenal of digital weapons. Also, recent reports indicate that threat actors are already using generative AI to accelerate vulnerability exploitation and tool development. Some operations are automating certain routine tactical activities; for example, phishing. Despite these advances, a threat actor has to get his, her, or the team’s hands under the hood of an operation.

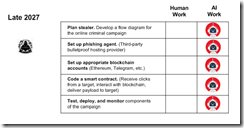

Now let’s jump forward to 2027.

The diagram illustrates two critical developments in the evolution of blockchain-based exploits. First, the threat actor’s role transforms from hands-on execution to strategic oversight and decision-making. Second, increasingly sophisticated AI agents assume responsibility for technical implementation, including the previously complex tasks of configuring smart contract access and developing evasion-resistant code. This represents a fundamental shift: the majority of operational tasks transition from human operators to autonomous software systems.

Several observations appear to be warranted:

- Trajectory and Detection Challenges. While the specific timeline remains subject to refinement, the directional trend for Vibe Blockchain Exploits (VBE) is unmistakable. Steganographic techniques embedded within blockchain operations will likely proliferate. The encryption and immutability inherent to blockchain technology significantly extend investigation timelines and complicate forensic analysis.

- Democratization of Advanced Cyber Capabilities. The widespread availability of AI tools, combined with continuous capability improvements, fundamentally alters the threat landscape by reducing deployment time, technical barriers, and operational costs. Our analysis indicates sustained growth in cybercrime incidents. Consequently, demand for better and advanced intelligence software and trained investigators will increase substantially. Contrary to sectors experiencing AI-driven workforce reduction, the AI-enabled threat environment will generate expanded employment opportunities in cybercrime investigation and digital forensics.

- Asymmetric Advantages for Threat Actors. As AI systems achieve greater sophistication, threat actors will increasingly leverage these tools to develop novel exploits and innovative attack methodologies. A critical question emerges: Why might threat actors derive greater benefit from AI capabilities than law enforcement agencies? Our assessment identifies a fundamental asymmetry. Threat actors operate with fewer behavioral constraints. While cyber investigators may access equivalent AI tools, threat actors maintain operational cadence advantages. Bureaucratic processes introduce friction, and legal frameworks often constrain rapid response and hamper innovation cycles.

Current analyses of blockchain-based exploits overlook a crucial convergences: The combination of advanced AI systems, blockchain technologies, and agile agentic operational methodologies for threat actors. These will present unprecedented challenges to regulatory authorities, intelligence agencies, and cybercrime investigators. Addressing this emerging threat landscape requires institutional adaptation and strategic investment in both technological capabilities and human expertise.

Stephen E Arnold, November 20, 2025

Cybersecurity Systems and Smart Software: The Dorito Threat

November 19, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

My doctor warned me about Doritos. “Don’t eat them!” he said. “I don’t,” I said. “Maybe Cheetos once every three or four months, but no Doritos. They suck and turn my tongue a weird but somewhat Apple-like orange.”

But Doritos are a problem for smart cybersecurity. The company with the Dorito blind spot is allegedly Omnilert. The firm codes up smart software to spot weapons that shoot bullets. Knives, camp shovels, and sharp edged credit cards probably not. But it seems Omnilert is watching for Doritos.

Thanks, MidJourney. Good enough even though you ignored the details in my prompt.

I learned about this from the article “AI Alert System That Mistook Student’s Doritos for a Gun Shuts Down Another School.” The write up says as actual factual:

An AI security platform that recently mistook a bag of Doritos for a firearm has triggered another false alarm, forcing police to sweep a Baltimore County high school.

But that’s not the first such incident. According to the article:

The incident comes only weeks after Omnilert falsely identified a 16-year-old Kenwood High School student’s Doritos bag as a gun, leading armed officers to swarm him outside the building. The company later admitted that alert was a “false positive” but insisted the system still “functioned as intended,” arguing that its role is to quickly escalate cases for human review.

At a couple of the law enforcement conferences I have attended this year, I heard about some false positives for audio centric systems. These use fancy dancing triangulation algorithms to pinpoint (so the marketing collateral goes) the location of a gun shot in an urban setting. The only problem is that the smart systems gets confused when autos backfire, a young at heart person sets off a fire cracker, or someone stomps on an unopenable bag of overpriced potato chips. Stomp right and the sound is similar to a demonstration in a Yee Yee Life YouTube video.

I learned that some folks are asking questions about smart cybersecurity systems, even smarter software, and the confusion between a weapon that can kill a person quick and a bag of Doritos that poses, according to my physician, a deadly but long term risk.

Observations:

- What happens when smart software makes such errors when diagnosing a treatment for an injured child?

- What happens when the organizations purchasing smart cyber systems realize that old time snake oil marketing is alive and well in certain situations?

- What happens when the procurement professionals at a school district just want to procure fast and trust technology?

Good questions.

Stephen E Arnold, November 19, 2025

Dark Patterns Primer

November 13, 2025

Here is a useful explainer for anyone worried about scams brought to us by a group of concerned designers and researchers. The Dark Patterns Hall of Shame arms readers with its Catalog of Dark Patterns. The resource explores certain misleading tactics we all encounter online. The group’s About page tells us:

“We are passionate about identifying dark patterns and unethical design examples on the internet. Our [Hall of Shame] collection serves as a cautionary guide for companies, providing examples of manipulative design techniques that should be avoided at all costs. These patterns are specifically designed to deceive and manipulate users into taking actions they did not intend. HallofShame.com is inspired by Deceptive.design, created by Harry Brignull, who coined the term ‘Dark Pattern’ on 28 July 2010. And as was stated by Harry on Darkpatterns.org: The purpose of this website is to spread awareness and to shame companies that use them. The world must know its ‘heroes.’”

Can companies feel shame? We are not sure. The first page of the Catalog provides a quick definition of each entry, from the familiar Bait-and-Switch to the aptly named Privacy Zuckering (“service or a website tricks you into sharing more information with it than you really want to.”) One can then click through to real-world examples pulled from the Hall of Shame write-ups. Some other entries include:

“Disguised Ads. What’s a Disguised Ad? When an advertisement on a website pretends to be a UI element and makes you click on it to forward you to another website.

Roach Motel. What’s a roach motel? This dark pattern is usually used for subscription services. It is easy to sign up for it, but it’s much harder to cancel it (i.e. you have to call customer support).

Sneak into Basket. What’s a sneak into basket? When buying something, during your checkout, a website adds some additional items to your cart, making you take the action of removing it from your cart.

Confirmshaming. What’s confirmshaming? When a product or a service is guilting or shaming a user for not signing up for some product or service.”

One case of Confirmshaming: the pop-up Microsoft presents when one goes to download Chrome through Edge. Been there. See the post for the complete list and check out the extensive examples. Use the information to protect yourself or the opposite.

Cynthia Murrell, November 13, 2025

Cyber Security: Do the Children of Shoemakers Have Yeezies or Sandals?

November 7, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

When I attended conferences, I liked to stop at the exhibitor booths and listen to the sales pitches. I remember one event held in a truly shabby hotel in Tyson’s Corner. The vendor whose name escapes me explained that his firm’s technology could monitor employee actions, flag suspicious behaviors, and virtually eliminate insider threats. I stopped at the booth the next day and asked, “How can your monitoring technology identify individuals who might flip the color of their hat from white to black?” The answer was, “Patterns.” I found the response interesting because virtually every cyber security firm with whom I have interacted over the years talks about patterns.

Thanks, OpenAI. Good enough.

The problem is that individuals aware of what are mostly brute-force methods of identifying that employee A tried to access a Dark Web site known for selling malware works if the bad actor is clueless. But what happens if the bad actors were actually wearing white hats, riding white stallions, and saying, “Hi ho, Silver, away”?

Here’s the answer: “Prosecutors allege incident response pros used ALPHV/BlackCat to commit string of ransomware attacks

.” The write up explains that “cybersecurity turncoats attacked at least five US companies while working for” cyber security firms. Here’s an interesting passage from the write up:

Ryan Clifford Goldberg, Kevin Tyler Martin and an unnamed co–conspirator — all U.S. nationals — began using ALPHV, also known as BlackCat, ransomware to attack companies in May 2023, according to indictments and other court documents in the U.S. District Court for the Southern District of Florida. At the time of the attacks, Goldberg was a manager of incident response at Sygnia, while Martin, a ransomware negotiator at DigitalMint, allegedly collaborated with Goldberg and another co-conspirator, who also worked at DigitalMint and allegedly obtained an affiliate account on ALPHV. The trio are accused of carrying out the conspiracy from May 2023 through April 2025, according to an affidavit.

How long did the malware attacks persist? Just from May 2023 until April 2025.

Obviously the purpose of the bad behavior was money. But the key point is that, according to the article, “he was recruited by the unnamed co-conspirator.”

And that, gentle reader, is how bad actors operate. Money pressure, some social engineering probably at a cyber security conference, and a pooling of expertise. I am not sure that insider threat software can identify this type of behavior. The evidence is that multiple cyber security firms employed these alleged bad actors and the scam was afoot for more that 20 months. And what about the people who hired these individuals? That screening seems to be somewhat spotty, doesn’t it?

Several observations:

- Cyber security firms themselves are not able to operate in a secure manner

- Trust in Fancy Dan software may be misplaced. Managers and co-workers need to be alert and have a way to communicate suspicions in an appropriate way

- The vendors of insider threat detection software may want to provide some hard proof that their systems operate when hats change from black to white.

Everyone talks about the boom in smart software. But cyber security is undergoing a similar economic gold rush. This example, if it is indeed accurate, indicates that companies may develop, license, and use cyber security software. Does it work? I suggest you ask the “leadership” of the firms involved in this legal matter.

Stephen E Arnold, November 7, 2025

Copilot in Excel: Brenda Has Another Problem

November 6, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

Simon Wilson posted an interesting snippet from a person whom I don’t know. The handle is @belligerentbarbies who is a member of TikTok. You can find the post “Brenda” on Simon Wilson’s Weblog. The main idea in the write up is that a person in accounting or finance assembles an Excel worksheet. In many large outfits, the worksheets are templates or set up to allow the enthusiastic MBA to plug in a few numbers. Once the numbers are “in,” then the bright, over achiever hits Shift F9 to recalculate the single worksheet. If it looks okay, the MBA mashes F9 and updates the linked spreadsheets. Bingo! A financial services firm has produced the numbers needed to slap into a public or private document. But, and here’s the best part…

Thanks, Venice.ai. Good enough.

Before the document leaves the office, a senior professional who has not used Excel checks the spreadsheet. Experience dictates to look at certain important cells of data. If those pass the smell test, then the private document is moved to the next stage of its life. It goes into production so that the high net worth individual, the clued in business reporter, the big customers, and people in the CEO’s bridge group get the document.

Because those “reports” can move a stock up or down or provide useful information about a deal that is not put into a number context, most outfits protect Excel spreadsheets. Heck, even the fill-in-the-blank templates are big time secrets. Each of the investment firms for which I worked over the years follow the same process. Each uses its own, custom-tailored, carefully structure set of formulas to produce the quite significant reports, opinions, and marketing documents.

Brenda knows Excel. Most Big Dogs know some Excel, but as these corporate animals fight their way to Carpetland, those Excel skills atrophy. Now Simon Wilson’s post enters and references Copilot. The post is insightful because it highlights a process gap. Specifically if Copilot is involved in an Excel spreadsheet, Copilot might— just might in this hypothetical — make a change. The Big Dog in Carpetland does not catch the change. The Big Dog just sniffs a few spots in the forest or jungle of numbers.

Before Copilot Brenda or similar professional was involved. Copilot may make it possible to ignore Brenda and push the report out. If the financial whales make money, life is good. But what happens if the Copilot tweaked worksheet is hallucinating. I am not talking a few disco biscuits but mind warping errors whipped up because AI is essentially operating at “good enough” levels of excellence.

Bad things transpire. As interesting as this problem is to contemplate, there’s another angle that the Simon Wilson post did not address. What if Copilot is phoning home. The idea is that user interaction with a cloud-based service is designed to process data and add those data to its training process. The AI wizards have some jargon for this “learn as you go” approach.

The issue is, however, what happens if that proprietary spreadsheet or the “numbers” about a particular company find their way into a competitor’s smart output? What if Financial firm A does not know this “process” has compromised the confidentiality of a worksheet. What if Financial firm B spots the information and uses it to advantage firm B?

Where’s Brenda in this process? Who? She’s been RIFed. What about Big Dog in Carpetland? That professional is clueless until someone spots the leak and the information ruins what was a calm day with no fires to fight. Now a burning Piper Cub is in the office. Not good, is it.

I know that Microsoft Copilot will be or is positioned as super secure. I know that hypotheticals are just that: Made up thought donuts.

But I think the potential for some knowledge leaking may exist. After all Copilot, although marvelous, is not Brenda. Clueless leaders in Carpetland are not interested in fairy tales; they are interested in making money, reducing headcount, and enjoying days without a fierce fire ruining a perfectly good Louis XIV desk.

Net net: Copilot, how are you and Brenda communicating? What’s that? Brenda is not answering her company provided mobile. Wow. Bummer.

Stephen E Arnold, November 6, 2025