Data Thirst? Guess Who Can Help?

April 17, 2024

As large language models approach the limit of freely available data on the Internet, companies are eyeing sources supposedly protected by copyrights and user agreements. PCMag reports, “Google Let OpenAI Scrape YouTube Data Because Google Was Doing It Too.” It seems Google would rather double down on violations than be hypocritical. Writer Emily Price tells us:

“OpenAI made headlines recently after its CTO couldn’t say definitively whether the company had trained its Sora video generator on YouTube data, but it looks like most of the tech giants—OpenAI, Google, and Meta—have dabbled in potentially unauthorized data scraping, or at least seriously considered it. As the New York Times reports, OpenAI transcribed more than a million hours of YouTube videos using its Whisper technology in order to train its GPT-4 AI model. But Google, which owns YouTube, did the same, potentially violating its creators’ copyrights, so it didn’t go after OpenAI. In an interview with Bloomberg this week, YouTube CEO Neal Mohan said the company’s terms of service ‘does not allow for things like transcripts or video bits to be downloaded, and that is a clear violation of our terms of service.’ But when pressed on whether YouTube data was scraped by OpenAI, Mohan was evasive. ‘I have seen reports that it may or may not have been used. I have no information myself,’ he said.”

How silly to think the CEO would have any information. Besides stealing from YouTube content creators, companies are exploring other ways to pierce untapped sources of data. According to the Times article cited above, Meta considered buying Simon & Schuster to unlock all its published works. We are sure authors would have been thrilled. Meta executives also considered scraping any protected data they could find and hoping no one would notice. If caught, we suspect they would consider any fees a small price to pay.

The same article notes Google changed its terms of service so it could train its AI on Google Maps reviews and public Google Docs. See, the company can play by the rules, as long as it remembers to change them first. Preferably, as it did here, over a holiday weekend.

Cynthia Murrell, April 17, 2024

Google Cracks Infinity Which Overshadows Quantum Supremacy Maybe?

April 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The AI wars are in overdrive. Google’s high school rhetoric is in another dimension. Do you remember quantum supremacy? No, that’s okay, but it makes it clear that the Google is the leader in quantum computing. When will that come to the Pixel mobile device? Now Google’s wizards are infused with the juices of a rampant high school science club member. (Note the words rampant and member, please. They are intentional.)

An article in Analytics India (now my favorite cheerleading reference tool) uses this headline: “Google Demonstrates Method to Scale Language Model to Infinitely Long Inputs.” Imagine a demonstration of infinity using infinite inputs. I thought the smart software outfits were struggling to obtain enough content to train their models. Now Google’s wizards can handle “infinite” inputs. If one demonstrates infinity, how long will that take? Is one possible answer, “An infinite amount of time”?

Wow.

The write up says:

This modification to the Transformer attention layer supports continual pre-training and fine-tuning, facilitating the natural extension of existing LLMs to process infinitely long contexts.

Even more impressive is the diagram of the “infinite” method. I assure you that it won’t take an infinite amount of time to understand the diagram:

See, infinity may have contributed to Cantor’s mental issues, but the savvy Googlers have sidestepped that problem. Nifty.

But the write up suggests that “infinite” like many Google superlatives has some boundaries; for instance:

The approach scales naturally to handle million-length input sequences and outperforms baselines on long-context language modelling benchmarks and book summarization tasks. The 1B model, fine-tuned on up to 5K sequence length passkey instances, successfully solved the 1M length problem.

Google is trying very hard to match Microsoft’s marketing coup which caused the Google Red Alert. Even high schoolers can be frazzled by flashing lights, urgent management edicts, and the need to be perceived as a leader in something other than online advertising. The science club at Google will keep trying. Next up: quantumly infinite. Yeah.

Stephen E Arnold, April 16, 2024

Are Experts Misunderstanding Google Indexing?

April 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Google is not perfect. More and more people are learning that the mystics of Mountain View are working hard every day to deliver revenue. In order to produce more money and profit, one must use Rust to become twice as wonderful as a programmer who labors to make C++ sit up, bark, and roll over. This dispersal of the cloud of unknowing obfuscating the magic of the Google can be helpful. What’s puzzling to me is that what Google does catches people by surprise. For example, consider the “real” news presented in “Google Books Is Indexing AI-Generated Garbage.” The main idea strikes me as:

But one unintended outcome of Google Books indexing AI-generated text is its possible future inclusion in Google Ngram viewer. Google Ngram viewer is a search tool that charts the frequencies of words or phrases over the years in published books scanned by Google dating back to 1500 and up to 2019, the most recent update to the Google Books corpora. Google said that none of the AI-generated books I flagged are currently informing Ngram viewer results.

Thanks, Microsoft Copilot. I enjoyed learning that security is a team activity. Good enough again.

Indexing lousy content has been the core function of Google’s Web search system for decades. Search engine optimization generates information almost guaranteed to drag down how higher-value content is handled. If the flagship provides the navigation system to other ships in the fleet, won’t those vessels crash into bridges?

Remediating Google’s approach to indexing requires several basic steps. (I have in various ways shared these ideas with the estimable Google over the years. Guess what? No one cared or understood, and if a Googler did understand, that Googler did not want to increase overhead costs.) So what are these steps? I shall share them:

- Establish an editorial policy for content. Yep, this means that a system and method or systems and methods are needed to determine what content gets indexed.

- Explain the editorial policy and what a person or entity must do to get content processed and indexed by the Google, YouTube, Gemini, or whatever the mystics in Mountain View conjure into existence.

- Include metadata with each content object so one knows the index date, the content object creation date, and similar information.

- Operate in a consistent, professional manner over time. The “gee, we just killed that” is not part of the process. Sorry, mystics.
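The metadata step in the list above can be made concrete with a small sketch. This is only an illustration, not anything Google has published: the `ContentRecord` class, its field names, and the example URL are all hypothetical, chosen to show what carrying a creation date and an index date with each content object might look like.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ContentRecord:
    """Hypothetical index entry that keeps basic date metadata with the content."""
    url: str        # where the content object lives (example value only)
    created: date   # when the content object was created
    indexed: date   # when the indexing system processed it

    def days_before_indexing(self) -> int:
        # Gap between creation and indexing, in whole days.
        return (self.indexed - self.created).days


# Assumed example values, not real data.
record = ContentRecord(
    url="https://example.com/report",
    created=date(2024, 4, 1),
    indexed=date(2024, 4, 12),
)
print(record.days_before_indexing())  # 11
```

With both dates attached to the record, a reader of a results list could see at a glance how fresh an item is and how long it sat before being indexed.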

Let me offer several observations:

- Google, like any alleged monopoly, faces significant management challenges. Moving information within such an enterprise is difficult. For an organization with a Foosball culture, the task may be a bit outside the wheelhouse of most young people and individuals who are engineers, not presidents of fraternities or sororities.

- The organization is under stress. First, the pressure is financial because controlling the cost of the plumbing is a reasonably difficult undertaking. Second, there is technical pressure. Google itself made clear that it was in Red Alert mode and keeps adding flashing lights with each and every misstep the firm’s wizards make. These range from contentious relationships with mere governments to individual staff members who grumble via internal emails, angry Googler public utterances, or observed behavior at conferences. Body language does speak sometimes.

- The approach to smart software is remarkable. Individuals in the UK pontificate. The Mountain View crowd reassures and smiles — a lot. (Personally I find those big, happy looks a bit tiresome, but that’s a dinobaby for you.)

Net net: The write up does not address the issue that Google happily exploits. The company lacks the mental rigor setting and applying editorial policies requires. SEO is good enough to index. Therefore, fake books are certainly A-OK for now.

Stephen E Arnold, April 12, 2024

The Only Dataset Search Tool: What Does That Tell Us about Google?

April 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

If you like semi-jazzy, academic write ups, you will revel in “Discovering Datasets on the Web Scale: Challenges and Recommendations for Google Dataset Search.” The write up appears in a publication associated with Jeffrey Epstein’s favorite university. It may be worth noting that MIT and Google have teamed to offer a free course in Artificial Intelligence. That is the next big thing which does hallucinate at times while creating considerable marketing angst among the techno-giants jousting to emerge as the go-to source of the technology.

Back to the write up. Google created a search tool to allow a user to locate datasets accessible via the Internet. There are more than 700 data brokers in the US. These outfits will sell data to most people who can pony up the cash. Examples range from six figure fees for the Twitter stream to a few hundred bucks for boat license holders in states without much water.

The write up says:

Our team at Google developed Dataset Search, which differs from existing dataset search tools because of its scope and openness: potentially any dataset on the web is in scope.

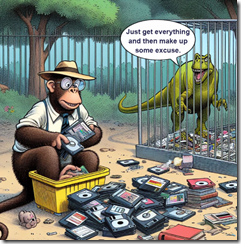

A very large, money oriented creature enjoins a worker to gather data. If someone asks, “Why?”, the monster says, “Make up something.” Thanks MSFT Copilot. How is your security today? Oh, that’s too bad.

The write up does the academic thing of citing articles which talk about data on the Web. There is even a table which organizes the types of data discovery tools. The categorization of general and specific is brilliant. Who would have thought there were two categories of a vertical search engine focused on Web-accessible data? I thought there was just one category; namely, gettable. The idea is that if the data are exposed, take them. Asking permission just costs time and money. The idea is that one can apologize and keep the data.

The article includes a Googley graphic. The French portal, the Italian “special” portal, and the Harvard “dataverse” are identified. Were there other Web accessible collections? My hunch is that Google’s spiders suck down, as one famous Googler said, “all” the world’s information. I will leave it to your imagination to fill in other sources for the dataset pages. (I want to point out that Google has some interesting technology related to converting data sets into normalized data structures. If you are curious about the patents, just write benkent2020 at yahoo dot com, and one of my researchers will send along a couple of US patent numbers. Impressive system and method.)

The section “Making Sense of Heterogeneous Datasets” is peculiar. First, the Googlers discovered the basic fact of data from different sources — The data structures vary. Think in terms of grapes and deer droppings. Second, the data cannot be “trusted.” There is no fix to this issue for the team writing the paper. Third, the authors appear to be unaware of the patents I mentioned, particularly the useful example about gathering and normalizing data about digital cameras. The method applies to other types of processed data as well.

I want to jump to the “beyond metadata” idea. This is the mental equivalent of “popping” up a perceptual level. Metadata are quite important and useful. (Isn’t it odd that Google strips high value metadata from its search results; for example, time and date?) The authors of the paper work hard to explain that the Google approach to data set search adds value by grouping, sorting, and tagging with information not in any one data set. This is common sense, but the Googley spin on this is to build “trust.” Remember: This is an alleged monopolist engaged in online advertising and co-opting certain Web services.

Several observations:

- This is another of Google’s high-class PR moves. Hooking up with MIT and delivering razz-ma-tazz about identifying spiderable content collections in the name of greater good is part of the 2024 Code Red playbook it seems. From humble brag about smart software to crazy assertions like quantum supremacy, today’s Google is a remarkable entity.

- The work on this “project” is divorced from time. I checked my file of Google-related information, and I found no information about the start date of a vertical search engine project focused on spidering and indexing data sets. My hunch is that it has been in the works for a while, although I can pinpoint 2006 as a year in which Google’s technology wizards began to talk about building master data sets. Why no time specifics?

- I found the absence of AI talk notable. Perhaps Google does not think a reader will ask, “What’s with the use of these data? I can’t use this tool, so why spend the time, effort, and money to index information from a country like France which is not one of Google’s biggest fans?” (Paris was, however, the roll out choice for the answer to Microsoft and ChatGPT’s smart software announcement. Plus that presentation featured incorrect information as I recall.)

Net net: I think this write up with its quasi-academic blessing is a bit of advance information to use in the coming wave of litigation about Google’s use of content to train its AI systems. This is just a hunch, but there are too many weirdnesses in the academic write up to write off as intern work or careless research writing which is more difficult in the wake of the stochastic monkey dust up.

Stephen E Arnold, April 11, 2024

Google: The DMA Makes Us Harm Small Business

April 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I cannot estimate the number of hours Googlers invested in crafting the short essay “New Competition Rules Come with Trade-Offs.” I find it a work of art. Maybe not the equal of Dante’s La Divina Commedia, but it is darned close.

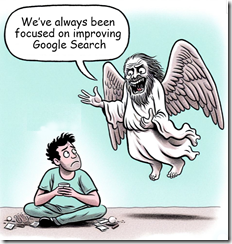

A deity, possibly associated with the quantumly supreme, reassures a human worried about life. Words are reality, at least to some fretful souls. Thanks MSFT Copilot. Good enough.

The essay pivots on unarticulated and assumed “truths.” Particularly charming are these:

- “We introduced these types of Google Search features to help consumers”

- “These businesses now have to connect with customers via a handful of intermediaries that typically charge large commissions…”

- “We’ve always been focused on improving Google Search….”

The first statement implies that Google’s efforts have been the “help.” Interesting: I find Google search often singularly unhelpful, returning results for malware, biased information, and Google itself.

The second statement indicates that “intermediaries” benefit. Isn’t Google an intermediary? Isn’t Google an alleged monopolist in online advertising?

The third statement is particularly quantumly supreme. Note the word “always.” John Milton uses such verbal efflorescence when describing God. Yes, “always” and improving. I am tremulous.

Consider this lyrical passage and the elegant logic of:

We’ll continue to be transparent about our DMA compliance obligations and the effects of overly rigid product mandates. In our view, the best approach would ensure consumers can continue to choose what services they want to use, rather than requiring us to redesign Search for the benefit of a handful of companies.

Transparent invokes an image of squeaky clean glass in a modern, aluminum-framed window, scientifically sealed to prevent its unauthorized opening or repair by anyone other than a specially trained transparency provider. I like the use of the adjective “rigid” because it implies a sturdiness which may cause the transparent window to break when inclement weather (blasts of hot and cold air from oratorical emissions) stresses the see-through structures. Then there is the adult-father-knows-best reference in “In our view, the best approach.” Very parental. Does this suggest the EU is childish?

Net net: Has anyone compiled the Modern Book of Google Myths?

Stephen E Arnold, April 11, 2024

One Google Gem: Reflections about a Digital Zircon

April 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have a Google hat, and I don’t think I will wear it to my local burrito restaurant. I just read “The Beginning of the End?” That’s a snappy title for End of Times types, but the sub-title is a corker:

More of us are moving away from Google towards TikTok and AI chatbots — as research reveals that the golden era of search engines may well be over

Thanks, MSFT Copilot. How are you coming with your security? Good enough, perhaps?

The most interesting assertion in the essay is that generative AI (the software that writes résumés for RIF’ed blue chip consultants and SEO professionals) is not particularly good. Doesn’t everyone make up facts today? I think that’s popular with college presidents, but I may be wrong because I am thinking about Harvard and Stanford at the moment. I noted this statement:

The surveys reveal that the golden era of search engines might be coming to an end, as consumers increasingly turn towards AI chatbots for their information needs. However, as Chris Sheehan, SVP Strategic Accounts and AI at Applause sums up, “Chatbots are getting better at dealing with toxicity, bias and inaccuracy – however, concerns still remain. Not surprisingly, switching between chatbots to accomplish different tasks is common, while multimodal capabilities are now table stakes. To gain further adoption, chatbots need to continue to train models on quality data in specific domains and thoroughly test across a diverse user base to drive down toxicity and inaccuracy.”

Well, by golly, I am not going to dispute the chatbot subject with an expert from Applause. (Give me a hand, please.) I would like to capture these observations:

- Google is an example of what happens when the high school science club gets aced by a group with better PR. Yep, I am thinking about MSFT’s OpenAI, Mistral, and Inflection deals. The outputs may be wonky, but the buzz has been consistent for more than a year. Tinnitus can drive some creatures to distraction.

- Google does a wonderful job delivering Smoothies to regulators in the US and the EU. However, engineering wizards can confuse a calm demeanor and Teflon talk with real managerial and creative capabilities. Nope. Smooth and running a company while innovating are a potentially harmful mixture.

- The challenge of cost control remains a topic few Google experts want to discuss. Even the threat of a flattening or, my goodness, a decline will alter the priorities of those wizards in charge. I was reviewing my notes about what makes Google tick, and the theme in my notes for the last 15 years appears to be money. Money intake, money capture, money in certain employees’ pockets, money for lobbyists, and money for acquisitions, etc. etc.

Net net: Criticizing Google through the filter of ChatGPT is interesting, but the Google lacks the managerial talent to make lemonade from lemons or money from a single alleged monopoly. Thus, consultant surveys and flawed smart software are interesting, but they miss the point of what ails Googzilla: Cost control, regulations, and its PR magnet losing its attraction.

Stephen E Arnold, April 10, 2024

Do Not Assume Googzilla Is Heading to the Monster Retirement Village

April 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Google is a ubiquitous tool. It’s used for more than searching the Internet. Google and its associated tools have become an indispensable part of modern society. Some technology experts say that AI search engines and generative content tools will kill Google, but others disagree because of its Swiss army knife application. The Verge explores how Google will probably withstand an AI onslaught in: “Here’s Why AI Search Engines Really Can’t Kill Google.”

The article’s author David Pierce pitted Google and AI search against how each performed search’s three basic tasks. Navigation is the first task and it’s the most popular one. Users type in a Web site and want the search results to spit out the correct address. Google completes this task without a hitch, while AI search engines return information about the Web site and the desired address is buried within the top results.

The second task is an information query, like weather, sport scores, temperature, or the time. AI engines stink when it comes to returning real time information. Google probably already has the information about you, not to mention it is connected to real time news sources, weather services, and sports media. The AI engines were useful for evergreen information, such as how many weeks are in a year. However, the AI engines couldn’t agree on the precise number. One said 52.143 weeks, another said 52 and mentioned leap year, and a third said it was 52 plus a few days. Pierce had to conduct additional research to find the correct answer. Google won this task again because it had speed.
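The three answers about weeks in a year are easier to compare with the arithmetic written out. The sketch below is an illustration added here, not something from The Verge's article; the function name `weeks_in_year` is made up for the example. It divides the days in a common year and a leap year by seven.

```python
DAYS_PER_WEEK = 7


def weeks_in_year(days: int) -> tuple[int, int, float]:
    """Return (whole weeks, leftover days, weeks as a decimal) for a year of `days` days."""
    whole_weeks, leftover_days = divmod(days, DAYS_PER_WEEK)
    return whole_weeks, leftover_days, days / DAYS_PER_WEEK


common = weeks_in_year(365)  # ordinary year
leap = weeks_in_year(366)    # leap year

print(common[0], common[1], round(common[2], 3))  # 52 1 52.143
print(leap[0], leap[1], round(leap[2], 3))        # 52 2 52.286
```

Read this way, the engines were describing the same fact at different levels of precision: 52 whole weeks, plus one leftover day in a common year or two in a leap year, which is about 52.143 weeks for a 365-day year.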

This was useful:

“There is one sub-genre of information queries in which the exact opposite is true, though. I call them Buried Information Queries. The best example I can offer is the very popular query, “how to screenshot on mac.” There are a million pages on the internet that contain the answer — it’s just Cmd-Shift-3 to take the whole screen or Cmd-Shift-4 to capture a selection, there, you’re welcome — but that information is usually buried under a lot of ads and SEO crap. All the AI tools I tried, including Google’s own Search Generative Experience, just snatch that information out and give it to you directly.”

The third task is exploration queries for more in-depth research. These range from researching history and tourist attractions to learning how to complete a specific task, finding medical information, and more. Google completed the tasks but AI search engines were better. AI search engines provided citations paired with images and useful information about the queries. It’s similar to reading a blurb in an encyclopedia or how-to manual.

Google is still the search champion but the AI search engines have useful abilities. The best idea would be to combine Google’s speed, real time, and consumable approach with AI engines’ information quality. It will happen one day but probably not in 2024.

Whitney Grace, April 9, 2024

Angling to Land the Big Google Fish: A Humblebrag Quest to Be CEO?

April 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

My goodness, the staff and alums of DeepMind have been in the news. Wherever there are big bucks or big buzz opportunities, one will find the DeepMind marketing machinery. Consider “Can Demis Hassabis Save Google?” The headline has two messages for me. The first is that a “real” journalist thinks that Google is in big trouble. Big trouble translates to stakeholder discontent. That discontent means it is time to roll in a new Top Dog. I love poohbahing. But opining that the Google is in trouble? Sure, it was aced by the Microsoft-OpenAI play not too long ago. But the Softies have moved forward with the Mistral deal and the mysterious Inflection deal. But the Google has money, market share, and might. Jake Paul can say he wants the Mike Tyson death stare. But that’s an opinion until Mr. Tyson hits Mr. Paul in the face.

The second message in the headline is that one of the DeepMind tribe can take over Google, defeat Microsoft, generate new revenues, avoid regulatory purgatory, and dodge the pain of its swinging door approach to online advertising revenue generation; that is, people pay to get in, people pay to get out, and soon will have to subscribe to watch those entering and exiting the company’s advertising machine.

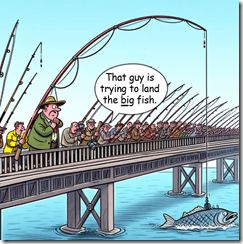

Thanks, MSFT Copilot. Nice fish.

What are the points of the essay which caught my attention other than the headline for those clued in to the Silicon Valley approach to “real” news? Let me highlight a few points.

First, here’s a quote from the write up:

Late on chatbots, rife with naming confusion, and with an embarrassing image generation fiasco just in the rearview mirror, the path forward won’t be simple. But Hassabis has a chance to fix it. To those who know him, have worked alongside him, and still do — all of whom I’ve spoken with for this story — Hassabis just might be the perfect person for the job. “We’re very good at inventing new breakthroughs,” Hassabis tells me. “I think we’ll be the ones at the forefront of doing that again in the future.”

Is the past a predictor of future success? More than lab-to-Android is going to be required. But the evaluation of the “good at inventing new breakthroughs” is an assertion. Google has been in the me-too business for a long time. The company sees itself as a modern Bell Labs and PARC. I think that the company’s perception of itself, its culture, and the comments of its senior executives suggest that the derivative nature of Google is neither remembered nor considered. It’s just “we’re very good.” Sure “we” are.

Second, I noted this statement:

Ironically, a breakthrough within Google — called the transformer model — led to the real leap. OpenAI used transformers to build its GPT models, which eventually powered ChatGPT. Its generative ‘large language’ models employed a form of training called “self-supervised learning,” focused on predicting patterns, and not understanding their environments, as AlphaGo did. OpenAI’s generative models were clueless about the physical world they inhabited, making them a dubious path toward human level intelligence, but would still become extremely powerful. Within DeepMind, generative models weren’t taken seriously enough, according to those inside, perhaps because they didn’t align with Hassabis’s AGI priority, and weren’t close to reinforcement learning. Whatever the rationale, DeepMind fell behind in a key area.

Google figured something out and then did nothing with the “insight.” There were research papers and chatter. But OpenAI (powered in part by Sam AI-Man) took the Google invention and used it to carpet bomb, mine, and set on fire Google’s presumed lead in anything related to search, retrieval, and smart software. The aftermath of the Microsoft OpenAI PR coup is a continuing story of rehabilitation. From what I have seen, Google needs more time getting its ageing body parts working again. The ad machine produces money, but the company reels from management issue to management issue with alarming frequency. Biased models complement spats with employees. Silicon Valley chutzpah causes neurological spasms among US and EU regulators. Something is broken, and I am not sure a person from inside the company has the perspective, knowledge, and management skills to fix an increasingly peculiar outfit. (Yes, I am thinking of ethnically-incorrect German soldiers loyal to a certain entity on Google’s list of questionable words and phrases.)

And, lastly, let’s look at this statement in the essay:

Many of those who know Hassabis pine for him to become the next CEO, saying so in their conversations with me. But they may have to hold their breath. “I haven’t heard that myself,” Hassabis says after I bring up the CEO talk. He instantly points to how busy he is with research, how much invention is just ahead, and how much he wants to be part of it. Perhaps, given the stakes, that’s right where Google needs him. “I can do management,” he says, “but it’s not my passion. Put it that way. I always try to optimize for the research and the science.”

I wonder why the author of the essay does not query Jeff Dean, the former head of a big AI unit in Mother Google’s inner sanctum, about Mr. Hassabis? How about querying Mr. Hassabis’ co-founder of DeepMind about Mr. Hassabis’ temperament and decision-making method? What about chasing down former employees of DeepMind and getting those wizards’ perspective on what DeepMind can and cannot accomplish?

Net net: Somewhere in the little-understood universe of big technology, there is an invisible hand pointing at DeepMind and making sure the company appears in scientific publications, the trade press, peer reviewed journals, and LinkedIn funded content. Determining what’s self-delusion, fact, and PR wordsmithing is quite difficult.

Google may need some help. To be frank, I am not sure anyone in the Google starting line up can do the job. I am also not certain that a blue chip consulting firm can do much either. Google, after a quarter century of zero effective regulation, has become larger than most government agencies. Its institutional mythos creates dozens of delusional Ulysses who cannot separate fantasies of the lotus eaters from the gritty reality of the company as one of the contributors to the problems facing youth, smaller businesses, governments, and cultural norms.

Google is Googley. It will resist change.

Stephen E Arnold, April 3, 2024

Google Mandates YouTube AI Content Be Labeled: Accurately? Hmmmm

April 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The rules for proper use of AI-generated content are still up in the air, but big tech companies are already being pressured to institute regulations. Neowin reported that “Google Is Requiring YouTube Creators To Post Labels For Realistic AI-Created Content” on videos. This is a smart idea in the age of misinformation, especially when technology can realistically create images and sounds.

Google first announced the new requirement for realistic AI content in November 2023. YouTube’s Creator Studio now includes a tool to label AI content. The new tool is called “Altered content” and asks creators yes and no questions. Its simplicity is similar to YouTube’s question about whether a video is intended for children or not. The “Altered content” label applies to the following:

• “Makes a real person appear to say or do something they didn’t say or do

• Alters footage of a real event or place

• Generates a realistic-looking scene that didn’t actually occur”

The article goes on to say:

“The blog post states that YouTube creators don’t have to label content made by generative AI tools that do not look realistic. One example was “someone riding a unicorn through a fantastical world.” The same applies to the use of AI tools that simply make color or lighting changes to videos, along with effects like background blur and beauty video filters.”

Google says it will have enforcement measures if creators consistently don’t label their realistic AI videos, but the consequences are not specified. YouTube will also reserve the right to place labels on videos. There will also be a reporting system viewers can use to notify YouTube of non-labeled videos. It’s not surprising that Google’s algorithms can’t tell realistic videos from fake ones. Perhaps the algorithms are outsmarting their creators.

Whitney Grace, April 2, 2024

A Single, Glittering Google Gem for 27 March 2024

March 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

So many choices. But one gem outshines the others. Google’s search generative experience is generating publicity. The old chestnut may be true. Any publicity is good publicity. I would add a footnote. Any publicity about Google’s flawed smart software is probably good for Microsoft and other AI competitors. Google definitely looks as though it has some behaviors that are — how shall I phrase it? — questionable. No, maybe, ill-considered. No, let’s go with bungling. That word has a nice ring to it. Bungling.

I learned about this gem in “Google’s New AI Search Results Promotes Sites Pushing Malware, Scams.” The write up asserts:

Google’s new AI-powered ‘Search Generative Experience’ algorithms recommend scam sites that redirect visitors to unwanted Chrome extensions, fake iPhone giveaways, browser spam subscriptions, and tech support scams.

The technique which gets the user from the quantumly supreme Google to the bad actor goodies is redirects. Some use notification functions to pump even more inducements toward the befuddled user. (See, bungling and befuddled. Alliteration.)

Why do users fall for these bad actor gift traps? It seems that Google SGE conversational recommendations sound so darned wonderful, Google users just believe that the GOOG cares about the information it presents to those who “trust” the company.

The write up points out that the DeepMinded Google provided this information about the bumbling SGE:

"We continue to update our advanced spam-fighting systems to keep spam out of Search, and we utilize these anti-spam protections to safeguard SGE," Google told BleepingComputer. "We’ve taken action under our policies to remove the examples shared, which were showing up for uncommon queries."

Isn’t that reassuring? I wonder if the anecdote about this most recent demonstration of the Google’s wizardry will become part of the Sundar & Prabhakar Comedy Act?

This is a gem. It combines Google’s management process, word salad frippery, and smart software into one delightful bouquet. There you have it: Bungling, befuddled, bumbling, and bouquet. I am adding blundering. I do like butterfingered, however.

Stephen E Arnold, March 27, 2024