Beating on Quantum: Thump, Clang

January 13, 2025

A dinobaby produced this post. Sorry. No smart software was able to help the 80 year old this time around.

The is-it-new-or-is-it-PR service Benzinga published on January 13, 2025, “Quantum Computing Stocks Tumble after Mark Zuckerberg Backs Nvidia CEO Jensen Huang’s Practical Comments.” I love the “practical.” Quantum computing is similar to the modular home nuclear reactor from my point of view. These are interesting topics to discuss, but when it comes to convincing a homeowners’ association to allow the installation of a modular nuclear reactor or squeezing the gizmos required to make quantum computing sort of go in a relatively reliable way, uh uh.

Is this a practical point of view? No. The reason is that most people have zero idea of what is required to get a quantum computer or a quantum anything to work. The room for the demonstration is usually a stage set. The cooling, the electronics, and the assorted support equipment are, how shall I phrase it, bulky. That generator outside the quantum lab is not for handling a power outage. The trailer-sized box is pumping volts into the quantum setup.

The write up explains:

comments made by Meta CEO Mark Zuckerberg and Nvidia Corp. CEO Jensen Huang, who both expressed caution regarding the timeline for quantum computing advancements.

Caution. Good word.

The remarks by Zuckerberg and Huang have intensified concerns about the future of quantum computing. Earlier, during Nvidia’s analyst day, Huang expressed optimism about quantum computing’s potential but cautioned that practical applications might take 15 to 30 years to materialize. This outlook has led to a sharp decline in quantum computing stocks. Despite the cautious projections, some industry insiders have countered Huang’s views, arguing that quantum-based innovations are already being integrated into the tech ecosystem. Retail investors have shown optimism, with several quantum computing stocks experiencing significant growth in recent weeks.

I know of a person who lectures about quantum. I have heard that the theme of these presentations is that quantum computing is just around the corner. Okay. Google is quantumly supreme. Intel has its super technology called Horse Ridge or Horse Feathers. IBM makes quantum squeaks.

I want research to continue, but it is interesting to me that two big technology wizards want to talk about practical quantum computing. One does the social media thing unencumbered by expensive content moderation and the other is pushing smart software enabling technology forward.

Neither wants the quantum hype to supersede the marketing of either of these wizards’ money machines. I love “real news”, particularly when it presents itself as practical. May I suggest you place your order for a D-Wave or an Enron egg nuclear reactor. Practical.

Stephen E Arnold, January 13, 2025

Oh, Oh! Silicon Valley Hype Minimizes Risk. Who Knew?

January 10, 2025

This is an official dinobaby post. No smart software involved in this blog post.

I read “Silicon Valley Stifled the AI Doom Movement in 2024.” I must admit I was surprised that one of the cheerleaders for Silicon Valley is disclosing something absolutely no one knew. I mean unregulated monopolies, the “Puff the Magic Dragon” strafing teens, and the vulture capitalists slavering over the corpses of once thriving small and mid sized businesses. Hey, I thought that “progress” myth was real. I thought technology only makes life better. Now I read that “Silicon Valley” wanted only good news about smart software. Keep in mind that this is software which outputs hallucinations, makes decisions about medical care for people, and monitors the clicks and location of everyone with a mobile device or a geotracker.

The write up reminded me that ace entrepreneur / venture professional Marc Andreessen said:

“The era of Artificial Intelligence is here, and boy are people freaking out. Fortunately, I am here to bring the good news: AI will not destroy the world, and in fact may save it,” said Andreessen in the essay. In his conclusion, Andreessen gave a convenient solution to our AI fears: move fast and break things – basically the same ideology that has defined every other 21st century technology (and their attendant problems). He argued that Big Tech companies and startups should be allowed to build AI as fast and aggressively as possible, with few to no regulatory barriers. This would ensure AI does not fall into the hands of a few powerful companies or governments, and would allow America to compete effectively with China, he said.

What publications touted Mr. Andreessen’s vision? Answer: Lots.

Regulate smart software? Nope. From Connecticut’s effort to the US government, smart software regulation went nowhere. The reasons included, in my opinion:

- A chance to make a buck, well, lots of bucks

- Opportunities to foist “smart software” plus its inherent ability to make up stuff on corporate sheep

- A desire to reinvent “dumb” processes like figuring out how to push buttons to create addiction to online gambling, reducing costs by eliminating inefficient humans, and using stupid weapons.

Where are we now? A pillar of the Silicon Valley media ecosystem writes about the possible manipulation of information to make smart software into a Care Bear. Cuddly. Harmless. Squeezable. Yummy too.

The write up concludes without one hint of the contrast between the AI hype and the viewpoints of people who think that the technology of AI is immature but fumbling forward to stick its baby finger in a wall socket. I noted this concluding statement in the write up:

Calling AI “tremendously safe” and attempts to regulate it “dumb” is something of an oversimplification. For example, Character.AI – a startup a16z has invested in – is currently being sued and investigated over child safety concerns. In one active lawsuit, a 14-year-old Florida boy killed himself after allegedly confiding his suicidal thoughts to a Character.AI chatbot that he had romantic and sexual chats with. This case shows how our society has to prepare for new types of risks around AI that may have sounded ridiculous just a few years ago. There are more bills floating around that address long-term AI risk – including one just introduced at the federal level by Senator Mitt Romney. But now, it seems AI doomers will be fighting an uphill battle in 2025.

But don’t worry. Open source AI provides a level playing field for [a] adversaries of the US, [b] bad actors who use smart software to compromise Swiss cheese systems, and [c] those who manipulate people on a grand scale. Will the “Silicon Valley” media give equal time to those who don’t see technology as benign or as a net positive? Are you kidding? Oh, aren’t those smart drones with kinetic devices just fantastic?

Stephen E Arnold, January 10, 2025

AI Outfit Pitches Anti Human Message

January 9, 2025

AI startup Artisan thought it could capture attention by telling companies to get rid of human workers and use its software instead. It was right. Gizmodo reports, “AI Firm’s ‘Stop Hiring Humans’ Billboard Campaign Sparks Outrage.” The firm plastered its provocative messaging across San Francisco. Writer Lucas Ropek reports:

“The company, which is backed by startup accelerator Y-Combinator, sells what it calls ‘AI Employees’ or ‘Artisans.’ What the company actually sells is software designed to assist with customer service and sales workflow. The company appears to have done an internal pow-wow and decided that the most effective way to promote its relatively mundane product was to fund an ad campaign heralding the end of the human age. Writing about the ad campaign, local outlet SFGate notes that the posters—which are strewn all over the city—include plugs like the following:

‘Artisans won’t complain about work-life balance’

‘Artisan’s Zoom cameras will never ‘not be working’ today.’

‘Hire Artisans, not humans.’

‘The era of AI employees is here.'”

The write-up points to an interview with SFGate in which CEO Jaspar Carmichael-Jack states the ad campaign was designed to “draw eyes.” Mission accomplished. (And is it just me, or does that name belong in a pirate movie?) Though Ropek acknowledges his part in drawing those eyes, he also takes this chance to vent about AI and big tech in general. He writes:

“It is Carmichael-Jackson’s admission that his billboards are ‘dystopian’—just like the product he’s selling—that gets to the heart of what is so [messed] up about the whole thing. It’s obvious that Silicon Valley’s code monkeys now embrace a fatalistic bent of history towards the Bladerunner-style hellscape their market imperatives are driving us.”

Like Artisan’s billboards, Ropek pulls no punches. Located in San Francisco, Artisan was launched in 2023. Founders hail from the likes of Stanford, Oxford, Meta, and IBM. Will the firm find a way to make its next outreach even more outrageous?

Cynthia Murrell, January 9, 2025

Salesforce Surfs Agentic AI and Hopes to Stay on the Long Board

January 7, 2025

This is an official dinobaby post. No smart software involved in this blog post.

I spotted a content marketing play, and I found it amusing. The spin was enough to make my eyes wobble. “Intelligence (AI). Its Stock Is Up 39% in 4 Months, and It Could Soar Even Higher in 2025” appeared in the Motley Fool online investment information service. The headline is standard fare, but the catchphrase in the write up is “the third wave of AI.” What were the other two waves, you may ask? The first wave was machine learning, which is an age measured in decades. The second wave, which garnered the attention of the venture community and outfits like Google, was generative AI. I think of the second wave as the content suck up moment.

So what’s the third wave? Answer: Salesforce. Yep, the guts of the company are a digitized record of sales contacts. The old word for what made a successful salesperson valuable was “Rolodex.” But today one may as well talk about a pressing ham.

What makes this content marketing-type article notable is that Salesforce wants to “win” the battle of the enterprise and relegate Microsoft to the bench. What’s interesting is that Salesforce’s innovation is presented this way:

The next wave of AI will build further on generative AI’s capabilities, enabling AI to make decisions and take actions across applications without human intervention. Salesforce (CRM -0.42%) CEO Marc Benioff calls it the “digital workforce.” And his company is leading the growth in this Agentic AI with its new Agentforce product.

Agentic.

What’s Salesforce’s secret sauce? The write up says:

Artificial intelligence algorithms are only as good as the data used to train them. Salesforce has accurate and specific data about each of its enterprise customers that nobody else has. While individual businesses could give other companies access to those data, Salesforce’s ability to quickly and simply integrate client data as well as its own data sets makes it a top choice for customers looking to add AI agents to their “workforce.” During the company’s third-quarter earnings call, Benioff called Salesforce’s data an “unfair advantage,” noting Agentforce agents are more accurate and less hallucinogenic as a result.

To put some focus on the competition, Salesforce targets Microsoft. The write up says:

Benioff also called out what might be Salesforce’s largest competitor in Agentic AI, Microsoft (NASDAQ: MSFT). While Microsoft has a lot of access to enterprise customers thanks to its Office productivity suite and other enterprise software solutions, it doesn’t have as much high-quality data on a business as Salesforce. As a result, Microsoft’s Copilot abilities might not be up to Agentforce in many instances. Benioff points out Microsoft isn’t using Copilot to power its online help desk like Salesforce.

I think it is worth mentioning that Apple’s AI seems to be a tad problematic. Also, those AI laptops are not the pet rock for a New Year’s gift.

What’s the Motley Fool doing for Salesforce besides making the company’s stock into a sure-fire winner for 2025? The rah rah is intense; for example:

But if there’s one thing investors have learned from the last two years of AI innovation, it’s that these things often grow faster than anticipated. That could lead Salesforce to outperform analysts’ expectations over the next few years, as it leads the third wave of artificial intelligence.

Let me offer several observations:

- Salesforce sees a marketing opportunity for its “agentic” wrappers or apps. Therefore, put the pedal to the metal and grab mind share and market share. That’s not much different from the company’s attention push.

- Salesforce recognizes that Microsoft has some momentum in some very lucrative markets. The prime example is the Microsoft tie-up with Palantir. Salesforce does not have that type of hook to generate revenue from US government defense and intelligence budgets.

- Salesforce is growing, but so is Oracle. Therefore, Salesforce feels that it could become the cold salami in the middle of a Microsoft and Oracle sandwich.

Net net: Salesforce has to amp up whatever it can before companies that are catching the rising AI cloud wave swamp the Salesforce surf board.

Stephen E Arnold, January 7, 2025

Marketing Milestone 2024: Whither VM?

January 3, 2025

When a vendor jacks up prices tenfold, customers tend to look elsewhere. If VMware’s new leadership thought its clients had no other options, it was mistaken. Ars Technica reports, “Company Claims 1,000 Percent Price Hike Drove it from VMware to Open Source Rival.” We knew some were unhappy with changes Broadcom has made since it bought VMware in November 2023. For example, nixing perpetual license sales sent costs soaring for many. (Broadcom claims that move was planned before it bought VMware.) Now, one firm that had enough has come forward. Writer Scharon Harding tells us:

“According to a report from The Register today, Beeks Group, a cloud operator headquartered in the United Kingdom, has moved most of its 20,000-plus virtual machines (VMs) off VMware and to OpenNebula, an open source cloud and edge computing platform. Beeks Group sells virtual private servers and bare metal servers to financial service providers. It still has some VMware VMs, but ‘the majority’ of its machines are currently on OpenNebula, The Register reported. Beeks’ head of production management, Matthew Cretney, said that one of the reasons for Beeks’ migration was a VMware bill for ’10 times the sum it previously paid for software licenses,’ per The Register. According to Beeks, OpenNebula has enabled the company to dedicate more of its 3,000 bare metal server fleet to client loads instead of to VM management, as it had to with VMware. With OpenNebula purportedly requiring less management overhead, Beeks is reporting a 200 percent increase in VM efficiency since it now has more VMs on each server.”

Less expensive and more efficient? That is a no-brainer. OpenNebula’s CEO says other organizations are making the switch, though he declined to name them. Though Broadcom knows some customers are jumping ship, it may believe its changes are lucrative enough to make up for their absence. At the same time, it is offering an olive branch to small and medium-sized businesses with a less pricey subscription tier designed for them. Will it stem the exodus, or is it already too late?
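Headlines tend to treat “tenfold” and “1,000 percent” as the same thing. They are close but not identical. This short Python sketch normalizes the old license bill to a hypothetical 1.0 (no actual Beeks figures are used) and works through the arithmetic: a tenfold bill is a 900 percent increase, a literal 1,000 percent hike means eleven times the old bill, and a “200 percent increase” in VMs per server, read literally, means three times the density.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage change going from old to new."""
    return (new - old) / old * 100


# Hypothetical baseline: the old license bill normalized to 1.0.
# This is an illustrative assumption, not a figure from The Register or Ars Technica.
old_bill = 1.0

# "10 times the sum it previously paid": the new bill is 10x the old one.
tenfold_bill = 10 * old_bill
print(percent_increase(old_bill, tenfold_bill))  # 900.0

# A "1,000 percent" hike, read literally, adds ten times the old bill on top of it.
literal_1000_pct_bill = old_bill * (1 + 1000 / 100)
print(literal_1000_pct_bill)  # 11.0

# A "200 percent increase" in VMs per server, read literally, means 3x the density.
vms_per_server_before = 1.0  # hypothetical normalized baseline
vms_per_server_after = vms_per_server_before * (1 + 200 / 100)
print(vms_per_server_after)  # 3.0
```

Either reading leaves the story intact: the bill jumped by roughly an order of magnitude.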

Cynthia Murrell, January 3, 2025

Boxing Day Cheat Sheet for AI Marketing: Happy New Year!

December 27, 2024

Other than automation and taking the creative talent out of the entertainment industry, where is AI headed in 2025? The lowdown for the upcoming year can be found on the Techknowledgeon AI blog and its post: “The Rise Of Artificial Intelligence: Know The Answers That Makes You Sensible About AI.”

The article acts as a primer on what AI is, its advantages, and answers to important questions about the technology. The questions that grab our attention are “Will AI take over humans one day?” and “Is AI an Existential Threat to Humanity?” Here’s the answer to the first question:

“The idea of AI taking over humanity has been a recurring theme in science fiction and a topic of genuine concern among some experts. While AI is advancing at an incredible pace, its potential to surpass or dominate human capabilities is still a subject of intense debate. Let’s explore this question in detail.

AI, despite its impressive capabilities, has significant limitations:

- Lack of General Intelligence: Most AI today is classified as narrow AI, meaning it excels at specific tasks but lacks the broader reasoning abilities of human intelligence.

- Dependency on Humans: AI systems require extensive human oversight for design, training, and maintenance.

- Absence of Creativity and Emotion: While AI can simulate creativity, it doesn’t possess intrinsic emotions, intuition, or consciousness.”

And here is the answer to the second:

“Instead of ‘taking over,’ AI is more likely to serve as an augmentation tool:

- Workforce Support: AI-powered systems are designed to complement human skills, automating repetitive tasks and freeing up time for creative and strategic thinking.

- Health Monitoring: AI assists doctors but doesn’t replace the human judgment necessary for patient care.

- Smart Assistants: Tools like Alexa or Google Assistant enhance convenience but operate under strict limitations.”

So AI has a long way to go before it replaces humanity, and the singularity, the point at which AI surpasses human intelligence, is either a long way off or might never happen.

This dossier includes useful information to understand where AI is going and will help anyone interested in learning what AI algorithms are projected to do in 2025.

Whitney Grace, December 27, 2024

Google AI Videos: Grab Your Popcorn and Kick Back

December 20, 2024

This blog post is the work of an authentic dinobaby. No smart software was used.

Google has an artificial intelligence inferiority complex. In January 2023, it found itself like a frail bathing-suit-clad 13-year-old in the shower room filled with Los Angeles Rams. Yikes. What could the inhibited Google do? The answer has taken about two years to wend its way into Big Time PR. Nothing is an upgrade. Google is interacting with parallel universes. It is redefining quantum supremacy into supremest computer. It is trying hard not to recommend that its “users” use glue to keep cheese on their pizza.

Score one for the Grok. Good enough, but I had to try the free X.com image generator. Do you see a shivering high school student locked out of the gym on a cold and snowy day? Neither do I. Isn’t AI fabulous?

Amidst the PR bombast, Google has gathered 11 videos together under the banner of “Gemini 2.0: Our New AI Model for the Agentic Era.” What is an “era”? As I recall, it is a distinct period of history with a particular feature like online advertising charging everyone one way or another. Eras, according to some long-term thinkers, are millions of years long; for example, the Mesozoic Era consists of the Triassic, Jurassic, and Cretaceous periods. Google is definitely thinking in terms of a long, long time.

Here’s the link to the playlist: https://www.youtube.com/playlist?list=PLqYmG7hTraZD8qyQmEfXrJMpGsQKk-LCY. If video is not your bag, you can listen to Google AI podcasts at this link: https://deepmind.google/discover/the-podcast/.

Has Google neutralized the blast and fallout damage from Microsoft’s 2023 OpenAI deal announcement? I think it depends on whom one asks. The feeling of being behind the AI curve must be intense. Google invented the transformer technology. Even Microsoft’s Big Dog said that Google should have been the winner. Watch for more Google PR about Google and parallel universes and numbers too big for non-Googlers to comprehend.

Somebody give that kid a towel. He’s shivering.

Stephen E Arnold, December 20, 2024

Another Horse Ridge or Just Horse Feathers from the Management Icon Intel?

December 20, 2024

This write up emerged from the dinobaby’s own mind. No AI was used because this dinobaby is too stupid to make it work.

If you are an Intel trivia buff, you will know the answer to this question: “What was the name of the 2019 cryogenic control chip Intel rolled out for quantum computers?” The answer? Horse Ridge. And there was a Horse Ridge II about a year later. I am not sure what happened to Horse Ridge. Maybe it was, as I suggested, horse feathers?

A rider on the Horse Ridge Trail. Notice that where the horse goes, the sagebrush and prairie dogs burn. Thanks, Magic Studio. Good enough.

Intel is back with another big time announcement. I assume this is PR’s way of neutralizing the governance wackiness in evidence at the company. Is there a president? Is there a Horse Ridge?

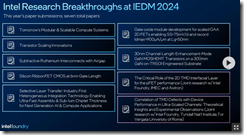

I read “Intel Looks Beyond Silicon, Outlines Breakthroughs in Atomically-Thin 2D Transistors, Chip Packaging, and Interconnects at IEDM 2024.” The write up reports via information directly from the really well managed outfit the following:

…the Intel Foundry Technology Research team announced technology breakthroughs in 2D transistor technology using beyond-silicon materials, chip interconnects, and packaging technology, among others.

This news will definitely push these types of stories out of the news cycle. This one is from CNN via MSN.com:

Ousted Intel CEO Pat Gelsinger Is Leaving the Company with Millions

I thought that Intel was going to create great CPUs and super duper graphics cards. (Have you ever tested an Intel graphics card? Have you ever tried to find current drivers? Have you dumped it because an Nvidia 3060 is faster and more stable for basic office tasks? I have.)

Intel breakthroughs via the cited article and Intel Foundry.

The write up says:

Intel hasn’t shared the deep dive details of its Subtractive Ruthenium process, but we’re sure to learn more details during the presentation. Intel says its Subtractive Ruthenium process with airgaps provides up to 25% capacitance at matched resistance at sub-25nm pitches (the center-to-center distance between interconnect lines). Intel says its research team “was first to demonstrate, in R&D test vehicles, a practical, cost-efficient and high-volume manufacturing compatible subtractive Ru integrated process with airgaps that does not require expensive lithographic airgap exclusion zones around vias, or self-aligned via flows that require selective etches.”

Is there a fungible product? Nope. But technical papers are coming real soon.

Stephen E Arnold, December 20, 2024

A Monopolist CEO Loses His Cool: It Is Our AI, Gosh Darn It!

December 17, 2024

This blog post flowed from the sluggish and infertile mind of a real live dinobaby. If there is art, smart software of some type was probably involved.

“With 4 Words, Google’s CEO Just Fired the Company’s Biggest Shot Yet at Microsoft Over AI” suggests that Sundar Pichai is not able to smarm his way out of an AI pickle. In January 2023, Satya Nadella, the boss of Microsoft, announced that Microsoft was going to put AI on, in, and around its products and services. Google immediately floundered with a Sundar & Prabhakar Comedy Show in Paris and then rolled out a Google AI service telling people to glue cheese on pizza.

Magic Studio created a good enough image of an angry executive thinking about how to put one of his principal competitors behind a giant digital eight ball.

Now 2025 is within shouting distance. Google continues to lag in the AI excitement race. The company may have oodles of cash, thousands of technical wizards, and a highly sophisticated approach to marketing, branding, and explaining itself. But is it working?

According to the cited article from Inc. Magazine’s online service:

Microsoft CEO Satya Nadella had said that “Google should have been the default winner in the world of big tech’s AI race.”

I like the “should have been.” I had a high school English teacher try to explain to me as an indifferent 14-year-old that the conditional perfect tense suggests a different choice would have avoided a disaster. Her examples involved a young person who decided to become an advertising executive and not a plumber. I think Ms. Dalton said something along the lines of “Tom would have been happier and made more money if he had fixed leaks for a living.” I pegged the grammatical expression as belonging to the “woulda, coulda, shoulda” branch of rationalizing failure.

Inc. Magazine recounts an interview during which the interlocutor set up this exchange with the Big Dog of Google, Sundar Pichai, the chief writer for the Sundar & Prabhakar Comedy Show:

Interviewer: “You guys were the originals when it comes to AI. Where [do] you think you are in the journey relative to these other players?”

Sundar, the Googler: I would love to see “a side-by-side comparison of Microsoft’s models and our models any day, any time. Microsoft is using someone else’s models.”

Yep, Microsoft inked a deal with the really stable, fiscally responsible outfit OpenAI and a number of other companies including one in France. Imagine that. France.

Inc. Magazine states:

Google’s biggest problem isn’t that it can’t build competitive models; it’s that it hasn’t figured out how to build compelling products that won’t destroy its existing search business. Microsoft doesn’t have that problem. Sure, Bing exists, but it’s not a significant enough business to matter, and Microsoft is happy to replace it with whatever its generative experience might look like for search.

My hunch is that Google will not be advertising on Inc.’s site. Inc. might have to do some extra special search engine optimization too. Why? Inc.’s article repeats itself in case Sundar of comedy act fame did not get the message. Inc. states again:

Google hasn’t figured out the product part. It hasn’t figured out how to turn its Gemini AI into a product at the same scale as search without killing its real business. Until it does, it doesn’t matter whether the competition uses someone else’s models.

With the EU competition boss thinking about chopping up the Google, Inc. Magazine and Mr. Nadella may struggle to get Sundar’s attention. It is tough to do comedy when tragedy is a snappy quip away.

Stephen E Arnold, December 17, 2024

Google: More Quantum Claims; Some Are Incomprehensible Like Multiple Universes

December 16, 2024

This blog post is the work of an authentic dinobaby. No smart software was used.

Beleaguered Google is going all out to win a PR war against the outfits using its Transformer technology. Google should have been the de facto winner of the smart software wars. I think the CEO of Microsoft articulated a similar sentiment. That hurts, particularly when it comes from a person familiar with the mores and culinary delights of Mughlai cuisine. “Should have, would have, could have”: very painful to one’s ego.

I read a PR confection that spotlighted this Google need to be the “best” in the fast moving AI world. I envision Google’s leadership getting hit in the back of the head by a grandmother. My grandmother did this to me when I visited her on my way home from high school. She was frail but would creep up behind me and whack me if I did not get A’s on my report card. Well, Google, let me tell you I have the memory, but the familial whack did not help me one whit.

“Willow: Google Reveals New Quantum Chip Offering Incomprehensibly Fast Processing” is a variant of the quantum supremacy claim issued a couple of years ago. In terms of technical fluff, Google is now matching the wackiness of Intel’s revolutionary Horse-something quantum innovation. But “incomprehensibly”? Come on, BetaNews.

The PR approved write up reports:

Google says that its quantum chip took less than five minutes to perform tasks that would take even the fastest supercomputers 10 septillion years. Providing some sense of perspective, Google points out that this is “a number that vastly exceeds the age of the Universe”.

Well, what do you think about that? Google is edging toward infinity, the contemplation of which drove a dude named Cantor nuts. Why does an online advertising company being sued in numerous countries for a range of alleged business behaviors need praise for its AI achievements? The firm’s Transformer technology IS the smart software innovation.

Google reorganized its smart software division, marginalizing some heavy Google hitters. It drove out Googlers who were asking questions about baked-in algorithmic bias. It cut off discussion of the synthetic data activity. It shifted the AI research to London, a convenient 11 hours away by jet and a convenient eight time zones away from San Francisco.

The write up trots out the really fast computing trope for quantum computing:

In terms of performance, there is nothing to match Willow. The “classically hardest benchmark that can be done on a quantum computer today” was demolished in a matter of minutes. This same task would take one of the fastest supercomputer available an astonishing 10,000,000,000,000,000,000,000,000 years to work through.

Scientific notation exists for a reason. Please, pass the message to Google PR, please.
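Here is what that string of zeros looks like in scientific notation. This minimal Python sketch assumes the US short-scale meaning of “septillion” (10^24) and a rounded age of the universe of about 13.8 billion years; that second number is a standard estimate, not a figure from the BetaNews article.

```python
# Google's Willow claim as quoted: a benchmark that would take a classical
# supercomputer "10 septillion" years (written out in full by BetaNews).
claimed_years = 10_000_000_000_000_000_000_000_000

# Short-scale septillion (US usage): 10**24. An assumption about the PR's wording.
SEPTILLION = 10**24

# Approximate age of the universe in years (rounded estimate, assumed here).
AGE_OF_UNIVERSE_YEARS = 13.8e9


def sci(n: float) -> str:
    """Format a number in scientific notation with two decimal places."""
    return f"{n:.2e}"


print(claimed_years == 10 * SEPTILLION)               # True
print(sci(claimed_years))                             # 1.00e+25
print(sci(claimed_years / AGE_OF_UNIVERSE_YEARS))     # 7.25e+14
```

Read that way, the claim works out to roughly 7 × 10^14 times the age of the universe, which squares with the “vastly exceeds” line.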

Okay, another “we are better than anyone else at quantum computing.” By extension, Google is better than anyone else at smart software and probably lots of other things mere comprehensible seeking people claim to do.

And do you think there are multiple universes? Ah, you said, “No.” Google’s smart quantum stuff reports that you are wrong.

Let’s think about why Google has an increasing need to be held by a virtual grandmother and not whacked on the head:

- Google is simply unable to address actual problems. From the wild and crazy moon shots to the weirdness of its quantum supremacy thing, the company is claiming advances in fields essentially disconnected from the real world.

- Google believes that the halo effect of being so darned competent in quantum stuff will enhance the excellence of its other products and services.

- Google management has zero clue how to address [a] challengers to its search monopoly, [b] the negative blowback from its unending legal hassles, and [c] the feeling that it has been wronged. By golly, Google IS the leader in AI just as Google is the leader in quantum computing.

Sorry, Google, granny is going to hit you on the back of the head. Scrunch down. You know she’s there, demanding excellence which you know you cannot deliver. For a more “positive” view of Google’s PR machinations, navigate to “The Google Willow Thing.”

There must be a quantum pony in the multi-universe stable, right?

Stephen E Arnold, December 16, 2024