Enterprise Search: What Is Missing from This List

August 31, 2021

I got a wild and wooly announcement from something called The Market Gossip. The message was that a new report about enterprise search has been published. I never heard of the outfit (Orbis Research) in Dallas.

Take a look at this list of vendors covered in this global predictive report:

Notice anything interesting? I do. First, Elastic (commercial and open source) is not in the list. Second, the Algolia system (a distant cousin of Dassault Exalead) is not mentioned. (Weird, because the company got another infusion of cash.) Third, the name of LucidWorks (an open source search recycler) is misspelled. Fourth, the inclusion of MarkLogic is odd because the company offers an XML data management system. Sure, one can create a search solution but that’s like building a real Darth Vader out of Lego blocks. Interesting but of limited utility. Fifth, the inclusion of SAP. Does the German outfit still pitch the long-in-the-tooth TREX system? Sixth, Microsoft offers many search systems. Which, I wonder, is the one explored?

Net net: Quite a thorough research report. Too bad it is tangential to where search and retrieval in the enterprise is going. If the report were generated by artificial intelligence, the algorithm should be tweaked. If humans cooked up this confection, I am not sure what to suggest. Maybe starting over?

Stephen E Arnold, August 30, 2021

Algolia: Now the Need for Sustainable, Robust Revenue Comes

August 27, 2021

We long ago decided Algolia was an outfit worth keeping an eye on. We were right. Now Pulse 2.0 reports, “Algolia: $150 Million Funding and $2.25 Billion Valuation.” The company closed recently on the Series D funding, bringing its total funding to $315 million. Putting that sum to shame is the hefty valuation touted in the headline. Can the firm live up to expectations? Reporter Annie Baker writes:

“This latest funding round reflects Algolia’s hyper growth fueled by demand for ‘building block’ API software that increases developer productivity, the growth in e-commerce, and digital transformation. And this additional investment enables Algolia to scale and serve the increased demand for the company’s Search and Recommendations products as well as fuel the company’s continued product expansion into adjacent markets and use-cases. … This new funding round caps a landmark year that saw significant growth and product innovation. And Algolia launched with the goal of creating fast, instant, and relevant search and discovery experiences that surfaced the desired information quickly. Earlier this year, the company had announced its new vision for dynamic experiences, advancing beyond search to empower businesses to quickly predict a visitor’s intent on their digital property in real time, in the session, and in the moment. And the business, armed with this visitor intent, can surface dynamic content in the form of search results, recommendations, offers, in-app notifications, and more — all while respecting privacy laws and regulations.”

Baker notes Algolia’s approach is a departure from opaque SaaS solutions and monolithic platforms. Instead, the company works with developers to build dynamic, personalized applications using its API platform. Over the last year and a half, Algolia also added seven new executives to its roster. Headquartered in San Francisco, the company was founded in 2012.

Cynthia Murrell, August 27, 2021

Google Fiddled Its Magic Algorithm. What?

August 19, 2021

This story is a hoot. Google, as I recall, has a finely tuned algorithm. It is tweaked, tuned, and tailored to deliver on point results. The users benefit from this intense interest the company has in relevance, precision, recall, and high-value results. Now a former Google engineer, or Xoogler in my lingo, has shattered my beliefs. Night falls.

Navigate to “Top Google Engineer Abandons Company, Reveals Big Tech Rewrote Algos To Target Trump.” (I love the word “algos”. So colloquial. So in.) I spotted this statement:

Google rewrote its algorithms for news searches in order to target #Trump, according to @Perpetualmaniac #Google whistleblower, and author of the new book, “Google Leaks: An Expose of Big Tech Censorship.”

The write up states:

“As a senior engineer at Google for many years, Zach was aware of their bias, but watched in horror as the 2016 election of Donald Trump seemed to drive them into dangerous territory. The American ideal of an honest, hard-fought battle of ideas — when the contest is over, shaking hands and working together to solve problems — was replaced by a different, darker ethic alien to this country’s history,” the description adds. Vorhies said he left Google in 2019 with 950 pages of internal documents and gave them to the Justice Department.

Wowza. Is this an admission of unauthorized removal of a commercial enterprise’s internal information?

The sources for this interesting allegation of algorithm fiddling are interesting and possibly a little swizzly.

I am shocked.

The Google fiddling with precision, recall, objectivity, and who knows what else? Why? My goodness. What has happened to cause a former employee to offer such shocking assertions?

The algos are falling on my head and nothing seems to fit. Crying’s not for me. Nothing’s worrying me. Because Google.

Stephen E Arnold, August 19, 2021

Milvus and Mishards: Search Marches and Marches

August 13, 2021

I read “How We Used Semantic Search to Make Our Search 10x Smarter.” I am fully supportive of better search. Smarter? Maybe.

The write up comes from Zilliz which describes itself this way: The developer of Milvus “the world’s most advanced vector database, to accelerate the development of next generation data fabric.”

The system has a search component which is Elasticsearch. The secret sauce behind the 10x claim is a group of value adding features; for instance, similarity and clustering.

The idea is that a user enters a word or phrase and the system gets related information without entering a string of synonyms or a particularly precise term. I was immediately reminded of Endeca without the MBAs doing manual fiddling and the computational burden the Endeca system and method imposed on constrained data sets. (Anyone remember the demo about wine?)

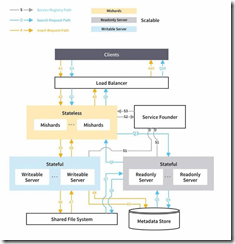

This particular write up includes some diagrams which reveal how the system operates. The diagrams like the one shown below are clear, but I

The idea is “similarity search.” If you want to know more, navigate to https://zilliz.com. Ten times smarter. Maybe.
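For readers who want the gist of “similarity search” without the marketing, here is a minimal sketch in Python. It is not the Milvus API. The three-number vectors, the document titles, and the function names `cosine` and `similar` are all made up for illustration; a real system would get its vectors from a trained model and use an index rather than a full scan.

```python
import math

# Hypothetical 3-dimensional "embeddings". A real system would get these
# from a trained model; the numbers here are invented for illustration.
DOCS = {
    "red wine from Bordeaux": [0.9, 0.1, 0.0],
    "white wine pairing guide": [0.8, 0.3, 0.1],
    "laptop battery replacement": [0.0, 0.2, 0.9],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similar(query_vec, docs, k=2):
    """Return the titles of the k documents closest to the query vector."""
    ranked = sorted(docs.items(), key=lambda kv: cosine(query_vec, kv[1]), reverse=True)
    return [title for title, _ in ranked[:k]]


# Stand-in vector for a query such as "merlot". The query shares no
# keyword with any title, yet the wine documents rank first.
query = [1.0, 0.2, 0.0]
top = similar(query, DOCS)
print(top)
```

The point of the sketch: ranking depends on how close the vectors sit, not on whether the user typed the right synonym. Whether that adds up to ten times smarter depends entirely on the quality of the vectors.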

Stephen E Arnold, August 13, 2021

Algolia and Its View of the History of Search: Everyone Has an Opinion

August 11, 2021

Search is similar to love, patriotism, and ethical behavior. Everyone has a different view of the nuances of meaning with a specific utterance. Agree? Let’s assume you cannot define one of these words in a way that satisfies a professor from a mid tier university teaching a class to 20 college sophomores who signed up for something to do with Western philosophy: Post Existentialism. Imagine your definition. I took such a class, and I truly did not care. I wrote down the craziness the brown clad PhD provided, got my A, and never gave that stuff a thought. And you, gentle reader, are you prepared to figure out what an icon in an ibabyrainbow chat stream “means”? We captured a stream for one of my lectures to law enforcement in which she says, “I love you.” Yeah, right.

Now we come to “Evolution of Search Engines Architecture – Algolia New Search Architecture Part 1.” The write up explains finding information and its methods through the lens of Algolia, a privately held, venture-funded firm. Search, which is not defined, characterizes the level of discourse about findability. The write up explains an early method which permitted a user to query by key words. This worked like a champ as long as the person doing the search knew what words to use like “nuclear effects modeling”.

The big leap was faster computers and clever post-Verity methods of getting distributed indexes to mostly work. I want to mention that Exalead (which may have had an informing role to play in Algolia’s technical trajectory) was a benchmark system. But, alas, key words are not enough. The Endeca facets were needed. Because humans had to do the facet identification, the race was on to get smart software to do a “good enough” job so old school commercial database methods could be consigned to a small room in the back of a real search engine outfit.

Algolia includes a diagram of the post Alta Vista, post Google world. The next big leap was scaling the post Google world. What’s interesting is that in my experience, most search problems result in processing smaller collections of information containing disparate content types. What’s this mean? When were you able to use a free Web search system or an enterprise search system like Elastic or Yext to retrieve text, audio, video, engineering drawings and their associated parts data, metadata from surveilled employee E2EE messages, and TikTok video résumés or the wildly entertaining puff stuff on LinkedIn? The answer is and probably will be for the foreseeable future, “No.” And what about real time data, the content on a sales person’s laptop with the changed product features and customer specific pricing? Oh, right. Some people forget about that. Remember. I am talking about a “small” content set, not the wild and crazy Internet indexes. Where are those changed files on the Department of Energy Web site? Hmmm.

The fourth part of the “evolution” leaps to keeping cloud centric, third party hosted systems chugging along. Have you noticed the latency when using the OpenText cloud system? What about the display of thumbnails on YouTube? What about retrieving a document from a content management system before lunch, only to find that the system reports, “Document not found”? Yeah, but. Okay, yeah but nothing.

The final section of the write up struck me as a knee slapper. Algolia addresses the “current challenges of search.” Okay, and what are these from the Algolia point of view? The main points have to do with using a cloud system to keep the system up and running without trashing response time. That’s okay, but without a definition of search, the fixes like separating search and indexing may not be the architectural solution. One example is processing streams of heterogeneous data in real time. This is a big thing in some circles and highly specialized systems are needed to “make sense” of what’s rushing into a system. Now means now, not a latency centric method which has remained largely unchanged for (what?) maybe 50 years.

What is my view of “search”? (If you are a believer that today’s search systems work, stop reading.) Here you go:

- One must define search; for example, chemical structure search, code search, HTML content search, video search, and so on. Without a definition, explanations are without context and chock full of generalizations.

- Search works when the content domain is “small” and clearly defined. A one size fits all approach to content is pretty much craziness, regardless of how much money an IPO’ed or SPAC’ed outfit generates.

- The characteristic of the search engines my team and I have tested over the last — what is it now, 40 or 45 years — is that whatever system one uses is “good enough.” The academic calculations mean zero when an employee cannot locate the specific item of information needed to deal with a business issue or a student wants to locate a source for a statement from a source about voter fraud. Good enough is state of the art.

- The technology of search is like a 1962 Corvette. It is nice to look at but terrible to drive.

Net net: Everyone is a search expert now. Yeah, right. Remember: The name of the game is sustainable revenue, not precision and recall, high value results, or the wild and crazy promise that Google made for “universal search”. Ho ho ho.

Stephen E Arnold, August 11, 2021

DuckDuckGo Produces Privacy Income

August 10, 2021

DuckDuckGo advertises that it protects user privacy and does not have targeted ads in search results. Despite the company’s small size, its privacy protection makes DuckDuckGo a viable alternative to Google. TechRepublic delves into DuckDuckGo’s profits and how privacy is a big money maker in the article, “How DuckDuckGo Makes Money Selling Search, Not Privacy.” DuckDuckGo has been profitable since 2014 and made over $100 million in 2020.

Google, Bing, and other companies interested in selling personal data say that tracking users is a necessary evil in order for search and other services to work. DuckDuckGo says that’s not true and the company’s CEO Gabriel Weinberg said:

“It’s actually a big myth that search engines need to track your personal search history to make money or deliver quality search results. Almost all of the money search engines make (including Google) is based on the keywords you type in, without knowing anything about you, including your search history or the seemingly endless amounts of additional data points they have collected about registered and non-registered users alike. In fact, search advertisers buy search ads by bidding on keywords, not people….This keyword-based advertising is our primary business model.”

Weinberg continued that search engines do not need to track as much personal information as they do to personalize customer experiences or make money. Search engines and other online services could limit the amount of user data they track and still generate a profit.

Google made over $147 billion in 2020, but DuckDuckGo’s $100 million is not a small number either. DuckDuckGo’s market share is greater than Bing’s and, if limited to the US market, its market share is second to Google. DuckDuckGo is like the Little Engine That Could. It is a hard working marketing operation and it keeps chugging along while batting the privacy beach ball along the Madison Avenue sidewalk.

Whitney Grace, August 10, 2021

Google Search: An Intriguing Observation

August 9, 2021

I read “It’s Not SEO: Something Is Fundamentally Broken in Google Search.” I spotted this comment:

Many will remember how remarkably accurate searches were at initial release c. 2017; songs could be found by reciting lyrics, humming melodies, or vaguely describing the thematic or narrative thrust of the song. The picture is very different today. It’s almost impossible to get the system to return even slightly obscure tracks, even if one opens YouTube and reads the title verbatim.

The idea is that the issue resides within Google’s implementation of search and retrieval. I want to highlight this comment offered in the YCombinator Hacker News thread:

While the old guard in Google’s leadership had a genuine interest in developing a technically superior product, the current leaders are primarily concerned with making money. A well-functioning ranking algorithm is only one small part of the whole. As long as the search engine works well enough for the (money-making) main-stream searches, no one in Google’s leadership perceives a problem.

I have a different view of Google search. Let me offer a handful of observations from my shanty in rural Kentucky.

To begin, the original method for determining precision and recall is like a page of text photocopied with that copy then photocopied. After a couple of hundred photocopies, the image of the page has degraded. Photocopy for a couple of decades and the document copy is less than helpful. Degradation in search subsystems is inevitable, and it takes place in search as layers or wrappers have been added around systems and methods.

Second, Google must generate revenue; otherwise, the machine will lose velocity, maybe suffer cash deprivation. The recent spectacular financial payoffs are not directed at what I call “precision and recall search.” What’s happening, in my opinion, is that accelerated relaxation of queries makes it easier to “match” an ad. More (not necessarily more relevant) matching provides quicker depletion of the ad inventory, more revenue, more opportunities for Google sales partners to pitch ads, and more users believing Google results are the cat’s pajamas. To “go back” to antiquated ideas like precision and recall, relevance, and old-school Boolean breaks the money flow, adds costs, and forces distasteful steps on those who want big paydays, bonuses, and the cash to solve death and other childish notions.

Third, this comment from Satellite2 is on the money:

Power users as a proportion of Internet’s total user count probably followed an inverted zipf distribution over time. At the beginning 100%, then 99, 90%, 9% and now less than one percent. Assuming power users formulate search in ways that are irreconcilable from those of the average user, and assuming Google adapted their models, metrics to the average user and retrained them at each step, then, we are simply no longer a target market of Google.

I interpret this as implying that Google is no longer interested in delivering on point results. I now run the same query across a number of Web search systems and hunt for results which warrant direct inspection. I use, for example, iseek.com, swisscows.ch, yandex.ru, and a handful of other systems.
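The query relaxation in my second observation can be made concrete with a toy example. What follows is a minimal sketch, not Google’s method: the five documents, the relevance judgments, and the function names `match` and `precision_recall` are invented for illustration. A strict query requires every term (old-school Boolean AND); a relaxed query accepts any term (OR).

```python
# Toy collection. The documents and the relevance judgments are hypothetical.
DOCS = {
    1: "boolean search precision recall",
    2: "search engine advertising revenue",
    3: "precision machining tools",
    4: "recall notice for cars",
    5: "measuring precision and recall in search",
}
RELEVANT = {1, 5}  # documents a human judged relevant to the query below


def match(terms, docs, require_all):
    """Return ids of documents containing all terms (AND) or any term (OR)."""
    test = all if require_all else any
    found = set()
    for doc_id, text in docs.items():
        words = set(text.split())
        if test(term in words for term in terms):
            found.add(doc_id)
    return found


def precision_recall(retrieved, relevant):
    """Precision = hits / retrieved; recall = hits / relevant."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


terms = ["precision", "recall", "search"]
strict = match(terms, DOCS, require_all=True)    # Boolean AND
relaxed = match(terms, DOCS, require_all=False)  # relaxed to OR

print("strict ", sorted(strict), precision_recall(strict, RELEVANT))
print("relaxed", sorted(relaxed), precision_recall(relaxed, RELEVANT))
```

In this toy collection the relaxed query returns every document, so there are more slots against which to “match” something, while precision drops from 1.0 to 0.4. Recall does not improve because the strict query had already found both relevant items.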

Net net: The degradation of Google began around 2005 and 2006. In the last 15 years, Google has become a golden goose for some stakeholders. The company’s search systems — where is that universal search baloney, please? — are going to be increasingly difficult to refine so that a user’s query is answered in a user-useful way.

Messrs. Brin and Page bailed, leaving a consultant-like management team. Was there a link between increased legal scrutiny, friskiness in the Google legal department, antics involving hard drugs and death on a Googler’s yacht, and “efficiency oriented” applied technologies which have accelerated the cancer of relevance-free content? Facebook takes bullets for its high school management approach. Google, in my view, may be the pinnacle of the ethos of high school science club activities.

What’s the fix? Maybe a challenger from left field will displace the Google? Maybe a for-fee outfit like Infinity will make it to the big time? Maybe Chinese style censorship will put content squabbles in the basement? Maybe Google will simply become more frustrating to users?

The YouTube search case in the essay in Hacker News is spot on. But Google search — both basic and advanced search — is a service which poses risks to users. Where’s a date sort? A key word search? File type search? A federated search across blogs and news? What happened to file type search? Yada yada yada.

Like the long-dead dinosaurs, Googzilla is now watching the climate change. Snow is beginning to fall because the knowledge environment is changing. Hello, Darwin!

Stephen E Arnold, August 9, 2021

Strong Sinequa Helps Out Hapless Microsoft with Enterprise Search

August 9, 2021

Microsoft has enlisted aid or French entrepreneurs have jumped on the opportunity to enhance the already stellar software system available from the SolarWinds and Exchange Server misstep outfit.

Business Wire reveals in a hard hitting write up “Sinequa Brings Intelligent Search to Microsoft Teams” an exciting development. Wait, doesn’t Microsoft search work? Apparently Sinequa’s platform works better. We learn:

“Sinequa for Teams enables organizations to unleash the power of Sinequa’s Intelligent Search platform right within Microsoft Teams. … Sinequa continues to recognize the need to make knowledge discoverable so employees can make better decisions, regardless of where and how they work. The Sinequa platform offers a single access point to surface relevant insights both from within and outside the Microsoft ecosystem. Built for Azure and Microsoft 365 customers with Teams, Sinequa has extended its powerful search technology to Teams to help enterprises elevate productivity and enable better decision-making all in one place.”

The tailored Teams platform promises to improve data findability and analysis while bolstering collaboration and workflows. Sinequa is proud of its ability to provide enterprise search to large and complex organizations. Founded in 2002, the company is based in Paris, France.

Excellence knows no bounds.

Cynthia Murrell, August 9, 2021

Autonomy: An Interesting Legal Document

August 4, 2021

Years ago I did some work for Autonomy. I have followed the dispute between Hewlett Packard and Autonomy. Enterprise search has long been an interest of mine, and Autonomy had emerged as one of the most visible and widely known vendors of search and retrieval systems.

Today (August 3, 2021) I read “Hard Drives at Autonomy Offices Were Destroyed the Same Month CEO Lynch Quit, Extradition Trial Was Told.” The write up contains information with which I was not familiar.

In the write up is a link to “In the City of Westminster Magistrates’ Court The Government of the United States of America V Michael Richard Lynch Findings of Fact and Reasons.” That 62 page document contains a useful summary of the HP – Autonomy deal.

Several observations:

- Generating sustainable revenue for an enterprise search system and ancillary technology is difficult. This is an important fact for anyone engaged in search and retrieval.

- The actions summarized in the document provide a road map of what Autonomy did to maintain its story of success in what has been for decades a quite treacherous market niche. Search is particularly difficult, and vendors have found marketing a heck of a lot easier than delivering a system that meets users’ expectations.

- The information in the document suggests that the American judicial system may find this case a “bridge” between how corporate entities respond to the Wall Street demands for revenue and growth.

As at Fast Search & Transfer, executives found themselves making decisions which make search and retrieval a swamp. Flash forward to the present: Google search is shot through with adaptations to online advertising.

Perhaps the problem is that people expect software to deliver immediate, relevant results. Well, it is clear that most of the search and retrieval systems seeking sustainable revenues have learned that search can deliver good enough results. Good enough is not good enough, however.

Stephen E Arnold, August 4, 2021

Search Atlas Demonstrates Google Search Bias by Location

July 28, 2021

An article at Wired reminds us that Google Search is not the objective source of information it appears to be to many users. We learn that “A New Tool Shows How Google Results Vary Around the World.” Researchers and PhD students Rodrigo Ochigame of MIT and Katherine Ye of Carnegie Mellon University created Search Atlas, an experimental Google Search interface. The tool displays three different sets of results for the same query based on location and language, illustrating both cultural differences and government preferences. “Information borders,” they call it.

The first example involves image searches for “Tiananmen Square.” Users in the UK and Singapore are shown pictures of the government’s crackdown on student protests in 1989. Those in China, or elsewhere using the Chinese language setting, see pretty photos of a popular tourist destination. Google says the difference has nothing to do with censorship—they officially stopped cooperating with the Chinese government on that in 2010, after all. It is just a matter of localized results for those deemed likely to be planning a trip. Sure. Writer Tom Simonite describes more of the tool’s results:

“The Search Atlas collaborators also built maps and visualizations showing how search results can differ around the globe. One shows how searching for images of ‘God’ yields bearded Christian imagery in Europe and the Americas, images of Buddha in some Asian countries, and Arabic script for Allah in the Persian Gulf and northeast Africa. The Google spokesperson said the results reflect how its translation service converts the English term ‘God’ into words with more specific meanings for some languages, such as Allah in Arabic. Other information borders charted by the researchers don’t map straightforwardly onto national or language boundaries. Results for ‘how to combat climate change’ tend to divide island nations and countries on continents. In European countries such as Germany, the most common words in Google’s results related to policy measures such as energy conservation and international accords; for islands such as Mauritius and the Philippines, results were more likely to cite the enormity and immediacy of the threat of a changing climate, or harms such as sea level rise.”
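One rough way to put a number on such “information borders” is to measure how much two result lists overlap. The sketch below uses Jaccard similarity (shared results divided by all distinct results). It is an assumption about how one might compare result sets, not a description of how Search Atlas works, and the setting names and result identifiers are invented.

```python
from itertools import combinations

# Hypothetical top results for one query under three location/language
# settings. The setting names and result identifiers are invented.
RESULTS = {
    "setting_a": ["r1", "r2", "r3", "r4"],
    "setting_b": ["r1", "r2", "r5", "r4"],
    "setting_c": ["r4", "r6", "r7", "r8"],
}


def jaccard(a, b):
    """Shared items divided by all distinct items across both lists."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def pairwise_overlap(results):
    """Jaccard score for every pair of settings, keyed by the sorted name pair."""
    return {
        (x, y): jaccard(results[x], results[y])
        for x, y in combinations(sorted(results), 2)
    }


overlap = pairwise_overlap(RESULTS)
for pair, score in overlap.items():
    print(pair, round(score, 2))
```

A low score between two settings suggests a border between them; a high score suggests the two audiences see much the same thing. The measure ignores ranking order, which a fuller comparison would want to take into account.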

Search Atlas is not yet widely available, but the researchers are examining ways to make it so. They presented it at last month’s Designing Interactive Systems conference and are testing a private beta. Of course, the tool cannot reveal the inner workings of Google’s closely held algorithms. It does, however, illustrate the outsized power the company has over who can access what information. As co-creator Ye observes:

“People ask search engines things they would never ask a person, and the things they happen to see in Google’s results can change their lives. It could be ‘How do I get an abortion?’ restaurants near you, or how you vote, or get a vaccine.”

The researchers point to Safiya Noble’s 2018 book “Algorithms of Oppression” as an inspiration for their work. They hope their project will bring the biased nature of search algorithms to the attention of a broader audience.

Cynthia Murrell, July 28, 2021