Finding Live Music Performances

April 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Here is a niche search category some of our readers will appreciate. Lifehacker shares “The Best Ways to Find Live Gigs for Music You Love.” Writer David Nield describes how one can tap into a combination of sources to stay up to date on upcoming music events. He begins:

“More than once I’ve missed out on shows in my neighborhood put on by bands I like, just because I’ve been out of the loop. Whether you don’t want to miss gigs by artists you know, or you’re keen to get out and discover some new music, there are lots of ways to stay in touch with the live shows happening in your area—you need never miss a gig again. Pick the one(s) that work best for you from this list.”

First are websites dedicated to spreading the musical word, like Songkick and Bandsintown. One can sign up for notices or simply browse the site by artist or location. These sites can also use one’s listening data from streaming apps to inform their results. Or one can go straight to the source and follow artists on social media or their own websites (but that can get overwhelming if one enjoys many bands). Several music apps like Spotify and Deezer will notify you of upcoming concerts and events for artists you choose. Finally, YouTube lists tour details and ticket links beneath videos of currently touring bands, highlighting events near you. If, that is, you have chosen to share your location with the Google-owned site.
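
The idea of using one’s listening data to pick relevant shows can be pictured with a small sketch. It assumes a hypothetical `Gig` record and an in-memory list of events. It is not the Songkick, Bandsintown, Spotify, or Deezer API, and the band names, cities, and dates are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Gig:
    """Hypothetical event record; real services expose far richer data."""
    artist: str
    city: str
    day: date


def gigs_for_me(gigs, listened_artists, my_city, today):
    """Return upcoming gigs in my_city by artists from the listening history."""
    liked = {name.casefold() for name in listened_artists}
    matches = [
        g for g in gigs
        if g.artist.casefold() in liked            # artist is in the listening data
        and g.city.casefold() == my_city.casefold()  # "near you" reduced to same city
        and g.day >= today                         # skip shows already past
    ]
    return sorted(matches, key=lambda g: g.day)    # soonest first


gigs = [
    Gig("Band A", "Louisville", date(2024, 5, 1)),
    Gig("Band B", "Louisville", date(2024, 4, 20)),
    Gig("Band A", "Chicago", date(2024, 4, 25)),
    Gig("Band C", "Louisville", date(2024, 4, 10)),
]
for gig in gigs_for_me(gigs, ["band a", "Band B"], "louisville", date(2024, 4, 5)):
    print(gig.artist, gig.day)
```

Matching on a city name is a crude stand-in for location sharing; a real service would more likely use coordinates and a radius.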

Cynthia Murrell, April 5, 2024

Is the AskJeeves Approach the Next Big Thing Again?

March 14, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Way back when I worked in Silicon Valley, or Plastic Fantastic as one 1980s wag put it, AskJeeves burst upon the Web search scene. The idea was that a user would ask a question and the helpful digital butler would fetch the answer. This worked for questions like “What’s the temperature in San Mateo?” The system did not work for the types of queries with which my little group of full-time equivalents peppered assorted online services.

A young wizard confronts a knowledge problem. Thanks, MSFT Copilot. How’s that security today? Okay, I understand. Good enough.

The mechanism involved software and people. The software processed the query and matched it to answers stored in a knowledge base. The humans wrote rules. If there was no rule and no knowledge, the butler fell on his nose. It was the digital equivalent of nifty marketing applied to a system about as aware as the manservant in Kazuo Ishiguro’s The Remains of the Day.
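
The rule-plus-knowledge-base mechanism can be sketched in a few lines of Python. This is a hypothetical illustration, not AskJeeves code: the single regular-expression rule and the knowledge base entry are made up, and the stored answer is a placeholder string.

```python
import re
from typing import Optional

# Hypothetical knowledge base written by humans: (topic, entity) -> answer.
KNOWLEDGE_BASE = {
    ("temperature", "san mateo"): "placeholder answer: current San Mateo weather",
}

# Hypothetical hand-written rules: a question pattern and the topic it maps to.
RULES = [
    (re.compile(r"what[’']?s the temperature in (?P<entity>[\w\s]+)\??$", re.IGNORECASE),
     "temperature"),
]


def ask_butler(question: str) -> Optional[str]:
    """Return a stored answer if a rule matches and the knowledge base has it."""
    for pattern, topic in RULES:
        match = pattern.match(question.strip())
        if match:
            entity = match.group("entity").strip().lower()
            return KNOWLEDGE_BASE.get((topic, entity))  # None if no knowledge
    return None  # no rule matched: the butler falls on his nose


print(ask_butler("What's the temperature in San Mateo?"))
print(ask_butler("Which enterprise search vendors use Bayesian methods?"))
```

Every new kind of question needs another hand-written rule and another hand-entered answer, which is why coverage stayed narrow.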

I thought about AskJeeves as a tangent notion as I worked through “LLMs Are Not Enough… Why Chatbots Need Knowledge Representation.” The essay is an exploration of options intended to reduce the computational cost, power sucking, and blind spots in large language models. Progress is being made and will be made. A good example is this passage from the essay which sparked my thinking about representing knowledge. This is a direct quote:

In theory, there’s a much better way to answer these kinds of questions.

- Use an LLM to extract knowledge about any topics we think a user might be interested in (food, geography, etc.) and store it in a database, knowledge graph, or some other kind of knowledge representation. This is still slow and expensive, but it only needs to be done once rather than every time someone wants to ask a question.

- When someone asks a question, convert it into a database SQL query (or in the case of a knowledge graph, a graph query). This doesn’t necessarily need a big expensive LLM, a smaller one should do fine.

- Run the user’s query against the database to get the results. There are already efficient algorithms for this, no LLM required.

- Optionally, have an LLM present the results to the user in a nice understandable way.
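
The four steps in the quoted list can be sketched with Python’s built-in `sqlite3` module. This is a minimal sketch under stated assumptions: the hypothetical `dish` table and its three facts stand in for knowledge an LLM would have extracted (step 1), the hypothetical `question_to_sql` template matcher stands in for the small LLM in step 2, and a plain string join stands in for the optional presentation step. None of it comes from the quoted essay.

```python
import sqlite3
from typing import Optional, Tuple

# Step 1 stand-in: facts an LLM might have extracted once, stored in a database.
FACTS = [("paella", "Spain"), ("gazpacho", "Spain"), ("pho", "Vietnam")]


def build_store(facts) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE dish (name TEXT, country TEXT)")
    conn.executemany("INSERT INTO dish VALUES (?, ?)", facts)
    return conn


def question_to_sql(question: str) -> Optional[Tuple[str, tuple]]:
    """Step 2 stand-in: a hypothetical template matcher in place of a small LLM."""
    prefix = "which dishes come from "
    q = question.strip().lower().rstrip("?")
    if q.startswith(prefix):
        country = q[len(prefix):].strip()
        return "SELECT name FROM dish WHERE lower(country) = ? ORDER BY name", (country,)
    return None


def answer(conn: sqlite3.Connection, question: str) -> str:
    translated = question_to_sql(question)
    if translated is None:
        return "Sorry, I cannot translate that question."
    sql, params = translated
    # Step 3: an ordinary parameterized SQL query; no LLM involved.
    names = [name for (name,) in conn.execute(sql, params)]
    # Step 4 stand-in: a plain string instead of LLM-written prose.
    return ", ".join(names) if names else "No matching facts stored."


conn = build_store(FACTS)
print(answer(conn, "Which dishes come from Spain?"))
```

The template matcher fails on any phrasing it was not written for, the same brittleness that tripped up the rule-driven butler.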

Like AskJeeves, the idea is a good one. Create a system to take a user’s query and match it to information answering the question. The AskJeeves approach embodied what I called rules. As long as one has the rules, the answers can be plugged into a database. A query arrives, the system looks up the answer, and presents it. Bingo. A happy user with relevant information catapults AskJeeves to the top of a world filled with less elegant solutions.

The question becomes, “Can one represent knowledge in such a way that the information is current, usable, and ‘accurate’ (assuming one can define accurate)?” Knowledge, however, is a slippery fish. Small, well-defined domains chock full of specialist knowledge should be easier to represent. Well, IBM Watson and its adventure in Houston suggest that the method is okay, but it did not work. Larger-scale systems like an old-fashioned Web search engine just used “signals” to produce lists which presumably presented answers. “Clever,” correct? (Sorry, that’s an IBM Almaden bit of humor. I apologize for the inside baseball moment.)

What’s interesting is that youthful laborers in the world of information retrieval are finding themselves arm wrestling with some tough but elusive problems. What is knowledge? The answer “It depends” does not provide much help. Where does knowledge originate? The answer “No one knows for sure” does not advance the ball downfield either.

Grabbing epistemology by the shoulders and shaking it until an answer comes forth is a tough job. What’s interesting is that those working with large language models are finding themselves caught in a room of mirrors intact and broken. Here’s what TheTemples.org has to say about this imaginary idea:

The myth represented in this Hall tells of the divinity that enters the world of forms fragmenting itself, like a mirror, into countless pieces. Each piece keeps its peculiarity of reflecting the absolute, although it cannot contain the whole any longer.

I have no doubt that a start up with venture funding will solve this problem even though a set cannot contain itself. Get coding now.

Stephen E Arnold, March 14, 2024

Kagi Hitches Up with Wolfram

March 6, 2024

This essay is the work of a dumb dinobaby. No smart software required.

“Kagi + Wolfram” reports that the for-fee Web search engine with AI has hooked up with one of the pre-eminent mathy people innovating today. The write up includes PR about the upsides of Kagi search and Wolfram’s computational services. The article states:

…we have partnered with Wolfram|Alpha, a well-respected computational knowledge engine. By integrating Wolfram Alpha’s extensive knowledge base and robust algorithms into Kagi’s search platform, we aim to deliver more precise, reliable, and comprehensive search results to our users. This partnership represents a significant step forward in our goal to provide a search engine that users can trust to find the dependable information they need quickly and easily. In addition, we are very pleased to welcome Stephen Wolfram to Kagi’s board of advisors.

The basic wagon gets a rethink with other animals given a chance to make progress. Thanks, MSFT Copilot. Good enough, but in truth I gave up trying to get a similar image with the dog replaced by a mathematician and the pig replaced with a perky entrepreneur.

The integration of mathiness with smart search is a step forward, certainly more impressive than other firms’ recycling of Web content into bubble gum cards presenting answers. Kagi is taking steps (small, methodical ones) toward what I have described as “search enabled applications” and my friend Dr. Greg Grefenstette described in his book with the snappy title “Search-Based Applications: At the Confluence of Search and Database Technologies (Synthesis Lectures on Information Concepts, Retrieval, and Services, 17).”
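
One way to picture mathiness inside a search box is a router that sends computable queries to a calculation engine and everything else to ordinary search. The sketch below is hypothetical: a small safe arithmetic evaluator stands in for Wolfram|Alpha, `web_search` is a placeholder, and nothing here reflects how Kagi actually wires the two services together.

```python
import ast
import operator

# Operators the stand-in "computational engine" accepts.
# Sketch only: there is no guard against enormous integer exponents.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def _evaluate(node):
    """Evaluate numbers and + - * / ** only; anything else raises ValueError."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.operand))
    raise ValueError("not plain arithmetic")


def web_search(query):
    """Hypothetical placeholder for a conventional search backend."""
    return f"search results for: {query}"


def route(query):
    """Try computation first; fall back to search if the query is not arithmetic."""
    try:
        return ("compute", _evaluate(ast.parse(query, mode="eval")))
    except (SyntaxError, ValueError, ZeroDivisionError, OverflowError):
        return ("search", web_search(query))


print(route("(3 + 4) * 2"))
print(route("kagi wolfram partnership"))
```

A production router would need a far better classifier than “does it parse as arithmetic,” but the split between computing an answer and retrieving documents is the point.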

It may seem like a big step from putting mathiness in a Web search engine to creating a platform for search enabled applications. It may be, but I like to think that some bright young sprouts will figure out that linking a mostly brain dead legacy app with a Kagi-Wolfram service might be useful in a number of disciplines. Even some super confident really brilliantly wonderful Googlers might find the service useful.

Net net: I am gratified that Kagi’s for-fee Web search is evolving. Google’s apparent ineptitude might give Kagi the chance Neeva never had.

Stephen E Arnold, March 6, 2024

SearXNG: A New Metasearch Engine

March 4, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Internet browsers and search engines are two of the top applications used on computers. Search engine giants like Bing and Google don’t respect users’ privacy and they track everything. They create individual user profiles then sell and use the information for targeted ads. The search engines also demote controversial information and return biased search results. On his blog, FlareXes shares a solution that protects privacy and encompasses metasearch: “Build Your Own Private Search Engine With SearXNG.”

SearXNG is an open source, customizable metasearch engine that returns search results from multiple sources and respects privacy. It was originally built off another open source project, SearX. SearXNG has an extremely functional user interface. It also aggregates information from over seventy search engines, including DuckDuckGo, Brave Search, Bing, and Google.

The write up emphasizes protecting user privacy:

But perhaps the best thing about SearXNG is its commitment to user privacy. Unlike some search engines, SearXNG doesn’t track users or generate personalized profiles, and it never shares any information with third parties.

Because SearXNG is a metasearch engine, it supports organic search results. This allows users to review information that would otherwise go unnoticed. That does not necessarily mean the results will be unbiased. The idea is that SearXNG returns better results than a revenue juggernaut does:

SearXNG aggregates data from different search engines that doesn’t mean this could be biased. There is no way for Google to create a profile about you if you’re using SearXNG. Instead, you get high-quality results like Google or Bing. SearXNG also randomizes the results so no SEO or top-ranking will not gonna work. You can also enable independent search engines like Brave Search, Mojeek etc.
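
Metasearch, in its simplest form, means fanning a query out to several engines and merging what comes back. The following is a minimal sketch of the merging step only, assuming hypothetical engine names and hard-coded result lists. It is not SearXNG’s ranking code, and unlike what the quoted post describes, it does not randomize anything.

```python
from collections import defaultdict


def merge_results(engine_results):
    """Merge ranked URL lists from several engines into one deduplicated list.

    engine_results maps an engine name to its ranked URLs (best first).
    Each engine adds 1/position to a URL's score, so results that several
    engines rank highly float to the top.
    """
    scores = defaultdict(float)
    sources = defaultdict(set)
    for engine, urls in engine_results.items():
        for position, url in enumerate(urls, start=1):
            key = url.rstrip("/").lower()  # crude normalization for deduplication
            scores[key] += 1.0 / position
            sources[key].add(engine)
    ranked = sorted(scores, key=lambda k: (-scores[k], k))
    return [(url, sorted(sources[url])) for url in ranked]


results = {
    "engine_a": ["https://example.org/a", "https://example.org/b"],
    "engine_b": ["https://example.org/b/", "https://example.org/c"],
}
for url, engines in merge_results(results):
    print(url, engines)
```

Note that the merged list can only be as good as the indexes it borrows from; the metasearch layer adds no new coverage.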

If you want a search engine that doesn’t collect your personal data and has better search results, SearXNG warrants a test drive. The installation may require some tech fiddling.

Whitney Grace, March 4, 2024

A Look at Web Search: Useful for Some OSINT Work

February 22, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “A Look at Search Engines with Their Own Indexes.” For me, the most useful part of the 6,000-word article is the list of identified search systems. The author, a person with the identity Seirdy, has gathered in one location a reasonably complete list of Web search systems. Pulling such a list together takes time and reflects well on Seirdy’s attention to a difficult task. There are some omissions; for example, the iSeek education search service (recently repositioned) and Biznar.com, developed by one of the founders of Verity. I am not identifying problems; I just want to underscore that tracking down, verifying, and describing Web search tools is a difficult task. For a person involved in OSINT, the list may surface a number of search services which could prove useful; for example, the Chinese and Vietnamese systems.

A new search vendor explains the advantages of a used convertible driven by an elderly person to take a French bulldog to the park once a day. The clueless fellow behind the wheel wants to buy a snazzy set of wheels. The son in the yellow shirt loves the vehicle. What does that car sales professional do? Some might suggest that certain marketers lie, sell useless add ons, patch up problems, and fiddle the interest rate financing. Could this be similar to search engine cheerleaders and the experts who explain them? Thanks ImageFX. A good enough illustration with just a touch of bias.

I do want to offer several observations:

- Google dominates Web search. There is an important distinction not usually discussed when some experts analyze Google; that is, Google delivers “search without search.” The idea is simple. A person uses a Google service of which there are many. Take for example Google Maps. The Google runs queries when users take non-search actions; for example, clicking on another part of a map. That’s a search for restaurants, fuel services, etc. Sure, much of the data are cached, but this is an invisible search. Competitors and would-be competitors often forget that Google search is not limited to the Google.com search box. That’s why Google’s reach is going to be difficult to erode quickly. Google has other search tricks up its very high-tech ski jacket’s sleeve. Think about search-enabled applications.

- There is an important difference between building one’s own index of Web content and sending queries to other services. The original Web indexers have become like rhinos and white tigers. It is faster, easier, and cheaper to create a search engine which just uses other people’s indexes. This is called metasearch. I have followed the confusion between search and metasearch for many years. Most people do not understand or care about the difference in approaches. This list illustrates how Web search is perceived by many people.

- Web search is expensive. Years ago when I was an advisor to Bear Stearns (an estimable outfit indeed), my client and I were on a conference call with Prabhakar Raghavan (then a Yahoo senior “search” wizard). He told me and my client, “Indexing the Web costs only $300,000 US.” Sorry, Dr. Raghavan (now the Googler who made the absolutely stellar Google Bard presentation in France after MSFT and OpenAI caught Googzilla with its gym shorts around its ankles in early 2023), you were wrong. That’s why most “new” search systems look for shortcuts. These range from recycling open source indexes to ignoring pesky robots.txt files to paying some money to use assorted also-ran indexes.

Net net: Web search is a complex, fast-moving, and little-understood business. People who know now do other things. The Google means overt search, embedded search, and AI-centric search. Why? That is a darned good question which I have tried to answer in my different writings. No one cares. Just Google it.

PS. Download the article. It is a useful reference point.

Stephen E Arnold, February 22, 2024

OpenAI Embarks on Taking Down the Big Guy in Web Search

February 22, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The Google may be getting up there in Internet years; however, due to its size and dark shadow, taking the big fellow down and putting it out of the game may be difficult. Users are accustomed to the Google. Habits, particularly those which become semi-automatic like a heroin addict’s fiddling with a spoon, are tough to break. After 25 years, growing out of a habit is reassuring to worried onlookers. But the efficacy of wait-and-see is not getting a bent person straight.

Taking down Googzilla may be a job for lots of little people. Thanks, Google ImageFX. Know thyself, right?

I read “OpenAI Is Going to Face an Uphill Battle If It Takes on Google Search.” The write up describes an aspirational goal of Sam AI-Man’s OpenAI system. The write up says:

OpenAI is reportedly building its own search product to take on Google.

OpenAI is jumping into a CRRC already crowded with special ops people. There is the Kagi subscription search. There are Phind.com and You.com. There is a one-man band called Stract and more. A new and improved Yandex is coming. The reliable Swisscows.com is ruminating in the mountains. The ever-watchful OSINT professionals gather search engines like a mother goose. And what do we get? Bing is going nowhere even with Copilot except in the enterprise market where Excel users are asking, “What the H*ll?” Meanwhile the litigating beast continues to capture 90 percent or more of search traffic and oodles of data. Okay, team, who is going to chop block the Google, a fat and slow player at that?

The write up opines:

But on the search front, it’s still all Google all the way. And even if OpenAI popularized the generative AI craze, the company has a long way to go if it hopes to take down the search giant.

Competitors can dream, plot, innovate, and issue press releases. But for the foreseeable future, the big guy is going to push others out of the way.

Stephen E Arnold, February 22, 2024

Search Is Bad. This Is News?

February 20, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Everyone is a search expert. More and more “experts” are criticizing “search results.” What is interesting is that the number of gripes continues to go up. At the same time, the number of Web search options is creeping higher as well. My hunch is that really smart venture capitalists “know” there is money to be made. There was one Google; therefore, another one is lurking under a pile of beer cans in a dorm somewhere.

“One Tech Tip: Ready to Go Beyond Google? Here’s How to Use New Generative AI Search Sites” is a “real” news report which explains how to surf on the new ChatGPT-type smart systems. At the same time, the article makes it clear that the Google may have lost its baseball bat on the way to the big game. The irony is that Google has lots of bats and probably owns the baseball stadium, the beer concession, and the teams. Google also owns the information observatory near the sports arena.

The write up reports:

A recent study by German researchers suggests the quality of results from Google, Bing and DuckDuckGo is indeed declining. Google says its results are of significantly better quality than its rivals, citing measurements by third parties.

A classic he said, she said argument. Objective and balanced. But the point is that Google search is getting worse and worse. Bing does not matter because its percentage of the Web search market is low. DuckDuckGo is a metasearch system like Startpage. I don’t count these as primary search tools; for the most part, they are utilities for searching other people’s indexes.

What’s new with the ChatGPT-type systems? Here’s the answer:

Rather than typing in a string of keywords, AI queries should be conversational – for example, “Is Taylor Swift the most successful female musician?” or “Where are some good places to travel in Europe this summer?” Perplexity advises using “everyday, natural language.” Phind says it’s best to ask “full and detailed questions” that start with, say, “what is” or “how to.” If you’re not satisfied with an answer, some sites let you ask follow up questions to zero in on the information needed. Some give suggested or related questions. Microsoft‘s Copilot lets you choose three different chat styles: creative, balanced or precise.

Ah, NLP or natural language processing is the key, not typing keywords. I want to add that “not typing” means avoiding when possible Boolean operators which return results in which strings occur. Who wants that? Stupid, right?
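
For readers who have never typed one, a Boolean query returns the documents in which the specified strings do or do not occur. Below is a minimal sketch of that style of retrieval over a tiny inverted index, assuming simple `\w+` tokenization and three made-up documents. It does not reproduce any particular engine’s query syntax.

```python
import re
from collections import defaultdict

DOCS = {
    1: "Fast Search enterprise search",
    2: "Google Search Appliance",
    3: "Autonomy enterprise software",
}


def build_index(docs):
    """Map each lowercase token to the set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in re.findall(r"\w+", text.lower()):
            index[token].add(doc_id)
    return index


def boolean_query(index, all_ids, must=(), should=(), must_not=()):
    """AND across `must`, OR across `should`, NOT for `must_not`."""
    result = set(all_ids)
    for term in must:
        result &= index.get(term, set())
    if should:
        result &= set().union(*(index.get(t, set()) for t in should))
    for term in must_not:
        result -= index.get(term, set())
    return sorted(result)


index = build_index(DOCS)
print(boolean_query(index, DOCS.keys(), must=["search"], must_not=["google"]))
```

The precision comes from the user stating the terms and operators explicitly, which is exactly the effort conversational interfaces promise to spare people.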

There is a downside; for instance:

Some AI chatbots disclose the models that their algorithms have been trained on. Others provide few or no details. The best advice is to try more than one and compare the results, and always double-check sources.

What’s this have to do with Google? Let me highlight several points which make clear how Google remains lost in the retrieval wilderness, leading the following boy scout and girl scout troops into the fog of unknowing:

- Google has never revealed what it indexes or when it indexes content. What’s in the “index” and sitting on Google’s servers is unknown except to some working at Google. In fact, the vast majority of Googlers know little about search. The focus is advertising, not information retrieval excellence.

- Since it was inspired by GoTo, Overture, and Yahoo to get into advertising, Google has been on a long, continuous march to monetize that which can be shaped to produce clicks. How far from helpful is Google’s system? Wait until you see AI helping you find a pizza near you.

- Google’s bureaucratic method is what I would call many small rubber boats generally trying to figure out how to get to Advertising Land, but they are caught in a long, difficult storm. The little boats are tough to keep together. How many AI projects are enough? There are never enough.

Net net: The understanding of Web search has been distorted by Google’s observatory. One is looking at information in a Google facility, designed by Googlers, and maintained by Googlers who were not around when the observatory and associated plumbing were constructed. As a result, discussion of search in the context of smart software is distorted.

ChatGPT-type services provide a different entry point to information retrieval. The user still has to figure out what’s right and what’s wonky. No one wants to do that work. Write ups about “new” systems are little more than explanations of why most people will not be able to think about search differently. That observatory is big; it is familiar; and it is owned by Google just like the baseball team, the concessions, and the stadium.

Search means Google. Writing about search means Google. That’s not helpful or maybe it is. I don’t know.

Stephen E Arnold, February 20, 2024

Relevance: Rest in Peace

February 16, 2024

This essay is the work of a dumb dinobaby. No smart software required.

It is Friday, and I am tired of looking at computer generated news with lines like “Insert paragraphs here”. No, don’t bother. The issues I am experiencing with SmartNews and Flipboard are more than annoyances. These, like other aggregation services, are becoming less productive than reading random Reddit posts or the information posted on Blackmagic forum boards. Everyone is trying to find a way to make a buck before the bank account says, “Yo, transaction denied.”

Marketers will find that buying traffic enables many opportunities. Thanks MSFT Copilot whatever. Good enough.

I read “Meta Is Passing on the Apple Tax for Boosted Posts to Advertisers.” What’s the big point in the pontificating online service? How about this passage:

Meta says those who want to boost posts through its iOS apps will now need to add prepaid funds and pay for them before their boosted posts are published. Meta will charge an extra 30 percent to cover Apple’s transaction fee for preloading funds in iOS as well.

My interpretation is: If you want traffic, you will pay for it. And you will pay other fees as well. And if you don’t like it, give those free press release services a whirl.

So what?

- The pay-for-traffic model is now the best and fastest way to get traffic or clicks. Free rides, I think, have been replaced with tolls.

- Next up will be subscriptions to those who want traffic. Just pay a lump sum and you will get traffic. The traffic may be worthless, but for those who like to play roulette, you may get a winner. Remember the house owns zero and double zero plus whatever you lose. Great deal, right?

- The popular click is likely to be shaped, weaponized, or disinformationized.

Net net: Relevance will be quite difficult to define outside of a transactional relationship. Will this matter? Nope, because most users accept what a service returns as relevant, accurate, and reliable.

Stephen E Arnold, February 16, 2024

Hewlett Packard and Autonomy: Search and $4 Billion

February 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

More than a decade ago, Hewlett Packard acquired Autonomy plc. Autonomy was one of the first companies to deploy what I call “smart software.” The system used Bayesian methods, still quite new to many in the information retrieval game in the 1990s. Autonomy kept its method in a black box assigned to a company from which Autonomy licensed the functions for information processing. Some experts in smart software overlook BAE Systems’ activity in the smart software game. That effort began in the late 1990s if my memory is working this morning. Few “experts” today care, but the dates are relevant.

Between the date Autonomy opened for business in 1996 and HP’s decision to purchase the company for about $11 billion in 2011, there was ample evidence that companies engaged in enterprise search and allied businesses like legal work processes or augmented magazine advertising were selling for much less. Most of the companies engaged in enterprise search simply went out of business after burning through their funds; for example, Delphes and Entopia. Others sold at what I thought were inflated or generous prices; for example, Vivisimo to IBM for about $28 million and Exalead to Dassault for 135 million euros.

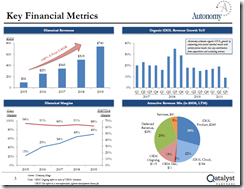

Then along comes HP and its announcement that it purchased Autonomy for a staggering $8 billion. I attended a search-related event when one of the presenters showed this PowerPoint slide:

The idea was that Autonomy’s systems generated multiple lines of revenue, including a cloud service. The key fact in the presentation was that the search-and-retrieval unit was not the revenue rocket ship. Autonomy had shored up its search revenue by acquisition; for example, Soundsoft, Virage, and Zantaz. The company also experimented with bundling software, services, and hardware. But the Qatalyst slide depicted a rosy future because of Autonomy management’s vision and business strategy.

Did I believe the analysis prepared by Frank Quattrone’s team? I accepted some of the comments about the future, and I was skeptical about others.

In the period from 2006 to 2012, it was becoming increasingly difficult to overcome some notable failures in enterprise search. The poster child for the problems was Fast Search & Transfer. In a nutshell, Fast Search retreated from Web search, shutting down its Google competitor AllTheWeb.com. The company’s engaging founder John Lervik told me that the future was enterprise search. But some Fast Search customers were slow in paying their bills because of the complexity of tailoring the Fast Search system to a client’s particular requirements.

I recall being asked to comment about how to get the Fast Search system to work because my team used it for the FirstGov.gov site (now USA.gov) when the Inktomi solution was no longer viable due to procurement rule changes. Fast Search worked, but it required the same type of manual effort that the Vivisimo system required. Search-and-retrieval for an organization is not a one-size-fits-all thing, a fact Google learned with the spectacular failure of its truly misguided Google Search Appliance product. Fast Search ended with an investigation related to financial missteps, and in 2008 Microsoft stepped in and bought the company for about $1.2 billion. I thought that was a wild and crazy number, but I was one of the lucky people who managed to get Fast Search to work and knew that most licensees would not have the resources or talent I had at my disposal. Working for the White House has some benefits, particularly when Fast Search for the US government was part of its tie-up with AT&T. Thank goodness for my counterpart Ms. Coker. But $1.2 billion for Fast Search? That, in my opinion, was absolutely bonkers. There were better and cheaper options, but Microsoft did not ask my opinion until after the deal was closed.

Everyone in the HP Autonomy matter keeps saying the same thing like an old-fashioned 78 RPM record stuck in a groove. Thanks, MSFT Copilot. You produced the image really “fast.” Plus, it is good enough like most search systems.

What is the Reuters news story adding to this background? Nothing. The reason is that the news story focuses on one factoid: “HP Claims $4 Billion Losses in London Lawsuit over Autonomy Deal.” Keep in mind that HP paid $11 billion for Autonomy plc. Keep in mind that was roughly nine times what Microsoft paid for Fast Search. Now HP wants $4 billion. Stripping away everything but enterprise search, I could accept that HP could reasonably pay $1.2 billion for Autonomy. But $11 billion made Microsoft’s purchase of Fast Search look less nutso. Because, despite technical differences, Autonomy and Fast Search were two peas in a pod. The similarities were significant. The differences were technical. Neither company was poised to grow as rapidly as their stakeholders envisioned.

When open source search options became available, these quickly became popular. Today if one wants serviceable search-and-retrieval for an enterprise application one can use a Lucene / Solr variant or pick one of a number of other viable open source systems.

But HP bought Autonomy and overpaid. Furthermore, Autonomy had potential, but the vision of Mike Lynch and the resources of HP were needed to convert the promise of Autonomy into a diversified information processing company. Autonomy could have provided high value solutions to the health and medical market; it could have become a key player in the policeware market; it could have leveraged its legal software into a knowledge pipeline for eDiscovery vendors to license and build upon; and it could have expanded its opportunities to license Autonomy stubs into broader OpenText enterprise integration solutions.

But what did HP do? It muffed the bunny. Mr. Lynch exited and set up a promising cyber security company and spent the rest of his time in courts. The Reuters’ article states:

Following one of the longest civil trials in English legal history, HP in 2022 substantially won its case, though a High Court judge said any damages would be significantly less than the $5 billion HP had claimed. HP’s lawyers argued on Monday that its losses resulting from the fraud entitle it to about $4 billion.

If I were younger and had not written three volumes of the Enterprise Search Report and a half dozen books about enterprise search, I would write about the wild and crazy years for enterprise search, its hits, its misses, and its spectacular failures (Yes, Google, I remember the Google Search Appliance quite well.) But I am a dinobaby.

The net net is HP made a poor decision and now years later it wants Mike Lynch to pay for HP’s lousy analysis of the company, its management missteps within its own Board of Directors, and its decision to pay $11 billion for a company in a sector in which at the time simply being profitable was a Herculean achievement. So this dinobaby says, “Caveat emptor.”

Stephen E Arnold, February 12, 2024

The Next Big Thing in Search: A Directory of Web Sites

February 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

In the early 1990s, an entrepreneur with whom I had worked in the 1980s convinced me to work on a directory of Web sites. Yahoo was popular at the time, but my colleague had a better idea. The good news is that our idea worked and the online service we built became part of the CMGI empire. Our service was absorbed by one of the leading finding services at the time. Remember Lycos? My partner and I do. Now the Web directory is back decades after those original Yahooligans and our team provided a useful way to locate a Web site.

“Search Chatbots? Pah, This Startup’s Trying on Yahoo’s Old Outfit of Web Directories” presents information about the utility of a directory of Web sites and captures some interesting observations about the findability service El Toco.

The innovator driving the directory concept is Thomas Chopping, a “UK based economist.” He shared several observations in a recent article published by the British outfit The Register; for example:

“During the decades since it launched, we’ve been watching Google steadily trying to make search more predictive, by adding things like autocomplete and eventually instant answers,” Chopping told The Register. “This has the byproduct of increasing the amount of time users spend on their site, at the expense of visiting the underlying sources of the data.”

The founder of El Toco also notes:

It’s impossible to browse with conversational-style search tools, which are entirely focused on answering questions. “Right now, this is playing into the hands of Meta and TikTok, because it takes so much effort to find good quality websites via search engines that people stopped bothering.”

El Toco wants to facilitate browsing, and the model is a directory listing. The user can browse and click. The system displays a Web site for the user to scan, read, or bookmark.

Another El Toco principle is:

“We don’t need the user’s personal data to work out which results to show, because the user can express this on their own. We don’t need AI to turn the search into a conversation, because this can be done with a few clicks of the user interface.”

The economist-turned-entrepreneur points out:

“Charging users for Web search is a model which clearly doesn’t work, thanks to Neeva for demonstrating that, so we allow adverts but if the users care they can go into a menu and simply switch them off.”

Will El Toco gain traction? My team and I have been involved in information retrieval for decades, from indexing information about nuclear facilities to advising an AI search startup a few months ago. I have learned that predicting what will become the next big thing in findability is quite difficult.

A number of interesting Web search solutions are available. Some are niche-focused like Biznar. Others are next-generation “phinding” services like Phind.com. Others are metasearch solutions like iSeek. Some are less crazy Google-style systems like Swisscows. And there are more coming every day.

Why? Let me share several observations or “learnings” from a half century of working in the information retrieval sector:

- People have different information needs and a one-size-fits-all search system is fraught with problems. One person wants to search for “pizza near me”. Another wants information about Dark Web secure chat services.

- Almost everyone considers themselves a good or great online searcher. Nothing could be further from the truth. Just ask the OSINT professionals at any intelligence conference.

- Search companies with some success often give in to budgeting for a minimally viable system, to selling traffic or user data, and to dark patterns in pursuit of greater revenue.

- Finding information requires effort. Convenience, however, is the key feature of most finding systems. Microfilm is not convenient; therefore, it sucks. Looking at research data takes time and expertise; therefore, old-fashioned work sucks. Library work involving books is not for everyone; therefore, library research sucks. Only a tiny percentage of online users want to exert significant effort finding, validating, and making sense of information. Most people prefer to doom scroll or watch dance videos on a mobile device.

Net net: El Toco is worth a close look. I hope that editorial policies, human curation, and frequent updating become the new normal. I am just going to remain open minded. Information is an extremely potent tool. If I tell you human teeth can explode, do you ask for a citation? Do you dismiss the idea because of your lack of knowledge? Do you begin to investigate the effects of high voltage on the body of a person who works around a 133 kV transmission line? Do you dismiss my statement because I am obviously making up a fact because everyone knows that electricity is 115 to 125 volts?

Unfortunately, only subject matter experts operating within an editorial policy and given adequate time can figure out if a scientific paper contains valid data or made-up stuff like that allegedly crafted by the former presidents of Harvard and Stanford and probably by faculty at the university closest to your home.

Our 1992 service had a simple premise. We selected Web sites which contained valid and useful information. We did not list porn sites, stolen software repositories, and similar potentially illegal or harmful purveyors of information. We provided the sites our editors selected with an image file that was our version of the old Good Housekeeping Seal of Approval.

The idea was that in the early days of the Internet and Web sites, a parent or teacher could use our service without too much worry about setting off a porn storm or a parent storm. It worked, we sold, and we made some money.

Will the formula work today? Sure, but excellence and selectivity have been key attributes for decades. Give El Toco a look.

Stephen E Arnold, February 12, 2024