TikTok Sells Books. Who Knew? Amazon?

May 20, 2022

And some were concerned social media had made books obsolete. To the contrary, reports BBC News, “TikTok Helps UK Book Sales Hit Record Levels, Publishers Association Says.” The UK’s Publishers Association was pleased to see sales of printed books in that country rise by 5% last year. It is especially impressive, notes the organization’s chief executive Stephen Lotinga, given bookstores were still closed for the first quarter of 2021. He credits a TikTok trend with at least part of the increase. The article reports:

“The organization said four of the top five young adult bestsellers in 2021 had been driven by the BookTok trend. … Publishers Association chief executive Stephen Lotinga said viral videos on platforms like TikTok and YouTube had been ‘really significant’ in encouraging readers to discover books. ‘Anecdotally, we’ve had lots of individual booksellers talking about the fact that they’re having lots of young people coming into their book stores, talking about books that they have heard about on TikTok and asking for them,’ he said. ‘It is having an impact on the number of books sold, but the shape of what’s being sold is changing as well. Throughout the pandemic period, we saw people increasingly buying what we call backlist books, which are books that have been published in the past.’ Many of the titles that have taken off on TikTok are several years old rather than brand new releases.”

BookTok, we learn, cares less about release dates than about unexpected or dramatic endings. At least, that appears to be the current trend. The bump in print-book sales was accompanied by a 1% dip in digital sales. Interestingly, audiobook downloads beat out both with a 14% increase.

Cynthia Murrell, May 20, 2022

China Targets Low-Profile Social Network Douban for Censorship

May 20, 2022

China continues to do one of the things it does best: control the flow of information within its borders. Rest of World reports, “China’s Most Chaotic Social Network Survived Beijing’s Censors—Until Now.” Writer Viola Zhou describes the low-profile site:

“The chaotic Chinese social network Douban never looked for fame; it was designed for people with niche obsessions and an urge to talk about them. … Douban began as a review site for books, film, and music: the interests of its charismatic founder, Ah Bei. It quickly grew into a social network of millions of users.”

Those users bonded around shared interests, both playful and serious. To keep the site rooted in a spirit of community, Douban has resisted both large-scale advertising and (unlike other social networks) government propaganda accounts. Since its launch in 2005, Douban had managed to avoid scrutiny by China’s fervent censors. Until now. Zhou continues:

“In March, a government censorship task force was set up at the company’s headquarters. Over the past year, some of its most popular groups have shut down, its app was scrubbed from major Chinese stores, and on April 14, Douban froze a significant traffic driver, the gossip forum Goose Group, though it’s unclear whether each of those actions were the decisions of the website or government regulators. As China’s tech crackdown seeps into all parts of online life, the ability to organize around something as mild as shared interests is being throttled by Beijing’s censors. Rest of World spoke to more than a dozen early Douban employees, prominent group admins, and current users, most of whom requested anonymity in order to freely discuss Chinese censorship. For them, the reining-in of Douban signals that its creative, tight-knit communities have become an unacceptable political risk, as the Chinese government grows increasingly vigilant about any form of civil gathering.”

Yes, it seems citizens coming together over any topic, no matter how far from political or social matters, is a threat. The pressure on Douban is said to be part of the government’s campaign against a scourge dubbed “fan circle chaos.” Colorful. Some users hold out hope their beloved groups will someday be reinstated. Meanwhile, founder Ah Bei’s account has been inactive since 2019. See the write-up for more about Douban and some of its forums that have been shuttered.

Cynthia Murrell, May 20, 2022

Some Twitter Twaddle

May 2, 2022

Twitter matters to a modest number of self-promoters. Nah, I won’t name those who are laboring away in the self-branding rock climbing event. I think software posts a tweet when one of my research team cranks out a story for the Beyond Search blog. I suppose I should know, but I am not a digital rock climber.

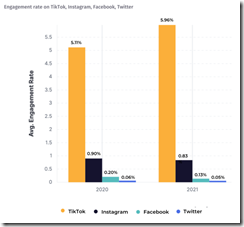

I read “New Study: TikTok Industry Engagement Benchmarks for 2022.” Since I don’t know much about Twitter “twaddle,” I zeroed in on a factoid in the write up that explores the much-desired notion of “engagement.”

The TikTok outfit with some connection to China has an engagement score of six percent. I am not sure what the other 94 percent means. Maybe those folks are in non-engagement mode? If the data in the write up are on the money, other big-name social services are breathing the fumes from the Chinese coal-burning video platform; to wit:

- Facebook: 0.83 percent

- Instagram: 0.13 percent

- Twitter: 0.05 percent

The write up includes a nifty chart:

What’s interesting is that an outfit which I thought was a Big Dog (YouTube) was not included.

Will the rocket guy with trendy golf carts jazz up the Tweeter’s engagement score? I find it amusing that the wall climbers avoid TikTok, yet express considerable delight when Top Tweeters meet at the gym to talk about their achievements.

If the other social media companies are not delivering “engagement,” will CNN Plus, or whatever it was, make a comeback?

Perhaps some Chinese thinking would help? Here’s an example for the Tweeters to ponder.

Navigate to “Tiktok Dominates in Tech Earnings, Digital Ad Giants Struggle to Compete.” Consider this passage:

Based on Insider Intelligence’s forecast, TikTok will garner 755 million monthly users globally in 2022, and its market share in social networking could top 20% this year or nearly 25% by 2024. The short-video-based platform’s immense popularity is exactly the reason why it is making the competition field harder for other platforms.

Maybe the Tweeters should TikTok? Maybe traditional media companies should emulate TikTok?

Stephen E Arnold, May 2, 2022

NCC April TikTok: Yeah, Not Good for Teenies

April 29, 2022

We wonder whether China will more aggressively exploit TikTok’s ability to influence. The New York Post describes “How TikTok Has Become a Dangerous Breeding Ground for Mental Disorders.” Apparently, TikTok videos discussing mental health conditions are trending, especially among teen girls. This would be a good thing if they were all produced by medical experts, contained good information, and offered guidance for seeking professional help when warranted. Instead, influencers, many of whom are teenagers themselves, purport to help others self-diagnose their mental conditions. As one might imagine, this rarely goes well. Writer Rikki Schlott tells us:

“After nearly two years of lockdowns and school closures, lonely teens are spending more time online, and many inevitably come across mental health content on TikTok. When they do, the platform’s algorithm kicks in, serving suggestible young girls even more videos on the topic. While mental health awareness is surely a good thing, well-meaning influencers are inadvertently harming young, impressionable viewers, many of whom seem to be incorrectly self-diagnosing with disorders or suddenly manifesting symptoms because they are now aware of them.”

The author continues, expanding her warning to include social media in general:

“Eating disorders have also been shown to spread within friend groups. As a member of Gen Z, I’ve watched firsthand what social media has done to a generation of young women — it even left behind self-harm scars on many of my peers’ wrists. I know a terrifying number of peers who have self harmed, many of whom were habitual social media users. Rates of depression have doubled among teen girls between 2009 and 2019, and self-harm hospital admissions have soared 100 percent for girls aged 10 to 14 during the rise of social media between 2010 and 2014, the most recently available data.”

Clearly, a solution is needed, but Schlott knows where we cannot turn: politicians are too “clueless” to craft effective regulations, and the platforms are too greedy to do anything about it. Instead, it falls to parents to take responsibility for their teens’ media consumption, as difficult as that may be. Citing Dr. Jean Twenge, a psychology professor who has written on the subject, the write-up advises a few precautions. First, parents must recognize that, unlike playing age-appropriate games or texting friends on their devices, social media is completely inappropriate for children, tweens, and young teens. The platforms themselves officially limit accounts to those 13 and older, but Twenge suggests holding off until a child is 16 if possible. She also proposes a household rule whereby everyone, including parents, stops using electronic devices an hour before bedtime and leaves their phones outside their bedrooms at night. Yes, parents too; after all, leading by example is often the only way to convince teens to comply.

Cynthia Murrell, April 29, 2022

An Ad Agency Decides: No Photoshopping of Bodies or Faces for Influencers

April 11, 2022

Presumably Ogilvy will exempt retouched food photos (what? hamburgers from a fast food outlet look different from the soggy burger in a box). Will Ogilvy outlaw retouched vehicle photographs (what? the paint on your Toyota RAV looks different from the RAV’s in print and online advertisements)? Will models from a zippy London or Manhattan agency look different from the humanoid doing laundry at 11:15 on a Tuesday in an Earl’s Court laundrette (what? a model without makeup, retouching, and slick lighting?). Yes, Ogilvy has standards. See this CBS News item, which is allegedly accurate. Overbilling is not Photoshopping. Overbilling is a different beastie.

I think I know the answer to my doubts about the scope of this ad edit as reported in “Ogilvy Will No Longer Work with Influencers Who Edit Their Bodies or Faces for Ads.” The write up reports:

Ogilvy UK will no longer work with influencers who distort or retouch their bodies or faces for brand campaigns in a bid to combat social media’s “systemic” mental health harms.

I love the link to mental health harms. Here’s a quote which I find amusing:

The ban applies to all parts of the Ogilvy UK group, which counts the likes of Dove among its clients. Dove’s global vice president external communications and sustainability, Firdaous El Honsali, came out in support of the policy. “We are delighted to see our partner Ogilvy tackling this topic. Dove only works with influencers that do not distort their appearance on social media – and together with Ogilvy and our community of influencers, we have created several campaigns that celebrate no digital distortion,” El Honsali says.

Several observations:

- Ogilvy is trying to adjust to the new world of selling because influencers don’t think about Ogilvy. If you want an influencer, my hunch is that you take what the young giants offer.

- Like newspapers, ad agencies are trapped in models from the heyday of broadsheets sold on street corners. By the way, how are those with old business models doing in the zip zip TikTok world?

- Talking about rules is easy. Enforcing them is difficult. I bet the PowerPoint used in the meeting to create these rules for influencers was a work of marketing art.

Yep, online advertising, consolidation of agency power, and the likes of Amazon, Facebook (Zuckbook), and YouTube illustrate one thing: The rules are set or left fuzzy by the digital platforms, not the intermediaries.

And the harm thing? Yep, save the children one influencer at a time.

Stephen E Arnold, April 11, 2022

Twitter and a Loophole? Unfathomable

April 6, 2022

Twitter knows Russia is pushing false narratives about the war in Ukraine. That is why it now refuses to amplify tweets from Russian state-affiliated media outlets like RT or Sputnik. However, the platform is not doing enough to restrain the other hundred-some Russian government accounts, according to the BBC News piece, “How Kremlin Accounts Manipulate Twitter.” Reporter James Clayton cites QUT Digital Media Research Centre‘s Tim Graham as he writes:

“Intrigued by this spider web of Russian government accounts, Mr Graham – who specializes in analyzing co-ordinated activity on social media – decided to investigate further. He analyzed 75 Russian government Twitter profiles which, in total, have more than 7 million followers. The accounts have received 30 million likes, been retweeted 36 million times and been replied to 4 million times. He looked at how many times each Twitter account retweeted one of the other 74 profiles within an hour. He discovered that the Kremlin’s network of Twitter accounts work together to retweet and drive up traffic. This practice is sometimes called ‘astroturfing’ – when the owner of several accounts uses the profiles they control to retweet content and amplify reach. ‘It’s a coordinated retweet network,’ Mr Graham says. ‘If these accounts weren’t retweeting stuff at the same time, the network would just be a bunch of disconnected dots. … They are using this as an engine to drive their preferred narrative onto Twitter, and they’re getting away with it,’ he says. Coordinated activity, using multiple accounts, is against Twitter’s rules.”

Twitter is openly more lenient on tweets by government officials under what it calls “public interest exceptions.” Even so, we are told there are supposed to be no exceptions for coordinated behavior. The BBC received no response from Twitter officials when it asked them about Graham’s findings. Clayton generously notes it can be difficult to prove content is false amid the chaos of war, and the platform has been removing claims as they are proven false. He also notes Facebook and other social media platforms have a similar Russia problem. The article allows that Twitter may eventually ban Kremlin accounts entirely, as it banned Donald Trump in January 2021. Perhaps.
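The BBC piece describes Graham’s method only in outline: for each of the 75 accounts, count how often it retweeted one of the other 74 within an hour. A minimal Python sketch of that kind of counting might look like the following. It assumes a hypothetical `Retweet` record with posting and retweet timestamps, reads “within an hour” as “within an hour of the original tweet,” and uses a hypothetical `min_count` cutoff for drawing an edge. The article does not say how Graham stored his data or where he set any threshold, so treat this as an illustration, not his actual analysis.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Retweet:
    """Hypothetical record of one retweet event."""
    retweeter: str           # account that did the retweeting
    author: str              # account that posted the original tweet
    posted_at: datetime      # when the original tweet was posted
    retweeted_at: datetime   # when the retweet happened


def coordinated_edges(retweets, accounts, window=timedelta(hours=1), min_count=2):
    """Count, for each ordered (retweeter, author) pair inside the watched set,
    how many retweets landed within `window` of the original post, and keep
    only pairs reaching the (hypothetical) `min_count` threshold."""
    watched = set(accounts)
    counts = Counter()
    for rt in retweets:
        # Ignore anything that involves an account outside the watched set.
        if rt.retweeter not in watched or rt.author not in watched:
            continue
        # Ignore self-retweets; only one account boosting another counts.
        if rt.retweeter == rt.author:
            continue
        lag = rt.retweeted_at - rt.posted_at
        if timedelta(0) <= lag <= window:
            counts[(rt.retweeter, rt.author)] += 1
    return {pair: n for pair, n in counts.items() if n >= min_count}


# Toy data with made-up account names.
t0 = datetime(2022, 3, 1, 12, 0)
sample = [
    Retweet("acct_a", "acct_b", t0, t0 + timedelta(minutes=5)),
    Retweet("acct_a", "acct_b", t0 + timedelta(hours=1), t0 + timedelta(hours=1, minutes=40)),
    Retweet("acct_c", "acct_b", t0, t0 + timedelta(hours=3)),       # too slow to count
    Retweet("acct_c", "outsider", t0, t0 + timedelta(minutes=1)),   # author not watched
]
print(coordinated_edges(sample, ["acct_a", "acct_b", "acct_c"]))
# {('acct_a', 'acct_b'): 2}
```

Pairs that survive the filter become edges in a retweet graph. Without such edges, in Graham’s phrasing, “the network would just be a bunch of disconnected dots.”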

Cynthia Murrell, April 6, 2022

California: Knee Jerk Reflex Decades After the Knee Cap Whack

March 23, 2022

Talk about reflexes. I read “California Bill Would Let Parents Sue Social Media Companies for Addicting Kids.” [You will have to pay to read the original and wordy write up.] The main idea is that an attentive parent with an ambulance chaser or oodles of cash can sue outfits like the estimable Meta Zuck thing, the China-linked TikTok, or the “we do good” YouTube and other social media entities. (No, I don’t want to get into definitions. I will leave that to the legal eagles.) The write up states:

Assembly Bill 2408, or the Social Media Platform Duty to Children Act, was introduced by Republican Jordan Cunningham of Paso Robles and Democrat Buffy Wicks of Oakland with support from the University of San Diego School of Law Children’s Advocacy Institute. It’s the latest in a string of legislative and political efforts to crack down on social media platforms’ exploitation of their youngest users.

I like the idea that commercial enterprises should not addict child users. I want to point out that the phrasing is ambiguous. I assume the real news outfit means content consumers under a certain age, not the less positive meaning of the phrase.

I think the legislation is a baby step in a helpful direction. But it has taken decades for the toddler to figure out how to find the digital choo choo train. The reflex reaction seems to lag as well. Whack. And years later a foot moves forward.

Stephen E Arnold, March 23, 2022

TikTok: Child Harm?

March 10, 2022

I will keep this brief. Navigate to “TikTok under Investigation in US over Harms to Children.” The article explains why an Instagram probe is now embracing TikTok. From my point of view, this “harm” question must be addressed. Glib statements like “Senator, I will send you a report” have allowed certain high technology firms to skate with the wind at their backs. Now the low-friction surface is cracking. The “environment” of questioning is changing. Will the digital speed skaters fall into chilly water or, with the help of legal eagles, glide over the danger spots? Kudos to the US attorneys general who, like me, believe that more than cute comments are needed. Note: I will be speaking at the 2022 National Cyber Crime Conference. The professionals at the Massachusetts Attorney General’s Office are crafting another high-value program.

Stephen E Arnold, March 10, 2022

Who Is the Bigger Disruptor: A Twitch Streamer or a Ring Announcer?

February 17, 2022

One thing people can agree on is that there is a lot of misinformation surrounding COVID-19. What counts as “misinformation” depends on an individual’s beliefs. The facts remain, however, that COVID-19 is real, vaccines do not contain GPS chips, and the pandemic has been one big pain. Whenever it is declared that we are in a post-pandemic world, misinformation will be regarded as one of the biggest fallouts, with an ongoing ripple effect.

The Verge explores why one controversial misinformation spreader will be discussed for years to come: “The Joe Rogan Controversy Is What Happens When You Put Podcasts Behind A Wall.” Misinformation supporters, among them conspiracy theorists, used to be self-contained in their own corner of the globe, but they erupted out of their crazy zone like Vesuvius engulfing Pompeii. Rogan’s faux pas led Spotify to remove over seventy episodes of his show rather than deplatform him.

Other podcast platforms celebrated the woes of a popular Spotify show and attempted to sell more subscriptions for their own content. These platforms should not be celebrating, though. Spotify holds the exclusive rights to Rogan’s show, and his controversy has effectively ruined the platform, but the same thing could happen to Spotify’s rivals at any time. Rogan is not the only loose cannon with a podcast, and it does not take much for anything to be considered offensive, then canceled. The rival platforms might be raking in more dollars right now, but:

“We’re moving away from a world in which a podcast player functions as a search engine and toward one in which they act as creators and publishers of that content. This means more backlash and room for questions like: why are you paying Rogan $100 million to distribute what many consider to be harmful information? Fair questions!

This is the cost of high-profile deals and attempts to expand podcasting’s revenue. Both creators and platforms are implicated in whatever content’s distributed, hosted, and sold, and both need to think clearly about how they’ll handle inevitable controversy.”

There is probably an argument to be made about freedom of speech throughout this controversy, but there is also the need to protect people from harm. It is one large gray zone with only a tightrope to walk across it.

So Amouranth or Mr. Rogan? Jury’s out.

Whitney Grace, February 17, 2022

A News Blog Complains about Facebook Content Policies

January 20, 2022

Did you know that the BMJ started life in 1840 as the Provincial Medical and Surgical Journal and, after some organizational and administrative cartwheels, emerged in 1857 as the British Medical Journal? Now the $64 question: “Did you know that Facebook appears to consider the BMJ a Web log or blog?” Quite a surprise to me and probably to quite a few others who have worked in the snooty world of professional publishing.

The most recent summary of the dust up between the Meta Zuck outfit and the “news blog” BMJ appears in “Facebook Versus The BMJ: When Fact Checking Goes Wrong.” The write up contains a number of Meta gems, and a read of the “news blog” item is a good use of time.

I want to highlight one item from the write up:

Cochrane, the international provider of high quality systematic reviews of medical evidence, has experienced similar treatment by Instagram, which, like Facebook, is owned by the parent company Meta. A Cochrane spokesperson said that in October its Instagram account was “shadow banned” for two weeks, meaning that “when other users tried to tag Cochrane, a message popped up saying @cochraneorg had posted material that goes against ‘false content’ guidelines” (fig 1). Shadow banning may lead to posts, comments, or activities being hidden or obscured and stop appearing in searches. After Cochrane posted on Instagram and Twitter about the ban, its usual service was eventually restored, although it has not received an explanation for why it fell foul of the guidelines in the first place.

I like this shadow banning thing.

How did the Meta Zuck respond? According to the “news blog”:

Meta directed The BMJ to its advice page, which said that publishers can appeal a rating directly with the relevant fact checking organization within a week of being notified of it. “Fact checkers are responsible for reviewing content and applying ratings, and this process is independent from Meta,” it said. This means that, as in The BMJ’s case, if the fact checking organization declines to change a rating after an appeal from a publisher, the publisher has little recourse. The lack of an independent appeals process raises concerns, given that fact checking organizations have been accused of bias.

There are other interesting factoids in the “news blog’s” write up.

Quickly, several observations:

- Opaque actions plague the “news blog” (the British Medical Journal) and other luminaries; for example, the plight of the esteemed performer Amouranth of the Inflate-a-Pool on Amazon Twitch. Double talking and fancy dancing from Meta- and Amazon-type outfits just call attention to the sophomoric, Ted Mack Amateur Hour approach to an important function of a publicly traded organization with global influence.

- A failure of “self regulation” can cause airplanes to crash and financial disruption to occur. Now knowledge is the likely casualty of a lack of backbone and an ethical compass. Right now I am thinking of an ethics-free, shape-shifting, octopus-like character with zero interest in other creatures except in their function as money generators.

- A combination of “act now, apologize if necessary” has fundamentally altered the social contract among corporations, governments, and individuals.

So now the BMJ (founded in 1840) has been morphed into a “news blog” pitching cow doody?

Perhaps imposed change is warranted? Adulting is long overdue at a certain high-tech outfit and a number of others of this ilk.

Stephen E Arnold, January 20, 2022