Online and In Control: WhatsApp Fingered

August 17, 2021

I read an interesting article called “Did America just lose Afghanistan because of WhatsApp?” I am not sure the author is going to become the TikTok sensation of policy analysis. The point of view is interesting, and it may harbor some high-value insight.

The write up states:

Open source reporting shows that rather than rocking up and going toe to toe with the Afghan national army, they appear to have simply called everyone in the entire country, instead, told them they were in control, and began assuming the functions of government as they went:

The Taliban let the residents of Kabul know they were in control through WhatsApp, gave them numbers to call if they ran into any problems.

The article contains other references to Taliban communications via social media like Twitter and WhatsApp. The author notes:

WhatsApp is an American product. It can be switched off by its parent, Facebook, Inc, at any time and for any reason. The fact that the Taliban were able to use it at all, quite apart from the fact that they continue to use it to coordinate their activities even now as American citizens’ lives are imperiled by the Taliban advance which is being coordinated on that app, suggests that U.S. military intelligence never bothered to monitor Taliban numbers and never bothered to ask Facebook to ban them. They probably still haven’t even asked Facebook to do this, judging from the fact that the Taliban continues to use the app with impunity. This might explain why Afghanistan collapsed as quickly as it did.

The article makes another statement which is thought-provoking; to wit:

And as a result, they [the Taliban] took Afghanistan with almost no conflict. I suspect this is because they convinced everyone they would win before they showed up.

The write up contains links and additional detail. Consult the source document for this information. I am not sure how long the post will remain up, nor do I anticipate that it will receive wide distribution.

Stephen E Arnold, August 17, 2021

Biased? Abso-Fricken-Lutely

August 16, 2021

To be human is to be biased. Call it a DNA thing or blame it on a virus from a pangolin. In the distant past, few people cared about biases. Do you think those homogeneous nation states emerged because some people just wanted to invent the biathlon?

There’s a reasonably good rundown of biases in A Handy Guide to Cognitive Biases: Short Cuts. One is able to scan biases by an alphabetical list (a bit of a rarity these days) or by category.

Some of the individual biases may give readers heartburn; for example, the base rate neglect fallacy. The examples are familiar to some of the people with whom I have worked over the years. These clear thinkers misjudge the probability of an event by ignoring background information. I would use the phrase “ignoring context,” but I defer to the team which aggregated and assembled the online site.
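A worked example may make the base rate point concrete. The sketch below applies Bayes’ rule with made-up numbers: a 1 percent base rate, a 99 percent hit rate, and a 5 percent false alarm rate. The function name `posterior` and all of the figures are illustrative assumptions, not something taken from the guide.

```python
def posterior(base_rate: float, hit_rate: float, false_alarm_rate: float) -> float:
    """Bayes' rule: probability the event is real given a positive signal.

    base_rate        -- how common the event is before any evidence (the "background")
    hit_rate         -- P(positive signal | event is real)
    false_alarm_rate -- P(positive signal | event is not real)
    """
    # Total probability of seeing a positive signal at all
    p_signal = base_rate * hit_rate + (1 - base_rate) * false_alarm_rate
    return base_rate * hit_rate / p_signal


# Hypothetical numbers: rare event, accurate-looking test
p = posterior(0.01, 0.99, 0.05)
print(f"{p:.3f}")  # 0.167
```

Someone who ignores the 1 percent base rate might guess 99 percent. The arithmetic says roughly one in six.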

Worth a look. Will most people absorb the info and adjust? Will the mystery of Covid’s origin be resolved in a definitive, verifiable way? Yeah, maybe.

Stephen E Arnold, August 16, 2021

Traditional Sports Media: Sucking Dust and Breathing Fumes?

August 12, 2021

The TikTok video format is becoming a norm core channel. I want to mention that Amazon Twitch is having a new media moment as well. I read “Lionel Messi’s Twitch App Interview Shows How Social Media Is Conquering Sports.” Note that this link is generated by DailyHunt and the story itself is output by smart software; thus, the link may be dead, and there’s not much I can do to rectify the situation.

The story contained this statement, which may be spot on or just wild and crazy Internet digital baloney:

Spanish influencer Ibai Llanos chatted with Lionel Messi on Amazon.com Inc.’s streaming platform Twitch after the world’s best-paid athlete signed with French soccer club Paris Saint Germain from Barcelona.

Here’s the kicker (yep, Messi-esque I know):

More than 317,000 people watched the exclusive interview, the kind of prestigious content that would often be sold to the highest bidder for TV broadcast in different territories. Llanos was introduced to Messi by Sergio Aguero, a fellow Barcelona player and video-game enthusiast who is friendly with the social media celebrity. Sports viewing is shifting steadily onto streaming platforms, and even overtaking traditional broadcast TV in the Asia Pacific region, according to GlobalWebIndex.

What? Twitch? Who is the star? Messi? The write up states:

Soccer clubs are eager to tap this new revenue source after they were hit hard by the coronavirus pandemic, especially as they need to win over younger audiences who enjoy video gaming just as much as traditional sports. Llanos has drawn 7 million Twitch followers since he started out commenting on esports tournaments from his home. He’s brought a humorous commenting style to everything from toy-car races to chess games. He’s now becoming a sports entrepreneur in his own right, collaborating with Barcelona’s Gerard Pique to broadcast the Copa America soccer competition in Spain. Llanos streamed a top-tier Spanish game for the first time in April under a deal between the Spanish league and TV rights owner Mediapro.

Observations I jotted down as I worked through this “smart software” output:

- Amazon Twitch plays a part in this shift to an influencer, streaming platform, and rights holder model

- Llanos, the pivot point, has direct access and channel options

- Eyeballs clump around the “force” of the stream, the personalities, and those who want to monetize this semi-new thing.

Big deal? Well, not for me, but for those with greyhounds in the race, yep. Important if true.

Stephen E Arnold, August 12, 2021

Thailand Does Not Want Frightening Content

August 6, 2021

The prime minister of Thailand is Prayut Chan-o-cha. He is a retired Royal Thai Army officer, and he is not into scary content. What’s the fix? “PM Orders Internet Blocked For Anyone Spreading Info That Might Frighten People” reported:

Prime Minister Prayut Chan-o-cha has ordered internet service providers to immediately block the internet access of anyone who propagates information that may frighten people. The order, issued under the emergency situation decree, was published in the Royal Gazette on Thursday night and takes effect on Friday. It prohibits anyone from “reporting news or disseminating information that may frighten people or intentionally distorting information to cause a misunderstanding about the emergency situation, which may eventually affect state security, order or good morality of the people.”

So what’s “frightening”? I, for one, find the idea of having my Internet access blocked frightening. Why not just put the creator of frightening content in one of Thailand’s exemplary and humane prisons? These, as I understand the situation, feature ample space, generous prisoner care services, and healthful food. With an occupancy level of 300 percent, what’s not to like?

Frightening? Then take PrisonStudies.org offline, I guess.

Stephen E Arnold, August 6, 2021

That Online Thing Spawns Emily Post-Type Behavior, Right?

July 21, 2021

Friendly virtual watering holes or platforms for alarmists? PC Magazine reports, “Neighborhood Watch Goes Rogue: The Trouble with Nextdoor and Citizen.” Writer Christopher Smith introduces his analysis:

“Apps like Citizen and Nextdoor, which ostensibly exist to keep us apprised of what’s going on in our neighborhoods, buzz our smartphones at all hours with crime reports, suspected illegal activity, and other complaints. But residents can also weigh in with their own theories and suspicions, however baseless and—in many cases—racist. It begs the question: Where do these apps go wrong, and what are they doing now to regain consumer trust and combat the issues within their platforms?”

Smith considers several times when both community-builder Nextdoor and the more security-focused Citizen hosted problematic actions and discussions. Both apps have made changes in response to criticism. For example, Citizen was named Vigilante when it first launched in 2016 and seemed to encourage users to visit and even take an active role in nearby crime scenes. After Apple pulled it from its App Store within two days, the app relaunched the next year with the friendlier name and warnings against reckless behavior. But Citizen still stirs up discussion by sharing publicly available emergency-services data like 911 calls, sometimes with truly unfortunate results. Though the app says it is now working on stronger moderation to prevent such incidents, it also happens to be ramping up its law-enforcement masquerade. Ironically, Citizen itself cannot seem to keep its users’ data safe.

Then there is Nextdoor. During last year’s protests following the murder of George Floyd, its moderators were caught removing posts announcing protests but allowing ones that advocated violence against protestors. The CEO promised reforms in response, and the company soon axed the “Forward to Police” feature. (That is okay, cops weren’t relying on it much anyway. Go figure.) It has also enacted a version of sensitivity training and language guardrails. Meanwhile, Facebook is making its way into the neighborhood app game. Surely that company’s foresight and conscientiousness are just what this situation needs. Smith concludes:

“In theory, community apps like Citizen, Nextdoor, and Facebook Neighborhoods bring people together at time when many of us turn to the internet and our devices to make connections. But it’s a fine line between staying on top of what’s going on around us and harassing the people who live and work there with ill-advised posts and even calls to 911. The companies themselves have a financial incentive to keep us engaged (Nextdoor just filed to go public), whether its users are building strong community ties or overreacting to doom-and-gloom notifications. Can we trust them not to lead us into the abyss, or is it on us not to get caught up neighborhood drama and our baser instincts?”

Absolutely not and, unfortunately, yes.

Cynthia Murrell, July 21, 2021

Online Anonymity: Maybe a Less Than Stellar Idea

July 20, 2021

On one hand, there is a veritable industrial revolution in identifying, tracking, and pinpointing online users. On the other hand, there is the confection of online anonymity. The idea is that through obfuscation (using a fake name or hijacking an account set up for one’s 75-year-old spinster aunt), a person can be anonymous. And what fun some can have when their online actions are obfuscated by cleverness, Tor cartwheels, or more sophisticated methods using free email and “trial” cloud accounts. I am not a big fan of online anonymity for three reasons:

- Online makes it easy for a person to listen to the chatter of one’s internal demons and do incredibly inappropriate things. Anonymity online, in my opinion, is a bit like reverting to 11-year-old thinking, often with an adult’s suppressed perceptions and assumptions about what’s okay and what’s not okay.

- Having a verified identity linked to an online action imposes social constraints. The method may not be the same as a small town watching the actions of frisky teens and intervening or telling a parent at the grocery that their progeny was making life tough for the small kid with glasses who was studying Lepidoptera.

- Individuals doing inappropriate things are often exposed, discovered, or revealed by friends, spouses angry about a failure to take out the garbage, or a small investigative team trying to figure out who spray painted the doors of a religious institution.

I read “Abolishing Online Anonymity Won’t Tackle the Underlying Problems of Racist Abuse.” I agree. The write up states:

There is an argument that by forcing people to reveal themselves publicly, or giving the platforms access to their identities, they will be “held accountable” for what they write and say on the internet. Though the intentions behind this are understandable, I believe that ID verification proposals are shortsighted. They will give more power to tech companies who already don’t do enough to enforce their existing community guidelines to protect vulnerable users, and, crucially, do little to address the underlying issues that render racial harassment and abuse so ubiquitous.

The observation is on the money.

I would push back a little. Limiting online use to those who verify their identity may curtail some of the crazier behaviors online. At this time, fractious behavior is the norm. The continuous fracturing of cultural norms, common courtesies, and routine interactions is destructive.

My thought is that replacing anonymity with verified identity might curtail some of the behavior online systems enable.

Stephen E Arnold, July 20, 2021

A Good Question and an Obvious Answer: Maybe Traffic and Money?

July 19, 2021

I read “Euro 2020: Why Is It So Difficult to Track Down Racist Trolls and Remove Hateful Messages on Social Media?” The write up expresses understandable concern about the use of social media to criticize athletes. Some athletes have magnetism, and sponsors want to use that “pull” to sell products and services. I remember a technology conference which featured a former football quarterback who explained how to succeed. He did not reference the athletic expertise of a former high school science club member and officer. As I recall, the pitch was working hard, fighting (!), and overcoming a coach calling a certain athlete (me, for example) a “fat slug.” Relevant to innovating in online databases? Yes, truly inspirational and an anecdote from the mists of time.

The write up frames its concern about derogatory social media “posts” this way:

Over a quarter of the comments were sent from anonymous private accounts with no posts of their own. But identifying perpetrators of online hate is just one part of the problem.

And the real “problem”? The article states:

It’s impossible to discover through open-source techniques that an account is being operated from a particular country.

Maybe.

Referencing Instagram (a Facebook property), the Sky story notes:

Other users may anonymise their existing accounts so that the comments they post are not traceable to them in the offline world.

Okay, automated systems with smart software don’t do the job. Will another government bill in the UK help?

The write up does everything but comment about the obvious; for example, my view is that online accounts must be linked to a human and verified before posts are permitted.

The smart software thing, the government law thing, and the humans-making-decisions thing are not particularly efficacious. Why? The online systems permit, if not encourage, anonymity because of money, maybe? That’s a question for the Sky Data and Forensics team. It is:

a multi-skilled unit dedicated to providing transparent journalism from Sky News. We gather, analyse and visualise data to tell data-driven stories. We combine traditional reporting skills with advanced analysis of satellite images, social media and other open source information. Through multimedia storytelling we aim to better explain the world while also showing how our journalism is done.

Okay.

Stephen E Arnold, July 19, 2021

Zuckin and Duckin: Socialmania at Facebook

July 19, 2021

I read “Zuck Is a Lightweight, and 4 More Things We Learned about Facebook from ‘An Ugly Truth’.” My initial response was, “No Mashable professionals will be invited to the social Zuckerberg’s Hawaii compound.” Bummer. I had a few other thoughts as well, but first, here are a couple of snippets from what it is possible to characterize as a review of a new book by Sheera Frenkel and Cecilia Kang. I assume any publicity is good publicity.

Here’s one I circled in Facebook social blue:

Frenkel and Kang’s careful reporting shows a company whose leadership is institutionally ill-equipped to handle the Frankenstein’s monster they built.

Snappy. To the point.

Another? Of course, gentle reader:

Zuckerberg designed the platform for mindless scrolling: “I kind of want to be the new MTV,” he told friends.

Insightful, but TikTok, which may have some links to the sensitive Chinese power plant, aced out the F’Book.

And how about this?

[The Zuck] was explicitly dismissive of what she said.” Indeed, the book provides examples where Sandberg was afraid of getting fired, or being labeled as politically biased, and didn’t even try to push back…

Okay, and one more:

Employees are fighting the good fight.

Will I buy the book? Nah, this review is close enough. What do I think will happen to Facebook? In the short term, not much. The company is big and generating big payoffs in power and cash. Longer term? The wind down will continue. Google, for example, is dealing with stuck disc brakes on its super car. Facebook may be popping in and out of view in that outstanding vehicle’s rear view mirrors. One doesn’t change an outfit with many years of momentum.

Are the book’s revelations on the money? Probably reasonably accurate, but disenchantment can lead to some interesting shaping of nonfiction writing. And the Mashable review? Don’t buy a new Hawaiian-themed cabana outfit yet. What about Facebook’s management method? Why change? It worked in high school. It worked when testifying before Congress. It worked until a couple of reporters shifted into interview mode, and reporters are unlikely to rack up the likes on Facebook.

Stephen E Arnold, July xx, 2021

Social Media and News Diversity

July 16, 2021

Remember the phrase “filter bubble”? The idea is that in an online world one can stumble into, be forced into, or be seduced into an info flow which reinforces what one believes to be accurate. The impetus for filter bubbling is assumed to be social media. Not so fast, pilgrim.

“Study: Social Media Contributes to a More Diverse News Diet — Wait, What?!” provides rock solid, dead on proof that social media is not the bad actor here. I must admit that the assertion is one I do not hear too often. I noted this passage:

The study found that people who use search engines, social media, and aggregators to access news can actually have more diverse information diets.

The study is “More Diverse, More Politically Varied: How Social Media, Search Engines and Aggregators Shape News Repertoires in the United Kingdom.” With a word like “repertoire” in the title, one can almost leap at the assumption that the work was from Britain’s most objectively wonderful institutions of higher learning. None of that Cambridge Analytica fluff. These are Oxfordians and Liverpudlians. Liverpool is a hot bed of “repertoire,” I have heard. You can download the document from Sage, a fine professional publisher, at https://journals.sagepub.com/doi/pdf/10.1177/14614448211027393.

The original study states:

There is still much to learn about how the rise of new, ‘distributed’, forms of news access through search engines, social media and aggregators are shaping people’s news use.

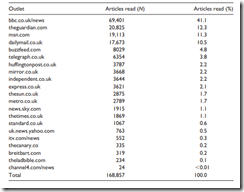

That lines up with my understanding of what is known about the upsides and downsides of social media technology, content, its use, and its creators. There’s a handy list of tracked articles read:

The Canary.co is interesting because it runs headlines which are probably intuitively logical in Oxford and Liverpool pubs. Here’s a headline from July 11, 2021:

Boris Johnson Toys with Herd Immunity Despite Evidence Linking Long Covid to Brain Damage.

I am not sure about Mr. Johnson’s toying, herd immunity, and brain damage. But I live in rural Kentucky, not Oxford or Liverpool.

The Sage write up includes obligatory math; for example:

And then charge forward into the discussion of this breakthrough research.

Social media exposes its users to more diverse opinions. I will pass that along to the folks who hang out in the tavern in Harrod’s Creek. Some of those individuals get their info from quite interesting groups on Telegram. SocialClu, anyone? Brain damage? TikTok?

Stephen E Arnold, July 16, 2021

Facebook Has Channeled Tacit Software, Just without the Software

July 14, 2021

I would wager a free copy of my book CyberOSINT that anyone reading this blog post remembers Tacit Software, founded in the late 1990s. The company wrote a script which determined what employee in an organization was “consulted” most frequently. I recall enhancements which “indexed” content to make it easier for a user to identify content which may have been overlooked. But the killer feature was allowing a person with appropriate access to identify individuals with particular expertise. Oracle, the number one in databases, purchased Tacit Software and integrated the function into Oracle Beehive. If you want to read marketing collateral about Beehive, navigate to this link. Oh, good luck with pinpointing the information about Tacit. If you dig a bit, you will come across information which suggests that the IBM Clever method was stumbled upon and implemented about the same time that Backrub went online. Small community in Silicon Valley? Yes, it is.
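For readers who never saw Tacit in action, here is a rough sketch of the “who gets consulted most” idea. It is not Tacit’s or Oracle’s code; it assumes a hypothetical log of (asker, consultee) pairs, and the function name `most_consulted` is made up for illustration. It simply counts how often each person is consulted and ranks them.

```python
from collections import Counter


def most_consulted(consultations: list[tuple[str, str]], top_n: int = 3) -> list[tuple[str, int]]:
    """Rank people by how often colleagues consult them.

    Each tuple is (asker, consultee). Self-consultation is ignored.
    Returns up to top_n (person, count) pairs, highest count first.
    """
    counts = Counter(consultee for asker, consultee in consultations if asker != consultee)
    return counts.most_common(top_n)


# Hypothetical log of who asked whom
log = [
    ("ann", "raj"), ("bob", "raj"), ("cy", "raj"),
    ("ann", "dee"), ("raj", "dee"), ("bob", "ann"),
]
print(most_consulted(log, top_n=2))  # [('raj', 3), ('dee', 2)]
```

A production expertise locator would likely weight by topic and recency; raw counts are the simplest possible signal.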

So what?

I thought about this 1997 innovation in Silicon Valley when I read “Facebook’s Groups to Highlight Experts.” Facebook has billions of users; I wonder why it took the company years to figure out that it could identify individuals who “knew” something. Progress never stops in me-too land, of course. Is Facebook using its estimable smart software to identify those in the know?

The article reports:

There are more than 70 million administrators and moderators running active groups, Facebook says. When asked how they’re vetting the qualifications of designated experts, a Facebook spokesperson said it’s “all up the discretion of the admin to designate experts who they believe are knowledgeable on certain topics.”

I think this means that humans identify experts. What if the human doing the identifying does not know anything about the “expertise” within another Facebooker?

Yeah, maybe give Oracle Beehive a jingle. Just a thought.

Stephen E Arnold, July 14, 2021