Will AI Kill Us All? No, But the Hype Can Be Damaging to Mental Health

June 11, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I missed the talk about how AI will kill us all. Planned? Nah, heavy traffic. From what I heard, none of the cyber investigators believed the person trying hard to frighten law enforcement cyber investigators. There are other, slightly more tangible threats. One of the attendees whose name I did not bother to remember asked me, “What do you think about artificial intelligence?” My answer was, “Meh.”

A contrarian walks alone. Why? It is hard to make money being negative. At the conference I attended June 4, 5, and 6, attendees with whom I spoke just did not care. Thanks, MSFT Copilot. Good enough.

Why, you may ask? My method of handling the question is to refer to articles like this: “AI Appears to Rapidly Be Approaching a Brick Wall Where It Can’t Get Smarter.” This write up offers an opinion not popular among the AI cheerleaders:

Researchers are ringing the alarm bells, warning that companies like OpenAI and Google are rapidly running out of human-written training data for their AI models. And without new training data, it’s likely the models won’t be able to get any smarter, a point of reckoning for the burgeoning AI industry

Like the argument that AI will change everything, this claim applies to systems based upon indexing human content. I am reasonably certain that more advanced smart software with different concepts will emerge. I am not holding my breath because much of the current AI hoo-hah has been gestating longer than a newborn baby elephant.

So what’s with the doom pitch? Law enforcement apparently does not buy the idea. My team doesn’t. For the foreseeable future, applied smart software operating within some boundaries will allow some tasks to be completed quickly and with acceptable reliability. Robocop is not likely for a while.

One interesting question is why the polarization. First, it is easy. And, second, one can cash in. If one is a cheerleader, one can invest in a promising AI start up and make (in theory) oodles of money. By being a contrarian, one can tap into the segment of people who think the sky is falling. Being a contrarian is “different.” Plus, by predicting implosion and the end of life one can get attention. That’s okay. I try to avoid being the eccentric carrying a sign.

The current AI bubble relies in a significant way on a Google recipe: Indexing text. The approach reflects Google’s baked in biases. It indexes the Web; therefore, it should be able to answer questions by plucking factoids. Sorry, that doesn’t work. Glue cheese to pizza? Sure.

Hopefully new lines of investigation may reveal different approaches. I am skeptical about synthetic data (that is, made up data that is probably correct). My fear is that we will require another 10, 20, or 30 years of research to move beyond shuffling content blocks around. There has to be a higher level of abstraction operating. But machines are machines and wetware (human brains) is different.

Will life end? Probably but not because of AI unless someone turns over nuclear launches to “smart” software. In that case, the crazy eccentric could be on the beam.

Stephen E Arnold, June 11, 2024

A Cultural Black Hole: Lost Data

May 22, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

A team in Egypt discovered something mysterious near the pyramids. I assume National Geographic will dispatch photographers. Archeologists will probe. Artifacts will be discovered. How much more is buried under the surface of Giza? People have been digging for centuries, and their efforts are rewarded. But what about the artifacts of the digital age?

Upon opening the secret chamber, the digital construct explains to the archeologist from the future that there is a little problem getting the digital information. Thanks, MSFT Copilot.

My answer is, “Yeah, good luck.” The ephemeral quality of online information means that finding something buried near the pyramid of Djoser is going to be more rewarding than looking for the once findable information about MIC, RAC, and ZPIC on a US government Web site. The same void exists for quite a bit of human output captured in now-disappeared systems like The Point (Top 5% of the Internet) and millions of other digital constructs.

A survey report conducted by the Pew Research Center highlights link rot. The idea is simple. Click on a link and the indexed or pointed to content cannot be found. “When Online Content Disappears” has a snappy subtitle:

38 percent of Web pages that existed in 2013 are no longer accessible a decade later.

Wait, aren’t national libraries like the Library of Congress supposed to keep “information”? What about the National Archives? What about the Internet Archive (an outfit busy in court)? What about the Google? (That’s “all the world’s information,” right?) What about Bibliothèque nationale de France with its rich tradition of keeping French information?

News flash. Unlike the tangible objects unearthed in Egypt, digital artifacts do not sit waiting in the sand. Data archeologists are going to have to buy old hard drives on eBay, dig through rubbish piles in “recycling” facilities, or scour yard sales for old machines. Then one has to figure out how to get the data. Presumably smart software can filter through the bits looking for useful data. My suggestion? Don’t count on this happening.

Here are several highlights from the Pew Report:

- Some 38% of webpages that existed in 2013 are not available today, compared with 8% of pages that existed in 2023.

- Nearly one-in-five tweets are no longer publicly visible on the site just months after being posted.

- 21% of all the government webpages we examined contained at least one broken link… Across every level of government we looked at, there were broken links on at least 14% of pages; city government pages had the highest rates of broken links.

The report presents a picture of lost data. Trying to locate these missing data will be less fruitful than digging in the sands of Egypt.
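For readers who want to poke at link rot themselves, here is a minimal sketch of the "click a link and nothing is there" test described above. It is not the Pew methodology. The rule that no answer or any 4xx/5xx status counts as rot is my assumption, and the function names are hypothetical. Only the Python standard library is used.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url, or 0 if no usable answer arrives."""
    try:
        request = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, e.g. with 404 or 410
    except (OSError, ValueError):
        return 0  # DNS failure, refused connection, timeout, or malformed URL


def is_rotted(status: int) -> bool:
    """Assumption: no answer, or any 4xx/5xx answer, counts as link rot."""
    return status == 0 or status >= 400


def rot_rate(urls: Iterable[str], fetch: Callable[[str], int] = fetch_status) -> float:
    """Fraction of urls whose content can no longer be retrieved."""
    statuses = [fetch(u) for u in urls]
    if not statuses:
        return 0.0
    return sum(is_rotted(s) for s in statuses) / len(statuses)
```

Passing a different `fetch` function lets one run the arithmetic against a saved list of status codes instead of hitting live servers. Some servers reject HEAD requests, so a careful survey would fall back to GET before declaring a page gone.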

The word “rot” is associated with decay. The concept of “link rot” complements the business practices of government agencies and organizations once gathering, preserving, and organizing data. Are libraries at fault? Are regulators the problem? Are the content creators the culprits?

Sure, but the issue is that as the euphoria and reality of digital information slosh like water in a swimming pool during an earthquake, no one knows what to do. Therefore, nothing is done until knee jerk reflexes cause something to take place. In the end, no comprehensive collection plan is in place for the type of information examined by the Pew folks.

From my vantage point, online and digital information are significant features of life today. Like goldfish in a bowl, we are not able to capture the outputs of the digital age. We don’t understand the datasphere, my term for the environment in which much activity exists.

The report does not address the question, “So what?”

That’s part of the reason future data archeologists will struggle. The rush of zeros and ones has undermined information itself. If ignorance of these data creates bliss, one might say, “Hello, Happy.”

Stephen E Arnold, May 22, 2024

E2EE: Not Good Enough. So What Is Next?

May 21, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

What’s wrong with software?

I think one !*#$ thing about the state of technology in the world today is that for so many people, their job, and therefore the thing keeping a roof over their family’s head, depends on adding features, which then incentives people to, well, add features. Not to make and maintain a good app.

Who has access to the encrypted messages? Someone. That’s why this young person is distraught as she is escorted to the police van. Thanks, MSFT Copilot. Good enough.

This statement appears in “A Rant about Phone Messaging Apps UI.” But there are some more interesting issues in messaging; specifically, E2EE or end to end encrypted messaging. The current example of talking about the wrong topic in a quite important application space is summarized in Business Insider, an estimable online publication with snappy headlines like this one: “In the Battle of Telegram vs Signal, Elon Musk Casts Doubt on the Security of the App He Once Championed.” That write up reports as “real” news:

Signal has also made its cryptography open-source. It is widely regarded as a remarkably secure way to communicate, trusted by Jeff Bezos and Amazon executives to conduct business privately.

I want to point out that Edward Snowden “endorses” Signal. He does not use Telegram. Does he know something that others may not have tucked into their memory stack?

The Business Insider “real” news report includes this quote from a Big Dog at Signal:

“We use cryptography to keep data out of the hands of everyone but those it’s meant for (this includes protecting it from us),” Whittaker wrote. “The Signal Protocol is the gold standard in the industry for a reason–it’s been hammered and attacked for over a decade, and it continues to stand the test of time.”

Pavel Durov, the owner of Telegram and the brother of a person with two Ph.D.’s (his brother Nikolai), suggests that Signal is insecure. Keep in mind that Mr. Durov has been the subject of some scrutiny after telling the estimable Tucker Carlson that Telegram is about free speech. Why? Telegram blocked Ukraine’s government from using a Telegram feature to beam pro-Ukraine information into Russia. That’s a sure-fire way to make clear what country catches Mr. Durov’s attention. He did this, according to rumors reaching me from a source with links to the Ukraine, because Apple or maybe Google made him do it. Blaming the alleged US high-tech oligopolies is a good red herring and a stinky one at that.

What has Telegram got to do with the complaint about “features”? In my view, Telegram has been adding features at a pace that is more rapid than Signal, WhatsApp, and a boatload of competitors. Have those features created some vulnerabilities in the Telegram set up? In fact, I am not sure Telegram is a messaging platform. I also think that the company may be poised to do an end run around open sourcing its home-grown encryption method.

What does this mean? Here are a few observations:

- With governments working overtime to gain access to encrypted messages, Telegram may have to add some beef.

- Established firms and start ups are nosing into obfuscation methods that push beyond today’s encryption methods.

- Information about who is behind an E2EE messaging service is tough to obtain. What is easy to document with a Web search may be one of those “fake” or misinformation plays.

Net net: E2EE is getting long in the tooth. Something new is needed. If you want to get a glimpse of the future, catch my lecture about E2EE at the upcoming US government Cycon 2024 event in September. Want a preview? We have a briefing. Write benkent2020 at yahoo dot com for restrictions and prices.

Stephen E Arnold, May 21, 2024

AI and the Workplace: Change Will Happen, Just Not the Way Some Think

May 15, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read “AI and the Workplace.” The essay contains observations related to smart software in the workplace. The idea is that employees who are savvy will experiment and try to use the technology within today’s work framework. I think that will happen just as the essay suggests. However, I think there is a larger, more significant impact that is easy to miss if one looks only at today’s workplace. Employees either [a] want to keep their job, [b] gain new skills and get a better job, or [c] quit to vegetate or become an entrepreneur. I understand.

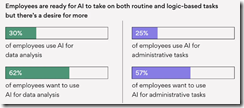

The data in the report make clear that some employees are what I call change flexible; that is, these motivated individuals differentiate from others at work by learning and experimenting. Note that more than half the people in the “we don’t use AI” categories want to use AI.

These data come from the cited article and an outfit called Asana.

The other data in the report show that some employees get a productivity boost; others just chug along, occasionally getting some benefit from AI. The future, therefore, requires learning, double checking outputs, and accepting that it is early days for smart software. This makes sense; however, it misses where the big change will come.

In my view, the major shift will appear in companies founded now that AI is more widely available. These organizations will be crafted to make optimal use of smart software from the day the new idea takes shape. A new news organization might look like Grok News (the Elon Musk project) or the much reviled AdVon. But even these outfits are anchored in the past. Grok News just substitutes smart software (which hopefully will not kill its users) for old work processes and outputs. AdVon was a “rip and replace” tool for Sports Illustrated. That did not go particularly well in my opinion.

The big job impact will be on new organizational set ups with AI baked in. The types of people working at these organizations will not be from the lower 98 percent of the work force pool. I think the majority of employees who once expected to work in information processing or knowledge work will be like a 58 year old brand manager at a vape company. Job offers will not be easy to get and new companies might opt for smart software and search engine optimization marketing. How many workers will that require? Maybe zero. Someone on Fiverr.com will do the job for a couple of hundred dollars a month.

In my view, new companies won’t need workers who are not in the top tier of some high value expertise. Who needs a consulting team when one bright person with knowledge of orchestrating smart software is able to do the work of a marketing department, a product design unit, and a strategic planning unit? In fact, there may not be any “employees” in the sense of workers at a warehouse or a consulting firm like Deloitte.

Several observations are warranted:

- Predicting downstream impacts of a technology unfamiliar to a great many people is tricky and sometimes impossible. Who knew social media would spawn a renaissance in getting tattooed?

- Visualizing how an AI-centric start up is assembled is a challenge. I submit it won’t look like an insurance company today. What’s a Tesla repair station look like? The answer, “Not much.”

- Figuring out how to be one of the elite who gets a job means being perceived as “smart.” Unlike Alina Habba, I know that I cannot fake “smart.” How many people will work hard to maximize the return on their intelligence? The answer, in my experience, is, “Not too many, dinobaby.”

Looking at the future from within the framework of today’s datasphere distorts how one perceives impact. I don’t know what the future looks like, but it will have some quite different configurations than the companies today have. The future will arrive slowly and then become the foundation of a further evolution. What’s the grandson of tomorrow’s AI firm look like? Beauty will be in the eye of the beholder.

Net net: Where will the never-to-be-employed find something meaningful to do?

Stephen E Arnold, May 15, 2024

AI May Help Real Journalists Explain Being Smart. May, Not Will

May 9, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I found the link between social media and stupid people interesting. I am not sure I embrace the causal chain as presented in “As IQ Scores Decline in the US, Experts Blame the Rise of Tech — How Stupid Is Your State?” The “real” news story has a snappy headline, but social media and IQ? Let’s take a look. The write up states:

Here’s the first sentence of the write up. Note the novel coinage, dumbening. I assume the use of dumb as a gerund opens the door to such statements as “I dumb” or “We dumbed together at Harvard’s lecture about ethics” or “My boss dumbed again, like he did last summer.”

Do all Americans go through a process of dumbening?

A tour group has a low IQ when it comes to understanding ancient rock painting. Should we blame technology and social media? Thanks, MSFT Copilot. Earning extra money because you do great security?

The write up explains that IQ scores are going down after a “rise” which began in 1905. What causes this decline? Is it broken homes? Lousy teachers? A lack of consequences for inattentiveness? Skipping school? Crappy pre-schools? Bus rides? School starting too early or too late? Dropping courses in art, music, and PE? Chemical-infused food? Television? Not learning cursive?

The answer is, “Technology.” More specifically, the culprit is social media. The article quotes a professor, who opines:

The professor [Hetty Roessingh, professor emerita of education at the University of Calgary] said that time spent with devices like phones and iPads means less time for more effective methods of increasing one’s intelligence level.

Several observations:

- Wow.

- Technology is an umbrella term. Social media is an umbrella term. What exactly is causing people to be dumb?

- What about an IQ test being mismatched to those who take it? My IQ was pretty low when I lived in Campinas, Brazil. It was tough to answer questions I could not read until I learned Portuguese.

Net net: Dumbening. You got it.

Stephen E Arnold, May 9, 2024

A High-Tech Best Friend and Campfire Lighter

May 1, 2024

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

A dog is allegedly man’s best friend. I have a French bulldog, and I am not 100 percent sure that’s an accurate statement. But I have a way to get the pal I have wanted for years.

Ars Technica reports “You Can Now Buy a Flame-Throwing Robot Dog for Under $10,000” from Ohio-based maker Throwflame. See the article for footage of this contraption setting fire to what appears to be a forest. Terrific. Reporter Benj Edwards writes:

“Thermonator is a quadruped robot with an ARC flamethrower mounted to its back, fueled by gasoline or napalm. It features a one-hour battery, a 30-foot flame-throwing range, and Wi-Fi and Bluetooth connectivity for remote control through a smartphone. It also includes a LIDAR sensor for mapping and obstacle avoidance, laser sighting, and first-person view (FPV) navigation through an onboard camera. The product appears to integrate a version of the Unitree Go2 robot quadruped that retails alone for $1,600 in its base configuration. The company lists possible applications of the new robot as ‘wildfire control and prevention,’ ‘agricultural management,’ ‘ecological conservation,’ ‘snow and ice removal,’ and ‘entertainment and SFX.’ But most of all, it sets things on fire in a variety of real-world scenarios.”

And what does my desired dog look like? The GenY Tibby asleep at work? Nope.

I hope my Thermonator includes an AI at the controls. Maybe that will be an add-on feature in 2025? Unitree, maker of the robot base mentioned above, once vowed to oppose the weaponization of their products (along with five other robotics firms). Perhaps Throwflame won them over with assertions their device is not technically a weapon, since flamethrowers are not considered firearms by federal agencies. It is currently legal to own this mayhem machine in 48 states. Certain restrictions apply in Maryland and California. How many crazies can get their hands on a mere $9,420 plus tax for that kind of power? Even factoring in the cost of napalm (sold separately), probably quite a few.

Cynthia Murrell, May 1, 2024

Research into Baloney Uses Four Letter Words

March 25, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I am critical of university studies. However, I spotted one which strikes at the heart of the Silicon Valley approach to life. “Research Shows That People Who BS Are More Likely to Fall for BS” has an interesting subtitle; to wit:

People who frequently mislead others are less able to distinguish fact from fiction, according to University of Waterloo researchers

A very good looking bull spends time reviewing information helpful to him in selling his artificial intelligence system. Unlike the two cows, he does not realize that he is living in a construct of BS. Thanks, MSFT Copilot. How are you doing with those printer woes today? Good enough, I assume.

Consider the headline in the context of promises about technologies which will “change everything.” Examples range from the marvels of artificial intelligence to the crazy assertions about quantum computing. My hunch is that the reason baloney has become one of the most popular mental foods in the datasphere is that people desperately want a silver bullet. Others know that if a silver bullet is described with appropriate language and a bit of sizzle, the thought can be a runway for money.

What’s this mean? We have created a culture in North America that makes “technology” and “glittering generalities” into hyperbole factories. Why believe me? Let’s look at the “research.”

The write up reports:

People who frequently try to impress or persuade others with misleading exaggerations and distortions are themselves more likely to be fooled by impressive-sounding misinformation… The researchers found that people who frequently engage in “persuasive bullshitting” were actually quite poor at identifying it. Specifically, they had trouble distinguishing intentionally profound or scientifically accurate fact from impressive but meaningless fiction. Importantly, these frequent BSers are also much more likely to fall for fake news headlines.

Let’s think about this assertion. The technology story teller is an influential entity. In the world of AI, for example, some firms which have claimed “quantum supremacy” showcase executives who spin glorious word pictures of smart software reshaping the world. The upsides are magnetic; the downsides dismissed.

What about crypto champions? Telegram, founded by two Russian brothers, is spinning fabulous tales of revenue from advertising in an encrypted messaging system and cheerleading for a more innovative crypto currency. The company operates from Dubai, and there are true believers. What’s not to like? Maybe these bros have the solution that has long been part of the Harvard winkle confections.

What shocked me about the write up was the use of the word “bullshit.” Here’s an example from the academic article:

“We found that the more frequently someone engages in persuasive bullshitting, the more likely they are to be duped by various types of misleading information regardless of their cognitive ability, engagement in reflective thinking, or metacognitive skills,” Littrell said. “Persuasive BSers seem to mistake superficial profoundness for actual profoundness. So, if something simply sounds profound, truthful, or accurate to them that means it really is. But evasive bullshitters were much better at making this distinction.”

What if the write up is itself BS? What if the journal publishing the article — British Journal of Social Psychology — is BS? On one level, I want to agree that those skilled in the art of baloney manufacturing, distributing, and outputting have a quite specific skill. On the other hand, I admit that I cannot determine at first glance if the information provided is not synthetic, ripped off, shaped, or weaponized. I would assert that most people are not able to identify what is “verifiable”, “an accurate accepted fact”, or “true.”

We live in a post-reality era. When the presidents of outfits like Harvard and Stanford face challenges to their research accuracy, what can I do when confronted with a media release about BS? Upon reflection, I think the generalization that people cannot figure out what’s on point or not is true. When drug store cashiers cannot make change, I think that’s strong anecdotal evidence that other parts of their mental toolkit have broken or missing parts.

But the statement that those who output BS cannot themselves identify BS may be part of a broader educational failure. Lazy people, those who take short cuts, people who know how to do the PT Barnum thing, and sales professionals trying to close a deal reflect a societal issue. In a world of baloney, everything is baloney.

Stephen E Arnold, March 25, 2024

Old Code, New Code: Can You Make It Work Again… Sort Of?

March 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Even hippy dippy super slick AI start ups have a technical debt problem. It is, in my opinion, no different from the “costs” imposed on outfits like JPMorgan Chase or (heaven help us) AMTRAK. Software which mostly works is subject to two environmental problems. First, the people who wrote the code or made it work the last time catastrophe struck (hello, AT&T, how are those pushed updates working for you now?) move on, quit, or whatever. Second, the technical options for remediating the problem are evolving (how are those security hot fixes working out, Microsoft?).

The helpful father asks a question the aspiring engineer cannot answer. Thus it was when the wizard was a child, and it is when the wizard is working on a modern engineering project. Buildings tip; aircraft lose doors and wheels. Software updates kill computers. Self-driving cars cannot. Thanks, MSFT Copilot. Did you get your model airplane to fly when you were a wee lad? I think I know the answer.

I thought about this problem of the cost of code remediating, fixing, redoing, upgrading or whatever term fast-talking sales engineers use in their Zooms and PowerPoints as I read “The High-Risk Refactoring.” The write up does a good job of explaining in a gentle way what happens when suits authorize making old code like new again. (The suits do not know the agonies of the original developers, but why should “history” intrude on a whiz bang GenX or GenY management type?)

The article says:

it’s highly important to ensure the system works the same way after the swap with the new code. In that regard, immediately spotting when something breaks throughout the whole refactoring process is very helpful. No one wants to find that out in production.

No kidding.
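What "works the same way after the swap" can look like in practice: a minimal sketch of a parity check that runs the old and the new code on the same inputs and reports every disagreement. This is my illustration, not the article's method. The two shipping-cost functions are hypothetical stand-ins for a legacy routine and its rewrite.

```python
from typing import Any, Callable, Iterable, List, Tuple


def legacy_shipping_cost(weight_kg: float) -> float:
    """Hypothetical stand-in for the old code that is being replaced."""
    if weight_kg <= 1:
        return 5.0
    if weight_kg <= 10:
        return 5.0 + (weight_kg - 1) * 1.5
    return 18.5 + (weight_kg - 10) * 1.0


def refactored_shipping_cost(weight_kg: float) -> float:
    """Hypothetical rewrite; it must return what the legacy version returns."""
    base, mid_rate, heavy_rate = 5.0, 1.5, 1.0
    mid = min(max(weight_kg - 1, 0), 9) * mid_rate
    heavy = max(weight_kg - 10, 0) * heavy_rate
    return base + mid + heavy


def find_mismatches(
    old: Callable[[Any], Any],
    new: Callable[[Any], Any],
    inputs: Iterable[Any],
) -> List[Tuple[Any, Any, Any]]:
    """Return (input, old_result, new_result) for every input where they differ."""
    mismatches = []
    for value in inputs:
        expected, actual = old(value), new(value)
        if expected != actual:
            mismatches.append((value, expected, actual))
    return mismatches


if __name__ == "__main__":
    samples = [0.5, 1, 4, 10, 25]
    print(find_mismatches(legacy_shipping_cost, refactored_shipping_cost, samples))
```

An empty list means the rewrite agreed with the old code on the sampled inputs, nothing more. The check is only as good as the inputs chosen, which is why the boundaries (1 and 10 here) belong in the sample.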

In most cases, there are insufficient skilled people and money to create a new or revamped system, get it up and running in parallel for an appropriate period of time, identify the problems, remediate them, and then make the cut over. People buy cars this way, but that’s not how most organizations, regardless of size, “do” software. Okay, the take-your-car-in, buy-a-new-one, and drive-off approach will not work in today’s business environment.

The write up focuses on what most organizations do; that is, write or fix new code and stick it into a system. There may or may not be resources for a staging server, but the result is the same. The old software has been “fixed” and the documentation is “sort of written” and people move on to other work or in the case of consulting engineering firms, just get replaced by a new, higher margin professional.

The write up takes a different approach and concludes with four suggestions or questions to ask. I quote:

“Refactor if things are getting too complicated, but stop if can’t prove it works.

Accompany new features with refactoring for areas you foresee to be subject to a change, but copy-pasting is ok until patterns arise.

Be proactive in finding new ways to ensure refactoring predictability, but be conservative about the assumption QA will find all the bugs.

Move business logic out of busy components, but be brave enough to keep the legacy code intact if the only argument is “this code looks wrong”.

These are useful points. I would like to suggest some bright white lines for those who have to tackle an IRS-mainframe- or AT&T-billing system type of challenge as well as tweaking an artificial intelligence solution to respond to those wonky multi-ethnic images Google generated in order to allow the Sundar & Prabhakar Comedy Team to smile sheepishly and apologize again for lousy software.

Are you ready? Let’s go:

- Fixes add to the complexity of the code base. As time goes stumbling forward, the complexity of the software becomes greater. The cost of making sure the fix works and does not create exciting dependency behavior goes up. Thus, small fixes “cost” more, and these costs are tough to control.

- The safest fixes are “wrappers”; that is, no one in his or her right mind wants to change software written in 1978 for a machine no longer in production by the manufacturer. Therefore, new software is written to interact in a “safe” way with the original software. The new code “fixes up” the problem without screwing up what grandpa programmer wrote almost half a century ago. The problem is that “wrappers” tend to slow stuff down. The fix is to say one will optimize the system while one looks for a new project or job.

- The software used for “fixing” a problem is becoming the equivalent of repairing an aircraft component with Dawn dish soap. The “fix” is cheap, easy to use, and good enough. The catch with the software equivalent of this Dawn solution is that it will not stand the test of time. Instead of code crafted in good old COBOL or Assembler, we have some Fancy Dan tools which may fall out of favor in a matter of months, not decades.
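The “wrapper” idea in the second item above can be sketched in a few lines. This is a toy under stated assumptions: the legacy routine, its fixed-width 20-character record, and the function names are all hypothetical. The point is that the new code adapts modern inputs to what the old code expects and never edits the old code itself.

```python
def legacy_format_account(record: str) -> str:
    """Hypothetical stand-in for untouchable old code.

    Expects a fixed-width 20-character record:
    10 characters of name, then 10 digits of balance in cents.
    """
    name = record[:10].strip()
    cents = int(record[10:20])
    return f"{name}:{cents}"


def format_account(name: str, balance: float) -> str:
    """Wrapper: validate and reshape modern inputs, then delegate to the legacy code."""
    if balance < 0:
        raise ValueError("the legacy record has no field for negative balances")
    cents = round(balance * 100)
    # Truncate and pad the name to 10 characters; zero-pad cents to 10 digits.
    record = f"{name[:10]:<10}{cents:010d}"
    if len(record) != 20:
        raise ValueError("balance too large for the legacy record")
    return legacy_format_account(record)


if __name__ == "__main__":
    print(format_account("Ada Lovelace", 12.5))
```

Every call now pays for validation, string building, and a second function call before grandpa's code runs. That overhead, multiplied across layers of wrappers, is the slowdown the item describes.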

Many projects promise better, faster, and cheaper. The reminder “Pick two” is helpful.

Net net: Fixing up lousy or flawed software is going to increase risks and costs. The question asked by bean counters is, “How much?” The answer is, “No one knows until the project is done … if ever.”

Stephen E Arnold, March 18, 2024

Stanford: Tech Reinventing Higher Education: I Would Hope So

March 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “How Technology Is Reinventing Education.” Essays like this one are quite amusing. The ideas flow without important context. Let’s look at this passage:

“Technology is a game-changer for education – it offers the prospect of universal access to high-quality learning experiences, and it creates fundamentally new ways of teaching,” said Dan Schwartz, dean of Stanford Graduate School of Education (GSE), who is also a professor of educational technology at the GSE and faculty director of the Stanford Accelerator for Learning. “But there are a lot of ways we teach that aren’t great, and a big fear with AI in particular is that we just get more efficient at teaching badly. This is a moment to pay attention, to do things differently.”

A university expert explains to a rapt audience that technology will make them healthy, wealthy, and wise. Well, that’s what the marketing copy which the lecturer recites says. Thanks, MSFT Copilot. Are you security safe today? Oh, that’s too bad.

I would suggest that Stanford’s Graduate School of Education consider these probably unimportant points:

- The president of Stanford University resigned allegedly because he fudged some data in peer-reviewed documents. True or false? Does it matter? The fellow quit.

- The Stanford Artificial Intelligence Lab or SAIL innovated by cooking up synthetic data. Not only was synthetic data the fast food of those looking for cheap and easy AI training data, Stanford became super glued to the fake data movement, which may be good or may be bad. Hallucinating is easier if the models are trained using fake information perhaps?

- Stanford University produced some outstanding leaders in the high technology “space.” The contributions of famous graduates have delivered social media, advertising systems, and interesting intelware companies which dabble in warfighting and saving lives from one versatile software and consulting platform.

The essay operates in smarter-than-you territory. It presents a view of the world which seems to be at odds with research results which are not reproducible, ethics-free researchers, and an awareness of how silly it looks to someone in rural Kentucky to have a president accused of pulling a grade-school essay cheating trick.

Enough pontification. How about some progress in remediating certain interesting consequences of Stanford faculty and graduates’ innovations?

Stephen E Arnold, March 15, 2024

Techno Bashing from Thumb Typers. Give It a Rest, Please

March 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Every generation says that the latest cultural and technological advancements make people stupider. Novels were trash, the horseless carriage ruined traveling, radio encouraged wanton behavior, and the list continues. Everything changed with the implementation of television aka the boob tube. Too much television does cause cognitive degradation. In layman’s terms, it means the brain goes into passive functioning rather than actively thinking. It would be almost a Zen moment. Addiction is fun for some.

The introduction of videogames, computers, and mobile devices augmented the decline of brain function. The combination of AI-chatbots and screens, however, might prove to be the ultimate dumbing down of humans. APA PsycNet posted a new study by Umberto León-Domínguez called “Potential Cognitive Risks Of Generative Transformer-Based AI-Chatbots On Higher Order Executive Functions.”

Psychologists already discovered that spending too much time on a screen (e.g., playing videogames, watching TV or YouTube, browsing social media) increases the risk of depression and anxiety. When that is paired with AI-chatbots, or programs designed to replicate the human mind, humans rely on the algorithms to think for them.

León-Domínguez wondered if too much AI-chatbot consumption impaired cognitive development. In his abstract he offered some handy terms:

“The ‘neuronal recycling hypothesis’ posits that the brain undergoes structural transformation by incorporating new cultural tools into ‘neural niches,’ consequently altering individual cognition. In the case of technological tools, it has been established that they reduce the cognitive demand needed to solve tasks through a process called ‘cognitive offloading.’”

“Cognitive offloading” perfectly describes younger generations and screen addicts. “Cultural tools into neural niches” also reflects how older crowds view new-fangled technology, coupled with how different parts of the brain are affected by technological advancements. The modern human brain works differently from a human brain in the 18th century or two thousand years ago.

He found:

“The pervasive use of AI chatbots may impair the efficiency of higher cognitive functions, such as problem-solving. Importance: Anticipating AI chatbots’ impact on human cognition enables the development of interventions to counteract potential negative effects. Next Steps: Design and execute experimental studies investigating the positive and negative effects of AI chatbots on human cognition.”

Are we doomed? No. Do we need to find ways to counteract stupidity? Yes. Do we know how it will be done? No.

Isn’t tech fun?

Whitney Grace, March 6, 2024