The Everything About AI Report

May 7, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read the Stanford Artificial Intelligence Report. If you have not seen the 500 page document, click here. I spotted an interesting summary of the document. “Things Everyone Should Understand About the Stanford AI Index Report” is the work of Logan Thorneloe, an author previously unknown to me. I want to highlight three points I carried away from Mr. Thorneloe’s essay. These may make more sense after you have worked through the beefy Stanford document, which, due to its size, makes clear that Stanford wants to be linked to the AI spaceship. (Does Stanford’s AI effort look like Mr. Musk’s or Mr. Bezos’ rocket? I am leaning toward the Bezos design.)

An amazed student absorbs information about the Stanford AI Index Report. Thanks, MSFT. Good enough.

The summary of the 500 page document makes clear that Stanford wants to track the progress of smart software, provide a policy document so that Stanford can obviously influence policy decisions made by people who are not AI experts, and then “highlight ethical considerations.” The assumption by Mr. Thorneloe and by the AI report itself is that Stanford is equipped to make ethical anything. The president of Stanford departed under a cloud for acting in an unethical manner. Plus some of the AI firms have a number of Stanford graduates on their AI teams. Are those teams responsible for depictions of inaccurate historical personages? Okay, that’s enough about ethics. My hunch is that Stanford wants to be perceived as a leader. Mr. Thorneloe seems to accept this idea as a-okay.

The second point for me in the summary is that Mr. Thorneloe goes along with the idea that the Stanford report is unbiased. Writing about AI is, in my opinion of course, inherently biased. That’s the reason there are AI cheerleaders and AI doomsayers. AI is probability. How the software gets smart is biased by [a] how the thresholds are rigged up when a smart system is built, [b] the humans who do the training of the system and then “fine tune” or “calibrate” the smart software to produce acceptable results, and [c] the information used to train the system. More recently, human developers have been creating wrappers which effectively prevent the smart software from generating pornography or other “improper” or “unacceptable” outputs. I think the “bias” angle needs some critical thinking. Stanford’s report wants to cover the AI waterfront as Stanford maps and presents the geography of AI.

The final point is the rundown of Mr. Thorneloe’s take-aways from the report. He presents ten. I think there may just be three. First, the AI work is very expensive. That leads to the conclusion that only certain firms can be in the AI game and expect to win and win big. To me, this means that Stanford wants the good old days of Silicon Valley to come back again. I am not sure that this approach to an important, yet immature technology, is a particularly good idea. One does not fix up problems with technology. Technology creates some problems, and like social media, what AI generates may have a dark side. With big money controlling the game, what’s that mean? That’s a tough question to answer. The US wants China and Russia to promise not to use AI in their nuclear weapons system. Yeah, that will work.

Another take-away which seems important is the assumption that workers will be more productive. This is an interesting assertion. I understand that one can use AI to eliminate call centers. However, has Stanford made a case that the benefits outweigh the drawbacks of AI? Mr. Thorneloe seems to be okay with the assumption underlying the good old consultant-type of magic.

The general take-away from the list of ten take-aways is that AI is fueled by “industry.” What happened to the Stanford Artificial Intelligence Lab, synthetic data, and the high-confidence outputs? Nothing has happened. AI hallucinates. AI gets facts wrong. AI is a collection of technologies looking for problems to solve.

Net net: Mr. Thorneloe’s summary is useful. The Stanford report is useful. Some AI is useful. Writing 500 pages about a fast moving collection of technologies is interesting. I cannot wait for the 2024 edition. I assume “everyone” will understand AI PR.

Stephen E Arnold, May 7, 2024

Torrent Search Platform Tribler Works to Boost Decentralization with AI

May 7, 2024

Can AI be the key to a decentralized Internet? The group behind the BitTorrent-based search engine Tribler believes it can. TorrentFreak reports, “Researchers Showcase Decentralized AI-Powered Torrent Search Engine.” Even as the online world has mostly narrowed into commercially controlled platforms, researchers at the Netherlands’ Delft University of Technology have worked to decentralize and anonymize search. Their goal has always been to empower John Q. Public over governments and corporations. Now, the team has demonstrated the potential of AI to significantly boost those efforts. Writer Ernesto Van der Sar tells us:

“Tribler has just released a new paper and a proof of concept which they see as a turning point for decentralized AI implementations; one that has a direct BitTorrent link. The scientific paper proposes a new framework titled ‘De-DSI’, which stands for Decentralised Differentiable Search Index. Without going into technical details, this essentially combines decentralized large language models (LLMs), which can be stored by peers, with decentralized search. This means that people can use decentralized AI-powered search to find content in a pool of information that’s stored across peers. For example, one can ask ‘find a magnet link for the Pirate Bay documentary,’ which should return a magnet link for TPB-AFK, without mentioning it by name. This entire process relies on information shared by users. There are no central servers involved at all, making it impossible for outsiders to control.”

Van der Sar emphasizes De-DSI is still in its early stages: the demo was created with a limited dataset and starter AI capabilities. The write-up briefly summarizes the approach:

“In essence, De-DSI operates by sharing the workload of training large language models on lists of document identifiers. Every peer in the network specializes in a subset of data, which other peers in the network can retrieve to come up with the best search result.”

The team hopes to incorporate this tech into an experimental version of Tribler by the end of this year. Stay tuned.

Cynthia Murrell, May 7, 2024

Microsoft Security Messaging: Which Is What?

May 6, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I am a dinobaby. I am easily confused. I read two “real” news items and came away confused. The first story is “Microsoft Overhaul Treats Security As Top Priority after a Series of Failures.” The subtitle is interesting too because it links “security” to monetary compensation. That’s an incentive, but why isn’t security just part of work at an alleged monopoly’s products and services? I surmise the answer is, “Because security costs money, a lot of money.” That article asserts:

After a scathing report from the US Cyber Safety Review Board recently concluded that “Microsoft’s security culture was inadequate and requires an overhaul,” it’s doing just that by outlining a set of security principles and goals that are tied to compensation packages for Microsoft’s senior leadership team.

Okay. But security emerges from basic engineering decisions; for instance, does a developer spend time figuring out and resolving security when dependencies are unknown or documented only by a grousing user in a comment posted on a technical forum? Or, does the developer include a new feature and move on to the next task, assuming that someone else or an automated process will make sure everything works without opening the door to the curious bad actor? I think that Microsoft assumes it deploys secure systems and that its customers have the responsibility to ensure their systems’ security.

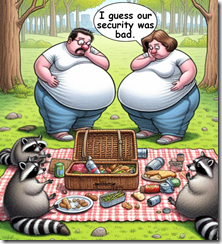

The cyber racoons found the secure picnic basket was easily opened. The well-fed, previously content humans seem dismayed that their goodies were stolen. Thanks, MSFT Copilot. Definitely good enough.

The write up adds that Microsoft has three security principles and six security pillars. I won’t list these because the words chosen strike me like those produced by a lawyer, an MBA, and a large language model. Remember. I am a dinobaby. Six plus three is nine things. Some car executive said a long time ago, “Two objectives is no objective.” I would add nine generalizations are not a culture of security. Nine is like Microsoft Word features. No one can keep track of them because most users use Word to produce Words. The other stuff is usually confusing, in the way, or presented in a way that finding a specific feature is an exercise in frustration. Is Word secure? Sure, just download some nifty documents from a frisky Telegram group or the Dark Web.

The write up concludes with a weird statement. Let me quote it:

I reported last month that inside Microsoft there is concern that the recent security attacks could seriously undermine trust in the company. “Ultimately, Microsoft runs on trust and this trust must be earned and maintained,” says Bell. “As a global provider of software, infrastructure and cloud services, we feel a deep responsibility to do our part to keep the world safe and secure. Our promise is to continually improve and adapt to the evolving needs of cybersecurity. This is job #1 for us.”

First, there is the notion of trust. Perhaps Edge’s persistence and advertising in the start menu, SolarWinds, and the legions of Chinese and Russian bad actors undermine whatever trust exists. Most users are clueless about security issues baked into certain systems. They assume; they don’t trust. Cyber security professionals buy third party security solutions like shopping at a grocery store. Big companies’ senior executives don’t understand why the problem exists. Lawyers and accountants understand many things. Digital security is often not a core competency. “Let the cloud handle it,” sounds pretty good when the fourth IT manager or the third security officer quit this year.

Now the second write up. “Microsoft’s Responsible AI Chief Worries about the Open Web.” First, recall that Microsoft owns GitHub, a very convenient source for individuals looking to perform interesting tasks. Some are good tasks like snagging a script to perform a specific function for a church’s database. Other software does interesting things in order to help a user shore up security. Rapid 7 metasploit-framework is an interesting example. Almost anyone can find quite a bit of useful software on GitHub. When I lectured in a central European country’s main technical university, the students were familiar with GitHub. Oh, boy, were they.

In this second write up I learned that Microsoft has released a 39 page “report” which looks a lot like a PowerPoint presentation created by a blue-chip consulting firm. You can download the document at this link, at least you could as of May 6, 2024. “Security” appears 78 times in the document. There are “security reviews.” There is “cybersecurity development” and a reference to something called “Our Aether Security Engineering Guidance.” There is “red teaming” for biosecurity and cybersecurity. There is security in Azure AI. There are security reviews. There is the use of Copilot for security. There is something called PyRIT which “enables security professionals and machine learning engineers to proactively find risks in their generative applications.” There is partnering with MITRE for security guidance. And there are four footnotes to the document about security.

What strikes me is that security is definitely a popular concept in the document. But the principles and pillars apparently require AI context. As I worked through the PowerPoint, I formed the opinion that a committee worked with a small group of wordsmiths and crafted a rather elaborate word salad about going all in with Microsoft AI. Then the group added “security” the way my mother would chop up a red pepper and put it in a salad for color.

I want to offer several observations:

- Both documents suggest to me that Microsoft is now pushing “security” as Job One, a slogan used by the Ford Motor Co. (How are those Fords faring in the reliability ratings?) Saying words and doing are two different things.

- The rhetoric of the two documents reminds me of Gertrude’s statement, “The lady doth protest too much, methinks.” (Hamlet? Remember?)

- The US government, most large organizations, and many individuals “assume” that Microsoft has taken security seriously for decades. The jargon-and-blather PowerPoint makes clear that Microsoft is trying to find a nice way to say, “We are saying we will do better already. Just listen, people.”

Net net: Bandying about the word trust or the word security puts everyone on notice that Microsoft knows it has a security problem. But the key point is that bad actors know it, exploit the security issues, and believe that Microsoft software and services will be a reliable source of opportunity for mischief. Ransomware? Absolutely. Exposed data? You bet your life. Free hacking tools? Let’s go. Does Microsoft have a security problem? The word form is incorrect. Does Microsoft have security problems? You know the answer. Aether.

Stephen E Arnold, May 6, 2024

Reflecting on the Value Loss from a Security Failure

May 6, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Right after the October 2023 security lapse in Israel, I commented to one of the founders of a next-generation Israeli intelware developer, “Quite a security failure.” The response was, “It is Israel’s 9/11.” One of the questions that kept coming to my mind was, “How could such sophisticated intelligence systems, software, and personnel have dropped the ball?” I have arrived at an answer: Belief in the infallibility of in situ systems. Now I am thinking about the cost of a large-scale security lapse.

It seems the young workers are surprised the security systems did not work. Thanks, MSFT Copilot. Good enough which may be similar to some firms’ security engineering.

Globes published “Big Tech 50 Reveals Sharp Falls in Israeli Startup Valuations.” The write up provides some insight into the business cost of security which did not live up to its marketing. The write up says:

The Israeli R&D partnership has reported to the TASE [Tel Aviv Stock Exchange] that 10 of the 14 startups in which it has invested have seen their valuations decline.

Interesting.

What strikes me is that the cost of a security lapse is obviously personal and financial. One of the downstream consequences is a loss of confidence or credibility. Israel’s hardware and software security companies have had, in my opinion, a visible presence at conferences addressing specialized systems and software. The marketing of the capabilities of these systems has been maturing and becoming more like Madison Avenue efforts.

I am not sure which is worse: The loss of “value” or the loss of “credibility.”

If we transport the question about the cost of a security lapse to a large US high-technology company, I am not sure a Globes-type article captures the impact. Frankly, US companies suffer security issues on a regular basis. Only a few make headlines. And then the firms responsible for the hardware or software which are vulnerable because of poor security issue a news release, provide a software update, and move on.

Several observations:

- The glittering generalities about the security of widely used hardware and software are simply out of step with reality.

- Vendors of specialized software such as intelware suggest that their systems provide “protection” or “warnings” about issues so that damage is minimized. I am not sure I can trust these statements.

- The customers, who may have made security configuration errors, have the responsibility to set up the systems, update, and have trained personnel operate them. That sounds great, but it is simply not going to happen. Customers are assuming what they purchase is secure.

Net net: The cost of security failure is enormous: Loss of life, financial disaster, and undermining the trust between vendor and customer. Perhaps some large outfits should take the security of the products and services they offer beyond a meeting with a PR firm, a crisis management company, or a go-go marketing firm? The “value” of security is high, but it is much more than a flashy booth, glib presentations at conferences, or a procurement team assuming what vendors present correlates with real world deployment.

Stephen E Arnold, May 6, 2024

Generative AI Means Big Money…Maybe

May 6, 2024

Whenever new technology appears on the horizon, there are always optimistic venture capitalists that jump on the idea that it will be a gold mine. While this is occasionally true, other times it’s a bust. Anything can sound feasible on paper, but reality often proves that brilliant ideas don’t work. Medium published Ashish Kakran’s article, “Generative AI: A New Gold Rush For Software Engineering.”

Kakran opens his article asserting the brilliant simplicity of Einstein’s E=mc² formula to inspire readers. He implies that generative AI will revolutionize industries like Einstein’s formula changed physics. He also says that white collar jobs stand to be automated for the first time in history. White collar jobs have been automated or made obsolete for centuries.

Kakran then runs numbers complete with charts and explanations about how generative AI is going to change the world. His diagrams and explanations probably mean something, but they read like white paper gibberish. This part makes sense:

“If you rewind to the year 2008, you will suddenly hear a lot of skepticism about the cloud. Would it ever make sense to move your apps and data from private or colo [cated] data centers to cloud thereby losing fine-grained control. But the development of multi-cloud and devops technologies made it possible for enterprises to not only feel comfortable but accelerate their move to the cloud. Generative AI today might be comparable to cloud in 2008. It means a lot of innovative large companies are still to be founded. For founders, this is an enormous opportunity to create impactful products as the entire stack is currently getting built.”

The author is correct that there are business opportunities to leverage generative AI. Is it a California gold rush? Nobody knows. If you have the funding, expertise, and a good idea then follow it. If not, maybe focusing on a more attainable career is better.

Whitney Grace, May 6, 2024

Microsoft: Security Debt and a Cooked Goose

May 3, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Microsoft has a deputy security officer. Who is it? For reasons of security, I don’t know. What I do know is that our test VPNs no longer work. That’s a good way to enforce reduced security: Just break Windows 11. (Oh, the pushed messages work just fine.)

Is Microsoft’s security goose cooked? Thanks, MSFT Copilot. Keep following your security recipe.

I read “At Microsoft, Years of Security Debt Come Crashing Down.” The idea is that technical debt has little hidden chambers, in this case, security debt. The write up says:

…negligence, misguided investments and hubris have left the enterprise giant on its back foot.

How has Microsoft responded? Great financial report and this type of news:

… in early April, the federal Cyber Safety Review Board released a long-anticipated report which showed the company failed to prevent a massive 2023 hack of its Microsoft Exchange Online environment. The hack by a People’s Republic of China-linked espionage actor led to the theft of 60,000 State Department emails and gained access to other high-profile officials.

Bad? Not as bad as this reminder that there are some concerning issues

What is interesting is that big outfits, government agencies, and start ups just use Windows. It’s ubiquitous, relatively cheap, and good enough. Apple’s software is fine, but it is different. Linux has its fans, but it is work. Therefore, hello Windows and Microsoft.

The article states:

Just weeks ago, the Cybersecurity and Infrastructure Security Agency issued an emergency directive, which orders federal civilian agencies to mitigate vulnerabilities in their networks, analyze the content of stolen emails, reset credentials and take additional steps to secure Microsoft Azure accounts.

The problem is that Microsoft has been successful in becoming for many government and commercial entities the only game in town. This warrants several observations:

- The Microsoft software ecosystem may be impossible to secure due to its size and complexity

- Government entities from America to Zimbabwe find the software “good enough”

- Security — despite the chit chat — is expensive and often given cursory attention by system architects, programmers, and clients.

The hope is that smart software will identify, mitigate, and choke off the cyber threats. At cyber security conferences, I wonder if the attendees are paying attention to Emily Dickinson (the sporty nun of Amherst), who wrote:

Hope is the thing with feathers

That perches in the soul

And sings the tune without the words

And never stops at all.

My thought is that more than hope may be necessary. Hope in AI is the cute security trick of the day. Instead of a happy bird, we may end up with a cooked goose.

Stephen E Arnold, May 3, 2024

Trust the Internet? Sure and the Check Is in the Mail

May 3, 2024

This essay is the work of a dumb humanoid. No smart software involved.

When the Internet became commonplace in schools, students were taught how to use it as a research tool like encyclopedias and databases. Learning to research is better known as information literacy and it teaches critical evaluation skills. The biggest takeaway from information literacy is to never take anything at face value, especially on the Internet. When I read CIRA and Continuum Loop’s report, “A Trust Layer For The Internet Is Emerging: A 2023 Report,” I had my doubts.

CIRA is the Canadian Internet Registration Authority, a non-profit organization that supposedly builds a trusted Internet. CIRA acknowledges that as a whole the Internet lacks a shared framework and tool sets to make it trustworthy. The non-profit states that there are small, trusted pockets on the Internet, but they sacrifice technical interoperability for security and trust.

CIRA released a report about how people are losing faith in the Internet. According to the report’s executive summary, the number of Canadians who trust the Internet fell from 71% to 57% while the entire world went from 74% to 63%. The report also noted that companies with a high trust rate outperform their competition. Then there’s this paragraph:

“In this report, CIRA and Continuum Loop identify that pairing technical trust (e.g., encryption and signing) and human trust (e.g., governance) enables a trust layer to emerge, allowing the internet community to create trustworthy digital ecosystems and rebuild trust in the internet as a whole. Further, they explore how trust registries help build trust between humans and technology via the systems of records used to help support these digital ecosystems. We’ll also explore the concept of registry of registries (RoR) and how it creates the web of connections required to build an interoperable trust layer for the internet.”

Does anyone else hear the TLA for Whiskey Tango Foxtrot in their head? Trusted registries sound like a sales gimmick to verify web domains. There are trusted resources on the Internet but even those need to be fact checked. The companies that have secure networks are Microsoft, TikTok, Google, Apple, and other big tech, but the only thing that can be trusted about some outfits is the fat bank accounts.

Whitney Grace, May 3, 2024

Amazon: Big Bucks from Bogus Books

May 3, 2024

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Anyone who shops for books on Amazon should proceed with caution now that “Fake AI-Generated Books Swarm Amazon.” Good e-Reader’s Navkiran Dhaliwal cites an article from Wired as she describes one author’s somewhat ironic experience:

“In 2019, AI researcher Melanie Mitchell wrote a book called ‘Artificial Intelligence: A Guide for Thinking Humans’. The book explains how AI affects us. ChatGPT sparked a new interest in AI a few years later, but something unexpected happened. A fake version of Melanie’s book showed up on Amazon. People were trying to make money by copying her work. … Melanie Mitchell found out that when she looked for her book on Amazon, another ebook with the same title was released last September. This other book was much shorter, only 45 pages. This book copied Melanie’s ideas but in a weird and not-so-good way. The author listed was ‘Shumaila Majid,’ but there was no information about them – no bio, picture, or anything online. You’ll see many similar books summarizing recently published titles when you click on that name. The worst part is she could not find a solution to this problem.”

It took intervention from WIRED to get Amazon to remove the algorithmic copycat. The magazine had Reality Defender confirm there was a 99% chance it was fake, then contacted Amazon. That finally did the trick. Still, it is unclear whether it is illegal to vend AI-generated “summaries” of existing works and sell them under the original title. Regardless, asserts Mitchell, Amazon should take steps to prevent the practice. Seems reasonable.

And Amazon cares. No, really. Really it does.

Cynthia Murrell, April 29, 2024

Security Conflation: A Semantic Slippery Slope to Persistent Problems

May 2, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

My view is that secrets can be useful. When discussing who has what secret, I think it is important to understand who the players / actors are. When I explain how to perform a task to a contractor in the UK, my transfer of information is a secret; that is, I don’t want others to know the trick to solve a problem that can take others hours or days to resolve. The context is an individual knows something and transfers that specific information so that it does not become a TikTok video. Other secrets are used by bad actors. Some are used by government officials. Commercial enterprises (for example, pharmaceutical companies wrestling with an embarrassing finding from a clinical trial) have their secrets too. Blue-chip consulting firms are bursting with information which is unknown by all but a few individuals.

Good enough, MSFT Copilot. After “all,” you are the expert in security.

I read “Hacker Free-for-All Fights for Control of Home and Office Routers Everywhere.” I am less interested in the details of shoddy security and how it is exploited by individuals and organizations. What troubles me is the use of these words: “All” and “Everywhere.” Categorical affirmatives are problematic in today’s datasphere. The write up treats any entity working for a government and any bad actor intent on committing a crime as cut from the same cloth.

The write up makes two quite different types of behavior identical. The impact of such conflation, in my opinion, is to suggest:

- Government entities are criminal enterprises, using techniques and methods which are in violation of the “law”. I assume that the law is a moral or ethical instruction emitted by some source and known to be a universal truth. For the purposes of my comments, let’s assume the essay’s analysis is responding to some higher authority and anchored on that “universal” truth. (Remember the danger of all and everywhere.)

- Bad actors break laws just like governments; therefore, both are criminals. If true, these people and entities must be punished.

- Some higher authority — not identified in the write up — must step in and bring these evil doers to justice.

The problem is that there is a substantive difference among the conflated bad actors. Those engaged in enforcing laws or protecting a nation state are, one hopes, acting within that specific context; that is, the laws, rules, and conventions of that nation state. When one investigator or analyst seeks “secrets” from an adversary, the reason for the action is, in my opinion, easy to explain: The actor followed the rules spelled out by the context / nation state for which the actor works. If one doesn’t like how France runs its railroad, move to Saudi Arabia. In short, find a place to live where the behaviors of the nation state match up with one’s individual perceptions.

When a bad actor — for example a purveyor of child sexual abuse material on an encrypted messaging application operating in a distributed manner from a country in the Middle East — does his / her business, government entities want to shut down the operation. Substitute any criminal act you want, and the justification for obtaining information to neutralize the bad actor is at least understandable to the child’s mother.

The write up dances into the swamp of conflation in an effort to make clear that the system and methods of good and bad actors are the same. That’s the way life is in the datasphere.

The real issue, however, is not the actors who exploit the datasphere. In my view, the problems begin with:

- Shoddy, careless, or flawed security created and sold by commercial enterprises

- Lax or indifferent practices and false economies on the part of individuals and organizations when dealing with the security of their operating environment

- Failure of regulatory authorities to certify that specific software and hardware meet requirements for security.

How does the write up address fixing the conflation problem, the true root of security issues, and the fact that exploited flaws persist for years? I noted this passage:

The best way to keep routers free of this sort of malware is to ensure that their administrative access is protected by a strong password, meaning one that’s randomly generated and at least 11 characters long and ideally includes a mix of letters, numbers, or special characters. Remote access should be turned off unless the capability is truly needed and is configured by someone experienced. Firmware updates should be installed promptly. It’s also a good idea to regularly restart routers since most malware for the devices can’t survive a reboot. Once a device is no longer supported by the manufacturer, people who can afford to should replace it with a new one.

Right. Blame the individual user. But that individual is just one part of the “problem.” The damage done by conflation and by failing to focus on the root causes remains. Therefore, we live in a compromised environment. Muddled thinking makes life easier for bad actors and harder for those who are charged with enforcing rules and regulations. Okay, mom, change your password.
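For what it is worth, the one concrete instruction in the quoted passage (a randomly generated password of at least 11 characters with a mix of letters, numbers, and special characters) fits in a few lines of Python. This is only a minimal sketch: the function name and the 16-character default are illustrative assumptions, not anything taken from the article or from a router vendor.

```python
import secrets
import string


def generate_router_password(length: int = 16) -> str:
    """Return a random password mixing letters, digits, and punctuation.

    Hypothetical helper: the name and default length are assumptions
    made for illustration only.
    """
    if length < 11:
        # The quoted advice sets 11 characters as the floor.
        raise ValueError("use at least 11 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets draws from the operating system's secure random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until letters, digits, and punctuation all appear.
        if (any(c.isalpha() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


if __name__ == "__main__":
    print(generate_router_password())
```

Some router admin pages reject certain punctuation characters, so the alphabet may need trimming for a given device. And none of this addresses the root causes listed above.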

Stephen E Arnold, May 2, 2024

Search Metrics: One Cannot Do Anything Unless One Finds the Info

May 2, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The search engine optimization crowd bamboozled people with tales of getting to be number one on Google. The SEO experts themselves were tricked. The only way to appear on the first page of search results is to buy an ad. This is the pay-to-play approach to being found online. Now a person cannot do anything, including getting in the building to start one’s first job, without searching. The company sent the future wizard an email with the access code. If the new hire cannot locate the access code, she cannot work without jumping through hoops. Most work or fun is similar. Without an ability to locate specific information online, a person is going to be locked out or just lost in space.

The new employee cannot search her email to locate the access code. No job for her. Thanks, MSFT Copilot, a so-so image without the crazy Grandma says, “You can’t get that image, fatso.”

I read a chunk of content marketing called “Predicted 25% Drop In Search Volume Remains Unclear.” The main idea (I think) is that with generative smart software, a person no longer has to check with Googzilla to get information. In some magical world, a person with a mobile phone will listen as the smart software tells a user what information is needed. Will Apple embrace Microsoft AI or Google AI? Will it matter to the user? Will the number of online queries decrease for Google if Apple decides it loves Redmond types more than Googley types? Nope.

The total number of online queries will continue to go up until the giant search purveyors collapse due to overburdened code, regulatory hassles, or their own ineptitude. But what about the estimates of mid tier consulting firms like Gartner? Hello, do you know that Gartner is essentially a collection of individuals who do the bidding of some work-from-home, self-anointed experts?

Face facts. There is one alleged monopoly controlling search. That is Google. It will take time for an upstart to siphon significant traffic from the constellation of Google services. Even Google’s own incredibly weird approach to managing the company will not be able to prevent people from using the service. Every email search is a search. Every direction in Waze is a search. Every click on a suggested YouTube TikTok knock off is a search. Every click on anything Google is a search. To tidy up the operation, assorted mechanisms for analyzing user behavior provide a fingerprint of users. Advertisers, even if they know they are being given a bit of a casino frippery, have to decide among Amazon, Meta, or, or … Sorry. I can’t think of another non-Google option.

If you want traffic, you can try to pull off a Black Swan event as OpenAI did. But for most organizations, if you want traffic, you pay Google. What about SEO? If the SEO outfit is a Google partner, you are on the Information Highway to Google’s version of Madison Avenue.

But what about the fancy charts and graphs which show Google’s vulnerability? Google’s biggest enemy is Google’s approach to managing its staff, its finances, and its technology. Bing or any other search competitor is going to find itself struggling to survive. Don’t believe me? Just ask the founder of Search2, Neeva, or any other search vendor crushed under Googzilla’s big paw. Unclear? Are you kidding me? Search volume is going to go up until something catastrophic happens. For now, buy Google advertising for traffic. Spend some money with Meta. Use Amazon if you sell fungible things. Google owns most of the traffic. Adjust and quit yapping about some fantasy cooked up by so-called experts.

Stephen E Arnold, May 2, 2024