Amazon Rekognition: Great but…

November 9, 2018

I have been following Amazon’s response to employee demands that the company cut off the US government and put its facial recognition technology on “ice.” The issue is an intriguing one. For example, Rekognition plugs into DeepLens, and DeepLens connects with SageMaker. The construct allows some interesting policeware functions. Ah, you didn’t know that? Some information is available in the October 30 and November 6, 2018, editions of DarkCyber. Want more info? Write benkent2020 at yahoo dot com.

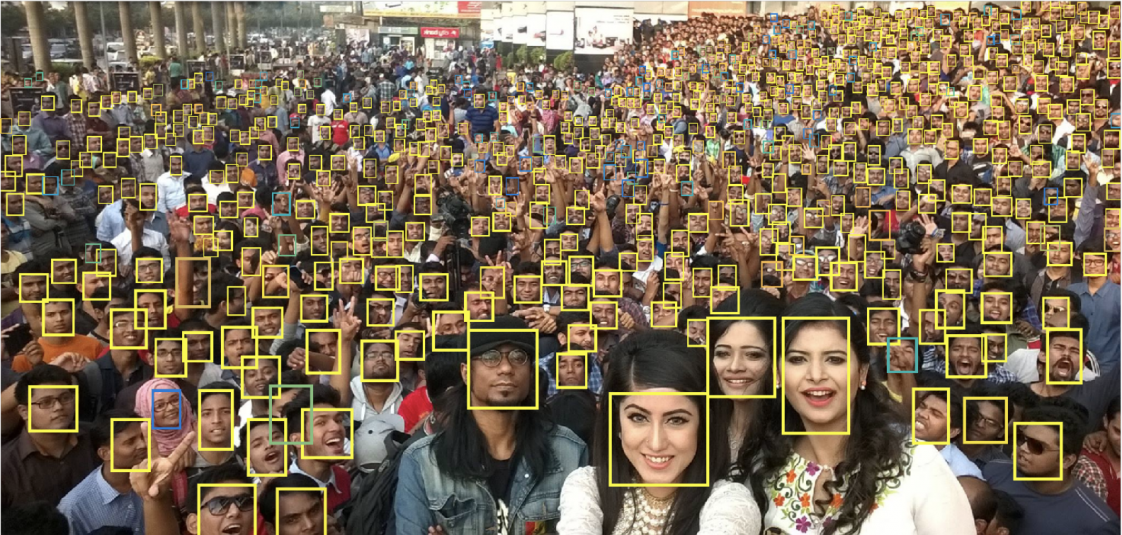

How realistic is 99 percent accuracy? Pretty realistic when one has a single image of adequate resolution and sharpness and a bounded data set against which to compare it.
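Why does a bounded data set matter? Every extra face in the gallery is one more chance for a false hit. The sketch below makes the arithmetic concrete under a loud simplifying assumption: each probe-versus-gallery comparison errs independently with the same fixed false match rate. The rate of 1 in 10,000 is hypothetical, not a Rekognition figure, and the function names are made up for illustration. The 25,000 gallery size echoes the ACLU test mentioned later in the write up.

```python
def prob_at_least_one_false_match(gallery_size: int, false_match_rate: float) -> float:
    """Chance that one probe image falsely matches at least one of gallery_size
    unrelated faces, ASSUMING each comparison errs independently with the same
    (hypothetical) false_match_rate."""
    if not 0.0 <= false_match_rate <= 1.0:
        raise ValueError("false_match_rate must be between 0 and 1")
    return 1.0 - (1.0 - false_match_rate) ** gallery_size


def expected_false_matches(gallery_size: int, false_match_rate: float) -> float:
    """Expected number of false matches per probe under the same assumption."""
    return gallery_size * false_match_rate


if __name__ == "__main__":
    rate = 1e-4  # hypothetical: 1 false match per 10,000 comparisons
    for n in (100, 1_000, 25_000):
        print(
            f"gallery {n:>6}: expected false matches "
            f"{expected_false_matches(n, rate):.2f}, "
            f"P(at least one) {prob_at_least_one_false_match(n, rate):.3f}"
        )
```

The per-comparison error rate stays fixed in every row; only the gallery grows. A small, bounded gallery keeps the expected number of false hits low, while a big one makes at least one false hit per probe close to certain.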

What caught my attention was the “real” news in “Amazon Told Employees It Would Continue to Sell Facial Recognition Software to Law Enforcement.” I am less concerned about the sales to the US government. I was drawn to these verbal perception shifters:

- under fire. [Amazon is taking flak from its employees who don’t want Amazon technology used by LE and similar services.]

- track human beings [The assumption is tracking is bad until the bad actor tracked is trying to kidnap your child, then tracking is wonderful. This is the worst type of situational reasoning.]

- send them back into potentially dangerous environments overseas. [Are Central and South America overseas, gentle reader?]

These are hot buttons.

But I circled in pink this phrase:

Rekognition is research proving the system is deeply flawed, both in terms of accuracy and regarding inherent racial bias.

Well, what does one make of the statement that Rekognition is powerful but has fatal flaws?

Want proof that Rekognition is something more closely associated with Big Lots than Amazon Prime? The write up states:

The American Civil Liberties Union tested Rekognition over the summer and found that the system falsely identified 28 members of Congress from a database of 25,000 mug shots. (Amazon pushed back against the ACLU’s findings in its study, with Matt Wood, its general manager of deep learning and AI, saying in a blog post back in July that the data from its test with the Rekognition API was generated with an 80 percent confidence rate, far below the 99 percent confidence rate it recommends for law enforcement matches.)
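What does the 80 versus 99 percent argument boil down to? A cutoff applied to similarity scores. Rekognition’s API does expose such a cutoff (for example, `FaceMatchThreshold` on `SearchFacesByImage` and `SimilarityThreshold` on `CompareFaces`), but the sketch below does not call AWS. It is a minimal local illustration, and the `Candidate` type, the face identifiers, and the scores are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A hypothetical gallery hit: an identifier plus a 0-100 similarity score."""
    face_id: str
    similarity: float


def filter_matches(candidates, threshold):
    """Keep only candidates scoring at or above threshold, best match first."""
    kept = [c for c in candidates if c.similarity >= threshold]
    return sorted(kept, key=lambda c: c.similarity, reverse=True)


if __name__ == "__main__":
    # Made-up scores for illustration only; these are not Rekognition output.
    hits = [
        Candidate("mugshot-0412", 99.3),
        Candidate("mugshot-1877", 91.0),
        Candidate("mugshot-2290", 84.6),
        Candidate("mugshot-0033", 81.2),
    ]
    for threshold in (80.0, 99.0):
        ids = [c.face_id for c in filter_matches(hits, threshold)]
        print(f"threshold {threshold}: {ids}")
```

Raising the cutoff from 80 to 99 drops three of the four hypothetical candidates. That trims false matches, but it does not make the underlying model any more accurate: a genuine match that happens to score 95 gets discarded too.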

Yeah, 99 percent confidence. Think about that. Pretty reasonable, right? Unfortunately, 99 percent is like the tooth fairy: it exists mostly in a US government spec or Statement of Work. Reality for the vast majority of policeware systems is in the 75 to 85 percent range. Pretty good in my book because these are achievable accuracy percentages. The 99 percent stuff is window dressing and will be for years to come.

Also, Amazon, the Verge points out, is not going to let folks tinker with the Rekognition system to determine how accurate it really is. I learned:

The company has also declined to participate in a comprehensive study of algorithmic bias run by the National Institute of Standards and Technology that seeks to identify when racial and gender bias may be influencing a facial recognition algorithm’s error rate.

Yep, how about those TREC accuracy reports?

My take on this write up is that Amazon is now in the sights of the “real” journalists.

Perhaps the Verge would like Amazon to pull out of the JEDI procurement?

Great idea for some folks.

Perhaps the Verge will dig into the other components of Rekognition and then plot the improvements in accuracy when certain types of data sets are used in the analysis.

Facial recognition is not the whole cloth. Rekognition is one technology thread which needs a context that moves beyond charged language and accuracy rates which are in line with those of other advanced systems.

Amazon’s strength is not facial recognition. The company has assembled a policeware construct. That’s news.

Stephen E Arnold, November 9, 2018