Allegations of Personal Data Flows from X.com to Au10tix

June 4, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I work from my dinobaby lair in rural Kentucky. What the heck do I know about Hod HaSharon, Israel? The answer is, “Not much.” However, I read an online article called “Elon Musk Now Requiring All X Users Who Get Paid to Send Their Personal ID Details to Israeli Intelligence-Linked Corporation.” I am not sure if the statements in the write up are accurate. I want to highlight some items from the write up because I have not seen information about this interesting identity verification process in my other feeds. This could be the second most covered news item in the last week or two. Number one goes to Google’s telling people to eat a rock a day and its weird “not our fault” explanation of its quantumly supreme technology.

Here’s what I carried away from this X to Au10tix write up. (A side note: Intel outfits like obscure names. In this case, Au10tix is a cute conversion of the word authentic to a unique string of characters. Aw ten tix. Get it?)

Yes, indeed. There is an outfit called Au10tix, and it is based about 60 miles north of Jerusalem, not in the intelware capital of the world, Tel Aviv. The company, according to the cited write up, has a deal with Elon Musk’s X.com. The write up asserts:

X now requires new users who wish to monetize their accounts to verify their identification with a company known as Au10tix. While creator verification is not unusual for online platforms, Elon Musk’s latest move has drawn intense criticism because of Au10tix’s strong ties to Israeli intelligence. Even people who have no problem sharing their personal information with X need to be aware that the company they are using for verification is connected to the Israeli government. Au10tix was founded by members of the elite Israeli intelligence units Shin Bet and Unit 8200.

Sounds scary. But that’s the point of the article. I would like to remind you, gentle reader, that Israel’s vaunted intelligence systems failed as recently as October 2023. That event was described to me by one of the country’s former intelligence professionals as “our 9/11.” Well, maybe. I think it made clear that the intelware does not work as advertised in some situations. I don’t have first-hand information about Au10tix, but I would suggest some caution before engaging in flights of fancy.

The write up presents as actual factual information:

The executive director of the Israel-based Palestinian digital rights organization 7amleh, Nadim Nashif, told the Middle East Eye: “The concept of verifying user accounts is indeed essential in suppressing fake accounts and maintaining a trustworthy online environment. However, the approach chosen by X, in collaboration with the Israeli identity intelligence company Au10tix, raises significant concerns. “Au10tix is located in Israel and both have a well-documented history of military surveillance and intelligence gathering… this association raises questions about the potential implications for user privacy and data security.” Independent journalist Antony Loewenstein said he was worried that the verification process could normalize Israeli surveillance technology.

What the write up did not provide is significant detail. The write up reports:

Au10tix has also created identity verification systems for border controls and airports and formed commercial partnerships with companies such as Uber, PayPal and Google.

My team’s research into online gaming found suggestions that the estimable 888 Holdings may have a relationship with Au10tix. The company pops up in some of our research into facial recognition verification. The Israeli gig work outfit Fiverr.com seems to be familiar with the technology as well. I want to point out that one of the Fiverr gig workers based in the UK reported to me that she was no longer “recognized” by the Fiverr.com system. Yeah, October 2023 style intelware.

Who operates the company? Heading back into my files, I spotted a few names. These individuals may no longer be involved in the company, but several names remind me of individuals who have been active in the intelware game for a few years:

- Ron Atzmon: Chairman (Unit 8200, which was not on the ball in October 2023, it seems)

- Ilan Maytal: Chief Data Officer

- Omer Kamhi: Chief Information Security Officer

- Erez Hershkovitz: Chief Financial Officer (formerly of the very interesting intel-related outfit Voyager Labs, a company about which the Brennan Center has a tidy collection of information related to the LAPD)

The company’s technology is available in the Azure Marketplace. That description identifies three core functions of Au10tix’ systems:

- Identity verification. Allegedly the system has real-time identity verification. Hmm. I wonder why it took quite a bit of time to figure out who did what in October 2023. That question is probably unfair because it appears no patrols or systems “saw” what was taking place. But, I should not nit pick. The Azure service includes a “regulatory toolbox including disclaimer, parental consent, voice and video consent, and more.” That disclaimer seems helpful.

- Biometrics verification. Again, this is an interesting assertion. As imagery of the October 2023 event emerged, I asked myself, “How did those ID to selfie, selfie to selfie, and selfie to token matches work?” Answer: Ask the families of those killed.

- Data screening and monitoring. The system can “identify potential risks and negative news associated with individuals or entities.” That might be helpful in building automated profiles of individuals by companies licensing the technology. I wonder if this capability can be hooked to other Israeli spyware systems to provide a particularly helpful, real-time profile of a person of interest?

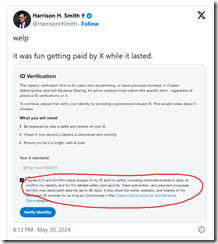

Let’s assume the write up is accurate and X.com is licensing the technology. X.com — according to “Au10tix Is an Israeli Company and Part of a Group Launched by Members of Israel’s Domestic Intelligence Agency, Shin Bet” — now includes this

The circled segment of the social media post says:

I agree to X and Au10tix using images of my ID and my selfie, including extracted biometric data to confirm my identity and for X’s related safety and security, fraud prevention, and payment purposes. Au10tix may store such data for up to 30 days. X may store full name, address, and hashes of my document ID number for as long as I participate in the Creator Subscription or Ads Revenue Share program.
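The consent text mentions “hashes of my document ID number.” Neither the write up nor the consent text says how X computes those hashes, so what follows is only a minimal sketch of one common approach, a keyed hash (HMAC with SHA-256). The key, the function names, and the sample ID number are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret key (an assumption for this sketch); a real service
# would keep it outside the code, in a key management system.
SECRET_KEY = b"example-key-not-for-production"


def hash_document_id(doc_id: str, key: bytes = SECRET_KEY) -> str:
    """Return a keyed SHA-256 digest of a normalized document ID number."""
    # Normalize so "ab 123-456" and "AB123456" produce the same digest.
    normalized = "".join(ch for ch in doc_id.upper() if ch.isalnum())
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def matches(doc_id: str, stored_digest: str, key: bytes = SECRET_KEY) -> bool:
    """Compare a presented ID number with a stored digest in constant time."""
    return hmac.compare_digest(hash_document_id(doc_id, key), stored_digest)


# Made-up ID number, used only to show the round trip.
stored = hash_document_id("AB 123-456")
```

The sketch uses a key because ID numbers are short. A plain, unkeyed hash of a short number can be reversed by anyone who obtains the table and is willing to try every possible value.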

This dinobaby followed the October 2023 event with shock and surprise. The dinobaby has long been a champion of Israel’s intelware capabilities, and I have done some small projects for firms which I am not authorized to identify. Now I am skeptical and more critical. What if X’s identity service is compromised? What if the servers are breached and the data exfiltrated? What if the system does not work and downstream financial fraud is enabled by X’s push beyond short text messaging? Much intelware is little more than glorified and old-fashioned search and retrieval.

Does Mr. Musk or other commercial purchasers of intelware know about cracks and fissures in intelware systems which allowed the October 2023 event to be undetected until live-fire reports arrived? This tie up is interesting and is worth monitoring.

Stephen E Arnold, June 4, 2024

Facial Recognition: Not As Effective As Social Recognition

January 8, 2021

Facial recognition is a sub-function of image analysis. For some time, I have bristled at calls for terminating research into this important application of algorithms intended to identify, classify, and make sense of patterns. Many facial recognition systems return false positives for reasons ranging from lousy illumination to people wearing glasses with flashing LED lights.

I noted “The FBI Asks for Help Identifying Trump’s Terrorists. Internet (and Local News) Doesn’t Disappoint.” The article makes it clear that facial recognition by smart software may not be as effective as social recognition. The write up says:

There is also Elijah Schaffer, a right-wing blogger on Glenn Beck’s BlazeTV, who posted incriminating evidence of himself in Nancy Pelosi’s office and then took it down when he realized that he posted himself breaking and entering into Speaker of the House Nancy Pelosi’s office. But screenshots are a thing.

What’s clear is that technology cannot do what individuals posting to their social media accounts can do or what individuals who can say “Yeah, I know that person” deliver.

Technology for image analysis is advancing, but I will be the first to admit that 75 to 90 percent accuracy falls short of a human-centric system which can provide:

- Name

- Address

- Background details

- Telephone and other information.

Two observations: First, social recognition is at this time better, faster, and cheaper than Fancy Dan image recognition systems. Second, image recognition is more than a way to identify a person robbing a convenience store. Medical, military, and safety applications are in need of advanced image processing systems. Let the research and testing continue without delay.

Stephen E Arnold, January 8, 2021

PimEyes Brings Facial Recognition to the Masses

December 18, 2020

If a search engine based on facial recognition is controversial when in the hands of law enforcement, it is downright scary when made available to the general public for free. However, it comes as no surprise to those of us who follow such things that PetaPixel reveals, “This Creepy Face Search Engine Scours the Web for Photos of Anyone.” Officially marketed as a way for users to protect their own privacy, PimEyes uses facial recognition technology to hunt down photos of anyone across the Web. The basic, one-time search is free, but for an extra $15 one can receive up to 25 alerts a month as the service searches perpetually. Reporter Michael Zhang writes:

“After you provide one or more photos of a person (in which their face is clearly visible), PimEyes compares that person to faces found on millions of public websites — things like news articles, blogs, social media, and more. Within a few seconds, it provides results showing other photos found that match the person and links to where those portraits were found. … Google’s popular reverse image search can find photos similar in appearance to images you provide, but PimEyes specifically uses facial recognition and can accept multiple reference photos to find images of specific individuals.”

The brief write-up cites this OneZero article. It also shares an example search featuring the lovely, and often photographed, Meghan Markle. Based in Poland, PimEyes was created in 2017 and commercialized in 2019.

Cynthia Murrell, December 18, 2020

Fujitsu Simplifies, Reduces Costs of Preventing Facial Authentication Fraud

September 25, 2020

Fujitsu says it has developed a cost-effective way to thwart attempts to fool facial recognition systems, we learn from IT-Online’s write-up, “Fujitsu Overcomes Facial Authentication Fraud.” The same factor that makes facial authentication systems more convenient than other verification methods, like images of fingerprints or palm veins, also makes them more vulnerable to fraud—photos of faces are easy to capture and reproduce. We’re told:

“Fujitsu Laboratories has developed a facial recognition technology that uses conventional cameras to successfully identify efforts to spoof authentication systems. This includes impersonation attempts in which a person presents a printed photograph or an image from the internet to a camera. Conventional technologies rely on expensive, dedicated devices like near-infrared cameras to identify telltale signs of forgery, or the user is required to move their face from side to side, which remains difficult to duplicate with a forgery. This leads to increased costs, however, and the need for additional user interaction slows the authentication process. To tackle these challenges, Fujitsu has developed a forgery feature extraction technology that detects the subtle differences between an authentic image and a forgery, as well as a forgery judgment technology that accounts for variations in appearance due to the capture environment. … Fujitsu believes that, by using these technologies, it becomes possible to identify counterfeits using only the information of face images taken by a general-purpose camera and to realize relatively convenient and inexpensive spoofing detection.”

We’re told the company tested the system in a real-world office/telecommuting setting and confirmed it works as desired. Fujitsu hopes the technology will prove popular as remote work continues and, possibly, grows. The venerable global information and communication tech firm serves many prominent companies in several industries. Based in Tokyo, Fujitsu has been operating since 1935.

Cynthia Murrell, September 25, 2020

Defeating Facial Recognition: Chasing a Ghost

August 12, 2020

The article hedges. Check the title: “This Tool could Protect Your Photos from Facial Recognition.” Notice the “could”. The main idea is that people do not want their photos analyzed and indexed with the name, location, state of mind, and other index terms. I am not so sure, but the write up explains with that “could” coloring the information:

The software is not intended to be just a one-off tool for privacy-loving individuals. If deployed across millions of images, it would be a broadside against facial recognition systems, poisoning the accuracy of the data sets they gather from the Web.

So facial recognition = bad. Screwing up facial recognition = good.

There’s more:

“Our goal is to make Clearview go away,” said Dr Ben Zhao, a professor of computer science at the University of Chicago.

Okay, a company is a target.

How’s this work? The write up says:

Fawkes converts an image — or “cloaks” it, in the researchers’ parlance — by subtly altering some of the features that facial recognition systems depend on when they construct a person’s face print.

Several observations:

- In the event of a problem like the explosion in Lebanon, maybe facial recognition can identify some of those killed.

- Law enforcement may find narrowing a pool of suspects to a smaller group may enhance an investigative process.

- Unidentified individuals who are successfully identified “could” add precision to Covid contact tracking.

- Applying the technology to differentiate “false” positives from “true” positives in some medical imaging activities may be helpful in some medical diagnoses.

My concern is that technical write ups are often little more than social polemics. Examining the upside and downside of an innovation is important. Converting a technical process into a quest to “kill” a company, a concept, or an application of technical processes is not helpful in DarkCyber’s view.

Stephen E Arnold, August 12, 2020

Wolfcom, Body Cameras, and Facial Recognition

April 5, 2020

Facial recognition is a controversial topic and is becoming more so as the technology advances. Top weapons and security companies will not go near facial recognition software due to the cans of worms it would open. Law enforcement agencies want these companies to add it. Wolfcom is actually adding facial recognition to its cameras. Techdirt has the scoop on the story, “Wolfcom Decides It Wants To Be The First US Body Cam Company To Add Facial Tech To Its Products.”

Wolfcom makes body cameras for law enforcement, and it wants to add facial recognition technology to its products. Currently Wolfcom is developing facial recognition for its newest body cam, Halo. About 1,500 police departments have purchased Wolfcom’s body cameras.

If Wolfcom is successful with its facial recognition development, it would be the first company to have body cameras that use the technology. The technology is still in development according to Wolfcom’s marketing. Right now, their facial recognition technology rests on taking individuals’ photos, then matching them against a database. The specific database is not mentioned.
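The write up says only that photos are matched against a database. One generic way such matching is often done is to reduce each face to a list of numbers and compare the lists. Here is a minimal sketch of that idea. The three-number vectors, the names, and the 0.9 threshold are made up for illustration, and nothing here reflects Wolfcom’s actual algorithm, which the article says is unknown.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(probe, gallery, threshold=0.9):
    """Return (name, score) for the closest gallery entry, or (None, score)
    when even the best score falls below the threshold."""
    name, score = max(
        ((n, cosine_similarity(probe, v)) for n, v in gallery.items()),
        key=lambda pair: pair[1],
    )
    return (name, score) if score >= threshold else (None, score)


# Made-up "face vectors" (hypothetical); production systems typically
# use vectors with many more dimensions.
gallery = {
    "person_a": [0.9, 0.1, 0.3],
    "person_b": [0.1, 0.8, 0.5],
}

hit = best_match([0.88, 0.12, 0.31], gallery)   # close to person_a
miss = best_match([0.5, 0.5, 0.5], gallery)     # close to nobody
```

The threshold is where the trouble lives. Set it low and the system returns more false positives; set it high and it misses people who are in the database.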

Wolfcom obviously wants to be an industry leader, but it is also being careful about not making false promises or drumming up bad advertising:

“About the only thing Wolfcom is doing right is not promising sky high accuracy rate for its unproven product when pitching it to government agencies. That’s the end of the “good” list. Agencies who have been asked to beta test the “live” facial recognition AI are being given free passes to use the software in the future, when (or if) it actually goes live. Right now, Wolfcom’s offering bears some resemblance to Clearview’s: an app-based search function that taps into whatever databases the company has access to. Except in this case, even less is known about the databases Wolfcom uses or if it’s using its own algorithm or simply licensing one from another purveyor.”

Wolfcom could eventually offer realtime facial recognition technology and that could affect some competitors.

Whitney Grace, April 5, 2020

Facial Recognition: Those Error Rates? An Issue, Of Course

February 21, 2020

DarkCyber read “Machines Are Struggling to Recognize People in China.” The write up asserts:

The country’s ubiquitous facial recognition technology has been stymied by face masks.

One of the unexpected consequences of the Covid 19 virus is that citizens with face masks cannot be recognized.

“Unexpected” when adversarial fashion has been getting some traction among those who wish to move anonymously.

The write up adds:

Recently, Chinese authorities in some provinces have made medical face masks mandatory in public and the use and popularity of these is going up across the country. However, interestingly, as millions of masks are now worn by Chinese people, there has been an unintended consequence. Not only have the country’s near ubiquitous facial-recognition surveillance cameras been stymied, life is reported to have become difficult for ordinary citizens who use their faces for everyday things such as accessing their homes and bank accounts.

Now an “admission” by a US company:

Companies such as Apple have confirmed that the facial recognition software on their phones need a view of the person’s full face, including the nose, lips and jaw line, for them to work accurately. That said, a race for the next generation of facial-recognition technology is on, with algorithms that can go beyond masks. Time will tell whether they work. I bet they will.

To sum up: Masks defeat facial recognition. The future is a method of identification that can work with what is not covered plus any other data available to the system; for example, pattern of walking and geo-location.
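What might “what is not covered plus any other data” look like in practice? A minimal sketch, assuming each signal (uncovered part of the face, pattern of walking, geo-location) has already been turned into a score between 0 and 1. The signal names, the weights, and the scores are hypothetical; the write up describes no specific method.

```python
def fused_score(scores, weights):
    """Weighted average over the signals that are present (not None)."""
    present = {k: s for k, s in scores.items() if s is not None}
    total_weight = sum(weights[k] for k in present)
    if total_weight == 0:
        return 0.0  # nothing to go on
    return sum(weights[k] * s for k, s in present.items()) / total_weight


# Hypothetical weights: the visible part of the face still counts most.
weights = {"upper_face": 0.5, "gait": 0.3, "geo": 0.2}

# A masked person: weak face score, stronger walking and location scores.
masked = {"upper_face": 0.6, "gait": 0.9, "geo": 0.8}
score = fused_score(masked, weights)

# Same person when no walking data is available.
no_gait = fused_score({"upper_face": 0.6, "gait": None, "geo": 0.8}, weights)
```

The design choice is to renormalize over whatever signals exist, so a missing signal neither helps nor hurts the person being scored.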

For now, though, the remedy for the use of masks is lousy facial recognition and more effort to find innovations.

The author of the write up is a — wait for it — venture capital professional. And what country leads the world in facial recognition? China, according to the VC professional.

The future is better person recognition of which the face is one factor.

Stephen E Arnold, February 21, 2020

Easy Facial Recognition

February 11, 2020

DarkCyber spotted a Twitter thread. You can view it here (verified on February 8, 2020). The main point is that using open source software, an individual was able to obtain (scrape; that is, copy) images from publicly accessible services. Then the images were “processed.” The idea was to identify a person from an image. Net net: People can object to facial recognition, but once a technology migrates from “little known” to publicly available, there may be difficulty putting the tech cat back in the black bag.

Stephen E Arnold, February 11, 2020

The Clearview Write Up: A Great Quote

January 20, 2020

DarkCyber does not want to join in the hand waving about the facial recognition company called Clearview. Instead, we want to point out that the article is available without a pay wall from this link: https://bit.ly/2TO26H1

Also, the write up contains a great quote about technology like facial recognition. Here it is:

It’s creepy what they’re doing, but there will be many more of these companies. There is no monopoly on math.—Al Gidari, a privacy professor at Stanford Law School

DarkCyber wants to point out that a number of companies have gathered collections of images from a wide range of sources. The write up points to investors who may or may not be the power grid behind this particular technology application.

The inventor fits a stereotype: College drop out, long hair, etc.

The write up also identifies officers who allegedly found the database of images and the services helpful.

The New York Times continues to report on specialized technology. There are upsides and downsides to the information. One upside is that the write ups inform people about technology and its utility. The downside is that the information presented may generate a situation in which individuals can be put at risk or a negative tint given to something that is applied math and publicly accessible data.

It is interesting to consider combining services; for example, brand monitoring and image search. Perhaps that is another story for the New York Times?

Stephen E Arnold, January 20, 2020

New Chinese Facial Recognition Camera Reduces False Positives

January 19, 2020

In a move that should surprise nobody, China has created the ultimate facial recognition hardware. The Telegraph reports, “China Unveils 500 Megapixel Camera that Can Identify Every Face in a Crowd of Tens of Thousands.” Researchers revealed the “super camera,” which can see four times more detail than the human eye, at China’s International Industry Fair. Of course, no surveillance tech is complete without an AI; writer Freddie Hayward tells us:

“The camera’s artificial intelligence will be able to scan a crowd and identify an individual within seconds. Samantha Hoffman, an analyst at the Australian Strategic Policy Institute, told the ABC that the government has massive databases of people’s images and that data generated from surveillance video can be ‘fed into a pool of data that, combined with AI processing, can generate tools for social control, including tools linked to the Social Credit System’.”

Yes, the Social Credit System. China is no stranger to spying on its people, and this development will only make their current practices more effective. We learn:

“China currently has an estimated 200 million CCTV cameras watching over its citizens. For the past few years the country has been building a social credit system that will generate a score for each citizen based upon data about their lives, such as their credit score, whether they donate to charity, and their parenting ability. Punishments and rewards that citizens will receive based upon their score include access to better schools and universities and restricted travel. The current CCTV network is a central tool in gathering data about its citizens, but the cameras aren’t always powerful enough to take a clear picture of someone’s face in a crowd. The new 500 megapixel, or 500 million pixel, camera will help to remedy this.”

Indeed it will. I suppose if you are going to build a social system around snooping on the people, it should be as accurate as possible. You wouldn’t want to keep one citizen out of a good school because someone who looked like them was caught littering.

Cynthia Murrell, January 19, 2020