Backpressure: A Bit of a Problem in Enterprise Search in 2024

March 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have noticed numerous references to search and retrieval in the last few months. Most of these articles and podcasts focus on making an organization’s data accessible. That’s the same old story told since the days of STAIRS III and other dinobaby artifacts. The gist of the flow of search-related articles is that information is locked up or silo-ized. Using a combination of “artificial intelligence,” “open source” software, and powerful computing resources — problem solved.

A modern enterprise search content processing system struggles to keep pace with the changes to already processed content (the deltas) and the flow of new content in a wide range of file types and formats. Thanks, MSFT Copilot. You have learned from your experience with Fast Search & Transfer file indexing it seems.

The 2019 essay “Backpressure Explained — The Resisted Flow of Data Through Software” is pertinent in 2024. The essay, written by Jay Phelps, states:

The purpose of software is to take input data and turn it into some desired output data. That output data might be JSON from an API, it might be HTML for a webpage, or the pixels displayed on your monitor. Backpressure is when the progress of turning that input to output is resisted in some way. In most cases that resistance is computational speed — trouble computing the output as fast as the input comes in — so that’s by far the easiest way to look at it.

Mr. Phelps identifies several types of backpressure. These are:

- More info to be processed than a system can handle

- Reading and writing file speeds are not up to the demand for reading and writing

- Communication “pipes” between and among servers are too small, slow, or unstable

- A group of hardware and software components cannot move data where it is needed fast enough.

I have simplified his more elegantly expressed points. Please, consult the original 2019 document for the information I have hip hopped over.
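For readers who want the first of these points in concrete form, here is a minimal Python sketch. It is not from Mr. Phelps's essay. The `run_pipeline` function, the queue size, and the simulated indexing delay are all assumptions made for illustration. A crawler hands documents to a slower indexer through a bounded queue; when the queue fills up, the crawler is forced to wait. That wait is the backpressure.

```python
import queue
import threading
import time


def run_pipeline(num_docs=20, queue_size=5, index_delay=0.001):
    """Push num_docs through a bounded queue; return (indexed, max_depth)."""
    # The bound is the whole point: a full queue blocks the producer.
    pending = queue.Queue(maxsize=queue_size)
    indexed = []
    max_depth = 0

    def indexer():
        while True:
            doc = pending.get()
            if doc is None:  # sentinel: the crawler has finished
                break
            time.sleep(index_delay)  # stand-in for slow content processing
            indexed.append(doc)

    worker = threading.Thread(target=indexer)
    worker.start()
    for i in range(num_docs):
        pending.put(f"doc-{i}")  # blocks while the queue is full
        max_depth = max(max_depth, pending.qsize())
    pending.put(None)
    worker.join()
    return indexed, max_depth


if __name__ == "__main__":
    docs, depth = run_pipeline()
    print(len(docs), "documents indexed; deepest backlog:", depth)
```

The design choice worth noticing is that the backlog never grows past the bound. The alternative, an unbounded queue, hides the problem until memory runs out or the index falls days behind the content.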

My point is that in the chatter about enterprise search and retrieval, there are a number of situations (use cases to those non-dinobabies) which create some interesting issues. Let me highlight these and then wrap up this short essay.

In an enterprise, the following situations exist and are often ignored or dismissed as irrelevant. When people pooh pooh my observations, it is clear to me that these people have [a] never been subject to a legal discovery process associated with enterprise search fraud and [b] are entitled whiz kids who don’t do too much in the quite dirty, messy, “real” world. (I do like the variety in T shirts and lumberjack shirts, however.)

First, in an enterprise, content changes. These “deltas” are a giant problem. I know that none of the systems I have examined, tested, installed, or advised on has a procedure to identify, in anything close to real time, a change made to a PowerPoint, presented to a client, and converted to an email confirming a deal, price, or technical feature. In fact, no one may know until the president’s laptop is examined by an investigator who discovers the “forgotten” information. Even more exciting is when the opposing legal team’s review of a laptop dump as part of a discovery process “finds” the sequence of messages and connects the dots. Exciting, right? But “deltas” pose another problem. These modified content objects proliferate like gerbils. One can talk about information governance, but it is just that: talk, meaningless jabber.
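To show what the simplest form of delta detection looks like, here is a hedged Python sketch. The function names `fingerprint` and `find_deltas` and the file names in the example are hypothetical, not taken from any product. The idea is to store a hash of each document at crawl time and compare it with a fresh hash on the next pass.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return a SHA-256 digest used to notice that content changed."""
    return hashlib.sha256(content).hexdigest()


def find_deltas(previous: dict, current: dict) -> dict:
    """Compare the last crawl with the current one.

    previous maps path -> fingerprint stored at the last crawl.
    current maps path -> raw bytes seen in this crawl.
    """
    new, changed = [], []
    for path, content in current.items():
        if path not in previous:
            new.append(path)
        elif previous[path] != fingerprint(content):
            changed.append(path)
    deleted = [path for path in previous if path not in current]
    return {
        "new": sorted(new),
        "changed": sorted(changed),
        "deleted": sorted(deleted),
    }


if __name__ == "__main__":
    last_crawl = {"deck.pptx": fingerprint(b"price: 100")}
    this_crawl = {"deck.pptx": b"price: 90", "deal.eml": b"confirming 90"}
    print(find_deltas(last_crawl, this_crawl))
```

Note what the sketch cannot do. It sees only content the crawler can reach, and only when the crawler runs. The PowerPoint on the president's laptop and the email derived from it remain invisible, which is the problem described above.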

Second, the content which an employee needs to answer a business question in a timely manner can reside in an employee’s laptop or a mobile phone, a digital notebook, in a Vimeo video or one of those nifty “private” YouTube videos, or behind the locked doors and specialized security systems loved by some pharma company’s research units, a Word document in something other than English, etc. Now the content is changed. The enterprise search fast talkers ignore identifying and indexing these documents with metadata that pinpoints the time of the change and who made it. Is this important? Some contract issues require this level of information access. Who asks for this stuff? How about a COTR for a billion dollar government contract?

Third, I have heard and read that modern enterprise search systems “use,” “apply,” or “operate within” industry standard authentication systems. Sure they do, within very narrowly defined situations. If the authorization system does not work, then quite problematic things happen. Examples range from an employee who fails to find the information needed and makes a really bad decision to an employee who goes on an Easter egg hunt which may or may not work; if the egg found is good enough, then that’s used. What happens? Bad things can happen. Have you ridden in an old Pinto? Access control is a tough problem, and it costs money to solve. Enterprise search solutions, even the whiz bang cloud centric distributed systems, implement something, which is often not the “right” thing.
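One common way to describe the access control piece is “security trimming”: filter each result against the groups the user belongs to before showing anything. The Python sketch below is an illustration under stated assumptions. The `Doc` class, the `allowed_groups` field, and the `search` function are hypothetical names, and the substring match stands in for a real query engine.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str
    # Groups permitted to see this document (hypothetical ACL field).
    allowed_groups: frozenset = frozenset()


def search(docs, query, user_groups):
    """Return ids of matching documents the user is allowed to see."""
    user_groups = set(user_groups)
    hits = []
    for doc in docs:
        if query.lower() not in doc.text.lower():
            continue
        # Deny by default: a document with no ACL is hidden, not public.
        if doc.allowed_groups & user_groups:
            hits.append(doc.doc_id)
    return hits


if __name__ == "__main__":
    corpus = [
        Doc("comp", "Salary bands 2024", frozenset({"hr"})),
        Doc("deck", "Salary survey summary", frozenset({"hr", "sales"})),
        Doc("orphan", "Salary notes"),
    ]
    print(search(corpus, "salary", {"sales"}))
```

The filter itself is the easy part. The expensive part is keeping `allowed_groups` in step with the source repositories as permissions change, which is the deltas problem again in a different costume.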

Fourth, and I am going to stop here, there is the problem of end-to-end encrypted messaging systems. If you think employees do not use these, I suggest you do a bit of Easter egg hunting. What about the content in those systems? You can tell me, “Our company does not use these.” I say, “Fine. I am a dinobaby, and I don’t have time to talk with you because you are so much more informed than I am.”

Why did I romp through this rather unpleasant issue in enterprise search and retrieval? The answer is, “Enterprise search remains a problematic concept.” I believe there is some litigation underway about how the problem of search can morph into a fantasy of a huge business because “we have a solution.”

Sorry. Not yet. Marketing and closing deals are different from solving findability issues in an enterprise.

Stephen E Arnold, March 27, 2024

HP Autonomy: A Modest Disagreement Escalates

May 15, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

About 12 years ago, Hewlett Packard acquired Autonomy. The deal was, as I understand the deal, HP wanted to snap up Autonomy to make a move in the enterprise services business. Autonomy was one of the major providers of search and some related content processing services in 2010. Autonomy’s revenues were nosing toward $800 million, a level no other search and retrieval software company had previously achieved.

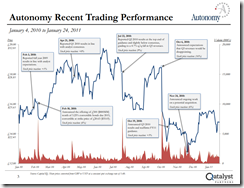

However, as Qatalyst Partners reported in an Autonomy profile, the share price was not exactly hitting home runs each quarter:

Source: Autonomy Trading and Financial Statistics, 2011 by Qatalyst Partners

After some HP executive turmoil, the deal was done. After a year or so, HP analysts determined that the Silicon Valley company paid too much for Autonomy. The result was high profile litigation. One Autonomy executive found himself losing and suffering the embarrassment of jail time.

“Autonomy Founder Mike Lynch Flown to US for HPE Fraud Trial” reports:

Autonomy founder Mike Lynch has been extradited to the US under criminal charges that he defrauded HP when he sold his software business to them for $11 billion in 2011. The 57-year-old is facing allegations that he inflated the books at Autonomy to generate a higher sale price for the business, the value of which HP subsequently wrote down by billions of dollars.

Although I did some consulting work for Autonomy, I have no unique information about the company, the HP allegations, or the legal process which will unspool in the US.

In a recent conversation with a person who had first hand knowledge of the deal, I learned that HP was disappointed with the Autonomy approach to business. I pushed back and pointed out three things to a person who was quite agitated that I did not share his outrage. My points, as I recall, were:

- A number of search-and-retrieval companies failed to generate revenue sufficient to meet their investors’ expectations. These included outfits like Convera (formerly Excalibur Technologies), Entopia, and numerous other firms. Some were sold and were operated as reasonably successful businesses; for example, Dassault Systèmes and Exalead. Others were folded into a larger business; for example, Microsoft’s purchase of Fast Search & Transfer and Oracle’s acquisition of Endeca. The period from 2008 to 2013 was particularly difficult for vendors of enterprise search and content processing systems. I documented these issues in The Enterprise Search Report and a couple of other books I wrote.

- Enterprise search vendors and some hybrid outfits which developed search-related products and services used bundling as a way to make sales. The idea was not new. IBM refined the approach. Buy a mainframe and get support free for a period of time. Then the customer could pay a license fee for the software and upgrades and pay for services. IBM charged me $850 to roll a specialist to look at my three out-of-warranty PC 704 servers. (That was the end of my reliance on IBM equipment and its marvelous ServeRAID technology.) Libraries, for example, could acquire hardware. The “soft” components had a different budget cycle. The solution? Split up the deal. I think Autonomy emulated this approach and added some unique features. Nevertheless, the market for search and content related services was and is a difficult one. Fast Search & Transfer had its own approach. That landed the company in hot water and the founder on the pages of newspapers across Scandinavia.

- Sales professionals could generate interest in search and content processing systems by describing the benefits of finding information buried in a company’s file cabinets, tucked into PowerPoint presentations, and sleeping peacefully in email. Like the current buzz about OpenAI and ChatGPT, expectations are loftier than the reality of some implementations. Enterprise search vendors like Autonomy had to deal with angry licensees who could not find information, heated objections to the cost of reindexing content to make it possible for employees to find the file saved yesterday (an expensive and difficult task even today), and howls of outrage because certain functions had to be coded to meet the specific content requirements of a particular licensee. Remember that a large company does not need one search and retrieval system. There are many, quite specific requirements. These range from engineering drawings in the R&D center to the super sensitive employee compensation data, from the legal department’s need to process discovery information to the mandated classified documents associated with a government contract.

These issues remain today. Autonomy is now back in the spotlight. The British government, as I understand the situation, is not chasing Dr. Lynch for his methods. HP and the US legal system are.

The person with whom I spoke was not interested in my three points. He has a Harvard education and I am a geriatric. I will survive his anger toward Autonomy and his obvious affection for the estimable HP, its eavesdropping Board and its executive revolving door.

What few recall is that Autonomy was one of the first vendors of search to use smart software. The implementation was described as Neuro Linguistic Programming. Like today’s smart software, the functioning of the Autonomy core technology was a black box. I assume the litigation will expose this Autonomy black box. Is there a message for the ChatGPT-type outfits blossoming at a prodigious rate?

Yes, the enterprise search sector is about to undergo a rebirth. Organizations have information. Findability remains difficult. The fix? Merge ChatGPT type methods with an organization’s content. What do you get? A party which faded away in 2010 is coming back. The Beatles and Elvis vibe will be live, on stage, act fast.

Stephen E Arnold, May 15, 2023

The Mysterious Knowledge Management and Enterprise Search Magic Is Coming Back

March 23, 2023

Note: This post was written by a real, still alive dinobaby. No smart software needed yet.

In the glory days of pre-indictment enterprise search innovators, some senior managers worried that knowledge loss would cost them. The fix, according to some of the presentations I endured, was to use an enterprise search system from one of the then-pre-eminent vendors. No, I won’t name them, but you can hunt for a copy of my Enterprise Search Report (there are three editions of the tome) and check out the companies’ technology which I analyzed.

The glory days, 2nd edition is upon us if I understand “A Testing Environment for AI and Language Models.”

Not having information generates “digital friction.” I noted this passage:

According to a recent survey of 1,000 IT managers at large enterprises, 67% expressed concern over the loss of knowledge and expertise when employees leave the company. The cost of knowledge loss and inefficient knowledge sharing is significant, with IDC estimating that Fortune 500 companies lose approximately $31.5 billion each year by failing to share knowledge. This figure is particularly alarming, given the current uncertain economic climate. By improving information search and retrieval tools, a Fortune 500 company with 4,000 employees could save roughly $2 million per month in lost productivity. Intelligent enterprise search is a critical tool that can help prevent information islands and enable organizations to effortlessly find, surface, and share knowledge and corporate expertise. Seamless access to knowledge and expertise within the digital workplace is essential. The right enterprise search platform can connect workers to knowledge and expertise, as well as connect disparate information silos to facilitate discovery, innovation, and productivity.

Yes, the roaring 2000s all over again.

The only question I have is what start up will be the “new” Autonomy, Delphi, Entopia, Fast Search & Transfer, Grokker, Klevu, or Uniqa, et al? Which of the 2nd generation of enterprise search systems will have an executive accused of financial Fancy Dancing? What will the 2nd edition’s buzzwords do to surf on AI/ML, neural nets, and deep learning?

Exciting. Will these new systems solve the problem of employees’ quitting and taking their know how and “knowledge” with them? Sure. (Why should I be the one to suggest that investors’ dreams could be like Silicon Valley Bank’s risk management methods?) And what about “knowledge”? No problem, of course.

Stephen E Arnold, March 23, 2023

Enterprise Search: Bold Predictions and a Massive Infowarp

July 12, 2022

Writing about enterprise search was a “thing” in the mid to late 2000s. There were big deals. Microsoft bought Fast Search & Transfer amid an investigation into the firm’s financial methods. Then the Autonomy acquisition happened, and, as you may know, that saga continues to unfold. Vivisimo was acquired by IBM, and its rather useful clustering and metasearch system disappeared into the outstanding management environment of Big Blue. Enterprise search vendors flipped and pivoted: Some became customer support systems. Others morphed into smart news. A few from the Golden Age of Search hung in, and these firms are still pitching enterprise search but with a Silicon Valley, New Era spin.

I read “Enterprise Search Market to Witness Massive Growth by 2028: IBM Corporation, Lucid Work Incorporation [not the well funded name of the outfit, however], Microsoft Corporation, Dassault System” [not the correct spelling of the firm’s name]. How much can one trust a write up which misspells the names of the companies subjected to an intensive analysis process?

My answer is, “Not at all.”

Let’s take a look at some of the information in the write up.

The list of vendors included in the report is:

Attivio Software Incorporation

Coveo Corporation

Dassault Systems S.A. [The accepted spelling is Dassault Systèmes]

IBM Corporation

Lucid Work Incorporation. [Wow, the name of the company is LucidWorks. Pretty careless.]

Microsoft Corporation

SAP AG

Oracle Corporation

X1 Technologies Inc.

Okay, the names of some of the companies are incorrect. Bad.

Second, I loved this passage:

The research covers the most recent information about current events. This information is useful for businesses planning to produce significantly improved things, as well as for customers gaining an idea of what will be available in the future.

I have zero clue what this quoted passage means. Current events to me and many others involve the financial crisis, Russia’s non war war, and assorted pandemics. Monkeypox. Boo!

Third, did you notice that the vendor providing search and retrieval to numerous companies and to many vendors is not included in the report? I am referring to Elastic, cheerleader for the widely popular Elasticsearch. Why omit the vendor with many installations? I can see skipping over Algolia, Sinequa, and Yext, among others. But Elastic? Yikes.

Here’s my take on this report:

- I am not sure it will be useful

- I don’t see an indication that the features of the specific search engines are compared, contrasted, and evaluated. Oracle has a number of search solutions. Will these be evaluated or will the analysts focus on structured query language, ignoring Endeca and other systems the firm owns?

- Misspellings are easy to make with smart software helpfully replacing words automatically. However, getting the company names wrong is a red light.

Net net: Enterprise search will indeed witness – that is, be an observer of rapid growth in certain software sectors – I just think that enterprise search is now a utility. More modern methods of fusing and locating high value information are available. Buying a report which describes ageing dinosaurs may not be a prudent use of available funds.

Stephen E Arnold, July 12, 2022

SeMI: Yet Another Smart Search System

May 2, 2022

Once upon a time, search engines were incapable of understanding queries phrased like a question. With the advent of smarter technology, particularly machine learning and AI, search engines are almost as smart as a human. TechCrunch discusses how one company has created its take on smart search: “SeMI Technologies’ Search Engine Opens Up New Ways To Query Your Data.”

SeMI Technologies invented Weaviate, a vector search engine that uses a unique AI-first database with machine learning models outputting vectors, aka embeddings. The company wishes to commoditize the technology and has an open source business model. Bob van Luijt is the CEO and co-founder of SeMI. He wants his vector search engine to remain open source so it can help people and businesses that truly need it. SeMI did not create the models used in Weaviate; instead, the company delivers the power and systems recommendations.

SeMI Technologies has had over one hundred use cases, including startups that are powered by vector search and use Weaviate to deliver results. SeMI was not actively seeking investors when it received funding in 2020:

“SeMI raised a $1.2 million seed in August 2020 from Zetta Venture Partners and ING Ventures and since then has been on the radar of venture capital companies. Since then, its software has been downloaded almost 750,000 times, growth of about 30% per month. Van Luijt didn’t give specifics on the company’s growth metrics, but did say the number of downloads can correlate to sales of enterprise licenses and managed services. In addition, the spike in usage and understanding of the added value of Weaviate has caused all growth metrics to go up, and the company to exhaust its seed funding.”

The company has received more funding in a Series A round that ended with $16 million. The CEO will use the money to hire more employees in the US and Europe, expand its open source community, focus on go-to-market and products centered on the open source core, and invest in research where machine learning overlaps with computer science.

Whitney Grace, May 2, 2022

Deepset: Following the Trail of DR LINK, Fast Search and Transfer, and Other Intrepid Enterprise Search Vendors

April 29, 2022

I noted a Yahooooo! news story called “Deepset Raises $14M to Help Companies Build NLP Apps.” To me the headline could mean:

Customization is our business and services revenue our monetization model

Precursor enterprise search vendors tried to get gullible prospects to believe a company could install software and employees could locate the information needed to answer a business question. STAIRS III, Personal Library Software / SMART, and the outfit with forward truncation (InQuire) among others were there to deliver.

Then reality happened. Autonomy and Verity upped the ante with assorted claims. The Golden Age of Enterprise Search was poking its rosy fingers through the cloud of darkness related to finding an answer.

Quite a ride: The buzzwords sawed through the doubt and outfits like Delphis, Entopia, Inference, and many others embraced variations on the smart software theme. Excursions into asking the system a question to get an answer gained steam. Remember the hand crafted AskJeeves or the mind boggling DR LINK, that is, document retrieval via linguistic knowledge?

Today there are many choices for enterprise search: Free Elastic, Algolia, Funnelback now the delightfully named Squiz, Fabasoft Mindbreeze, and, of course, many, many more.

Now we have Deepset, “the startup behind the open source NLP framework Haystack,” not to be confused with Matt Dunie’s memorable “haystack with needles” metaphor, the intelware company Haystack, or a basic pile of dead grass.

The article states:

CEO Milos Rusic co-founded Deepset with Malte Pietsch and Timo Möller in 2018. Pietsch and Möller — who have data science backgrounds — came from Plista, an adtech startup, where they worked on products including an AI-powered ad creation tool. Haystack lets developers build pipelines for NLP use cases. Originally created for search applications, the framework can power engines that answer specific questions (e.g., “Why are startups moving to Berlin?”) or sift through documents. Haystack can also field “knowledge-based” searches that look for granular information on websites with a lot of data or internal wikis.

What strikes me? Three things:

- This is essentially a consulting and services approach

- Enterprise becomes apps for a situation, department, or specific need

- The buzzwords are interesting: NLP, semantic search, BERT, and humor.

Humor is a necessary quality when trying to make decades old technology work for distributed, heterogeneous data, email on a sales professional’s mobile, videos, audio recordings, images, engineering diagrams along with the nifty datasets for the gizmos in the illustration, etc.

A question: Is $14 million enough?

Crickets.

Stephen E Arnold, April 29, 2022

Enterprise Search Vendor Buzzword Bonanza!

April 25, 2022

Enterprise search vendors are similar to those two Red Bull-sponsored wizards who wanted to change aircraft—whilst in flight. How did that work out? The pilots survived. That aircraft? Yeah, Liberty, Liberty Mutual as the YouTube ads intone.

Enterprise search vendors want to become something different. Typical repositionings include customer support which entails typing in a word and scanning for matches and business intelligence which often means indexing content, matching words and phrases on a list, and generating alerts. There are other variations which include analyzing content and creating a report which tallies text messages from outraged customers.

Let’s check out reality. “Enterprise search” means finding information. Words and phrases are helpful. Users want these systems to know what is needed and then output it without asking the user to do anything. The challenge becomes assigning a jazzy marketing hook to make enterprise search into something more vital, more compelling, and more zippy.

Navigate to “What Should We Remember?” Bonanza. The diagram is a remarkable array of categories and concepts tailor-made for search marketers. Here’s an example of some of the zingy concepts:

- Zero-risk bias

- Social comparison

- Fundamental attribution

- Barnum effect — Who? The circus person?

Now mix in natural language processing, semantic analysis, entity extraction, artificial intelligence, and — my fave — predictive analytics.

How quickly will outfits in the enterprise search sector gravitate to these more impactful notions? Desperation is a motivating factor. Maybe weeks or months?

Stephen E Arnold, April 25, 2022

Enterprise Search Vendors: Sure, Some Are Missing But Does Anyone Know or Care?

April 20, 2022

I came across a site called Software Suggest and its article “Coveo Enterprise Search Alternatives.” Wow. What’s a good word for bad info?

The system generated 29 vendors in addition to Coveo. The options were not in alphabetical order or any pattern I could discern. What outfits are on the list? Here are the enterprise search vendors for February 2022, the most recent incarnation of this list. My comments are included in parentheses for each system. By the way, an alternative is picking from two choices. This is more correctly labeled “options.” Just another indication of hippy dippy information about information retrieval.

AddSearch (Web site search which is not enterprise search)

Algolia (a publicly traded search company hiring to reinvent enterprise search just as Fast Search & Transfer did more than a decade ago)

Bonsai.io (another Elasticsearch repackager)

Coveo (no info, just a plea for comments)

C Searcher (from HNsoft in Portugal. Desktop search last updated in 2018 according to the firm’s Web site)

CTX Search (the expired certificate does not bode well)

Datafari (maybe open source? chat service has no action since May 2021)

Expertrec Search Engine (an eCommerce solution, not an enterprise search system)

Funnelback (the name is now Squiz. The technology is Australian)

Galaktic (a Web site search solution from Taglr, an eCommerce search service)

IBM Watson (yikes)

Inbenta (A Catalan outfit which shapes its message to suit the purchasing climate)

Indica Enterprise Search (based in the Netherlands but the name points to a cannabis plant)

Intrasearch (open source search repackaged with some spicy AI and other buzzwords)

Lateral (the German company with an office in Tasmania offers an interface similar to that of Babel Street and Geospark Analytics for an organization’s content)

Lookeen (desktop search for “all your data”. All?)

OnBase ECM (this is a tricky one. ISYS Search sold to Lexmark. Lexmark sold to Hyland. Hyland appears to be the proud possessor of ISYS Search and has grafted it to an enterprise content management system)

OpenText (the proud owner of many search systems, including Tuxedo and everyone’s fave BRS Search)

Relevancy Platform (three years ago, Searchspring Relevancy Platform was acquired by Scaleworks which looks like a financial outfit)

Sajari (smart site search for eCommerce)

SearchBox Search (Elasticsearch from the cloud)

Searchify (a replacement for Index Tank. who?)

SearchUnify (looks like a smart customer support system, a pitch used by Coveo and others in the sector)

Site Search 360 (not an enterprise search solution in my opinion)

SLI Systems (eCommerce search, not enterprise search, but I could be off base here)

Team Search (TransVault searches Azure Tenancy set ups)

Wescale (mobile eCommerce search)

Wizzy (the name is almost as interesting as the original Purple Yogi system and another eCommerce search system)

Wuha (not as good a name as Purple Yogi. A French NLP search outfit)

X1 Search (from Idea Labs, X1 is into eDiscovery and search)

This is quite an incomplete and inconsistent list from Software Suggest. It is obvious that there is considerable confusion about the meaning of “enterprise search.” I thought I provided a useful definition in my book “The Landscape of Enterprise Search,” published by Panda Press a decade ago. The book, like me, is not too popular or well known. As a result, the blundering around in eCommerce search, Web site search, application specific search, and enterprise search is painful. Who cares? No one at Software Suggest I posit.

My hunch is that this is content marketing for Coveo. Just a guess, however.

Stephen E Arnold, April xx, 2022

Enterprise Search: What Did Shakespeare Allegedly Write?

November 15, 2021

The statement, according to my ratty copy of Shakespeare’s plays edited by one of the professors who tried to get me out of the university’s computer “room” in 1964, presents the Bard’s original, super authentic words this way:

The play is Hamlet. The queen, looking queenly, says to the fellow Thespian: “The lady doth protest too much, methinks.”

Ironic? You decide. I just wanted to regurgitate what the professor wanted. Irony played no part in getting an A and getting back to the IBM mainframe and the beloved punch card machine.

I thought about “protesting too much” after I read “Making a Business Case for Enterprise Search.”

I noted this statement:

In effect you have to develop a Fourth Dimension costing model to account for the full range of potential costs.

Okay, the 4th dimension. Experts (real and self anointed) have been yammering about enterprise search for decades.

Why does an organization snap at the marketing line deployed by vendors of search and retrieval technology? The answer is obvious, at least to me. Someone believes that finding information is needed for some organizational instrumentality. Examples include finding an email so it can be deleted before litigation begins. Another is to locate the PowerPoint which contains the price the now terminated sales professional presented to close a very big contract. How about pinpointing who in the organization had access to the chemical composition of a new anti viral? Another? A shipment went walkabout. Some person making minimum wage has to locate products to be able to send out another shipment.

The laughable part of “enterprise search” is that there is no single system, including the craziness pitched by Amazon, Microsoft, Google, start ups with AI centric systems, or small outfits which have been making minimal revenue headway for a very long time from a small city in Austria or a suburb of the delightful metropolis of Moscow.

The cost of failing to find information cannot be reduced to the made up data about how long a person spends hunting for information. I believe a mid tier consulting outfit and a librarian cooked up this info-confection. Nor is any accountant going to be able to back out the “cost” of search in a cloud database service provided by one of the regulators’ favorite monopolies. No system manager I know keeps track of what time and effort goes into making it possible for a 23 year old art history major to locate the specific technical innovation in an autonomous drone. Information of this type requires features not included in Everything, X1, Solr, or the exciting Amazon knock off of Elastic’s follow on to Compass.

Enterprise information retrieval has been a thing for about 50 years. Where has the industry gone? Well, one search executive did a year in prison. Another is fighting extradition for financial fancy dancing. Dozens have just failed. Remember Groxis? And many others have gone to the search-doesn’t-work section of the dead software cemetery.

I find it interesting that people have to explain search in the midst of smart software, blockchain, and a shift to containerized development.

Oh, well. There’s the Sinequa calculator thing.

Stephen E Arnold, November 15, 2021

Elastic CEO on New Products and AWS Battle

November 10, 2021

Here is an interesting piece from InfoWorld about a company we have been following for years. Elastic is the primary developer behind the open source Elasticsearch and made its money vending managed services for the platform. Lately, though, the company has been expanding into new markets—application performance management (APM), observability, and security information event management (SIEM). The company’s CEO discusses this expansion as well as its struggle with Amazon over the use of Elasticsearch in, “Elastic’s Shay Banon: Why We Went Beyond our Search Roots—and Stood Up to ‘Bully’ AWS.”

First, reporter Scott Carey asks about the move into security. Banon admits Elastic was late to the SIEM game, but that timing gave the CEO a unique perspective. He makes this observation:

“When I got into security, I really didn’t understand why the market is so fragmented. I think a big part of it is top-down selling. It’s not like CISOs [Chief Information Security Officers] aren’t smart, but they’re not practitioners, so you can go in and more easily communicate to them that they need certain protection. I could see that there was tension between the security team and developers, operations, devops teams. Security didn’t trust them, and it was the same story as before with operators and developers. This is where I think our biggest opportunity is in the security market. To be one of the companies that brings the trends that caused dev and ops to come together and bring it to security.”

See the write-up for more of Banon’s observations on security, APM, and observability. As for the licensing battle with Amazon, that began in 2015 when AWS implemented its own managed Elasticsearch service without collaborating with Elastic. Carey notes both MongoDB and Cloudflare had similar issues with the mammoth cloud-services vendor. Elastic ultimately took a controversial step to deal with the problem. We learn:

“In a January blog post, Banon outlined how the company was changing its license for Elasticsearch from Apache 2.0 to a dual Elastic License and Server Side Public License (SSPL), a change ‘aimed at preventing companies from taking our Elasticsearch and Kibana products and providing them directly as a service without collaborating with us.’ AWS has since renamed its now-forked service as OpenSearch.”

Banon states he did not really want to change the license but felt he had to take a stand against AWS, which he compared to a schoolyard bully. The CEO has some sympathy for those who feel the decision was unfair to developers outside Elastic who had contributed to Elasticsearch. However, he notes, his company did develop 99% of the software. See the article for more of his reasoning, his perspective on Elasticsearch’s “very open and very simple” new license, and where he sees the company going in the future.

Cynthia Murrell, November 10, 2021