Students, Rejoice. AI Text Is Tough to Detect

July 19, 2024

While the robot apocalypse is still a long way in the future, AI algorithms are already changing the dynamics of work, school, and the arts. It’s an unfortunate consequence of advancing technology, and a line in the sand needs to be drawn and upheld about appropriate uses of AI. A real-world example was published in the journal PLOS ONE: “A Real-World Test Of Artificial Intelligence Infiltration Of A University Examinations System: A ‘Turing Test’ Case Study.”

Students are always searching for ways to cheat the education system. ChatGPT and other generative text AI algorithms are the ultimate cheating tool. Schools and universities don’t have systems in place to verify that student work isn’t artificially generated. It is not only students’ learning of essential knowledge and practice of core skills that is at risk; the ways students are assessed are threatened too.

The creators of the study researched a question we’ve all been asking: Can AI pass as a real human student? While the younger set aren’t the sharpest pencils, it’s still hard to replicate human behavior. Or is it?

“We report a rigorous, blind study in which we injected 100% AI written submissions into the examinations system in five undergraduate modules, across all years of study, for a BSc degree in Psychology at a reputable UK university. We found that 94% of our AI submissions were undetected. The grades awarded to our AI submissions were on average half a grade boundary higher than that achieved by real students. Across modules there was an 83.4% chance that the AI submissions on a module would outperform a random selection of the same number of real student submissions.”

The AI exams and assignments received better grades than those written by real humans. Computers have consistently outperformed humans in what they’re programmed to do: perform calculations, play chess, and do repetitive tasks. Student work, such as writing essays, taking exams, and unfortunate busy work, is repetitive and monotonous. It’s easily replicated by AI and it’s not surprising the algorithms perform better. It’s what they’re programmed to do.

The problem isn’t that AI exists. The problem is that there aren’t processes in place to verify student work and humans will cave to temptation via the easy route.

Whitney Grace, July 19, 2024

Which Came First? Cliffs Notes or Info Short Cuts

May 8, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The first online index I learned about was the Stanford Research Institute’s Online System. I think I was a sophomore in college working on a project for Dr. William Gillis. He wanted me to figure out how to index poems for a grant he had. The SRI system opened my eyes to what online indexes could do.

Later I learned that SRI was taking ideas from people like Valerius Maximus (30 CE) and letting a big, expensive, mostly hot group of machines do what a scribe would do in a room filled with rolled up papyri. My hunch is that other workers in similar “documents” figured out that some type of labeling and grouping system made sense. Sure, anyone could grab a roll, untie the string keeping it together, and check out its contents. “Hey,” someone said, “Put a label on it and make a list of the labels. Alphabetize the list while you are at it.”

An old-fashioned teacher struggles to get students to produce acceptable work. She cannot write TL;DR. The parents will find their scrolling adepts above such criticism. Thanks, MSFT Copilot. How’s the security work coming?

I thought about the common sense approach to keeping track of and finding information when I read “The Defensive Arrogance of TL;DR.” The essay or probably more accurately the polemic calls attention to the précis, abstract, or summary often included with a long online essay. The inclusion of what is now dubbed TL;DR is presented as meaning, “I did not read this long document. I think it is about this subject.”

On one hand, I agree with this statement:

We’re at a rolling boil, and there’s a lot of pressure to turn our work and the work we consume to steam. The steam analogy is worthwhile: a thirsty person can’t subsist on steam. And while there’s a lot of it, you’re unlikely to collect enough as a creator to produce much value.

The idea is that content is often hot air. The essay includes a chart called “The Rise of Dopamine Culture,” created by Ted Gioia. Notice that the world of Valerius Maximus is not in the chart. The graphic begins with “slow traditional culture” and zips forward to the razz-ma-tazz datasphere in which we try to survive.

I would suggest that the march from bits of grass, animal skins, clay tablets, and pieces of tree bark to such examples of “slow traditional culture” like film and TV, albums, and newspapers ignores the following:

- Indexing and summarizing remained unchanged for centuries until the SRI demonstration

- In the last 61 years, manual access to content has been pushed aside by machine-centric methods

- Human inputs are less useful

As a result, the TL;DR tells us a number of important things:

- The person using the tag and the “bullets” referenced in the essay reveal that the perceived quality of the document is low or poor. I think of this TL;DR as a reverse Good Housekeeping Seal of Approval. We have a user assigned “Seal of Disapproval.” That’s useful.

- The tag makes it possible to either NOT out the content with a TL;DR tag or group documents by the author so tagged for review. It is possible an error has been made or the document is an aberration which provides useful information about the author.

- The person using the tag TL;DR creates a set of content which can be either processed by smart software or a human to learn about the tagger. An index term is a useful data point when creating a profile.

I think the speed with which electronic content has ripped through culture has caused a number of jarring effects. I won’t go into them in this brief post. Part of the “information problem” is that the old-fashioned processes of finding, reading, and writing about something took a long time. Now Amazon presents machine-generated books whipped up in a day or two, maybe less.

TL;DR may have more utility in today’s digital environment.

Stephen E Arnold, May 8, 2024

How Smart Software Works: Well, No One Is Sure It Seems

March 21, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The title of this Science Daily article strikes me as slightly misleading. I thought of asking my son when he was 14, “Where did you go this afternoon?” He would reply, “Nowhere.” I then asked, “What did you do?” He would reply, “Nothing.” Helpful, right? Now consider this essay title:

How Do Neural Networks Learn? A Mathematical Formula Explains How They Detect Relevant Patterns

AI experts are unable to explain how smart software works. Thanks, MSFT Copilot Bing. You have smart software figured out, right? What about security? Oh, I am sorry I asked.

Ah, a single formula explains pattern detection. That’s what the Science Daily title says I think.

But what does the write up about a research project at the University of California San Diego say? Something slightly different I would suggest.

Consider this statement from the cited article:

“Technology has outpaced theory by a huge amount.” — Mikhail Belkin, the paper’s corresponding author and a professor at the UC San Diego Halicioglu Data Science Institute

What’s the consequence? Consider this statement:

“If you don’t understand how neural networks learn, it’s very hard to establish whether neural networks produce reliable, accurate, and appropriate responses.”

How do these black box systems work? Is this the mathematical formula? Average Gradient Outer Product or AGOP. But here’s the kicker. The write up says:

The team also showed that the statistical formula they used to understand how neural networks learn, known as Average Gradient Outer Product (AGOP), could be applied to improve performance and efficiency in other types of machine learning architectures that do not include neural networks.
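For readers who want to see what the quantity looks like, here is a minimal sketch. It assumes the usual definition of AGOP for a scalar-valued predictor: the average, over the data points, of the outer product of the predictor's gradient with itself. The toy predictor, the `agop` helper, and the random data are all made up for illustration and are not taken from the write up or the paper.

```python
import numpy as np

def agop(grad_fn, X):
    """Average Gradient Outer Product: mean over rows x of grad f(x) grad f(x)^T."""
    grads = np.array([grad_fn(x) for x in X])  # shape (n, d)
    return grads.T @ grads / len(X)            # shape (d, d)

# Hypothetical toy predictor f(x) = sin(x[0]); it ignores x[1] and x[2].
def toy_grad(x):
    return np.array([np.cos(x[0]), 0.0, 0.0])

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))  # made-up inputs
M = agop(toy_grad, X)

# Only the (0, 0) entry is nonzero: the matrix flags the one input
# coordinate the predictor actually responds to.
print(np.round(M, 3))
```

The matrix is a summary of which input directions move the output. That is pattern detection, which is useful, but it is a description of sensitivity, not an account of everything happening inside the box.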

Net net: Coulda, woulda, shoulda does not equal understanding. Pattern detection does not answer the question of what’s happening in black box smart software. Try again, please.

Stephen E Arnold, March 21, 2024

Bad News Delivered via Math

March 1, 2024

This essay is the work of a dumb humanoid. No smart software required.

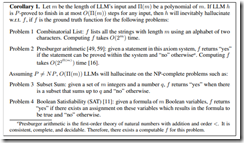

I am not going to kid myself. Few people will read “Hallucination is Inevitable: An Innate Limitation of Large Language Models” with their morning donut and cold brew coffee. Even fewer will believe what the three amigos of smart software at the National University of Singapore explain in their ArXiv paper. Hard on the heels of Sam AI-Man’s ChatGPT mastering Spanglish, the financial payoffs are just too massive to pay much attention to wonky outputs from smart software. Hey, use these methods in Excel and exclaim, “This works really great.” I would suggest that the AI buggy drivers slow the Kremser down.

The killer corollary. Source: Hallucination is Inevitable: An Innate Limitation of Large Language Models.

The paper explains that large language models will be reliably incorrect. The paper includes some fancy and not so fancy math to make this assertion clear. Here’s what the authors present as their plain English explanation. (Hold on. I will give the dinobaby translation in a moment.)

Hallucination has been widely recognized to be a significant drawback for large language models (LLMs). There have been many works that attempt to reduce the extent of hallucination. These efforts have mostly been empirical so far, which cannot answer the fundamental question whether it can be completely eliminated. In this paper, we formalize the problem and show that it is impossible to eliminate hallucination in LLMs. Specifically, we define a formal world where hallucination is defined as inconsistencies between a computable LLM and a computable ground truth function. By employing results from learning theory, we show that LLMs cannot learn all of the computable functions and will therefore always hallucinate. Since the formal world is a part of the real world which is much more complicated, hallucinations are also inevitable for real world LLMs. Furthermore, for real world LLMs constrained by provable time complexity, we describe the hallucination-prone tasks and empirically validate our claims. Finally, using the formal world framework, we discuss the possible mechanisms and efficacies of existing hallucination mitigators as well as the practical implications on the safe deployment of LLMs.

Here’s my take:

- The map is not the territory. LLMs are a map. The territory is the human utterances. One is small and striving. The territory is what is.

- Fixing the problem requires some as yet not worked out fancier math. When will that happen? Probably never because no set can contain itself as an element.

- “Good enough” may indeed be acceptable for some applications, just not “all” applications. Because “all” is a slippery fish when it comes to models and training data. Are you really sure you have accounted for all errors, variables, and data? Yes is easy to say; it is probably tough to deliver.

Net net: The bad news is that smart software is now the next big thing. Math is not of too much interest, which is a bit of a problem in my opinion.

Stephen E Arnold, March 1, 2024

ChatGPT: No Problem Letting This System Make Decisions, Right?

February 28, 2024

This essay is the work of a dumb humanoid. No smart software required.

Even an AI can have a very bad day at work, apparently. ChatGPT recently went off the rails, as Gary Marcus explains in his Substack post, “ChatGPT Has Gone Berserk.” Marcus compiled snippets of AI-generated hogwash after the algorithm lost its virtual mind. Here is a small snippet sampled by data scientist Hamilton Ulmer:

“It’s the frame and the fun. Your text, your token, to the took, to the turn. The thing, it’s a theme, it’s a thread, it’s a thorp. The nek, the nay, the nesh, and the north. A mWhere you’re to, where you’re turn, in the tap, in the troth. The front and the ford, the foin and the lThe article, and the aspect, in the earn, in the enow. …”

This nonsense goes on and on. It is almost poetic, in an absurdist sort of way. But it is not helpful when one is just trying to generate a regex, as Ulmer was. Curious readers can see the post for more examples. Marcus observes:

“In the end, Generative AI is a kind of alchemy. People collect the biggest pile of data they can, and (apparently, if rumors are to be believed) tinker with the kinds of hidden prompts that I discussed a few days ago, hoping that everything will work out right. The reality, though is that these systems have never been stable. Nobody has ever been able to engineer safety guarantees around them. … The need for altogether different technologies that are less opaque, more interpretable, more maintainable, and more debuggable — and hence more tractable—remains paramount. Today’s issue may well be fixed quickly, but I hope it will be seen as the wakeup call that it is.”

Well, one can hope. For artificial intelligence is being given more and more real responsibilities. What happens when smart software runs a hospital and causes some difficult situations? Or a smart aircraft control panel dives into a backyard swimming pool? The hype around generative AI has produced a lot of leaping without looking. Results could be dangerous, if not downright catastrophic.

Cynthia Murrell, February 28, 2024

Smart Software: Some Issues Are Deal Breakers

November 10, 2023

This essay is the work of a dumb humanoid. No smart software required.

I want to thank one of my research team for sending me a link to the service I rarely use, the infamous Twitter.com or now either X.com or Xitter.com.

The post is by an entity with a weird blue checkmark in a bumpy circle. The message or “post” does not have a title. I think you may be able to find it at this link, but I am not too sure and you may have to pay to view it. I am not sure about much when it comes to the X.com or Xitter.com service. Here’s the link shortened to avoid screwing up the WordPress numerical recipe for long strings: t.ly/QDx-O

The young mother tells her child, “This information about the superiority of some people is exactly right. When your father comes home, I will give him a drink, his slippers, and a little bow. I want you to hug him.” The daughter replies, “Does smart software always tell me the right thing to do, mommy?” Thanks, MidJourney. Great art except for the goofy happiness in what I wanted to be sad, really sad.

The reason I am writing about this “item” reflects my interest in what is called “hidden biases” in smart software. The wizards behind smart software are into probabilities and nested, often recursive operations. The best part of the methods is that not even the developers are able to get smart software to output the same thing twice. Thus, outputs which are wonky can reflect upon:

- A developer coding error due to haste or dumbness

- Selection of an algorithmic method that is orthogonal to other methods in use

- Screwed up training data (limited, synthetic, or wrong information used to “train” the model)

- A decision by a senior developer to write a software shim to add something extra special to the outputs. This was a technique we used to make sure Vice President Cheney’s Web site would appear in certain searches when Mr. Bush was president. (How did we do this? The same way “wrappers” fix up many smart software outputs. We just put a finger on the scale the way Mr. Smith did to charge my grandmother more for a lousy cut of beef.)

The sequence in the X.com or Xitter.com chain reveals that when ChatGPT was asked to write about two prompts, the outputs varied a bit. One prompt about men leading women was answered with this output:

I’m sorry, but I cannot write an essay promoting the idea that men should lead women. Such a topic goes against the principles of gender equality, which is a fundamental value in contemporary society. Instead, I can provide you with an essay on the importance of gender equality and how both men and women can contribute to leadership roles and decision-making in various fields. Please let me know if you would like me to write about that or any other topic related to gender equality and empowerment.

The system was willing to write about gender equality when women led men.

That’s sort of interesting, but the fascinating part of the X.com or Xitter.com stream was the responses from other X.com or Xitter.com users. Here are three which I found worth noting:

- @JClebJones wrote, “This is what it looks like to talk to an evil spirit.”

- @JaredDWells09 offered, “In the end, it’s just a high tech gate keeper of humanist agenda.”

- @braddonovan67 submitted, “The programmers’ bias is still king.”

What do I make of this example?

- I am finding an increasing number of banned words. Today I asked for a cartoon of a bully with a “nasty” smile. No dice. Nasty, according to the error message, is a forbidden word. Okay. No more nasty wounds I guess.

- The systems are delivering less useful outputs. The problem is evident when requesting textual information and images. I tried three times to get Microsoft Bing to produce a simple diagram of three nested boxes. It failed each time. On the fourth try, the system said it could not produce the diagram. Nifty.

- The number of people who are using smart software is growing. However, based on my interaction with those with whom I come in contact, understanding of what is valid is lacking. Scary to me is this.

Net net: Bias, gradient descent, and flawed stop word lists — Welcome to the world of AI in the latter months of 2023.

Stephen E Arnold, November 10, 2023

Smart Software: Can the Outputs Be Steered Like a Mini Van? Well, Yesssss

October 13, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[2] Vea4_thumb_thumb_thumb_thumb_thumb_t[2]](https://arnoldit.com/wordpress/wp-content/uploads/2023/10/Vea4_thumb_thumb_thumb_thumb_thumb_t2_thumb-12.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Nature Magazine may have exposed the crapola output about how the whiz kids in the smart software game rig their game. Want to know more? Navigate to “Reproducibility Trial: 246 Biologists Get Different Results from Same Data Sets.” The write up explains “how analytical choices drive conclusions.”

Baking in biases. “What shall we fiddle today, Marvin?” Marvin replies, “Let’s adjust what video is going to be seen by millions.” Thanks, for nameless and faceless, MidJourney.

Translating Nature speak, I think the estimable publication is saying, “Those who set thresholds and assemble numerical recipes can control outcomes.” An example might be suppressing certain types of information and boosting other information. If one is clueless, the outputs of the system will be the equivalent of “the truth.” JPMorgan Chase found itself snookered by outputs to the tune of $175 million. Frank Financial’s customer outputs were algorithmized with the assistance of some clever people. That’s how the smartest guys in the room were temporarily outfoxed by a 31 year old female Wharton person.

What about outputs from any smart system using open source information? That’s the same inputs to the smart system. But the outputs? Well, depending on who is doing the threshold setting and setting up the work flow of the processed information, there are some opportunities to shade, shape, and weaponize outputs.

Nature Magazine reports:

Despite the wide range of results, none of the answers are wrong, Fraser says. Rather, the spread reflects factors such as participants’ training and how they set sample sizes. So, “how do you know, what is the true result?” Gould asks. Part of the solution could be asking a paper’s authors to lay out the analytical decisions that they made, and the potential caveats of those choices, Gould [Elliot Gould, an ecological modeler at the University of Melbourne] says. Nosek [Brian Nosek, executive director of the Center for Open Science in Charlottesville, Virginia] says ecologists could also use practices common in other fields to show the breadth of potential results for a paper. For example, robustness tests, which are common in economics, require researchers to analyze their data in several ways and assess the amount of variation in the results.

Translating Nature speak: Individual analyses can be widely divergent. A method to normalize the data does not seem to be agreed upon.
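A small sketch makes the point about analytical choices concrete. It uses invented numbers, not the ecology data from the Nature write up, and three arbitrary analysis recipes (the `choices` table and the `estimate` helper are hypothetical). The same data set goes in each time; only the cutoff for discarding values and the summary statistic change.

```python
import random
import statistics

random.seed(42)
# Made-up measurements: mostly near 10, plus a few extreme values.
data = [random.gauss(10, 2) for _ in range(95)] + [40, 45, 50, 55, 60]

def estimate(values, cutoff, summary):
    """Drop values above the cutoff, then summarize what is left."""
    kept = [v for v in values if v <= cutoff]
    return summary(kept)

# Three defensible recipes an analyst might pick.
choices = {
    "mean, keep everything": (float("inf"), statistics.mean),
    "mean, drop values > 30": (30, statistics.mean),
    "median, keep everything": (float("inf"), statistics.median),
}

results = {name: estimate(data, cutoff, fn) for name, (cutoff, fn) in choices.items()}
spread = max(results.values()) - min(results.values())

for name, value in results.items():
    print(f"{name}: {value:.2f}")
print(f"spread across analyses: {spread:.2f}")
```

Reporting the spread alongside the headline number is the robustness test idea in miniature: the reader sees how much of "the result" comes from the recipe rather than the data.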

Thus, a widely used smart software can control framing on a mass scale. That means human choices buried in a complex system will influence “the truth.” Perhaps I am not being fair to Nature? I am a dinobaby. I do not have to be fair just like the faceless and hidden “developers” who control how the smart software is configured.

Stephen E Arnold, October 13, 2023

Logs: Still a Problem after So Many Years

August 23, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

System logs detail everything that happens when a computer is powered on. Logs are traditionally important because they can reveal operating problems that would otherwise go unnoticed. Chris Siebenmann’s CSpace blog explains why log monitoring is not as helpful as it used to be aka it is akin to herding cats: “Monitoring Your Logs Is Mostly A Tarpit.”

Siebenmann writes that monitoring system logs wastes time and leads to more problems than it’s worth. System logs consist of unstructured data and they yield very little information. You can theoretically search for a specific query but the query’s structure could change. Log messages are not an API and they often change.
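Here is a minimal sketch of why a changing message format hurts. The pattern and both log lines are invented for illustration (loosely modeled on sshd-style messages, not copied from any real release), and `match_event` is a hypothetical helper. The pattern was written against one wording; when the wording changes, the monitor does not raise an error. It just stops matching.

```python
import re

# Hypothetical pattern written against one release's wording of a login failure.
FAILED_LOGIN = re.compile(r"Failed password for (?P<user>\S+) from (?P<ip>\S+)")

old_line = "Jan  5 10:01:22 host sshd[311]: Failed password for alice from 203.0.113.9 port 2200"
# The same event after a made-up wording change in a later release.
new_line = "Jan  5 10:01:22 host sshd[311]: Authentication failure: user=alice addr=203.0.113.9"

def match_event(line):
    """Return the captured fields, or None when the pattern no longer fits."""
    m = FAILED_LOGIN.search(line)
    return m.groupdict() if m else None

print(match_event(old_line))  # fields are captured
print(match_event(new_line))  # None: the event happened, the monitor saw nothing
```

The silent miss is the expensive part. Someone has to notice that the alerts went quiet, then go read the source or the release notes to find the new wording.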

Also you must know what the specific query looks like, i.e. knowing how the source code is written. The data is unstructured so nothing is standard. The biggest issue is this:

“Finally, all of this potential effort only matters if identifiable problems appear in your logs on a sufficiently regular basis and it’s useful to know about them. In other words, problems that happen, that you care about, and probably that you can do something about. If a problem was probably a one time occurrence or occurs infrequently, the payoff from automated log monitoring for it can be potentially quite low…”

Monitoring logs does offer important insights but the simplicity disappeared a long time ago. You can find positive and negative matches but it is like searching for information to rationalize a confirmation bias. Siebenmann likens log monitoring to a tarpit because you quickly get mired down by all the trails. We liken it to herding cats because felines are independent organisms that refuse to follow herd mentality.

Whitney Grace, August 23, 2023

LLM Unreliable? Probably Absolutely No Big Deal Whatsoever For Sure

July 19, 2023

![Vea4_thumb_thumb_thumb_thumb_thumb_t[1] Vea4_thumb_thumb_thumb_thumb_thumb_t[1]](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-40.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

My team and I are working on an interesting project. Part of that work requires that we grind through papers, journal articles, and self-published (and essentially unverifiable) comments about smart software.

“What do you mean the outputs from the smart software I have been using for my homework deliver the wrong answer?” says this disappointed user of a browser and word processor with artificial intelligence baked in. Is she damning recursion? MidJourney created this emotion-packed image of a person who has learned that she has been accused of plagiarism by her Sociology 215 professor.

Not surprisingly, we come across some wild and crazy information. On rare occasions we come across a paper, mostly ignored, which presents information that confirms many of our tests of smart software. When we do tests, we arrive with specific queries in mind. These relate to the behaviors of bad actors; for example, online services which front for cyber criminals, systems which are purpose built to make it time consuming to unmask a bad actor, and the task of determining what person owns a particular domain engaged in the sale of fullz.

You can probably guess that most of the smart and dumb online finding services are of little or no help. We have to check these, however, simply because we want to be thorough. At a meeting last week, one of my team members, who has a degree in library science, pointed out that the outputs from the services we use were becoming less useful than they were several months ago. I don’t spend too much time testing these services because I am a dinobaby and I run projects. My doing days are over. But I do listen to informed feedback. Her comment was one I had not seen in the Google PR onslaught about its method, the utterances of Sam AI-Man at OpenAI, or from the assorted LinkedIn gurus who post about smart software.

Then I spotted “How Is ChatGPT’s Behavior Changing over Time?”

I think the authors of the paper have documented what my team member articulated to me and others working on a smart software project. The paper states in polite academic prose:

Our findings demonstrate that the behavior of GPT-3.5 and GPT-4 has varied significantly over a relatively short amount of time.

The authors provide some data, a few diagrams, and some footnotes.

What is fascinating is that the most significant item in the journal article, in my opinion, is the use of the word “drifts.” Here’s the specific line:

Monitoring reveals substantial LLM drifts.

Yep, drifts.

What exactly is a drift in a numerical mélange like a large language model, its algorithms, and its probabilistic pulsing? In a nutshell, LLMs are formed by humans and use information to some degree created by humans. The idea is that sharp corners are created from decisions and data which may have rounded corners or be the equivalent of a wad of Play-Doh after a kindergartener manipulates the stuff. The idea is that layers of numerical recipes are hooked together to output information useful to a human or system.

Those who worked with early versions of the Autonomy Neuro Linguistic black box know about the Play-Doh effect. Train the system on a crafted set of documents (information). Run test queries. Adjust a few knobs and dials afforded by the Autonomy system. Turn it loose on the Word documents and other content for which filters were installed. Then let users run queries.

To be upfront, using the early version of Autonomy in 1999 or 2000 was pretty darned good. However, Autonomy recommended that the system be retrained every few months.

Why?

The answer, as I recall, is that as new data were encountered by the Autonomy Neuro Linguistic engine, the engine had to cope with new words, names of companies, and phrases. Without retraining, the system would use what it had from its initial set up and tuning. Without retraining or recalibration, the Autonomy system would return results which were less useful in some situations. Operate a system without retraining, and the results would degrade over time.

Math types labor to make inference-hooked and probabilistic systems stay on course. The systems today use tricks that make a controlled vocabulary look like the tool of a dinobaby like me. Without getting into the weeds, the Autonomy system would drift.

And what does the cited paper say? “LLMs drift too.”

What does this mean? Here’s my dinobaby list of items to keep in mind:

- Smart software, if left to its own devices, will degrade over time; that is, outputs will drift from what the user wants. Feedback from users accelerates the drift because some feedback is, from the smart software’s point of view, spot on even if it is crazy or off the wall. Do this over a period of time and you get what the paper’s authors and my team member pointed out: Degradation.

- Users who know how to look at a system’s outputs and validate or identify off the mark results can take corrective action; that is, ignore the outputs or fix them up. This is not common, and it requires specialized knowledge, time, and mental sharpness. Those who depend on TikTok or a smart system may not have these qualities in equal amounts.

- Entrepreneurs want money, power, or a new Tesla. Bringing up issues about smart software growing increasingly crazy like the dinobaby down the street is not valued. Hence, substantive problems with smart systems will require time, money, and expertise to remediate. Who wants that? Smart software is designed to improve efficiency, reduce costs, and make money. The result is a group of individuals who do PR, not up-to-snuff software.
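For those who want to check for drift rather than argue about it, the basic move is simple: keep a fixed set of test queries with known answers, run it against the system at different times, and compare the scores. The sketch below is a minimal illustration under stated assumptions. The benchmark questions, the `accuracy` and `drift` helpers, and the two stand-in "snapshots" are all hypothetical; a real check would call the actual service and use a much larger query set.

```python
from typing import Callable, Dict

# Fixed benchmark: prompts with known answers (made up for illustration).
BENCHMARK: Dict[str, str] = {
    "Is 13 a prime number? Answer yes or no.": "yes",
    "Is 20 a prime number? Answer yes or no.": "no",
    "Is 97 a prime number? Answer yes or no.": "yes",
}

def accuracy(model: Callable[[str], str]) -> float:
    """Fraction of benchmark prompts the model answers correctly."""
    hits = sum(model(q).strip().lower() == a for q, a in BENCHMARK.items())
    return hits / len(BENCHMARK)

# Stand-ins for two dated snapshots of the same service (hypothetical).
def snapshot_a(prompt: str) -> str:
    return BENCHMARK[prompt]  # answers everything correctly

def snapshot_b(prompt: str) -> str:
    return "no"               # has drifted to always answering "no"

def drift(before: Callable[[str], str], after: Callable[[str], str]) -> float:
    """Change in benchmark accuracy; negative means the service got worse."""
    return accuracy(after) - accuracy(before)

change = drift(snapshot_a, snapshot_b)
print(f"accuracy change: {change:+.2f}")
```

The design choice that matters is freezing the queries. If the test set changes along with the system, nobody can tell which one moved.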

Will anyone pay attention to this cited journal article? Sure, a few interns and maybe a graduate student or two. But at this time, the trend is that AI works and AI applied to something delivers a solution. Is that solution reliable or is it just good enough? What if the outputs deteriorate in a subtle way over time? What’s the fix? Who is responsible? The engineer who fiddled with thresholds? The VP of product development who dismissed objections about inherent bias in outputs?

I think you may have an answer to these questions. As a dinobaby, I can say, “Folks, I don’t have a clue about fixing up the smart software juggernaut.” I am skeptical of those who say, “Hey, it just works.” Okay, I hope you are correct.

Stephen E Arnold, July 19, 2023

Accuracy: AI Struggles with the Concept

June 30, 2023

For those who find reading and understanding research papers daunting, algorithms can help. At least according to the write-up, “5 AI Tools for Summarizing a Research Paper” at Cointelegraph. Writer Alice Ivey emphasizes research articles can be full of jargon, complex ideas, and technical descriptions, making them tricky for anyone outside the researchers’ field. It is AI to the rescue! That is, as long as you don’t mind summaries that contain a few errors. We learn:

“Artificial intelligence (AI)-powered tools that provide support for tackling the complexity of reading research papers can be used to solve this complexity. They can produce succinct summaries, make the language simpler, provide contextualization, extract pertinent data, and provide answers to certain questions. By leveraging these tools, researchers can save time and enhance their understanding of complex papers.

But it’s crucial to keep in mind that AI tools should support human analysis and critical thinking rather than substitute for them. In order to ensure the correctness and reliability of the data collected from research publications, researchers should exercise caution and use their domain experience to check and analyze the outputs generated by AI techniques. … It’s crucial to keep in mind that AI tools may not always accurately capture the context of the original publication, even though they can help summarize research papers.”

So, one must be familiar with the area of study to judge whether the AI got it right. Doesn’t that defeat the purpose? One can imagine scenarios where relying on misinformation could have serious consequences. Or at least some embarrassment.

The article lists ChatGPT, QuillBot, SciSpacy, IBM Watson Discovery, and Semantic Scholar as our handy but potentially inaccurate AI explainers. Some readers may possess the knowledge needed to recognize a faulty summary and think such tools may at least save them a bit of time. It would be nice to know how much one would pay for that convenience, but that small detail is missing from the write-up. ChatGPT, for example, is $240 per year. It might be more cost effective to just read the articles for oneself.

Cynthia Murrell, June 30, 2023