Ah, Emergent Behavior: Tough to Predict, Right?

December 28, 2022

Super manager Jeff (I manage people well) Dean and a gam of Googlers published “Emergent Abilities of Large Language Models.” The idea is that those smart software systems informed by ingesting large volumes of content demonstrate behaviors the developers did not expect. Surprise!

Also, Google published a slightly less turgid discussion of the paper, which has 16 authors, in a blog post called “Characterizing Emergent Phenomena in Large Language Models.” This post went live in November 2022, but the time required to grind through the 30-page “technical” excursion was not available to me until this weekend. (Hey, being retired and working on my new lectures for 2023 is time-consuming. Plus, disentangling Google’s techy content marketing from the often tough to figure out text and tiny graphs is not easy for my 78-year-old eyes.)

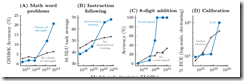

Helpful, right? Source: https://openreview.net/pdf?id=yzkSU5zdwD

In a nutshell, the smart software does things the wizards had not anticipated. According to the blog post:

> The existence of emergent abilities has a range of implications. For example, because emergent few-shot prompted abilities and strategies are not explicitly encoded in pre-training, researchers may not know the full scope of few-shot prompted abilities of current language models. Moreover, the emergence of new abilities as a function of model scale raises the question of whether further scaling will potentially endow even larger models with new emergent abilities. Identifying emergent abilities in large language models is a first step in understanding such phenomena and their potential impact on future model capabilities. Why does scaling unlock emergent abilities? Because computational resources are expensive, can emergent abilities be unlocked via other methods without increased scaling (e.g., better model architectures or training techniques)? Will new real-world applications of language models become unlocked when certain abilities emerge? Analyzing and understanding the behaviors of language models, including emergent behaviors that arise from scaling, is an important research question as the field of NLP continues to grow.

The write up emulates other Googlers’ technical write ups. I noted several facets of the topic not included in the version of the paper on OpenReview.net. (Note: Snag this document now because many Google papers, particularly research papers, have a tendency to become unfindable for the casual online search expert.)

First, emergent behavior means humans were able to observe unexpected outputs or actions. The question is, “What less obvious emergent behaviors are operating within the code edifice?” Is it possible the wizards are blind to more substantive but subtle processes? Could some of these processes be negative? If so, which ones, and how does the observer identify them before an undesirable or harmful outcome is discovered?

Second, emergent behavior, in my view of bio-emulating systems, evokes the metaphor of cancer. If we assume the emergent behavior is cancerous, what’s the mechanism for communicating these behaviors to others working in the field in a responsible way? Writing a 30-page technical paper takes time, even for super duper Googlers. Perhaps the “emergent” angle requires a bit more pedal to the metal?

Third, how does the emergent behavior fit into the Google plan to make its approach to smart software the de facto standard? There is big money at stake because more and more organizations will want smart software. But will these outfits sign up with a system that demonstrates what might be called “off the reservation” behavior? One example is the use of Google methods for war fighting. Will smart software write a sympathy note to those affected by an emergent behavior or just a plain incorrect answer buried in a subsystem?

Net net: I discuss emergent behavior in my lecture about shadow online services. I cover what the software does and what use humans make of these little understood yet rapidly diffusing methods.

Stephen E Arnold, December 28, 2022