A Different View of Smart Software with a Killer Cost Graph

February 22, 2023

I read “The AI Crowd is Mad.” I don’t agree. I think the “in” word is “hallucinatory.” Several write ups have described the activities of Google and Microsoft as an “arms race.” I am not sure about that characterization either.

The write up includes a statement with which I agree; to wit:

… when listening to podcasters discussing the technology’s potential, a stereotypical assessment is that these models already have a pretty good accuracy, but that with (1) more training, (2) web-browsing support and (3) the capabilities to reference sources, their accuracy problem can be fixed entirely.

In my 50-plus-year career in online information and systems, I have watched some problems get kicked down the road again and again. New technology appears and stubs its toe on one of those cans. Rusted cans can slice the careless sprinter on the Information Superhighway and kill the speedy wizard via the tough-to-see Clostridium tetani bacterium. The surface problem is one thing; the problem chugging unseen below the surface may be a different beastie. Search and retrieval is one of those “problems” which has been difficult to solve. Just ask someone who frittered away beaucoup bucks trying to improve search. Please, don’t confuse monetization with effective precision and recall.

The write up also includes this statement which resonated with me:

if we can’t trust the model’s outcomes, and we paste-in a to-be-summarized text that we haven’t read, then how can we possibly trust the summary without reading the to-be-summarized text?

Trust comes up frequently in discussions of smart software. In fact, the Sundar and Prabhakar script often includes the word “trust.” My response has been and will be “Google = trust? Sure.” I am not willing to trust Microsoft’s Sydney or whatever it is calling itself today. After one Microsoft update, we could not print. Yep, skill in marketing is not the same as reliable software.

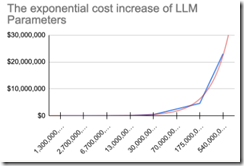

But the highlight of the write up is this chart. For the purpose of this blog post, let’s assume the numbers are close enough for horseshoes:

Source: https://proofinprogress.com/posts/2023-02-01/the-ai-crowd-is-mad.html

What the data suggest to me is that training and retraining models is expensive. Google figured this out. The company wants to train using synthetic data. I suppose synthetic data will be better than the content generated by organizations purposely pumping misinformation into the public text pool. Many companies have discovered that models, not just queries, can be engineered to deliver results the super software wizards did not think about too much. (Remember dying from that cut toe on the Information Superhighway?)

The cited essay includes another wonderful question. Here it is:

But why aren’t Siri and Watson getting smarter?

May I suggest some reasons, based on our dabbling with AI-infused machine indexing of business information in 1981:

- Language is slippery, more slippery than an eel in Vedius Pollio’s eel pond. Thus, subject matter experts have to fiddle to make sure the words in the content and the words in a query overlap, or at least overlap enough for the searcher to locate the needed information.

- Narrow domains such as scientific, technical, and medical text are easier to index with software. Broad domains like general content are more difficult for the software. A static model and new content “drift” apart. This is okay as long as the two are steered back together. But who has the time, money, or inclination to admit that software intelligence and human intelligence are not yet the same, except in PowerPoint pitch decks and academic papers with mostly non-reproducible results? And who wants narrow domains? Go broad and big or go home.

- The basic math and procedures may be old. Autonomy’s method rested on Bayesian math crafted by a stats-mad guy in the 18th century. What needs to be fiddled with are [a] sequences of procedures, [b] thresholds for a decision point, and [c] software add-ons that work around problems no one knew existed until some smarty pants posts a flub on Twitter, among other issues.

Net net: We are in the midst of a marketing war. The AI part of the dust-up is significant, but as flawed smart software is applied to generating content which may be incorrect, another challenge awaits: the Edsel and New Coke of artificial intelligence.

Stephen E Arnold, February 22, 2023