Is Philosophy Irrelevant to Smart Software? Think Before Answering, Please

January 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I listened to Lex Fridman’s interview with the founder of Extropic. The founder is into smart software and “inventing” a fresh approach to the plumbing required to make AI more like humanoids.

As I listened to the questions and answers, three factoids stuck in my mind:

- Extropic’s desire to just go really fast is a conscious decision shared among those involved with the company; that is, we know who wants to go fast because they work at or with the firm. (I am not going to argue about the upside and downside of “going fast.” That will be another essay.)

- The downstream implications of the Extropic vision are secondary to the benefits of finding ways to avoid concentration of AI power. I think the idea is that absolute power produces outfits like the Google-type firms which are bedeviling competitors, users, and government authorities. Going fast is not a thrill for processes that require going slow.

- The decisions Extropic’s founder has made are bound up in a world view, personal behaviors for productivity, interesting foods, and learnings accreted over a stellar academic and business career. In short, Extropic embodies a philosophy.

Philosophy, therefore, influences decisions. So we come to my topic in this essay. I noted two different write ups about how informed people take decisions. I am not going to refer to philosophers popular in introductory college philosophy classes. I am going to ignore the uneven treatment of philosophers in Will and Ariel Durant’s Story of Philosophy. Nah. I am going with state of the art modern analysis.

The first online article I read is a survey (knowledge product) from the estimable IBM / Watson outfit or a contractor. The relatively current document is “CEO Decision Making in the Age of AI.” The main point of the document in my opinion is summed up in this statement from a computer hardware and services company:

Any decision that makes its way to the CEO is one that involves high degrees of uncertainty, nuance, or outsize impact. If it was simple, someone else— or something else—would do it. As the world grows more complex, so does the nature of the decisions landing on a CEO’s desk.

But how can a CEO decide? The answer is, “Rely on IBM.” I am not going to recount the evolution (perhaps devolution) of IBM or the uncomfortable stories about shedding old employees (the term dinobaby originated at IBM according to one former I’ve Been Moved veteran). I will not explain IBM’s decisions about chip fabrication, its interesting hiring policies of individuals who might have retained some fondness for the land of their fathers and mothers, or the fancy dancing required to keep mainframes as a big money pump. Nope.

The point is that IBM is positioning itself as a thought leader, a philosopher of smart software, technology, and management. I find this interesting because IBM, like some Google type companies, is a case example of management shortcomings. These same shortcomings are swathed in weird jargon and buzzwords which are bent to one end: Generating revenue.

Let me highlight one comment from the 27 page document and urge you to read it when you have a few moments free. Here’s the one passage I will use as a touchstone for “decision making”:

The majority of CEOs believe the most advanced generative AI wins.

Oh, really? Is smart software sufficiently mature? That’s news to me. My instinct is that it is new information to many CEOs as well.

The second essay about decision making is from an outfit named Ness Labs. That essay is “The Science of Decision-Making: Why Smart People Do Dumb Things.” The structure of this essay is more along the lines of a consulting firm’s white paper. The approach contrasts with IBM’s free-floating global survey document.

The obvious implication is that if smart people are making dumb decisions, smart software can solve the problem. Extropic would probably agree and, were the IBM survey data accurate, “most CEOs” buy into a ride on the AI bandwagon.

The Ness Labs’ document includes this statement which in my view captures the intent of the essay. (I suggest you read the essay and judge for yourself.)

So, to make decisions, you need to be able to leverage information to adjust your actions. But there’s another important source of data your brain uses in decision-making: your emotions.

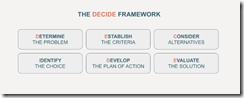

Ah, ha, logic collides with emotions. But to fix the “problem” Ness Labs provides a diagram created in 2008 (a bit before the January 2022 Microsoft OpenAI marketing fireworks:

Note that “decide” is a mnemonic device intended to help me remember each of the items. I learned this technique in the fourth grade when I had to memorize the names of the Great Lakes. No one has ever asked me to name the Great Lakes by the way.

Okay, what we have learned is that IBM has survey data backing up the idea that smart software is the future. Those data, if on the money, validate the go-go approach of Extropic. Plus, Ness Labs provides a “decider model” which can be used to create better decisions.

I concluded that philosophy is less important than fostering a general message that says, “Smart software will fix up dumb decisions.” I may be oversimplifying, but the implicit assumptions about the importance of artificial intelligence, the reliability of the software, and the allegedly universal desire of big time corporate management are treated as not worth worrying about.

Why is the cartoon philosopher worrying? I think most of this stuff is a poorly made road on which those jockeying for power and money want to drive their most recent knowledge vehicles. My tip? Look before crossing that information superhighway. Speeding myths can be harmful.

Stephen E Arnold, January 8, 2024