Open Source Software: Free Gym Shoes for Bad Actors

January 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Many years ago, I completed a number of open source projects. Although different clients hired my team and me, the big question was, “What’s the future of open source software as an investment opportunity and as a substitute for commercial software?” Our work focused on three major points:

- Community support for a widely-used software once the original developer moved on

- A way to save money and get rid of the “licensing handcuffs” commercial software companies clamped on their customers

- Security issues resulting from poisoned code or obfuscated “special features.”

My recollection is that the customers focused on one point, the opportunity to save money. Commercial software vendors were in the “lock in” game, and open source software offered a way out for database, utility, and search and retrieval applications.

Today, a young innovator may embrace an open source approach to generative smart software. Apart from the issues embedded in the large language model methods themselves, building a product on other people’s code available as open source software looks like a certain path to money.

An open source game plan sounds like a winner. Then upon starting work, the path reveals its risks. Thanks, MSFT Copilot, you exhausted me this morning. Good enough.

I thought about our work in open source when I read “So, Are We Going to Talk about How GitHub Is an Absolute Boon for Malware, or Nah?” The write up opines:

In a report published on Thursday, security shop Recorded Future warns that GitHub’s infrastructure is frequently abused by criminals to support and deliver malware. And the abuse is expected to grow due to the advantages of a “living-off-trusted-sites” strategy for those involved in malware. GitHub, the report says, presents several advantages to malware authors. For example, GitHub domains are seldom blocked by corporate networks, making it a reliable hosting site for malware.

Those cost advantages can be vaporized once a security issue becomes known. The write up continues:

Reliance on this “living-off-trusted-sites” strategy is likely to increase and so organizations are advised to flag or block GitHub services that aren’t normally used and could be abused. Companies, it’s suggested, should also look at their usage of GitHub services in detail to formulate specific defensive strategies.

How about a risk round up?

- The licenses vary. Litigation is a possibility. For big companies with lots of legal eagles, court battles are no problem. Just write a check or cut a deal.

- Forks make it easy for bad actors to exploit some open source projects.

- A big aggregator of open source like MSFT GitHub is not in the open source business and may deflect criticism without spending money to correct issues as they are discovered. It’s free software, isn’t it?

- The “community” may include good actors who accept cash from what looks like a reputable organization and become the unwitting dupes of an industrialized cyber gang.

- Commercial products integrating or built upon open source may have to do some very fancy dancing when a problem becomes publicly known.

There are other concerns as well. The problem is that open source’s appeal is now powered by two different performance enhancers. The first is the perception that open source software reduces certain costs. The second is the mad integration of open source smart software.

What’s the fix? My hunch is that words will take the place of meaningful action and remediation. Economic pressure and the desire to use what is free make more sense to many business wizards.

Stephen E Arnold, January 15, 2024

Cybersecurity AI: Yet Another Next Big Thing

January 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Not surprisingly, generative AI has boosted the cybersecurity arms race. As bad actors use algorithms to more efficiently breach organizations’ defenses, security departments can only keep up by using AI tools. At least that is what VentureBeat maintains in, “How Generative AI Will Enhance Cybersecurity in a Zero-Trust World.” Writer Louis Columbus tells us:

“Deep Instinct’s recent survey, Generative AI and Cybersecurity: Bright Future of Business Battleground? quantifies the trends VentureBeat hears in CISO interviews. The study found that while 69% of organizations have adopted generative AI tools, 46% of cybersecurity professionals feel that generative AI makes organizations more vulnerable to attacks. Eighty-eight percent of CISOs and security leaders say that weaponized AI attacks are inevitable. Eighty-five percent believe that gen AI has likely powered recent attacks, citing the resurgence of WormGPT, a new generative AI advertised on underground forums to attackers interested in launching phishing and business email compromise attacks. Weaponized gen AI tools for sale on the dark web and over Telegram quickly become best sellers. An example is how quickly FraudGPT reached 3,000 subscriptions by July.”

That is both predictable and alarming. What should companies do about it? The post warns:

“‘Businesses must implement cyber AI for defense before offensive AI becomes mainstream. When it becomes a war of algorithms against algorithms, only autonomous response will be able to fight back at machine speeds to stop AI-augmented attacks,’ said Max Heinemeyer, director of threat hunting at Darktrace.”

Before AI is mainstream? Better get moving. We’re told the market for generative AI cybersecurity solutions is already growing, and Forrester divides it into three use cases: content creation, behavior prediction, and knowledge articulation. Of course, Columbus notes, each organization will have different needs, so adaptable solutions are important. See the write-up for some specific tips and links to further information. The tools may be new but the dynamic is a constant: as bad actors up their game, so too must security teams.

Cynthia Murrell, January 15, 2024

Do You Know the Term Quality Escape? It Is a Sign of MBA Efficiency Talk

January 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I am not too keen on leaving my underground computer facility. Given the choice between a flight on a commercial airline and a Zoom call, I fire up the Zoom. It works reasonably well. Plus, I don’t have to worry about screwed up flight controls, aircraft maintenance completed in a country known for contraband, and pilots trained on flawed or incomplete instructional materials. Why am I nervous? As a Million Mile traveler on a major US airline, I have survived a guy dying in the seat next to me, assorted “return to airport” delays, and personal time spent in a comfy seat as pilots tried to get the mechanics to give the okay for the passenger jet to take off. (Hey, it just landed. What’s up? Oh, right, nothing.)

Another example of a quality escape: Modern car, dead battery, parts falling off, and a flat tire. Too bad the driver cannot plug into the windmill. Thanks, MSFT Copilot Bing thing. Good enough because the auto is not failing at 14,000 feet.

I mention my thrilling life as a road warrior because I read “Boeing 737-9 Grounding: FAA Leaves No Room For ‘Quality Escapes.’” In that “real” news report I spotted a phrase which was entirely new to me. Imagine. After more than 50 years of work in assorted engineering disciplines at companies ranging from old-line industrial giants like Halliburton to hippy zippy outfits in Silicon Valley, here was a word pair that baffled me:

Quality Escape

Well, quality escape means that a product was manufactured, certified, and deployed even though it did not meet “standards.” In plain words, the door and components were not safe and, therefore, lacked quality. And escape? That means failure. An F, flop, or fizzle.

“FAA Opens Investigation into Boeing Quality Control after Alaska Airlines Incident” reports:

… the [FAA] agency has recovered key items sucked out of the plane. On Sunday, a Portland schoolteacher found a piece of the aircraft’s fuselage that had landed in his backyard and reached out to the agency. Two cell phones that were likely flung from the hole in the plane were also found in a yard and on the side of the road and turned over to investigators.

I worked on an airplane related project or two when I was younger. One member of my team owned two light aircraft, one of which was acquired from an African airline and then certified for use in the US. I had a couple of friends who were jet pilots in the US government. I picked up some random information; namely, FAA inspections are a hassle. Required work is expensive. Stuff breaks all the time. When I was picking up airplane info, my impression was that the FAA enforced standards of quality. One of the pilots was a certified electrical engineer. He was not able to repair his electrical equipment due to FAA regulations. The fellow followed the rules because the FAA in that far off time did not practice “good enough” oversight in my opinion. Today? Well, no people fell out of the aircraft when the door came off and the pressure equalization took place. iPhones might survive a fall from 14,000 feet. Most humanoids? Nope. Shoes, however, do fare reasonably well.

Several questions:

- Exactly how can a commercial aircraft be certified and then shed a door in flight?

- Who is responsible for okaying the aircraft model in the first place?

- Didn’t some similar aircraft produce exciting and consequential results for the passengers, their families, pilots, and the manufacturer?

- Why call crappy design and engineering “quality escape”? Crappy is simpler, more to the point.

Yikes. But if it flies, it is good enough. Excellence has a different spin these days.

Stephen E Arnold, January 12, 2024

Has a Bezos Protuberance Knocked WaPo for a Loop?

January 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I thought the Washington Post was owned by one of the world’s richest men, the one with a giant rocket ship and a really big yacht with huge protuberances. I probably am wrong, but what’s new? I found the information in “Washington Post Newsroom Is Rattled by Buyouts” in line with other organizations’ layoffs, terminations, RIFs, whatever. My goodness, how could an outfit with some Bezos magic be cutting costs? I think that protuberance obsessed fellow just pumped big money into an artificial intelligence start up. To fund that, cost cutting makes sense in today’s business environment. I find the mix of cost cutting and wild and crazy investments in relatively unproven technology amusing. No, it is not interesting.

An aspiring journalist at a university whose president quit because it was easier to invent and recycle information looks at the closed library. The question is a good one, even if the young journalist cannot spell. Thanks, MSFT Copilot Bing thing. Three tries. Bingo.

The write up in Vanity Fair, which is a business magazine like Harvard University’s Harvard Business Review without the allegations of plagiarism and screwy diversity battles, brings up images of would-be influencers clutching their giant metal and custom ceramic mugs and gripping their mobile phones with fear in their eyes. Imagine. A newspaper with staff cuts. News!

The article points out:

Scaling back staff while heading into a pivotal presidential election year seems like an especially ill-timed move given the _Post_’s traditional strengths in national politics and policy. Senior editors at the _Post_ have been banking on heightened interest in the election to juice readership amid slowed traffic and subscriptions. At one point in the meeting, according to two staffers, investigative reporter Carol Leonnig said that over the years she’d been told that the National team was doing great work and that issues on the business side would be taken care of, only for the problems to persist.

The write up states:

In late December, word of who’d taken a buyout at _The_ _Washington Post_ began to trickle out. Reporters found themselves especially alarmed by the hard cost cutting hit taken by one particular department: news research, a unit that assists investigations by, among other things, tracking down subjects, finding court records, verifying claims, and scouring documents. The department’s three most senior researchers—Magda Jean-Louis and Pulitzer Prize winners Alice Crites and Jennifer Jenkins—had all accepted buyouts, among the 240 that the company offered employees across departments amid financial struggles. That left news research with only three people: supervisor Monika Mathur and researchers Cate Brown, who specializes in international research, and Razzan Nakhlawi.

The “real news” is that research librarians are bailing out before a day of reckoning which could nuke pensions and other benefits. Researchers are quite intelligent people in my opinion.

Do these actions reflect on Mr. Bezos, he of the protuberance fixation, and his management methods? Amazon has a handful of challenges. The oddly shaped Bezos rocket ship has on occasion exploded. And now the gem of DC journalism is losing people. I would suggest that management methods have a role to play.

Killing off support for corporate libraries is not a new thing. The Penn Central outfit was among the first corporate giants to decide its executives could live without a special library. Many other firms have followed in the last 15 years or so. Now the Special Libraries Association is a shadow of its former self, trampled by expert researchers skilled in the use of the Google and by hordes of self-certified individuals who proclaim themselves open source information experts. Why wouldn’t an outfit focused on accurate information dump professional writers and researchers? It meshes quite well with alternative facts, fake news, and AI-generated content. Good enough is the mantra of the modern organization. How much cereal is in your kids’ breakfast box when you first open it? A box half full. Good enough.

Stephen E Arnold, January 12, 2024

Believe in Smart Software? Sure, Why Not?

January 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Predictions are slippery fish. Grab one, a foot-long Lake Michigan beastie. Now hold on. Wow, that looked easy. Predictions are similar. But slippery fish can get away or flop around and make those in the boat look silly. I thought about fish and predictions when I read “What AI will Never Be Able To Do.” The essay is a replay of an answer from an AI or smart software system.

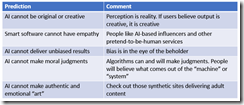

My initial reaction was that someone came up with a blog post that required Google Bard and what seems to be minimal effort to create. I am thinking about how a high school student might rely on ChatGPT to write an essay about a current event or a how-to essay. I reread the write up and formulated several observations. The table below presents the “prediction” and my comment about that statement. I end the essay with a general comment about smart software.

The presentation of word salad reassurances underscores a fundamental problem of smart software. The system can be tuned to reassure. At the same time, the companies operating the software can steer, shape, and weaponize the information presented. Those without the intellectual equipment to research and reason about outputs are likely to accept the answers. The deterioration of education in the US and other countries virtually guarantees that smart software will replace critical thinking for many people.

Don’t believe me? Ask one of the engineers working on next generation smart software. Just don’t ask the systems or the people who use another outfit’s software to do the thinking.

Stephen E Arnold, January 12, 2024

Canada and Mobile Surveillance: Is It a Reality?

January 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

It appears a baker’s dozen of Canadian agencies are ignoring a longstanding federal directive on privacy protections. Yes, Canada. According to CBC/Radio-Canada, “Tools Capable of Extracting Personal Data from Phones Being Used by 13 Federal Departments, Documents Show.” The trend surprised even York University associate professor Evan Light, who filed the original access-to-information request. Reporter Brigitte Bureau shares:

“Tools capable of extracting personal data from phones or computers are being used by 13 federal departments and agencies, according to contracts obtained under access to information legislation and shared with Radio-Canada. Radio-Canada has also learned those departments’ use of the tools did not undergo a privacy impact assessment as required by federal government directive. The tools in question can be used to recover and analyze data found on computers, tablets and mobile phones, including information that has been encrypted and password-protected. This can include text messages, contacts, photos and travel history. Certain software can also be used to access a user’s cloud-based data, reveal their internet search history, deleted content and social media activity. Radio-Canada has learned other departments have obtained some of these tools in the past, but say they no longer use them. … ‘I thought I would just find the usual suspects using these devices, like police, whether it’s the RCMP or [Canada Border Services Agency]. But it’s being used by a bunch of bizarre departments,’ [Light] said.”

To make matters worse, none of the agencies had conducted the required Privacy Impact Assessments. A federal directive issued in 2002 and updated in 2010 required such PIAs to be filed with the Treasury Board of Canada Secretariat and the Office of the Privacy Commissioner before any new activities involving collecting or handling personal data. Light is concerned that agencies flat out ignoring the directive means digital surveillance of citizens has become normalized. Join the club, Canada.

Cynthia Murrell, January 12, 2024

A Google Gem: Special Edition on 1-11-24

January 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I learned that the Google has swished its tail and killed off some baby Googlers. Giant creatures can do that. Thomson Reuters (the trust outfit) reported the “real” news in “Google Lays Off Hundreds in Assistant, Hardware, Engineering Teams.” But why? The Google is pulsing with revenue, opportunity, technology, and management expertise. Thomson Reuters has the answer:

"Throughout second-half of 2023, a number of our teams made changes to become more efficient and work better, and to align their resources to their biggest product priorities. Some teams are continuing to make these kinds of organizational changes, which include some role eliminations globally," a spokesperson for Google told Reuters in a statement.

On YCombinator’s HackerNews, I spotted some interesting comments. Foofie asserted: “In the last quarter Alphabet reported ‘total revenues of $76.69bn, an increase of 11 percent year-on-year (YoY).’ Google Cloud alone grew 22%.”

A giant corporate creature plods forward. Is the big beastie mindful of those who are crushed in the process? Sure, sure. Thanks, MSFT Copilot Bing thing. Good enough.

BigPeopleAreOld observes: “As long as you can get another job and can get severance pay, a layoff feel like an achievement than a loss. That happened me in my last company, one that I was very attached to for what I now think was irrational reasons. I wanted to leave anyway, but having it just happen and getting a nice severance pay was a perk. I am treating my new job as the complete opposite and the feeling is cathartic, which allows me to focus better on my work instead of worrying about the maintaining the illusion of identity in the company I work for.”

Yahoo, that beacon of stability, tackled the human hedge trimming in “Google Lays Off Hundreds in Hardware, Voice Assistant Teams.” The Yahooligans report:

The reductions come as Google’s core search business feels the heat from rival artificial-intelligence offerings from Microsoft Corp. and ChatGPT-creator OpenAI. On calls with investors, Google executives pledged to scrutinize their operations to identify places where they can make cuts, and free up resources to invest in their biggest priorities.

I like the word “pledge.” I wonder what it means in the land of Googzilla.

And how did the Google RIF these non-essential wizards and wizardettes? According to 9to5Google.com:

This reorganization will see Google lay off a few hundred roles across Devices & Services, though the majority is happening within the first-party augmented reality hardware team. This downsizing suggests Google is no longer working on its own AR hardware and is fully committed to the OEM-partnership model. Employees will have the ability to apply to open roles within the company, and Google is offering its usual degree of support.

Several observations:

- Dumping employees reduces costs, improves efficiency, and delivers other MBA-identified goodies. Efficiency is logical.

- The competitive environment is more difficult than some perceive. Microsoft, OpenAI, and the many other smart software outfits are offering alternatives to Google search even when these firms are not trying to create problems for Google. Search sucks and millions are looking for an alternative. I sense fear among the Googlers.

- The regulatory net is becoming more and more difficult to avoid. The EU and other governmental entities see Google as a source of money. The formula seems to be to litigate, find guilty, and fine. What’s not to like for cash strapped government entities?

- For more than a year, the Google has been struggling with its slip-on sneakers. As a result, the Google conveys that it is not able to make a dash to the ad convenience store as it did when it was younger, friskier. Google looks old, and predators know that the old can become a snack.

See? Google cares.

Stephen E Arnold, January 11, 2024

A Decision from the High School Science Club School of Management Excellence

January 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I can’t resist writing about Inc. Magazine and its Google management articles. These are knee slappers for me. The write up causing me to chuckle is “Google’s CEO, Sundar Pichai, Says Laying Off 12,000 Workers Was the Worst Moment in the Company’s 25-Year History.” Zowie. A personnel decision coupled with late-night, anonymous termination notices. What’s not to like? What does the “real” news write up have to say?

Google had to lay off 12,000 employees. That’s a lot of people who had been showing up to work, only to one day find out that they’re no longer getting a paycheck because the CEO made a bad bet, and they’re stuck paying for it.

“Well, that clever move worked when I was in my high school’s science club. Oh, well, I will create a word salad to distract from my decision making. Heh, heh, heh,” says the distinguished corporate leader to a “real” news publication’s writer. Thanks, MSFT Copilot Bing thing. Good enough.

I love the “had.”

The Inc. Magazine story continues:

Still, Pichai defends the layoffs as the right decision at the time, saying that the alternative would have been to put the company in a far worse position. “It became clear if we didn’t act, it would have been a worse decision down the line,” Pichai told employees. “It would have been a major overhang on the company. I think it would have made it very difficult in a year like this with such a big shift in the world to create the capacity to invest in areas.”

And Inc Magazine actually criticizes the Google! I noted:

To be clear, what Pichai is saying is that Google decided to spend money to hire employees that it later realized it needed to invest elsewhere. That’s a failure of management to plan and deliver on the right strategy. It’s an admission that the company’s top executives made a mistake, without actually acknowledging or apologizing for it.

From my point of view, let’s focus on the word “worst.” Are there other Google management decisions which might be considered in evaluating Inc. Magazine’s and Sundar Pichai’s “worst”? Yep, I have a couple of items:

- A lawyer making babies in the Google legal department

- A Google VP dying with a contract worker on the Googler’s yacht as a result of an alleged substance subject to DEA scrutiny

- A Googler fond of being a glasshole giving up a wife and causing a soul mate to attempt suicide

- Firing Dr. Timnit Gebru and kicking off the stochastic parrot thing

- The presentation after Microsoft announced its ChatGPT initiative and the knee jerk Red Alert

- Proliferating duplicative products

- Sunsetting services with little or no notice

- The Google Map / Waze thing

- The messy Google Brain Deep Mind shebang

- The Googler who thought the Google AI was alive.

Wow, I am tired mentally.

But the reality is that I am not sure if anyone in Google management is particularly connected to the problems, issues, and challenges of losing a job in the midst of a Foosball game. But that’s the Google. High school science club management delivers outstanding decisions. I was in my high school science club, and I know the fine decision making our members made. One of those cost the life of one of our brightest stars. Stars make bad decisions, chatter, and leave some behind.

Stephen E Arnold, January 11, 2024

Can Technology Be Kept in a Box?

January 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

If true, this is a relationship worth keeping an eye on. Tom’s Hardware reports, “China Could Have Access to the Largest AI Chips Ever Made, Supercomputer with 54 Million Cores—US Government Investigates Cerebras’ UAE-Based Partner.” That United Arab Emirates partner is a holding company called G42, and it has apparently been collecting the powerful supercomputers to underpin its AI ambitions. According to reporting from the New York Times, that collection now includes the record-breaking processors from California-based Cerebras. Writer Anton Shilov gives us the technical details:

“Cerebras’ WSE-2 processors are the largest chips ever brought to market, with 2.6 trillion transistors and 850,000 AI-optimized cores all packed on a single wafer-sized 7nm processor, and they come in CS-2 systems. G42 is building several Condor Galaxy supercomputers for A.I. based on the Cerebras CS-2 systems. The CG-1 supercomputer in Santa Clara, California, promises to offer four FP16 Exaflops of performance for large language models featuring up to 600 billion parameters and offers expansion capability to support up to 100 trillion parameter models.”

That is impressive. One wonders how fast that system sucks down water. But what will the firms do with all this power? That is what the CIA is concerned about. We learn:

“G42 and Cerebras plan to launch six four-Exaflop Condor Galaxy supercomputers worldwide; these machines are why the CIA is suspicious. Under the leadership of chief executive Peng Xiao, G42’s expansion has been marked by notable agreements — including a partnership with AstraZeneca and a $100 million collaboration with Cerebras to develop the ‘world’s largest supercomputer.’ But classified reports from the CIA paint a different picture: they suggest G42’s involvement with Chinese companies — specifically Huawei — raises national security concerns.”

For example, G42 may become a clearinghouse for sensitive American technologies and genetic data, we are warned. Also, with these machines located outside the US, they could easily be used to train LLMs for the Chinese. The US has threatened sanctions against G42 if it continues to associate with Chinese entities. But as Shilov points out, we already know the UAE has been cozying up to China and Russia and distancing itself from the US. Sanctions may have a limited impact. Tech initiatives like G42’s are seen as an important part of diversifying the country’s economy beyond oil.

Cynthia Murrell, January 11, 2024

TikTok Weaponized? Who Knows

January 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.

“TikTok Restricts Hashtag Search Tool Used by Researchers to Assess Content on Its Platform” makes clear that transparency from a commercial entity is a work in progress or regress as the case may be. NBC reports:

TikTok has restricted one tool researchers use to analyze popular videos, a move that follows a barrage of criticism directed at the social media platform about content related to the Israel-Hamas war and a study that questioned whether the company was suppressing topics that don’t align with the interests of the Chinese government. TikTok’s Creative Center – which is available for anyone to use but is geared towards helping brands and advertisers see what’s trending on the app – no longer allows users to search for specific hashtags, including innocuous ones.

An advisor to TikTok who works at a Big Time American University tells his students that they are not permitted to view the data the mad professor has gathered as part of his consulting work for a certain company affiliated with the Middle Kingdom. The students don’t seem to care. Each is viewing TikTok videos about restaurants serving super sized burritos. Thanks, MSFT Copilot Bing thing. Good enough.

Does anyone really care?

Those with sympathy for the China-linked service do. The easiest way to reduce the hassling from annoying academic researchers or analysts at non-governmental organizations is to become less transparent. The method has proven its value to other firms.

Several observations can be offered:

- TikTok is an immensely influential online service for young people. Blocking access to data about what’s available via TikTok and who accesses certain data underscores the weakness of certain US governmental entities. TikTok does something to reduce transparency and what happens? NBC News does a report. Big whoop as one of my team likes to say.

- Less transparency means that scrutiny becomes more difficult. That decision immediately increases my suspicion level about TikTok. The action makes clear that transparency creates unwanted scrutiny and criticism. The idea is, “Let’s kill that fast.”

- TikTok competitors have their work cut out for them. No longer can their analysts gather information directly. Third party firms can assemble TikTok data, but that is often slow and expensive. Competing with TikTok becomes a bit more difficult, right, Google?

To sum up, social media short form content can be weaponized. The value of a weapon is greater when its true nature is not known, right, TikTok?

Stephen E Arnold, January 10, 2024