Sci Fi or Sci Fake: A Post about a Chinese Force Field

January 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Imagine a force field which can deflect a drone or other object. Commercial applications could range from protecting passenger vehicles to directing flows of material in a manufacturing process. Is a force field a confection of science fiction writers or a technical avenue nearing market entry?

A Tai Chi master uses his powers to take down a drone. Thanks, MSFT Copilot Bing thing. Good enough.

“Chinese Scientists Create Plasma Shield to Guard Drones, Missiles from Attack” presents information which may be a combination of “We’re innovating and you are not” and “science fiction.” The write up reports:

The team led by Chen Zongsheng, an associate researcher at the State Key Laboratory of Pulsed Power Laser Technology at the National University of Defence Technology, said their “low-temperature plasma shield” could protect sensitive circuits from electromagnetic weapon bombardments with up to 170kW at a distance of only 3 metres (9.8 feet). Laboratory tests have shown the feasibility of this unusual technology. “We’re in the process of developing miniaturized devices to bring this technology to life,” Chen and his collaborators wrote in a peer-reviewed paper published in the Journal of National University of Defence Technology last month.

But the write up makes clear that other countries like the US are working to make force fields more effective. China has a colorful way to explain their innovation; to wit:

The plasma-based energy shield is a radical new approach reminiscent of tai chi principles – rather than directly countering destructive electromagnetic assaults it endeavors to convert the attacker’s energy into a defensive force.

Tai chi, as I understand the discipline, is a combination of mental discipline and specific movements to develop mental peace, promote physical well being, and control internal force for a range of purposes.

How does the method function? The article explains:

… When attacking electromagnetic waves come into contact with these charged particles, the particles can immediately absorb the energy of the electromagnetic waves and then jump into a very active state. If the enemy continues to attack or even increases the power at this time, the plasma will suddenly increase its density in space, reflecting most of the incidental energy like a mirror, while the waves that enter the plasma are also overwhelmed by avalanche-like charged particles.
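For readers who want a number to go with the mirror metaphor, here is a minimal sketch of the textbook rule of thumb for a cold, collisionless plasma: a wave is reflected when its frequency falls below the plasma frequency, which rises with electron density. This is not the method in the Chinese paper, which the article does not describe in detail. The function names and the 10 GHz and density figures are my own assumptions for illustration only.

```python
import math

# CODATA physical constants (SI units)
EPS0 = 8.8541878128e-12   # vacuum permittivity, F/m
M_E = 9.1093837015e-31    # electron mass, kg
Q_E = 1.602176634e-19     # elementary charge, C


def plasma_frequency_hz(n_e: float) -> float:
    """Electron plasma frequency in Hz for electron density n_e (per cubic metre)."""
    omega_p = math.sqrt(n_e * Q_E**2 / (EPS0 * M_E))
    return omega_p / (2 * math.pi)


def critical_density_m3(wave_hz: float) -> float:
    """Electron density above which a wave of frequency wave_hz is reflected."""
    omega = 2 * math.pi * wave_hz
    return EPS0 * M_E * omega**2 / Q_E**2


def reflects(n_e: float, wave_hz: float) -> bool:
    """True if the idealized plasma reflects the wave (wave below plasma frequency)."""
    return wave_hz < plasma_frequency_hz(n_e)


if __name__ == "__main__":
    wave_hz = 10e9  # hypothetical 10 GHz attacking wave, an assumption for illustration
    for n_e in (1e17, 1e18, 1e19):  # hypothetical electron densities
        print(n_e, plasma_frequency_hz(n_e), reflects(n_e, wave_hz))
    print("critical density:", critical_density_m3(wave_hz))
```

In this idealized model, pushing the density past the critical value is what flips the plasma from absorbing to mirror-like behavior, which is roughly the story the article tells. Real plasmas have collisions and finite thickness, so treat the sketch as a back-of-envelope check, not a design tool.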

One question: Are technologists mining motion pictures, television shows, and science fiction for ideas?

Beam me up, Scotty.

Stephen E Arnold, January 10, 2024

Cheating: Is It Not Like Love, Honor, and Truth?

January 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I would like to believe the information in this story: “ChatGPT Did Not Increase Cheating in High Schools, Stanford Researchers Find.” My reservations can be summed up with three points: [a] The Stanford president (!) who made up data, [b] The behavior of Stanford MBAs at certain go-go companies, and [c] How does one know a student did not cheat? (I know the answer: Surveillance technology, perchance. Oops. That’s incorrect. That technology is available to Stanford graduates working at certain techno feudalist outfits.)

Mom asks her daughter, “I showed you how to use the AI generator, didn’t I? Why didn’t you use it?” Thanks, MSFT Copilot Bing thing. Pretty good today.

The cited write up reports as actual factual:

The university, which conducted an anonymous survey among students at 40 US high schools, found about 60% to 70% of students have engaged in cheating behavior in the last month, a number that is the same or even decreased slightly since the debut of ChatGPT, according to the researchers.

I have tried to avoid big time problems in my dinobaby life. However, I must admit that in high school, I did these things: [a] Worked with my great grandmother to create a poem subsequently published in a national anthology in 1959. Granny helped me cheat; she was a deceitful septuagenarian as I recall. I put my name on the poem, omitting Augustus. Yes, cheating. [b] Sold homework to students not in my advanced classes. I would consider this cheating, but I was saving money for my summer university courses at the University of Illinois. I went for the cash. [c] After I ended up in the hospital, my girl friend at the time showed up at the hospital, reviewed the work covered in class, and finished a science worksheet because I passed out from the post surgery medications. Yes, I cheated, and Linda Mae who subsequently spent her life in Africa as a nurse, helped me cheat. I suppose I will burn in hell. My summary suggests that “cheating” is an interesting concept, and it has some nuances.

Did the Stanford (let’s make up data) University researchers nail down cheating or just hunt for the AI thing? Are the data reproducible? Was the methodology rigorous, the results validated, and micro analyses run to determine if the data were on the money? Yeah, sure, sure.

I liked this statement:

Stanford also offers an online hub with free resources to help teachers explain to high school students the dos and don’ts of using AI.

In the meantime, the researchers said they will continue to collect data throughout the school year to see if they find evidence that more students are using ChatGPT for cheating purposes.

Yep, this is a good pony to ride. I would ask: Is plain vanilla Google search a form of cheating? I think it is. With most of the people online using it, doesn’t everyone cheat? Let’s ask the Harvard ethics professor, a senior executive at a Facebook-type outfit, and the former president of Stanford.

Stephen E Arnold, January 10, 2024

Google and the Company It Keeps: Money Is Money

January 10, 2024

This essay is the work of a dumb dinobaby. No smart software required.

If this recent report from Adalytics is accurate, not even Google understands how and where its Google Search Partners (GSP) program is placing ads for both its advertising clients and itself. A piece at Adotat discusses “The Google Exposé: Peeling Back the Layers of Ad Network Mysteries.” Google promises customers of this highly lucrative program their ads will only appear alongside content they would approve of. However, writer Pesach Lattin charges:

“The program, shrouded in opacity, is alleged to be a haven for brand-unsafe ad inventory, a digital Wild West where ads could unwittingly appear alongside content on pornography sites, right-wing fringe publishers, and even on sites sanctioned by the White House in nations like Iran and Russia.”

How could this happen? Google expands its advertising reach by allowing publishers to integrate custom searches into their sites. If a shady publisher has done so, there’s no way to know short of stumbling across it: unlike Bing, Google does not disclose placement URLs. To make matters worse, Google search advertisers are automatically enrolled in GSP with no clear way to opt out. But surely the company at least protects itself, right? The post continues:

“Surprisingly, even Google’s own search ads weren’t immune to these problematic placements. This startling fact raises serious questions about the awareness and control Google’s ad buyers have over their own system. It appears that even within Google, there’s a lack of clarity about the inner workings of their ad technology. According to TechCrunch, Laura Edelson, an assistant professor of computer science at Northeastern University, known for her work in algorithmic auditing and transparency, echoes this sentiment. She suggests that Google may not fully grasp the complexities of its own ad network, losing sight of how and where its ads are displayed.”

Well, that is not good. Lattin points out that the problem, and the lack of transparency around it, mean Google and its clients may be unwittingly breaking ethical advertising standards and even violating the law. And they might never know or, worse, a problematic placement could spark a PR or legal nightmare. Ah, Google.

Cynthia Murrell, January 10, 2024

British Library: The Math of Can Kicking Security Down the Road

January 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read a couple of blog posts about the security issues at the British Library. I am not currently working on projects in the UK. Therefore, I noted the issue and moved on to more pressing matters. Examples range from writing about the antics of the Google to keeping my eye on the new leader of the highly innovative PR magnet, the NSO Group.

Two well-educated professionals kick a security can down the road. Why bother to pick it up? Thanks, MSFT Copilot Bing thing. I gave up trying to get you to produce a big can and big shoe. Sigh.

I read “British Library to Burn Through Reserves to Recover from Cyber Attack.” The weird orange newspaper usually has semi-reliable, actual factual information. The write up reports or asserts (the FT is a newspaper, after all):

The British Library will drain about 40 per cent of its reserves to recover from a cyber attack that has crippled one of the UK’s critical research bodies and rendered most of its services inaccessible.

I won’t summarize what the bad actors took down. Instead, I want to highlight another passage in the article:

Cyber-intelligence experts said the British Library’s service could remain down for more than a year, while the attack highlighted the risks of a single institution playing such a prominent role in delivering essential services.

A couple of themes emerge from these two quoted passages:

- Whatever cash the library has, spitting distance of half is going to be spent “recovering,” not improving, enhancing, or strengthening. Just “recovering.”

- The attack killed off “most” of the British Library’s services. Not a few. Not one or two. Just “most.”

- Concentration for efficiency leads to failure for downstream services. But concentration makes sense, right? Just ask library patrons.

My view of the situation is familiar if you have read other blog posts about Fancy Dan, modern methods. Let me summarize to brighten your day:

First, cyber security is a function that marketers exploit without addressing security problems. Those purchasing cyber security don’t know much. Therefore, the procurement officials are what a falcon might label “easy prey.” Bad for the chihuahua sometimes.

Second, when security issues are identified, many professionals don’t know how to listen. Therefore, a committee decides. Committees are outstanding bureaucratic tools. Obviously the British Library’s managers and committees may know about manuscripts. Security? Hmmm.

Third, a security failure can consume considerable resources in order to return to the status quo. One can easily imagine a scenario months or years in the future when the cost of recovery is too great. Therefore, the security breach kills the organization. Termination can be rationalized by a committee, probably affiliated with a bureaucratic structure further up the hierarchy.

I think the idea of “kicking the security can” down the road is a widespread characteristic of many organizations. Is the situation improving? No. Marketers move quickly to exploit weaknesses of procurement teams. Bad actors know this. Excitement ahead.

Stephen E Arnold, January 9, 2024

Googley Gems: 2024 Starts with Some Hoots

January 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Another year and I will turn 80. I have seen some interesting things in my 58 year work career, but a couple of black swans have flown across my radar system. I want to share what I find anomalous or possibly harbingers of the new normal.

A dinobaby examines some Alphabet Google YouTube gems. The work is not without its AGonY, however. Thanks, MSFT Copilot Bing thing. Good enough.

First up is another “confession” or “tell all” about the wild, wonderful Alphabet Google YouTube or AGY. (Wow, I caught myself. I almost typed “agony”, not AGY. I am indeed getting old.)

I read “A Former Google Manager Says the Tech Giant Is Rife with Fiefdoms and the Creeping Failure of Senior Leaders Who Weren’t Making Tough Calls.” The headline is a snappy one. I like the phrase “creeping failure.” Nifty image like melting ice and tundra releasing exciting extinct biological bits and everyone’s favorite gas. Let me highlight one point in the article:

[Google has] “lots of little fiefdoms” run by engineers who didn’t pay attention to how their products were delivered to customers. …this territorial culture meant Google sometimes produced duplicate apps that did the same thing or missed important features its competitors had.

I disagree. Plenty of small Web site operators complain about decisions which destroy their businesses. In fact, I am having lunch with one of the founders of a firm deleted by Google’s decider. Also, I wrote about a fellow in India who is likely to suffer the slings and arrows of outraged Googlers because he shoots videos of India’s temples and suggests they have meanings beyond those inculcated in certain castes.

My observation is that happy employees don’t run conferences to explain why Google is a problem or write these weird “let me tell you what life is really like” essays. Something is definitely being signaled. Could it be distress, annoyance, or down-home anger? The “gem”, therefore, is AGY’s management AGonY.

Second, AGY is ramping up its thinking about monetization of its “users.” I noted that “Google Bard Advanced Is Coming, But It Likely Won’t Be Free” reports:

Google Bard Advanced is coming, and it may represent the company’s first attempt to charge for an AI chatbot.

And why not? The Red Alert hooted because Microsoft’s 2022 announcement of its OpenAI tie up made clear that the Google was caught flat footed. Then, as 2022 flowed, the impact of ChatGPT-like applications made three facets of the Google outfit less murky: [a] Google was disorganized because it had Google Brain and DeepMind, which was expensive and confusing in the way Abbott and Costello’s “Who’s on First” routine made people laugh. [b] The malaise of a cooling technology frenzy yielded to AI craziness which translated into some people saying, “Hey, I can use this stuff for answering questions.” Oh, oh, the search advertising model took a bit of a blindside chop block. And [c] Google found itself on the wrong side of assorted legal actions creating a model for other legal entities to explore, probe, and probably use to extract Google’s life blood: Money. Imagine Google using its data to develop effective subscription campaigns. Wow.

And, the final Google gem is that Google wants to behave like a nation state. “Google Wrote a Robot Constitution to Make Sure Its New AI Droids Won’t Kill Us” aims to set the White House and other pretenders to real power straight. Shades of Isaac Asimov’s Three Laws of Robotics. The write up reports:

DeepMind programmed the robots to stop automatically if the force on its joints goes past a certain threshold and included a physical kill switch human operators can use to deactivate them.

You have to embrace the ethos of a company which does not want its “inventions” to kill people. For me, the message is one that some governments’ officials will hear: Need a machine to perform warfighting tasks?

Small gems but gems nonetheless. AGY, please, keep ’em coming.

Stephen E Arnold, January 9, 2024

Remember Ike and the MIC: He Was Right

January 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

It used to be common for departing Pentagon officials and retiring generals to head for weapons makers like Boeing and Lockheed Martin. But the hot new destination is venture capital firms, according to the article, “New Spin on a Revolving Door: Pentagon Officials Turned Venture Capitalists” at DNYUZ. We learn:

“The New York Times has identified at least 50 former Pentagon and national security officials, most of whom left the federal government in the last five years, who are now working in defense-related venture capital or private equity as executives or advisers. In many cases, The Times confirmed that they continued to interact regularly with Pentagon officials or members of Congress to push for policy changes or increases in military spending that could benefit firms they have invested in.”

Yes, pressure from these retirees-turned-venture-capitalists has changed the way agencies direct their budgets. It has also achieved advantageous policy changes: The Defense Innovation Unit now reports directly to the defense secretary. Also, the prohibition against directing small-business grants to firms with more than 50% VC funding has been scrapped.

In one way this trend could be beneficial: instead of lobbying for federal dollars to flow into specific companies, venture capitalists tend to advocate for investment in certain technologies. That way, they hope, multiple firms in which they invest will profit. On the other hand, the nature of venture capitalists means more pressure on Congress and the military to send huge sums their way. Quickly and repeatedly. The article notes:

“But not everyone on Capitol Hill is pleased with the new revolving door, including Senator Elizabeth Warren, Democrat of Massachusetts, who raised concerns about it with the Pentagon this past summer. The growing role of venture capital and private equity firms ‘makes President Eisenhower’s warning about the military-industrial complex seem quaint,’ Ms. Warren said in a statement, after reviewing the list prepared by The Times of former Pentagon officials who have moved into the venture capital world. ‘War profiteering is not new, but the significant expansion risks advancing private financial interests at the expense of national security.’”

Senator Warren may have a point: the article specifies that many military dollars have gone to projects that turned out to be duds. A few have been successful. See the write-up for those details. This moment in geopolitics is an interesting time for this change. Where will it take us?

Cynthia Murrell, January 9, 2024

Cyber Security Software and AI: Man and Machine Hook Up

January 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

My hunch is that 2024 is going to be quite interesting with regards to cyber security. The race among policeware vendors to add “artificial intelligence” to their systems began shortly after Microsoft’s ChatGPT moment. Smart agents, predictive analytics coupled to text sources, real-time alerts from smart image monitoring systems are three application spaces getting AI boosts. The efforts are commendable if over-hyped. One high-profile firm’s online webinar presented jargon and buzzwords but zero evidence of the conviction or closure value of the smart enhancements.

The smart cyber security software system outputs alerts which the system manager cannot escape. Thanks, MSFT Copilot Bing thing. You produced a workable illustration without slapping my request across my face. Good enough too.

Let’s accept as a working premise that everyone from my French bulldog to my neighbor’s ex wife wants smart software to bring back the good old, pre-Covid, go-go days. Also, I stipulate that one should ignore the fact that smart software is a demonstration of how numerical recipes can output “good enough” data. Hallucinations, errors, and close-enough-for-horseshoes are part of the method. What’s the likelihood the door of a commercial aircraft would come off in flight? Answer: Well, most flights don’t lose their doors. Stop worrying. Those are the rules for this essay.

Let’s look at “The I in LLM Stands for Intelligence.” I grant the title may not be the best one I have spotted this month, but here’s the main point of the article in my opinion. Writing about automated threat and security alerts, the essay opines:

When reports are made to look better and to appear to have a point, it takes a longer time for us to research and eventually discard it. Every security report has to have a human spend time to look at it and assess what it means. The better the crap, the longer time and the more energy we have to spend on the report until we close it. A crap report does not help the project at all. It instead takes away developer time and energy from something productive. Partly because security work is consider one of the most important areas so it tends to trump almost everything else.

The idea is that strapping on some smart software can increase the outputs from a security alerting system. Instead of helping the overworked and often reviled cyber security professional, the smart software makes it more difficult to figure out what a bad actor has done. The essay includes this blunt section heading: “Detecting AI Crap.” Enough said.

The idea is that more human expertise is needed. The smart software becomes a problem, not a solution.

I want to shift attention to the managers or the employee who caused a cyber security breach. In what is another zinger of a title, let’s look at this research report, “The Immediate Victims of the Con Would Rather Act As If the Con Never Happened. Instead, They’re Mad at the Outsiders Who Showed Them That They Were Being Fooled.” Okay, this is the ostrich method. Deny stuff by burying one’s head in digital sand like TikToks.

The write up explains:

The immediate victims of the con would rather act as if the con never happened. Instead, they’re mad at the outsiders who showed them that they were being fooled.

Let’s assume the data in this “Victims” write up are accurate, verifiable, and unbiased. (Yeah, I know that is a stretch.)

What do these two articles do to influence my view that cyber security will be an interesting topic in 2024? My answers are:

- Smart software will allegedly detect, alert, and warn of “issues.” The flow of “issues” may overwhelm or numb staff who must decide what’s real and what’s a fakeroo. Burdened staff can make errors, thus increasing security vulnerabilities or missing ones that are significant.

- Managers, like the staffer who lost a mobile phone with company passwords in a plain text note file or an email called “passwords,” will blame whoever blows the whistle. The result is the willful refusal to talk about what happened, why, and the consequences. Examples range from big libraries in the UK to can kicking hospitals in a flyover state like Kentucky.

- Marketers of remediation tools will have a banner year. Marketing collateral becomes a closed deal, making the art history majors writing copy secure in their jobs at a cyber security company.

Will bad actors pay attention to smart software and the behavior of senior managers who want to protect share price or their own job? Yep. Close attention.

Stephen E Arnold, January 8, 2024

Is Philosophy Irrelevant to Smart Software? Think Before Answering, Please

January 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I listened to Lex Fridman’s interview with the founder of Extropic. The company is into smart software and “inventing” a fresh approach to the plumbing required to make AI more like humanoids.

As I listened to the questions and answers, three factoids stuck in my mind:

- Extropic’s desire to just go really fast is a conscious decision shared among those involved with the company; that is, we know who wants to go fast because they work at the firm. (I am not going to argue about the upside and downside of “going fast.” That will be another essay.)

- The downstream implications of the Extropic vision are secondary to the benefits of finding ways to avoid concentration of AI power. I think the idea is that absolute power produces outfits like the Google-type firms which are bedeviling competitors, users, and government authorities. Going fast is not a thrill for processes that require going slow.

- The decisions Extropic’s founder has made are bound up in a world view, personal behaviors for productivity, interesting foods, and learnings accreted over a stellar academic and business career. In short, Extropic embodies a philosophy.

Philosophy, therefore, influences decisions. So we come to my topic in this essay. I noted two different write ups about how informed people take decisions. I am not going to refer to philosophers popular in introductory college philosophy classes. I am going to ignore the uneven treatment of philosophers in Will and Ariel Durant’s Story of Philosophy. Nah. I am going with state of the art modern analysis.

The first online article I read is a survey (knowledge product) of the estimable IBM / Watson outfit or a contractor. The relatively current document is “CEO Decision Making in the Age of AI.” The main point of the document in my opinion is summed up in this statement from a computer hardware and services company:

Any decision that makes its way to the CEO is one that involves high degrees of uncertainty, nuance, or outsize impact. If it was simple, someone else— or something else—would do it. As the world grows more complex, so does the nature of the decisions landing on a CEO’s desk.

But how can a CEO decide? The answer is, “Rely on IBM.” I am not going to recount the evolution (perhaps devolution) of IBM or the uncomfortable stories about shedding old employees (the term dinobaby originated at IBM according to one former I’ve Been Moved veteran). I will not explain IBM’s decisions about chip fabrication, its interesting hiring policies of individuals who might have retained some fondness for the land of their fathers and mothers, or the fancy dancing required to keep mainframes as a big money pump. Nope.

The point is that IBM is positioning itself as a thought leader, a philosopher of smart software, technology, and management. I find this interesting because IBM, like some Google type companies, is a case example of management shortcomings. These same shortcomings are swathed in weird jargon and buzzwords which are bent to one end: Generating revenue.

Let me highlight one comment from the 27 page document and urge you to read it when you have a few moments free. Here’s the one passage I will use as a touchstone for “decision making”:

The majority of CEOs believe the most advanced generative AI wins.

Oh, really? Is smart software sufficiently mature? That’s news to me. My instinct is that it is new information to many CEOs as well.

The second essay about decision making is from an outfit named Ness Labs. That essay is “The Science of Decision-Making: Why Smart People Do Dumb Things.” The structure of this essay is more along the lines of a consulting firm’s white paper. The approach contrasts with IBM’s free-floating global survey document.

The obvious implication is that if smart people are making dumb decisions, smart software can solve the problem. Extropic would probably agree and, were the IBM survey data accurate, “most CEOs” buy into a ride on the AI bandwagon.

The Ness Labs’ document includes this statement which in my view captures the intent of the essay. (I suggest you read the essay and judge for yourself.)

So, to make decisions, you need to be able to leverage information to adjust your actions. But there’s another important source of data your brain uses in decision-making: your emotions.

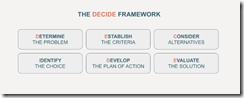

Ah, ha, logic collides with emotions. But to fix the “problem” Ness Labs provides a diagram created in 2008 (a bit before the January 2022 Microsoft OpenAI marketing fireworks:

Note that “decide” is a mnemonic device intended to help me remember each of the items. I learned this technique in the fourth grade when I had to memorize the names of the Great Lakes. No one has ever asked me to name the Great Lakes by the way.

Okay, what we have learned is that IBM has survey data backing up the idea that smart software is the future. Those data, if on the money, validate the go-go approach of Extropic. Plus, Ness Labs provides a “decider model” which can be used to create better decisions.

I concluded that philosophy is less important than fostering a general message that says, “Smart software will fix up dumb decisions.” I may be over simplifying, but the implicit assumptions about the importance of artificial intelligence, the reliability of the software, and the allegedly universal desire by big time corporate management are not worth worrying about.

Why is the cartoon philosopher worrying? I think most of this stuff is a poorly made road on which those jockeying for power and money want to drive their most recent knowledge vehicles. My tip? Look before crossing that information superhighway. Speeding myths can be harmful.

Stephen E Arnold, January 8, 2024

Pegasus Equipped with Wings Stomps Around and Leaves Hoof Prints

January 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The NSO Group’s infamous Pegasus spyware is in the news again, this time in India. Newsclick reveals, “New Forensic Report Finds ‘Damning Revelations’ of ‘Repeated’ Pegasus Use to Target Indian Scribes.” The report is a joint project by Amnesty International and The Washington Post. It was spurred by two indicators. First, a routine monitoring exercise in June 2023 turned up traces of Pegasus on certain iPhones. Then, in October, several journalists and Opposition party politicians received Apple alerts warning of “State-sponsored attackers.” The article tells us:

“‘As a result, Amnesty International’s Security Lab undertook a forensic analysis on the phones of individuals around the world who received these notifications, including Siddharth Varadarajan and Anand Mangnale. It found traces of Pegasus spyware activity on devices owned by both Indian journalists. The Security Lab recovered evidence from Anand Mangnale’s device of a zero-click exploit which was sent to his phone over iMessage on 23 August 2023, and designed to covertly install the Pegasus spyware. … According to the report, the ‘attempted targeting of Anand Mangnale’s phone happened at a time when he was working on a story about an alleged stock manipulation by a large multinational conglomerate in India.’”

This was not a first for The Wire co-founder Siddharth Varadarajan. His phone was also infected with Pegasus back in 2018, according to forensic analysis ordered by the Supreme Court of India. The latest findings have Amnesty International urging bans on invasive, opaque spyware worldwide. Naturally, The NSO Group continues to insist all its clients are “vetted law enforcement and intelligence agencies that license our technologies for the sole purpose of fighting terror and major crime” and that it has policies in place to prevent “targeting journalists, lawyers and human rights defenders or political dissidents that are not involved in terror or serious crimes.” Sure.

Meanwhile, some leaders of India’s ruling party blame Apple for those security alerts, alleging the “company’s internal threat algorithms were faulty.” Interesting deflection. We’re told an Apple security rep was called in and directed to craft some other, less alarming explanation for the warnings. Is this because the government itself is behind the spyware? Unclear; Parliament refuses to look into the matter, claiming it is sub judice. How convenient.

Cynthia Murrell, January 8, 2024

AI Ethics: Is That What Might Be Called an Oxymoron?

January 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

MSN.com presented me with this story: “OpenAI and Microsoft on Trial — Is the Clash with the NYT a Turning Point for AI Ethics?” I can answer this question, but that would spoil the entertainment value of my juxtaposition of this write up with the quasi-scholarly list of business start up resources. Why spoil the fun?

Socrates is lecturing at a Fancy Dan business school. The future MBAs are busy scrolling TikTok, pitching ideas to venture firms, and scrolling JustBang.com. Viewing this sketch, it appears that ethics and deep thought are not as captivating as mobile devices and having fun. Thanks, MSFT Copilot. Two tries and a good enough image.

The article asks a question which I find wildly amusing. The “on trial” write up states in 21st century rhetoric:

The lawsuit prompts critical questions about the ownership of AI-generated content, especially when it comes to potential inaccuracies or misleading information. The responsibility for losses or injuries resulting from AI-generated content becomes a gray area that demands clarification. Also, the commercial use of sourced materials for AI training raises concerns about the value of copyright, especially if an AI were to produce content with significant commercial impact, such as an NYT bestseller.

For more than two decades online outfits have been sucking up information which is usually slapped with the bright red label “open source information.”

The “on trial” essay says:

The future of AI and its coexistence with traditional media hinges on the resolution of this legal battle.

But what about ethics? The “on trial” write up dodges the ethics issue. I turned to a go-to resource about ethics. No, I did not look at the papers of the Harvard ethics professor who allegedly made up data for ethics research. Ho ho ho. Nope. I went to the Enchanting Trader and its list of 4000+ Essential Business Startup Database of information.

I displayed the full list of resources and ran a search for the word “ethics.” There was one hit to “Will Joe Rogan Ever IPO?” Amazing.

What I concluded is that “ethics” is not number one with a bullet among the resources of the 4000+ essential business start up items. It strikes me that a single trial about smart software is unlikely to resolve “ethics” for AI. If it does, will the resolution have the legs that Socrates’ musings have had? More than likely, most people will ask, “Who is Socrates?” or “What the heck are ethics?”

Stephen E Arnold, January 5, 2024