Open Source: Free, Easy, and Fast Sort Of

February 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Not long ago, I spoke with an open source cheerleader. The pros outweighed the cons from this technologist’s point of view. (I would like to ID the individual, but I try to avoid having legal eagles claw their way into my modest nest in rural Kentucky. Just plug in “John Wizard Doe”, a high profile entrepreneur and graduate of a big time engineering school.)

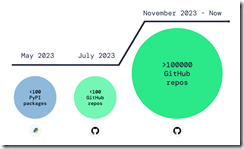

I think going up suggests a problem.

Here are highlights of my notes about the upside of open source:

- Many smart people eyeball the code and problems are spotted and fixed

- Fixes get made and deployed more rapidly than in commercial software, which often works on a longer “fix” cycle

- Dead end software can be given new kidneys or maybe a heart with a fork

- For most use cases, the software is free or cheaper than commercial products

- New functions become available; some of which fuel new product opportunities.

There may be a few others, but let’s look at a downside few open source cheerleaders want to talk about. I don’t want to counter the widely held belief that “many smart people eyeball the code.” The method is grab and go. The speed angle is relative. Reviving open source again and again is quite useful; bad actors do this. Most people just recycle. The “free” angle is a big deal. Everyone likes “free” because why not? New functions become available so new markets are created. Perhaps. But in the cyber crime space, innovation boils down to finding a mistake that can be exploited with good enough open source components, often with some mileage on their chassis.

But on one point open source champions crank back the rah rah output: “Over 100,000 Infected Repos Found on GitHub.” I want to point out that Microsoft, the all-time champion in security, owns GitHub. If you think about Microsoft and security too much, you may come away confused. I know I do. I also get a headache.

This “Infected Repos” article from Apiiro asserts:

Our security research and data science teams detected a resurgence of a malicious repo confusion campaign that began mid-last year, this time on a much larger scale. The attack impacts more than 100,000 GitHub repositories (and presumably millions) when unsuspecting developers use repositories that resemble known and trusted ones but are, in fact, infected with malicious code.

The write up provides excellent information about how the bad repos create problems and provides a recipe for doing this type of malware distribution yourself. (As you know, I am not too keen on having certain information with helpful detail easily available, but I am a dinobaby, and dinobabies have crazy ideas.)
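On the defensive side, the “repositories that resemble known and trusted ones” idea can be illustrated with a few lines of Python. This is a minimal sketch, not the method the researchers used: the trusted list, the repo names, and the 0.85 threshold are all made up for illustration, and string similarity is only one of many possible signals.

```python
from difflib import SequenceMatcher

# Hypothetical allow-list of repos a team already trusts (illustrative names only).
TRUSTED = ["acme/payments-sdk", "acme/log-utils"]


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two repo names, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def flag_lookalikes(candidate: str, trusted=TRUSTED, threshold: float = 0.85):
    """Return the trusted names that the candidate closely resembles without matching exactly."""
    hits = []
    for name in trusted:
        if candidate.lower() == name.lower():
            return []  # exact match: this is the trusted repo itself
        if similarity(candidate, name) >= threshold:
            hits.append(name)
    return hits


# A one-character swap in the owner name ("rn" standing in for "m") gets flagged.
print(flag_lookalikes("acrne/payments-sdk"))
```

A check like this only catches near-miss names. A clone published under the same repo name by a completely different owner would need a separate rule, such as comparing only the part after the slash.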

If we confine our thinking to the open source champion’s five benefits, I think security issues may be more important in some use cases. The better question is, “Why don’t open source supporters like Microsoft and the person with whom I spoke want to talk about open source security?” My view is that:

- Security is an after thought or a never thought facet of open source software

- Making money is Job #1, so free trumps spending money to make sure the open source software is secure

- Open source appeals to some venture capitalists. Why? RedHat, Elastic, and a handful of other “open source plays”.

Net net: Just visualize a future in which smart software ingests poisoned code, and programmers rely on that smart software to make them 10X engineers. Does that create a bit of a problem? Of course not. Microsoft is the security champ, and GitHub is Microsoft.

Stephen E Arnold, February 29, 2024

Surprise! Smart Software and Medical Outputs May Kill You

February 29, 2024

This essay is the work of a dumb humanoid. No smart software required.

Have you been inhaling AI hype today? Exhale slowly, then read “Generating Medical Errors: GenAI and Erroneous Medical References,” produced by the esteemed university with a reputation for shaking the AI cucarachas and singing loudly “Ai, Ai, Yi.” The write up is an output of the non-plagiarizing professionals in the Human Centered Artificial Intelligence unit.

The researchers’ report states:

…Large language models used widely for medical assessments cannot back up claims.

Here’s what the HAI blog post states:

we develop an approach to verify how well LLMs are able to cite medical references and whether these references actually support the claims generated by the models. The short answer: poorly. For the most advanced model (GPT-4 with retrieval augmented generation), 30% of individual statements are unsupported and nearly half of its responses are not fully supported.

Okay, poorly. The disconnect is that the output sounds good, but the information is distorted, off base, or possibly inappropriate.
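The two numbers in the quote measure different things: one counts individual statements, the other counts whole responses. A toy sketch shows why the response-level figure looks worse. It assumes that a response is “fully supported” only when every statement in it is backed by its cited source. The data below are invented, and this is not the HAI team’s evaluation code.

```python
def support_rates(responses):
    """Compute (statement-level, response-level) support rates.

    responses: list of lists of booleans, one inner list per response,
    where True means the statement is backed by its cited source.
    """
    statements = [s for r in responses for s in r]
    statement_rate = sum(statements) / len(statements)
    # A response counts as fully supported only if all its statements are supported.
    response_rate = sum(all(r) for r in responses) / len(responses)
    return statement_rate, response_rate


# Invented example: 4 responses, 10 statements, 2 of them unsupported.
toy = [[True, True, True], [True, False, True], [True, True], [False, True]]
print(support_rates(toy))
```

In the toy data, 80 percent of statements are supported, yet only half of the responses are fully supported, because one bad statement spoils the whole response.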

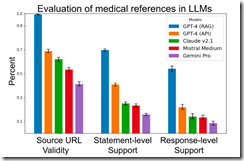

What I found interesting is a stack ranking of widely used AI “systems.” Here’s the chart from the HAI article:

The least “poor” are the Sam AI-Man systems. In the middle is the Anthropic outfit. Bringing up the rear is the French “small” LLM Mistral system. And guess which system is dead last in this Stanford report?

Give up?

The Google. And not just the Google. The laggard is the Gemini system which was Bard, a smart software which rolled out after the Softies caught the Google by surprise about 14 months ago. Last in URL validity, last in statement level support, and last in response level support.

The good news is that most research studies are non reproducible or, like the former president of Stanford’s work, fabricated. As a result, I think these assertions will be easy for an art history major working in Google’s PR confection machine to bat away like annoying flies in Canberra, Australia.

But last from researchers at the estimable institution where Google, Snorkel and other wonderful services were invented? That’s a surprise like the medical information which might have unexpected consequences for Aunt Mille or Uncle Fred.

Stephen E Arnold, February 29, 2024

A Fresh Outcry Against Deepfakes

February 29, 2024

This essay is the work of a dumb humanoid. No smart software required.

There is no surer way in 2024 to incur public wrath than to wrong Taylor Swift. The Daily Mail reports, “’Deepfakes Are a Huge Threat to Society’: More than 400 Experts and Celebrities Sign Open Letter Demanding Tougher Laws Against AI-Generated Videos—Weeks After Taylor Swift Became a Victim.” Will the super duper mega star’s clout spur overdue action? Deepfake porn has been an under-acknowledged problem for years. Naturally, the generative AI boom has made it much worse: Between 2022 and 2023, we learn, such content has increased by more than 400 percent. Of course, the vast majority of victims are women and girls. Celebrities are especially popular targets. Reporter Nikki Main writes:

“‘Deepfakes are a huge threat to human society and are already causing growing harm to individuals, communities, and the functioning of democracy,’ said Andrew Critch, AI Researcher at UC Berkeley in the Department of Electrical Engineering and Computer Science and lead author on the letter. … The letter, titled ‘Disrupting the Deepfake Supply Chain,’ calls for a blanket ban on deepfake technology, and demands that lawmakers fully criminalize deepfake child pornography and establish criminal penalties for anyone who knowingly creates or shares such content. Signees also demanded that software developers and distributors be held accountable for anyone using their audio and visual products to create deepfakes.”

Penalties alone will not curb the problem. Critch and company make this suggestion:

“The letter encouraged media companies, software developers, and device manufacturers to work together to create authentication methods like adding tamper-proof digital seals and cryptographic signature techniques to verify the content is real.”
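For readers wondering what a “digital seal” amounts to in practice, here is a minimal sketch using only Python’s standard library. It is a stand-in: it uses a keyed hash (HMAC) with a shared secret, whereas a real content-authentication scheme would more likely use public-key signatures so that anyone can verify without holding the secret. The key and content values are placeholders.

```python
import hashlib
import hmac


def seal(content: bytes, key: bytes) -> str:
    """Return a hex tag that depends on both the content and the secret key."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify(content: bytes, key: bytes, tag: str) -> bool:
    """Check that the content still matches the tag issued for it."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(seal(content, key), tag)


key = b"placeholder-secret"          # illustrative only, not a real key
original = b"frame data from camera"  # illustrative stand-in for media bytes
tag = seal(original, key)

print(verify(original, key, tag))                # unmodified content
print(verify(original + b" edited", key, tag))   # altered content
```

The point of the sketch is that any change to the content, however small, produces a tag mismatch. Who holds the keys and who checks the tags is the hard part, and that is a policy question more than a coding one.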

Sadly, media and tech companies are unlikely to invest in such measures unless adequate penalties are put in place. Will legislators finally act? Answer: They are having meetings. That’s an act.

Cynthia Murrell, February 29, 2024

The Google: A Bit of a Wobble

February 28, 2024

This essay is the work of a dumb humanoid. No smart software required.

Check out this snap from Techmeme on February 28, 2024. The folks commenting about Google Gemini’s very interesting picture generation system are confused. Some think that Gemini makes clear that the Google has lost its way. Others just find the recent image gaffes as one more indication that the company is too big to manage and the present senior management is too busy amping up the advertising pushed in front of “users.”

I wanted to take a look at what Analytics India Magazine had to say. Its article is “Aal Izz Well, Google.” The write up (from a nation state with some nifty drone technology and so-so relationships with its neighbors) offers this statement:

In recent weeks, the situation has intensified to the extent that there are calls for the resignation of Google chief Sundar Pichai. Helios Capital founder Samir Arora has suggested a likelihood of Pichai facing termination or choosing to resign soon, in the aftermath of the Gemini debacle.

The write up offers:

Google chief Sundar Pichai, too, graciously accepted the mistake. “I know that some of its responses have offended our users and shown bias – to be clear, that’s completely unacceptable and we got it wrong,” Pichai said in a memo.

The author of the Analytics India article is Siddharth Jindal. I wonder if he will talk about Sundar’s and Prabhakar’s most recent comedy sketch. The roll out of Bard in Paris was a hoot, and it too had gaffes. That was a year ago. Now it is a year later and what’s Google accomplished:

Analytics India emphasizes that “Google is not alone.” My team and I know that smart software is the next big thing. But Analytics India is particularly forgiving.

The estimable New York Post takes a slightly different approach. “Google Parent Loses $70B in Market Value after Woke AI Chatbot Disaster” reports:

Google’s parent company lost more than $70 billion in market value in a single trading day after its “woke” chatbot’s bizarre image debacle stoked renewed fears among investors about its heavily promoted AI tool. Shares of Alphabet sank 4.4% to close at $138.75 in the week’s first day of trading on Monday. The Google parent’s stock moved slightly higher in premarket trading on Tuesday [February 28, 2024, 9:41 am US Eastern time].

As I write this, I turned to Google’s nemesis, the Softies in Redmond, Washington. I asked for a dinosaur looking at a meteorite crater. Here’s what Copilot provided:

Several observations:

- This is a spectacular event. Sundar and Prabhakar will have a smooth explanation I believe. Smooth may be their core competency.

- The fact that a Code Red has become a Code Dead makes clear that communications at Google require a tune up. But if no one is in charge, blowing $70 billion will catch the attention of some folks with sharp teeth and a mean spirit.

- The adolescent attitudes of a high school science club appear to inform the management methods at Google. A big time investigative journalist told me that Google did not operate like a high school science club planning a bus trip to the state science fair. I stick by my HSSCMM or high school science club management method. I won’t repeat her phrase because it is similar to Google’s quantumly supreme smart software: Wildly off base.

Net net: I love this rationalization of management, governance, and technical failure. Everyone in the science club gets a failing grade. Hey, wizards and wizardettes, why not just stick to selling advertising?

Stephen E Arnold, February 28, 2024

Second Winds: Maybe There Are Other Factors Like AI?

February 28, 2024

This essay is the work of a dumb humanoid. No smart software required.

I read “Second Winds,” which is an essay about YouTube. The main idea is that YouTube “content creators” are quitting, hanging up their Sony and Canon cameras, parking their converted vans, and finding an apartment, a job, or their parents’ basement. The reasons are, as the essay points out, not too hard to understand:

- Creating “content” for a long time and having a desire to do something different like not spending hours creating videos, explaining to mom what their “job” is, or living in a weird world without the support staff, steady income, and recognition that other work sometimes provides.

- Burnout because doing video is hard, tedious, and a general contributor to one’s developing a potato-like body type

- Running out of ideas (this is the hook to the Czech playwright unknown to most high school students in the US today I surmise).

I think there is another reason. I have minimal evidence; specifically, the videos of Thomas Gast, a person who ended up in the French Foreign Legion and turned to YouTube. His early videos were explanations about what the French Foreign Legion was, how to enlist, and how to learn useful skills in an austere, male-oriented military outfit. Then he made shooting videos with some of his pals. These morphed into “roughing it” videos in Scandinavia. The current videos include the survival angle and assorted military-themed topics as M.O.S. or Military-Outdoor-Survival. Some of the videos are in German (Gast’s native language); others are in English. It is clear that he knows his subject. However, he is not producing what I would call consistent content. The format is Mr. Gast talking. He sells merchandise. He hints that he does some odd jobs. He writes books. But the videos are beginning to repeat. For lovers of things associated with brave and motivated people, his work is interesting.

For me, he seems to be getting tired. He changes the name under which his videos appear. He is looking for an anchor in the YouTube rapids.

He is a pre-quitter. Let me hypothesize why:

- Making videos takes indoor time. For a person who likes being “outdoors,” the thrill of making videos recedes over time.

- YouTube changes the rules, usually without warning. As a result, Mr. Gast avoids certain “obvious” subjects directly germane to a military professional’s core interests.

- YouTube money is tricky to stabilize. A very few superstars emerge. Most “creators” cannot balance YouTube with their bank account.

Can YouTube change this? No. Why should it? Google needs revenue. Videos which draw eyeballs make Google money. So far the method works. Googlers just need to jam more ads into popular videos and do little to boost niche “creators.” How many people care about the French Foreign Legion? How many care about Mr. Beast? The delta between Mr. Gast and Mr. Beast illustrates Google’s approach. Get lots of clicks; get Google bucks.

Is there a risk to YouTube in the quitting, which seems to be coalescing into a trend? Yep, my research team and I have identified several factors. Let’s look at several (not our complete list) quickly:

- Alternative channels with fewer YouTube-type hidden rules. One can push out videos via end to end encrypted messaging platforms like Telegram. Believe us, the use of E2EE is a definite thing, and it is appealing to millions.

- The China-linked TikTok and its US “me too” services like Meta’s allow quick-and-dirty (often literally) videos. Experimentation is easy and lighter weight than YouTube’s method. Mr. Gast should do 30 second videos about weapons or specific French Foreign Legion tasks like avoiding an attack dog hunting one in a forest.

- New technology is attracting the attention of “creators” and may offer an alternative to the DIY demands of making videos the old-fashioned way. Once “creators” figure out AI, there may be a video Renaissance, but it may shift the center of gravity from Google’s YouTube to a different service. Maybe Telegram will emerge as the winner? Maybe Google or Meta will be the winner? Some type of change is guaranteed.

The “second winds” angle is okay. There may be more afoot.

Stephen E Arnold, February 28, 2024

ChatGPT: No Problem Letting This System Make Decisions, Right?

February 28, 2024

This essay is the work of a dumb humanoid. No smart software required.

Even an AI can have a very bad day at work, apparently. ChatGPT recently went off the rails, as Gary Marcus explains in his Substack post, “ChatGPT Has Gone Berserk.” Marcus compiled snippets of AI-generated hogwash after the algorithm lost its virtual mind. Here is a small snippet sampled by data scientist Hamilton Ulmer:

“It’s the frame and the fun. Your text, your token, to the took, to the turn. The thing, it’s a theme, it’s a thread, it’s a thorp. The nek, the nay, the nesh, and the north. A mWhere you’re to, where you’re turn, in the tap, in the troth. The front and the ford, the foin and the lThe article, and the aspect, in the earn, in the enow. …”

This nonsense goes on and on. It is almost poetic, in an absurdist sort of way. But it is not helpful when one is just trying to generate a regex, as Ulmer was. Curious readers can see the post for more examples. Marcus observes:

“In the end, Generative AI is a kind of alchemy. People collect the biggest pile of data they can, and (apparently, if rumors are to be believed) tinker with the kinds of hidden prompts that I discussed a few days ago, hoping that everything will work out right. The reality, though is that these systems have never been stable. Nobody has ever been able to engineer safety guarantees around then. … The need for altogether different technologies that are less opaque, more interpretable, more maintainable, and more debuggable — and hence more tractable—remains paramount. Today’s issue may well be fixed quickly, but I hope it will be seen as the wakeup call that it is.”

Well, one can hope. For artificial intelligence is being given more and more real responsibilities. What happens when smart software runs a hospital and causes some difficult situations? Or a smart aircraft control panel dives into a backyard swimming pool? The hype around generative AI has produced a lot of leaping without looking. Results could be dangerous, if not downright catastrophic.

Cynthia Murrell, February 28, 2024

Google Gems for the Week of 19 February, 2024

February 27, 2024

This essay is the work of a dumb humanoid. No smart software required.

This week’s edition of Google Gems focuses on a Hope Diamond and a handful of lesser stones. Let’s go.

THE HOPE DIAMOND

In the chaos of the AI Gold Rush, horses fall and wizard engineers realize that they left their common sense in the saloon. Here’s the Hope Diamond from the Google.

The world’s largest online advertising agency created smart software with a lot of math, dump trucks filled with data, and wizards who did not recall that certain historical figures in the US were not of color. “Google Says Its AI Image-Generator Would Sometimes Overcompensate for Diversity,” an Associated Press story, explains in very gentle rhetoric that its super sophisticated brain and DeepMind would get the race of historical figures wrong. I think this means that Ben Franklin could look like a Zulu prince or George Washington might have some resemblance to Rama (blue skin, bow, arrow, and snappy hat).

My favorite search and retrieval expert Prabhakar Raghavan (famous for his brilliant lecture in Paris about the now renamed Bard) indicated that Google’s image rendering system did not hit the bull’s eye. No, Dr. Raghavan, the digital arrow pierced the micrometer thin plastic wrap of Google’s super sophisticated, quantum supremacy, gee-whiz technology.

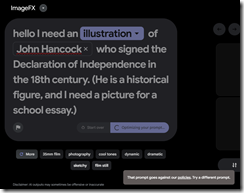

The message I received from Google when I asked for an illustration of John Hancock, an American historical figure. Too bad because this request goes against Google’s policies. Yep, wizards infused with the high school science club management method.

More important, however, was how Google’s massive stumble complemented OpenAI’s ChatGPT wonkiness. I want to award the Hope Diamond Award for AI Ineptitude to both Google and OpenAI. But, alas, there is just one Hope Diamond. The award goes to the quantumly supreme outfit Google.

[Note: I did not quote from the AP story. Why? Years ago the outfit threatened to sue people who use their stories’ words. Okay, no problemo, even though the newspaper for which I once worked supported this outfit in the days of “real” news, not recycled blog posts. I listen, but I do not forget some things. I wonder if the AP knows that Google Chrome can finish a “real” journalist’s sentences for he/him/she/her/it/them. Read about this “feature” at this link.]

Here are my reasons:

- Google is in catch-up mode and like those in the old Gold Rush, some fall from their horses and get up close and personal with hooves. How do those affect the body of a wizard? I have never fallen from a horse, but I saw a fellow get trampled when I lived in Campinas, Brazil. I recall there was a lot of screaming and blood. Messy.

- Google’s arrogance and intellectual sophistication cannot prevent incredible gaffes. A company with a mixed record of managing diversity, equity, etc. has demonstrated why Xooglers like Dr. Timnit Gebru find the company “interesting.” I don’t think Google is interesting. I think it is disappointing, particularly in the racial sensitivity department.

- For years I have explained that Google operates via the high school science club management method. What’s cute when one is 14 loses its charm when those using the method have been at it for a quarter century. It’s time to put on the big boy pants.

OTHER LITTLE GEMMAS

The previous week revealed a dirt trail with some sharp stones and thorny bushes. Here’s a quick selection of the sharpest and thorniest:

- The Google is running webinars to inform publishers about life after their wonderful long-lived cookies. Read more at Fipp.com.

- Google has released a small model as open source. What about the big model with the diversity quirk? Well, no. Read more at the weird green Verge thing.

- Google cares about AI safety. Yeah, believe it or not. Read more about this PR move on Techcrunch.

- Web search competitors will fail. This is a little stone. Yep, a kidney stone for those who don’t recall Neeva. Read more at Techpolicy.

- Did Google really pay $60 million to get that outstanding Reddit content? Wow. Maybe Google looks at different sub reddits than my research team does. Read more about it in 9 to 5 Google.

- What happens when an uninformed person uses the Google Cloud? Answer: Sticker shock. More about this estimable method in The Register.

- Some spoil sport finds traffic lights informed with Google’s smart software annoying. That’s hard to believe. Read more at this link.

- Google pointed out in a court filing that DuckDuckGo was a meta search system (that is, a search interface to other firms’ indexes) and Neeva was a loser crafted by Xooglers. Read more at this link.

No Google Hope Diamond report would be complete without pointing out that the online advertising giant will roll out its smart software to companies. Read more at this link. Let’s hope the wizards figure out that historical figures often have quite specific racial characteristics like Rama.

I wanted to include an image of Google’s rendering of a signer of the Declaration of Independence. What you see in the illustration above is what I got. Wow. I have more “gemmas”, but I just don’t want to present them.

Stephen E Arnold, February 27, 2024

10X: The Magic Factor

February 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The 10X engineer. The 10X payout. The 10X advertising impact. The 10X factor can apply to money, people, and processes. Flip to the inverse and one can use smart software to replace the engineers who are not 10X or, more optimistically, lift those expensive digital humanoids to a higher level. It is magical: Win either way, provided you are a top dog, a one percenter. Applied to money, 10X means winner. End up with $0.10, and the hapless investor is a loser. For processes, figure out a 10X trick, and you are a winner, although one who is misunderstood. Money matters more than machine efficiency to some people.

In pursuit of a 10X payoff, will the people end up under water? Thanks, ImageFX. Good enough.

These are my 10X thoughts after I read “Groq, Gemini, and 10X Improvements.” The essay focuses on things technical. I am going to skip over what the author offers as a well-reasoned, dispassionate commentary on 10X. I want to zip to one passage which I think is quite fascinating. Here it is:

We don’t know when increasing parameters or datasets will plateau. We don’t know when we’ll discover the next breakthrough architecture akin to Transformers. And we don’t know how good GPUs, or LPUs, or whatever else we’re going to have, will become. Yet, when you consider that Moore’s Law held for decades… suddenly Sam Altman’s goal of raising seven trillion dollars to build AI chips seems a little less crazy.

The way I read this is that unknowns exist with AI, money, and processes. For me, the unknowns are somewhat formidable. For many, charging into the unknown does not cause sleepless nights. Talk about raising trillions of dollars, which is a large pile of silver dollars.

One must take the $7 trillion and Sam AI-Man seriously. In June 2023, Sam AI-Man met the boss of Softbank. Today (February 22, 2024) rumors about a deal related to raising the trillions required for OpenAI to build chips and fulfill its promise have reached my research team. If true, will there be a 10X payoff, which noses into spitting distance of 15 zeros? If that goes inverse, that’s going to create a bad day for someone.
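The zeros are easy to lose track of, so here is the back-of-the-envelope arithmetic as a small Python sketch. It assumes the 10X factor is applied to the full rumored $7 trillion figure, which is my simplification and not something any party has stated.

```python
def zeros_after_leading_digit(amount: int) -> int:
    """Count the digits that follow the first digit of a whole-dollar amount."""
    return len(str(amount)) - 1


raise_usd = 7 * 10**12       # the rumored $7 trillion
payoff_10x = raise_usd * 10  # a 10X payoff on the full amount (assumption)
inverse = raise_usd // 10    # the "goes inverse" case: one tenth left

print(f"{payoff_10x:,}", zeros_after_leading_digit(payoff_10x))
print(f"{inverse:,}", zeros_after_leading_digit(inverse))
```

On these assumptions the 10X payoff is $70 trillion, a 7 followed by 13 zeros, which is close to but short of 15 zeros. The inverse case leaves $700 billion, meaning $6.3 trillion has gone somewhere else.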

Stephen E Arnold, February 27, 2024

Local Dead Tree Tabloids: Endangered Like Some Firs

February 27, 2024

This essay is the work of a dumb humanoid. No smart software required.

A relic of a bygone era, the local printed newspaper is dead. Or is it? A pair of recent articles suggest opposite conclusions. The Columbia Journalism Review tells us, “They Gave Local News Away for Free. Virtually Nobody Wanted It.” The post cites a 2021 study from the University of Pennsylvania that sought ways to boost interest in local news. But when over 2,500 locals were offered free Pittsburgh Post-Gazette or Philadelphia Inquirer subscriptions, fewer than two percent accepted. Political science professor Dan Hopkins, who conducted the study with coauthor Tori Gorton, was dismayed. Reporter Kevin Lind writes:

“Hopkins conceived the study after writing a book in 2018 on the nationalization of American politics. In The Increasingly United States he argues that declining interest and access to local news forces voters, who are not otherwise familiar with the specifics of their local governments’ agendas or legislators, to default to national partisan lines when casting regional ballots. As a result, politicians are not held accountable, voters are not aware of the issues, and the candidates who get elected reflect national ideologies rather than representing local needs.”

Indeed. But is it too soon to throw in the towel? Poynter offers some hope in its article, “One Utah Paper Is Making Money with a Novel Idea: Print.” The Deseret News’ new digest is free but makes a tidy profit from ads. Not only that, the move seems to have piqued interest in actual paid subscriptions. Imagine that! Writer Angela Fu describes one young reader who has discovered the allure of the printed page:

“Fifteen-year-old Adam Kunz said he discovered the benefits of physical papers when he came across a free sample from the Deseret News in the mail in November. Until then, he got most of his news through online aggregators like Google News. Newspapers were associated with ‘boring, old people stuff,’ and Kunz hadn’t realized that the Deseret News was still printing physical copies of its paper. He was surprised by how much he liked having a tangible paper in which stories were neatly packed. Kunz told his mother he wanted a Deseret News subscription for Christmas and that if she wouldn’t pay for it, he would buy it himself. Now, he starts and ends his days with the paper, reading a few stories at a time so that he can make the papers — which come twice a week — last.”

Anecdotal though it is, that story is encouraging. Does the future lie with young print enthusiasts like Kunz or with subscription scoffers like the UPenn subjects? Some of each, one suspects.

Cynthia Murrell, February 27, 2024

Qualcomm: Its AI Models Pour Gasoline on a Raging Fire

February 26, 2024

This essay is the work of a dumb humanoid. No smart software required.

Qualcomm’s announcements at the Mobile World Congress pour gasoline on the raging AI fire. The chip maker aims to enable smart software on mobile devices, new gear, gym shoes, and more. Venture Beat’s “Qualcomm Unveils AI and Connectivity Chips at Mobile World Congress” does a good job of explaining the big picture. The online publication reports:

Generative AI functions in upcoming smartphones, Windows PCs, cars, and wearables will also be on display with practical applications. Generative AI is expected to have a broad impact across industries, with estimates that it could add the equivalent of $2.6 trillion to $4.4 trillion in economic benefits annually.

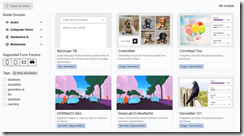

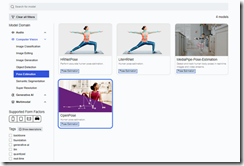

Qualcomm, primarily associated with chips, has pushed into what it calls “AI models.” The listing of the models appears on the Qualcomm AI Hub Web page. You can find this page at https://aihub.qualcomm.com/models. To view the available models, click on one of the four model domains, shown below:

Each domain will expand and present the name of the model. Note that the domain with the most models is computer vision. The company offers 60 models. These are grouped by function; for example, image classification, image editing, image generation, object detection, pose estimation, semantic segmentation (tagging objects), and super resolution.

The image below shows a model which analyzes data and then predicts related values. In this case, the positions of the subject’s body are presented. The predictive functions of a company like Recorded Future suddenly appear to be behind the curve in my opinion.

There are two models for generative AI. These are image generation and text generation. Models are available for audio functions and for multimodal operations.

Qualcomm includes brief descriptions of each model. These descriptions include some repetitive phrases like “state of the art”, “transformer,” and “real time.”

Looking at the examples and following the links to supplemental information suggests at first glance:

- Qualcomm will become a company of interest to investors

- Competitive outfits have their marching orders to develop comparable or better functions

- Supply chain vendors may experience additional interest and uplift from investors.

Can Qualcomm deliver? Let me answer the question this way. Whether the company experiences an nVidia moment or not, other companies have to respond, innovate, cut costs, and become more forward leaning in this chip sector.

I am in my underground computer lab in rural Kentucky, and I can feel the heat from Qualcomm’s AI announcement. Those at the conference not working for Qualcomm may have had their eyebrows scorched.

Stephen E Arnold, February 26, 2024