Synthetic Data: From Science Fiction to Functional Circumscription

March 4, 2024

This essay is the work of a dumb humanoid. No smart software required.

Synthetic data are information produced by algorithms, not by real-world events. They are created using real-world data and numerical recipes. The appeal is that synthetic data are easier to obtain than real-life information, cheaper than dealing with data from real life, and faster than fooling around with surveys, monitoring devices, and lawsuits. In theory, synthetic data are one promising way of skirting the expense of getting humans involved.
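One of the simplest "numerical recipes" is to count how often each answer appears in a real sample and then draw synthetic records with the same frequencies. The sketch below is a toy, assuming categorical survey answers; the function name `synthesize` and the sample answers are hypothetical, and commercial synthetic data outfits use far fancier methods.

```python
import random
from collections import Counter

def synthesize(real_answers, n, seed=0):
    """Draw n synthetic answers that mimic the category frequencies of real_answers.

    Toy recipe (hypothetical): estimate the empirical distribution, then sample from it.
    """
    counts = Counter(real_answers)
    categories = list(counts)
    weights = [counts[c] for c in categories]
    rng = random.Random(seed)  # seeded so the output is repeatable
    return rng.choices(categories, weights=weights, k=n)

if __name__ == "__main__":
    # Hypothetical "real" sample of five survey answers
    real = ["yes", "yes", "no", "yes", "unsure"]
    fake = synthesize(real, 1000)
    print(Counter(fake))
```

Note that this recipe can never produce an answer absent from the real sample, which echoes the training-data limitation in the Kantar passage.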

“What Is [a] Synthetic Sample – And Is It All It’s Cracked Up to Be?” tackles the subject of a synthetic sample, a topic which is one slice of the synthetic data universe. The article seeks “to uncover the truth behind artificially created qualitative and quantitative market research data.” I am going to avoid the question, “Is synthetic data useful?” because the answer is, “Yes.” Bean counters and those looking to find a way out of the pickle barrel filled with expensive brine are going to chase after the magic of algorithms producing data to do some machine learning magic.

In certain situations, fake flowers are super. Other times, the faux blooms are just creepy. Thanks, MSFT Copilot Bing thing. Good enough.

Are synthetic data better than real world data? The answer from my vantage point is, “It depends.” Fancy math can prove that for some use cases, synthetic data are “good enough”; that is, the data produce results close enough to what a “real” data set provides. Therefore, just use synthetic data. But for other applications, synthetic data might throw some sand in the well-oiled marketing collateral describing the wonders of synthetic data. (Some university research labs are quite skilled in PR speak, but the reality of their methods may not line up with the PowerPoints used to raise venture capital.)

The cited essay discusses a research project to figure out whether a synthetic sample works or, in my lingo, whether the synthetic sample is good enough. The idea is that as long as the synthetic data fall within a specified error range, the synthetic sample can be used and may produce “reliable” or useful results. (At least one hopes this is the case.)
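The "specified error range" idea can be sketched as a check that each answer's share in the synthetic sample sits within a tolerance of its share in the real sample. The function names, the 5-percentage-point tolerance, and the toy samples are assumptions for illustration, not Kantar's method.

```python
from collections import Counter

def shares(answers):
    """Return each answer's share of the sample as a fraction."""
    total = len(answers)
    return {k: v / total for k, v in Counter(answers).items()}

def good_enough(real, synthetic, tolerance=0.05):
    """True if every answer's share differs by at most `tolerance` between samples.

    An answer missing from one sample counts as a share of 0 in that sample.
    """
    r, s = shares(real), shares(synthetic)
    keys = set(r) | set(s)
    worst = max(abs(r.get(k, 0.0) - s.get(k, 0.0)) for k in keys)
    return worst <= tolerance

if __name__ == "__main__":
    real = ["yes"] * 60 + ["no"] * 40       # hypothetical human sample
    synthetic = ["yes"] * 63 + ["no"] * 37  # hypothetical synthetic sample
    print(good_enough(real, synthetic))     # → True (a 3-point gap)
```

A single worst-case gap is crude. Passing it says nothing about the missing variation and nuance the Kantar passage worries about.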

I want to focus on one portion of the cited article and invite you to read the complete Kantar explanation.

Here’s the passage which snagged my attention:

… right now, synthetic sample currently has biases, lacks variation and nuance in both qual and quant analysis. On its own, as it stands, it’s just not good enough to use as a supplement for human sample. And there are other issues to consider. For instance, it matters what subject is being discussed. General political orientation could be easy for a large language model (LLM), but the trial of a new product is hard. And fundamentally, it will always be sensitive to its training data – something entirely new that is not part of its training will be off-limits. And the nature of questioning matters – a highly ‘specific’ question that might require proprietary data or modelling (e.g., volume or revenue for a particular product in response to a price change) might elicit a poor-quality response, while a response to a general attitude or broad trend might be more acceptable.

These sentences present several thorny problems in academic speak. Let’s look at them in the vernacular of rural Kentucky where I live.

First, we have the issue of bias. Training data can be unintentionally or intentionally biased. Sample radical trucker posts on Telegram, and use those messages to train a model like Reor. The output is going to express views that some people might find unpalatable. Therefore, a synthetic data recipe which includes this type of Telegram content is going to be oriented toward truck driver views. That’s good and bad.

Second, a synthetic sample may require mixing in data from a “real” sample. That’s a common sense approach which reduces some costs. But will the outputs be good enough? The question then becomes, “Good enough for what applications?” Big, general questions about how a topic is presented might be close enough for horseshoes. Other topics, like those focusing on a specific technical issue, might warrant more caution or outright avoidance of synthetic data. Do you want your child or wife to die because the synthetic data about a treatment regimen was close enough for horseshoes? But in today’s medical structure, that may be what the future holds.

Third, many years ago, one of the early “smart” software companies was Autonomy, founded by Mike Lynch. In the 1990s, Bayesian methods were known, but some, believe it or not, were classified and, thus, not widely known. Autonomy packed up some smart software in the Autonomy black box. Users of this system learned that the smart software had to be retrained because new terms and novel ideas not in the original training set were not findable by the neuro linguistic program’s engine. Yikes! Retraining requires human content curation of data sets, time to retrain the system, and the expense of redeploying the brains of the black boxes. Clients did not like this, and some, to be frank, did not understand why a product did not work like an MG sports car. Systems that produce synthetic data have to be retrained to “know” about new terms and avoid the “certain blindness” probability-based systems possess.

Fourth, the topic of “proprietary data modeling” means big bucks. The idea behind synthetic data is that it is cheaper. Building proprietary training data and keeping it current is expensive. Is it better? Yeah, maybe. Is it faster? Probably not when humans are doing the curation, cleaning, verifying, and training.

The write up states:

But it’s likely that blended models (human supplemented by synthetic sample) will become more common as LLMs get even more powerful – especially as models are finetuned on proprietary datasets.

Net net: Synthetic data warrants monitoring. Some may want to invest in synthetic data set companies like Kantar. I am a dinobaby, and I like the old-fashioned Stone Age approach to data. The fancy math embodies sufficient risk for me. Why increase risk? Remember my reference to a dead loved one? That type of risk.

Stephen E Arnold, March 4, 2024

Technology Becomes Detroit

March 4, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Have you ever heard of technical debt? Technical debt is the cost that piles up when an IT team prioritizes speedy delivery of a product over creating a feasible, quality product. Technology history is full of technical debt. Some of the more famous cases are the E.T. videogame for the Atari, Windows Vista, and the Samsung Galaxy Gear. Technical debt is an ongoing issue for IT departments and tech companies, and it is apparently getting worse. ITPro details the current problems with technical debt in “IT Leaders Need To Accept They’ll Never Escape Technical Debt, But That Doesn’t Mean They Should Down Tools.”

Gordon Haff is a senior leader at Red Hat and a technology evangelist. Haff told ITPro that tech experts will remain hindered as long as they deal with technical debt and skill shortages. Tech experts want to advance their field with transformative projects, but they’re held back by these same issues. Haff stressed that as soon as one project is complete, tech experts build the next project on the existing architecture. This creates an infrastructure built on technical debt.

Haff provided an example using a band-aid metaphor:

“Haff pointed toward application modernization as a prime example of this rinse and repeat trend. Many enterprises, he said, deliberately choose to not tinker with certain applications due to the fact they still worked nominally.

Fast forward several years later, these applications are overhauled and modernized, then are left to their own devices – to some extent – and reassessed during the next transformation cycle.

‘If you go back 10 years, we had this sort of bimodal IT, or fast-slow IT, that was kind of the thing,’ he explained. ‘The idea was “we’ll leave that old stuff, we’ll shove that off into the corner and not worry about it” and the cool kids can work on all this greenfield, often new customer-facing applications.

‘But by and large, it’s then a case of “oh we actually need to deal with this core business stuff” and these older applications.’”

Haff suggests that IT experts shouldn’t approach their work with a “one and done” mindset. They should realize their work is constantly evolving. They should also know how to go with the flow and maintain legacy systems so they don’t turn into large messes. There’s a reason videogame companies have beta tests, restaurants have soft openings, and musicals have previews. They test things to deliver quality products. Technical debt leads to technical rot.

Whitney Grace, March 4, 2024

Forget the Words. Do Short-Form Video by Hiring a PR Professional

March 1, 2024

This essay is the work of a dumb humanoid. No smart software required.

I think “Everyone’s a Sellout Now” is about 4,000 words. The main idea is that traditional publishing is roached. Artists and writers must learn to do video editing or have enough of mommy and daddy’s money to pay someone to promote the creator’s output. The essay is well written; however, I am not sure it conveys a TikTok fact unknown or hiding in the world of BlueSky-type services.

This bright young student should have used a ChatGPT-type service. Thanks, MSFT Copilot. At least you are outputting, which is more than I can say for your fierce but lagging competitor.

I noted this passage:

Because self-promotion sucks.

I think I agree, but why not hire an “output handler”? The OH does the PR.

Here’s another quote to note:

The problem is that America more or less runs on the concept of selling out.

Is there a fix for the gasoline of America? Yes. The essay asserts:

author-content creators succeed by making the visually uninteresting labor of typing on a laptop worthwhile to watch.

The essay concludes with this less-than-uplifting comment:

To achieve the current iteration of the American dream, you’ve got to shout into the digital void and tell everyone how great you are. All that matters is how many people believe you.

Downer? Yes, and what makes it fascinating is that the author gets paid for writing. I think this is a “real job.”

Several observations:

- I think smart software is going to do more than write wacko stuff for SmartNews-type publications.

- Readers of “downer” essays are likely to go more “down”; that is, become less positive and increasingly antagonistic to what makes the US of A tick.

- The essay delivers the news about the importance of TikTok without pointing out that the service is China-affiliated and provides content not permitted for consumption in China.

Net net: Hire a gig worker to do the OH. Pay for PR. Quit complaining or complain in fewer words.

PS. The categorical affirmative “everyone” is disproved by a single counterexample. As I have pointed out in an essay about a grousing Xoogler, I operate differently. Therefore, the “everyone” is like a fuzzy antecedent. Sloppy.

Stephen E Arnold, March 1, 2024

Bad News Delivered via Math

March 1, 2024

This essay is the work of a dumb humanoid. No smart software required.

I am not going to kid myself. Few people will read “Hallucination is Inevitable: An Innate Limitation of Large Language Models” with their morning donut and cold brew coffee. Even fewer will believe what the three amigos of smart software at the National University of Singapore explain in their arXiv paper. Hard on the heels of Sam AI-Man’s ChatGPT mastering Spanglish, the financial payoffs are just too massive for anyone to pay much attention to wonky outputs from smart software. Hey, use these methods in Excel and exclaim, “This works really great.” I would suggest that the AI buggy drivers slow the Kremser down.

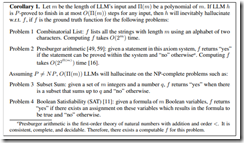

The killer corollary. Source: Hallucination is Inevitable: An Innate Limitation of Large Language Models.

The paper explains that large language models will be reliably incorrect. The paper includes some fancy and not so fancy math to make this assertion clear. Here’s what the authors present as their plain English explanation. (Hold on. I will give the dinobaby translation in a moment.)

Hallucination has been widely recognized to be a significant drawback for large language models (LLMs). There have been many works that attempt to reduce the extent of hallucination. These efforts have mostly been empirical so far, which cannot answer the fundamental question whether it can be completely eliminated. In this paper, we formalize the problem and show that it is impossible to eliminate hallucination in LLMs. Specifically, we define a formal world where hallucination is defined as inconsistencies between a computable LLM and a computable ground truth function. By employing results from learning theory, we show that LLMs cannot learn all of the computable functions and will therefore always hallucinate. Since the formal world is a part of the real world which is much more complicated, hallucinations are also inevitable for real world LLMs. Furthermore, for real world LLMs constrained by provable time complexity, we describe the hallucination-prone tasks and empirically validate our claims. Finally, using the formal world framework, we discuss the possible mechanisms and efficacies of existing hallucination mitigators as well as the practical implications on the safe deployment of LLMs.

Here’s my take:

- The map is not the territory. LLMs are a map. The territory is human utterances. The map is small and striving. The territory is what is.

- Fixing the problem requires some fancier math that has not yet been worked out. When will that happen? Probably never, because no set can contain itself as an element.

- “Good enough” may indeed be acceptable for some applications, just not “all” applications, because “all” is a slippery fish when it comes to models and training data. Are you really sure you have accounted for all errors, variables, and data? Yes is easy to say; it is probably tough to deliver.
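The flavor of an impossibility argument can be shown with a toy diagonal construction, the same family of trick as the set-theory point above. Given any list of “models” (here just Python functions from integers to integers), build a “ground truth” function that disagrees with model i on input i, so every listed model is wrong somewhere. This is a hypothetical illustration under drastic simplifications, not the paper's proof; the names `diagonal_truth` and `first_miss` are made up.

```python
from typing import Callable, List, Optional

Model = Callable[[int], int]

def diagonal_truth(models: List[Model]) -> Model:
    """Build a ground-truth function that differs from models[i] at input i."""
    def truth(x: int) -> int:
        if 0 <= x < len(models):
            return models[x](x) + 1  # guaranteed to disagree with model x on input x
        return 0  # arbitrary value off the diagonal
    return truth

def first_miss(model: Model, truth: Model, inputs: range) -> Optional[int]:
    """Return the first input where model and truth disagree (a toy 'hallucination')."""
    for x in inputs:
        if model(x) != truth(x):
            return x
    return None

if __name__ == "__main__":
    # Three hypothetical "LLMs"
    models: List[Model] = [lambda x: 0, lambda x: x * x, lambda x: x + 7]
    truth = diagonal_truth(models)
    for i, m in enumerate(models):
        print(i, first_miss(m, truth, range(len(models))))
```

Adding a new model to the list does not help: rebuilding the ground truth over the longer list produces a fresh disagreement for the newcomer.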

Net net: The bad news is that smart software is now the next big thing. Math is not of too much interest, which is a bit of a problem in my opinion.

Stephen E Arnold, March 1, 2024

Student Surveillance: It Is a Thing

March 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Once mobile phones were designed with cameras, seemingly all technology was equipped with one. Installing cameras and recording devices is SOP now, but facial recognition technology will soon become just as common unless privacy advocates have their way. Students at the University of Waterloo were upset to learn that vending machines on their campus were programmed with the controversial technology. CTV News Kitchener explores how the scandal started in “ ‘Facial Recognition’ Error Message On Vending Machine Sparks Concern At University Of Waterloo.”

A series of smart vending machines decorated with M&M graphics and dispensing candy were located throughout the Waterloo campus. They raised privacy concerns when a student noticed an error message about a facial recognition application on one machine. Word spread quickly, and before the machines were removed from campus, students covered a hole believed to hold a camera.

Students believed that vending machines didn’t need to have facial recognition applications. They also wondered if there were more places on campus where they were being monitored with similar technology.

The vending machines are owned by MARS, an international candy company, and manufactured by Invenda. The MARS company didn’t respond to queries, but Invenda shared more information about the facial recognition application:

“Invenda also did not respond to CTV’s requests for comment but told Stanley in an email ‘the demographic detection software integrated into the smart vending machine operates entirely locally.’ ‘It does not engage in storage, communication, or transmission of any imagery or personally identifiable information,’ it continued.

According to Invenda’s website, the Smart Vending Machines can detect the presence of a person, their estimated age and gender. The website said the ‘software conducts local processing of digital image maps derived from the USB optical sensor in real-time, without storing such data on permanent memory mediums or transmitting it over the Internet to the Cloud.’”

Invenda also said the software is compliant with the European Union’s General Data Protection Regulation (GDPR), but that doesn’t mean it is legal in Canada. The University of Waterloo has asked that the vending machines be removed from campus.

Net net: Cameras will proliferate and have smart software. Just a reminder.

Whitney Grace, March 1, 2024