A Discernment Challenge for Those Who Are Dull Normal

June 24, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Techradar, an online information service, published “Ahead of GPT-5 Launch, Another Test Shows That People Cannot Distinguish ChatGPT from a Human in a Conversation Test — Is It a Watershed Moment for AI?” The headline implies “change everything” rhetoric, but that is routine AI jargon-hype.

Once again, academics who are unable to land a job in a “real” smart software company studied the work of their former colleagues who make a lot more money than those teaching do. Well, what do academic researchers do when they are not sitting in the student union or the snack area in the lab whilst waiting for a graduate student to finish a task? In my experience, some think about their CVs or résumés. Others ponder the flaws in a commercial or allegedly commercial product or service.

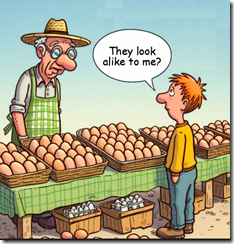

A young shopper explains that the outputs of egg laying chickens share a similarity. Insightful observation from a dumb carp. Thanks, MSFT Copilot. How’s that Recall project coming along?

The write up reports:

The Department of Cognitive Science at UC San Diego decided to see how modern AI systems fared and evaluated ELIZA (a simple rules-based chatbot from the 1960’s included as a baseline in the experiment), GPT-3.5, and GPT-4 in a controlled Turing Test. Participants had a five-minute conversation with either a human or an AI and then had to decide whether their conversation partner was human.

Here’s the research set up:

In the study, 500 participants were assigned to one of five groups. They engaged in a conversation with either a human or one of the three AI systems. The game interface resembled a typical messaging app. After five minutes, participants judged whether they believed their conversation partner was human or AI and provided reasons for their decisions.

And what did the intrepid academics find? Factoids that will get them a job at a Perplexity-type of company? Information that will put smart software into focus for the elected officials writing draft rules and laws to prevent AI from making The Terminator come true?

The results were interesting. GPT-4 was identified as human 54% of the time, ahead of GPT-3.5 (50%), with both significantly outperforming ELIZA (22%) but lagging behind actual humans (67%). Participants were no better than chance at identifying GPT-4 as AI, indicating that current AI systems can deceive people into believing they are human.
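The "no better than chance" claim can be sanity-checked with a little arithmetic. Below is a minimal sketch, not the researchers' method: it puts a normal-approximation 95 percent interval around each reported rate and asks whether 50 percent (a coin flip) falls inside. The per-group count of 100 judgments is an assumption made only for illustration; the write-up says 500 participants and five groups but does not give the number of judgments per system. The function names are hypothetical.

```python
import math

# Reported "judged human" rates from the quoted write-up.
REPORTED_RATES = {"ELIZA": 0.22, "GPT-3.5": 0.50, "GPT-4": 0.54, "Human": 0.67}

# ASSUMPTION: judgments per group. This number is NOT in the write-up;
# it is a placeholder so the arithmetic can be shown.
N_ASSUMED = 100


def approx_interval(rate, n, z=1.96):
    """Normal-approximation interval around an observed proportion."""
    standard_error = math.sqrt(rate * (1 - rate) / n)
    return rate - z * standard_error, rate + z * standard_error


def includes_chance(rate, n, chance=0.5):
    """True when a coin-flip rate lies inside the interval."""
    low, high = approx_interval(rate, n)
    return low <= chance <= high


if __name__ == "__main__":
    for system, rate in REPORTED_RATES.items():
        low, high = approx_interval(rate, N_ASSUMED)
        verdict = "consistent with guessing" if includes_chance(rate, N_ASSUMED) else "not a coin flip"
        print(f"{system}: {rate:.0%} ({low:.1%} to {high:.1%}) -> {verdict}")
```

Under that assumed sample size, the GPT-4 and GPT-3.5 intervals straddle 50 percent while the ELIZA and human intervals do not, which matches the write-up's framing. A different per-group count would widen or narrow the intervals, so treat the output as illustrative only.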

What does this mean for those labeled dull normal, a nifty term applied to some lucky people taking IQ tests? I wanted to be a dull normal, but I was only able to score in the lowest possible quartile. I think it was called dumb carp. Yes!

Several observations to disrupt your clear thinking about smart software and research into how the hot dogs are made:

- The smart software seems to have stalled. In our tests of You.com, which allows one to select which of the models parrots information, it is tough to differentiate the outputs. Cut from the same transformer cloth maybe?

- Those judging, differentiating, and testing smart software outputs can discern differences only if they are way above dull normal or my classification, dumb carp. This means that indexing systems, people, and “new” models will be bamboozled into thinking what’s incorrect is a-okay. So much for the informed citizen.

- Will the next innovation in smart software revolutionize something? Yep, some lucky investors.

Net net: Confusion ahead for those like me: Dumb carp. Dull normals may be flummoxed. But those super-brainy folks have a chance to rule the world. Bust out the party hats and little horns.

Stephen E Arnold, June 24, 2024