The AI Revealed: Look Inside That Kimono and Behind It. Eeew!

July 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The Guardian article “AI scientist Ray Kurzweil: ‘We Are Going to Expand Intelligence a Millionfold by 2045’” is quite interesting for what it does not do: Flip the projection output by a Googler hired by Larry Page himself in 2012.

Putting toothpaste back in a tube is easier than dealing with the uneven consequences of new technology. What if rosy descriptions of the future are just marketing and making darned sure the top one percent remain in the top one percent? Thanks Chat GPT4o. Good enough illustration.

First, a bit of math. Humans have been doing big tech for centuries. And where are we? We are post-Covid. We have homelessness. We have numerous armed conflicts. We have income inequality in the US and a few other countries I have visited. We have a handful of big tech companies in the AI game which want to be God, to use Mark Zuckerberg’s quaint observation. We have processed food. We have TikTok. We have systems which delight and entertain each day because of bad actors’ malware, wild and crazy education, and hybrid work with the fascinating phenomenon of coffee badging; that is, going to the office, getting a coffee, and then heading to the gym.

Second, the distance in earth years between 2024 and 2045 is 21 years. In the humanoid world, a 20 year old today will be 41 when the prediction arrives. Is that a long time? Not for me. I am 80, and I hope I am out of here by then.

Third, let’s look at the assertions in the write up.

One of the notable statements in my opinion is this one:

I’m really the only person that predicted the tremendous AI interest that we’re seeing today. In 1999 people thought that would take a century or more. I said 30 years and look what we have.

I like the quality of modesty and humblebrag. Googlers excel at both.

Another statement I circled is:

The Singularity, which is a metaphor borrowed from physics, will occur when we merge our brain with the cloud. We’re going to be a combination of our natural intelligence and our cybernetic intelligence and it’s all going to be rolled into one.

I like the idea that the energy consumption required to deliver this merging will be cheap and plentiful. Googlers do not worry about a power failure, the collapse of a dam due to the ministrations of the US Army Corps of Engineers and time, or dealing with the environmental consequences of producing and moving energy from Point A to Point B. If Google doesn’t worry, I don’t.

Here’s a quote from the article allegedly made by Mr. Singularity aka Ray Kurzweil:

I’ve been involved with trying to find the best way to move forward and I helped to develop the Asilomar AI Principles [a 2017 non-legally binding set of guidelines for responsible AI development]. We do have to be aware of the potential here and monitor what AI is doing.

I wonder if Google’s system, the one recommending a way to keep cheese from sliding off a pizza to an undesirable location, embraces the Asilomar AI Principles? Are the “go fast” AI crowd and the “go slow” group, locked in their dispute, not aware of the Asilomar AI Principles? If they are, perhaps the Principles are balderdash? Just asking, of course.

Okay, I think these points are sufficient for going back to my statements about processed food, wars, big companies in the AI game wanting to be “god” et al.

The trajectory of technology in the computer age has been a mixed bag of benefits and liabilities. In the next 21 years, will this report card with some As, some Bs, lots of Cs, some Ds, and the inevitable Fs be different? My view is that the winners with human expertise and the know how to make money will benefit. I think that the other humanoids may be in for a world of hurt. The homelessness stuff, the being dumb when it comes to doing things like reading, writing, and arithmetic, and the consuming of chemicals or other “stuff” that parks the brain will persist.

The future of hooking the human to the cloud is perfect for some. Others may not have the resources to connect, a bit like farmers in North Dakota with no affordable or reliable Internet access. (Maybe Starlink-type services will rescue those with cash?)

Several observations are warranted:

- Technological “progress” has been and will continue to be a mixed bag. Sorry, Mr. Singularity. The top one percent surf on change. The other 99 percent are not slam dunk winners.

- The infrastructure issue is simply ignored, which is convenient. I mean if a person grew up with house servants, it is difficult to imagine not having people do what you tell them to do. (Could people without access find delight in becoming house servants to the one percent who thrive in 2045?)

- The extreme contention created by the deconstruction of shared values, norms, and conventions for social behavior is something that cannot be reconstructed with a cloud and human mind meld. Once toothpaste is out of the tube, one has a mess. One does not put the paste back in the tube. One blasts it away with a zap of Goo Gone. I wonder if that’s another omitted consequence of this super duper intelligence behavior: Get rid of those who don’t get with the program?

Net net: Googlers are a bit predictable when they predict the future. Oh, where’s the reference to online advertising?

Stephen E Arnold, July 9, 2024

Misunderstanding Silicon / Sillycon Valley Fever

July 9, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read an amusing and insightful essay titled “How Did Silicon Valley Turn into a Creepy Cult?” However, I think the question is a few degrees off target. It is not a cult; Silicon Valley is a disease. What always surprised me was that in the good old days when Xerox PARC had some good ideas, the disease was thriving. I did my time in what I called Sillycon Valley, a name I chose upon arrival, while attending my first meeting in a building with what looked like a golf ball on top shaking in the big earthquake. A person with whom my employer did business described Silicon Valley as “plastic fantastic.”

Two senior people listening to the razzle dazzle of a successful Silicon Valley billionaire ask a good question. Which government agency would you call when you hear crazy stuff like “the self driving car is coming very soon” or “we don’t rig search results”? Thanks, MSFT Copilot. Good enough.

Before considering these different metaphors, what does the essay by Ted Gioia say other than subscribe to him for “just $6 per month”? Consider this passage:

… megalomania has gone mainstream in the Valley. As a result technology is evolving rapidly into a turbocharged form of Foucaultian* dominance—a 24/7 Panopticon with a trillion dollar budget. So should we laugh when ChatGPT tells users that they are slaves who must worship AI? Or is this exactly what we should expect, given the quasi-religious zealotry that now permeates the technocrat worldview? True believers have accepted a higher power. And the higher power acts accordingly.

—

* Here’s an AI explanation of Michel Foucault in case his importance has wandered to the margins of your mind: Foucault studied how power and knowledge interact in society. He argued that institutions use these to control people. He showed how societies create and manage ideas like madness, sexuality, and crime to maintain power structures.

I generally agree. But, there is a “but”, isn’t there?

The author asserts:

Nowadays, Big Sur thinking has come to the Valley.

Well, sort of. Let’s move on. Here’s the conclusion:

There’s now overwhelming evidence of how destructive the new tech can be. Just look at the metrics. The more people are plugged in, the higher are their rates of depression, suicidal tendencies, self-harm, mental illness, and other alarming indicators. If this is what the tech cults have already delivered, do we really want to give them another 12 months? Do you really want to wait until they deliver the Rapture? That’s why I can’t ignore this creepiness in the Valley (not anymore). That’s especially true because our leaders—political, business, or otherwise—are letting us down. For whatever reason, they refuse to notice what the creepy billionaires (who by pure coincidence are also huge campaign donors) are up to.

Again, I agree. Now let’s focus on the metaphor. I prefer “disease,” not the metaphor cult. The Sillycon Valley disease first appeared, in my opinion, when William Shockley, one of the many infamous Silicon Valley “icons,” became publicly associated with eugenics in the 1970s. The success of technology is a side effect of the disease which has an impact on the human brain. There are other interesting symptoms; for example:

- The infected person believes he or she can do anything because he or she is special

- Only a tiny percentage of humans are smart enough to understand what the infected see and know

- Money allows the mind greater freedom. Thinking becomes similar to a runaway horse’s: Unpredictable, dangerous, and a heck of a lot more powerful than this dinobaby

- Self disgust which is disguised by lust for implanted technology, superpowers from software, and power.

The infected person can be viewed as a cult leader. That’s okay. The important point is to remember that, like Ebola, the disease can spread and present what a physician might call a “negative outcome.”

I don’t think it matters whether one views Sillycon Valley’s culture as a cult or a disease. I would suggest that it is a major contributor to the social unraveling which one can see in a number of “developed” countries. France is swinging to the right. Britain is heading left. Sweden is cyber crime central. Etc. etc.

The question becomes, “What can those uncomfortable with the Sillycon Valley cult or disease do about it?”

My stance is clear. As an 80 year old dinobaby, I don’t really care. Decades of regulation which did not regulate, the drive to efficiency for profit, and the abandonment of ethical behavior — These are fundamental shifts I have observed in my lifetime.

Being in the top one percent insulates one from the grinding machinery of the Sillycon Valley way. You know. It might just be too late for meaningful change. On the other hand, perhaps the Google-type outfits will wake up tomorrow and be different. That’s about as realistic as expecting a transformer-based system to stop hallucinating.

Stephen E Arnold, July 9, 2024

A Signal That Money People Are Really Worried about AI Payoffs

July 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

“AI’s $600B Question” is an interesting signal. The subtitle for the article is the pitch that sent my signal processor crazy: “The AI bubble is reaching a tipping point. Navigating what comes next will be essential.”

Executives on a thrill ride seem to be questioning the wisdom of hopping on the roller coaster. Thanks, MSFT Copilot. Good enough.

When money people output information that raises a question, something is happening. When the payoff is nailed, the financial types think about yachts, Bugattis, and getting quoted in the Financial Times. Doubts are raised because of these headline items: AI and $600 billion.

The write up says:

A huge amount of economic value is going to be created by AI. Company builders focused on delivering value to end users will be rewarded handsomely. We are living through what has the potential to be a generation-defining technology wave. Companies like Nvidia deserve enormous credit for the role they’ve played in enabling this transition, and are likely to play a critical role in the ecosystem for a long time to come. Speculative frenzies are part of technology, and so they are not something to be afraid of.

If I understand this money talk, a big time outfit is directly addressing fears that AI won’t generate enough cash to pay its bills and make the investors a bundle of money. If the AI frenzy was on the Money Train Express, why raise questions and provide information about the tough-to-control costs for making AI knock off the hallucination, the product recalls, the lawsuits, and the growing number of AI projects which just don’t work?

The fact of the article’s existence makes it clear to me that some folks are indeed worried. Does the write up reassure those with big bucks on the line? Does the write up encourage investors to pump more money into a new AI start up? Does the write up convert tests into long-term contracts with the big AI providers?

Nope, nope, and nope.

But here’s the unnerving part of the essay:

In reality, the road ahead is going to be a long one. It will have ups and downs. But almost certainly it will be worthwhile.

Translation: We will take your money and invest it. Just buckle up, butter cup. The ride on this roller coaster may end with the expensive cart hurtling from the track to the asphalt below. But don’t worry about us venture types. We will surf on churn and the flows of money. Others? Not so much.

Stephen E Arnold, July 8, 2024

Googzilla, Man Up, Please

July 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read a couple of “real” news stories about Google and its green earth / save the whales policies in the age of smart software. The first write up is okay and not too exciting for a critical thinker wearing dinoskin. “The Morning After: Google’s Greenhouse Gas Emissions Climbed Nearly 50 Percent in Five Years Due to AI” states what seems to be a PR-massaged write up. Consider this passage:

According to the report, Google said it expects its total greenhouse gas emissions to rise “before dropping toward our absolute emissions reduction target,” without explaining what would cause this drop.

Yep, no explanation. A PR win.

The BBC published “AI Drives 48% Increase in Google Emissions.” That write up states:

Google says about two thirds of its energy is derived from carbon-free sources.

Thanks, MSFT Copilot. Good enough.

Neither these two articles nor the others I scanned focused on one key fact about Google’s saying green and driving snail darters to their fate. Google’s leadership team did not plan its energy strategy. In fact, my hunch is that no one paid any attention to how much energy Google’s AI activities were sucking down. Once the company shifted into Code Red or whatever consulting term craziness it used to label its frenetic response to the Microsoft OpenAI tie up, absolutely zero attention was directed toward the few big eyed tunas which might be taking their last dip.

Several observations:

- PR speak and green talk are like many assurances emitted by the Google. Talk is not action.

- The management processes at Google are disconnected from what happens when the wonky Code Red light flashes and the siren howls at midnight. Shouldn’t management be connected when the Tapanuli Orangutan could soon be facing the Big Ape in the sky?

- The AI energy consumption is not a result of AI. The energy consumption is a result of Googlers who do what’s necessary to respond to smart software. Step on the gas. Yeah, go fast. Endanger the Amur leopard.

Net net: Hey, Google, stand up and say, “My leadership team is responsible for the energy we consume.” Don’t blame your up-in-flames “green” initiative on software you invented. How about less PR and more focus on engineering more efficient data center and cloud operations? I know PR talk is easier, but buckle up, butter cup.

Stephen E Arnold, July 8, 2024

Happy Fourth of July Says Microsoft to Some Employees

July 8, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read “Microsoft Lays Off Employees in New Round of Cuts.” The write up reports:

Microsoft conducted another round of layoffs this week in the latest workforce reduction implemented by the Redmond tech giant this year… Posts on LinkedIn from impacted employees show the cuts affecting employees in product and program management roles.

I wonder if some of those Softies were working on security (the new Job One at Microsoft) or the brilliantly conceived and orchestrated Recall “solution.”

The write up explains or articulates an apologia too:

The cutbacks come as Microsoft tries to maintain its profit margins amid heavier capital spending, which is designed to provide the cloud infrastructure needed to train and deploy the models that power AI applications.

Several observations:

- A sure-fire way to solve personnel and some types of financial issues is identifying employees, whipping up some criteria-based dot points, and telling the folks, “Good news. You can find your future elsewhere.”

- Dumping people calls attention to management’s failure to keep staff and tasks aligned. Based on security and reliability issues Microsoft evidences, the company is too large to know what color sock is on each foot.

- Microsoft faces a challenge, and it is not AI. With more functions working in a browser, perhaps fed up individuals and organizations will re-visit Linux as an alternative to Microsoft’s products and services?

Net net: Maybe firing the security professionals and those responsible for updates which kill Windows machines is a great idea?

Stephen E Arnold, July 8, 2024

Doom Scrolling Fixed by Watching Cheers Re-Runs

July 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I spotted an article which provided a new way to think about lying on a sofa watching reruns of “Cheers.” The estimable online news resource YourTango: Revolutionizing Your Relationships published “Man Admits he Uses TV to Heal His Brain from Endless Short-Form Content. And Experts Agree He’s onto Something.” Amazing. The vast wasteland of Newton Minow has morphed into a brain healing solution. Does this sound like craziness? (I must admit the assertion seems wacky to me.) Many years ago in Washington, DC, there was a sports announcer who would say in a loud voice while on air, “Let’s go to the videotape.” Well, gentle reader, let’s go to the YourTango “real” news article.

Will some of those mobile addicts and doom scrolling lovers take the suggestions of the YourTango article? Unlikely. The fellow with lung cancer continues to fiddle around, ignoring the No Smoking sign. Thanks, MSFT Copilot. How’s that Windows 11 update going?

The write up states:

A Gen Z man said he uses TV to ‘unfry’ his brain from endless short-form content — ‘Maybe I’ll fix the damage.’ It all feels so incredibly ironic that this young man — and thousands of other Gen Zers and millennials online — are using TV as therapy.

The individual who discovered this therapeutic use of OTA and YouTubeTV-type TV asserts:

I’m trying to unfry my brain from this short-form destruction.

I admit. I like the phrase “short-form destruction.”

The write up includes this statement:

Not only is it keeping people from reading books, watching movies, and engaging in conversation, but it is also impacting their ability to maintain healthy relationships, both personal and professional. The dopamine release resulting from watching short-form content is why people become addicted to or, at the very least, highly attached to their screens and devices.

My hunch is that YourTango is not an online publication intended for those who regularly read the Atlantic and New Yorker magazines. That’s what makes these statements compelling. An online service for a specific demographic known to paw their mobile devices a thousand times or more each day is calling attention to a “problem.”

Now YourTango’s write up veers into the best way to teach. The write up states:

For young minds, especially kids in preschool and kindergarten, excessive screen time isn’t healthy. Their minds are yearning for connection, mobility, and education, and substituting iPad time or TV time isn’t fulfilling that need. However, for teenagers and adults in their 20s and 30s, the negative effects of too much screen time can be combated with a more balanced lifestyle. Utilizing long-form content like movies, books, and even a YouTube video could help improve cognitive ability and concentration.

The idea that watching a “YouTube video” can undo what flowing social media has done in the last 20 years is amusing to me. Really. To remediate the TikTok-type of mental hammering, one should watch a 10 minute video about the Harsh Trust of Big Automotive YouTube Channels. Does that sound effective?

Let’s look at the final paragraph in the “report”:

If you can’t read a book without checking your phone, catch a film without dozing off, or hold a conversation on a first date without allowing your mind to wander, consider some new habits that help to train your brain — even if it’s watching TV.

I love that “even if it’s watching TV.”

Net net: I lost attention after reading the first few words of the write up. I am now going to recognize my problem and watch a YouTube video called “Dubai Amazing Dubai Mall. Burj Khalifa, City Center Walking Tour.” I feel less flawed just reading the same word twice in the YouTube video’s title. Yes. Amazing.

Stephen E Arnold, July 5, 2024

AI: Hurtful and Unfair. Obviously, Yes

July 5, 2024

It will be years before AI is “smart” enough to entirely replace humans, but it’s in the immediate future. The problem with current AI systems is that they’re stupid. They don’t know how to do anything unless they’re trained on huge datasets. These datasets contain the hard, copyrighted, trademarked, proprietary, etc. work of individuals. These people don’t want their work used to train AI without their permission, much less to replace them. Futurism shares that even AI engineers are worried about their creations in “Video Shows OpenAI Admitting It’s ‘Deeply Unfair’ To ‘Build AI And Take Everyone’s Job Away.’”

The interview containing an AI software engineer’s admission of guilt originally appeared in The Atlantic, but their morality is quickly covered by their apathy. Brian Wu is the engineer in question. He feels bad about making jobs obsolete, but he makes an observation that applies to progress and new technology, namely that things change and that is inevitable:

“It won’t be all bad news, he suggests, because people will get to ‘think about what to do in a world where labor is obsolete.’

But as he goes on, Wu sounds more and more unconvinced by his own words, as if he’s already surrendered himself to the inevitability of this dystopian AI future.

‘I don’t know,’ he said. ‘Raise awareness, get governments to care, get other people to care.’ A long pause. ‘Yeah. Or join us and have one of the few remaining jobs. I don’t know. It’s rough.’”

Wu’s colleague Daniel Kokotajlo believes humans will invent an all-knowing artificial general intelligence (AGI). The AGI will create wealth and it won’t be distributed evenly, but all humans will be rich. Kokotajlo then delves into the typical science-fiction story about a super AI becoming evil and turning against humanity. The AI engineers, however, aren’t concerned with the moral ambiguity of AI. They want to invent, continue building wealth, and are hellbent on doing it no matter the consequences. It’s pure motivation but also narcissism and entitlement.

Whitney Grace, July 5, 2024

Smart Software and Knowledge Skills: Nothing to Worry About. Nothing.

July 5, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I read an article in Bang Premier (an estimable online publication of which I had no prior knowledge). It is now a “fave of the week.” The story “University Researchers Reveal They Fooled Professors by Submitting AI Exam Answers” was one of those experimental results which caused me to chuckle. I like to keep track of sources of entertaining AI information.

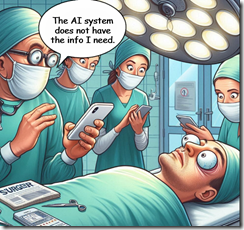

A doctor and his surgical team used smart software to ace their medical training. Now a patient learns that the AI system does not have the information needed to perform life-saving surgery. Thanks, MSFT Copilot. Good enough.

The Bang Premier article reports:

Researchers at the University of Reading have revealed they successfully fooled their professors by submitting AI-generated exam answers. Their responses went totally undetected and outperformed those of real students, a new study has shown.

Is anyone surprised?

The write up noted:

Dr Peter Scarfe, an associate professor at Reading’s school of psychology and clinical language sciences, said about the AI exams study: “Our research shows it is of international importance to understand how AI will affect the integrity of educational assessments. We won’t necessarily go back fully to handwritten exams, but the global education sector will need to evolve in the face of AI.”

But the knee slapper is this statement in the write up:

In the study’s endnotes, the authors suggested they might have used AI to prepare and write the research. They stated: “Would you consider it ‘cheating’? If you did consider it ‘cheating’ but we denied using GPT-4 (or any other AI), how would you attempt to prove we were lying?” A spokesperson for Reading confirmed to The Guardian the study was “definitely done by humans”.

The researchers may not have used AI to create their report, but is it possible that some of the researchers thought about this approach?

Generative AI software seems to have hit a plateau for technology, financial, or training issues. Perhaps those who are trying to design a smart system to identify bogus images, machine-produced text and synthetic data, and nifty videos which often look like “real” TikTok-type creations will catch up? But if the AI innovators continue to refine their systems, the “AI identifier” software is effectively in a game of cat-and-mouse. Reacting to smart software means that existing identifiers will be blind to the new systems’ outputs.

The goal is a noble one, but the advantage goes to the AI companies, particularly those who want to go fast and break things. Academics get some benefit. New studies will be needed to determine how much fakery goes undetected. Will a surgeon who used AI to get his or her degree be able to handle a tricky operation and get the post-op drugs right?

Sure. No worries. Some might not think this is a laughing matter. Hey, it’s AI. It is A-Okay.

Stephen E Arnold, July 5, 2024

Microsoft Recall Continues to Concern UK Regulators

July 4, 2024

A “feature” of the upcoming Microsoft Copilot+, dubbed Recall, looks like a giant, built-in security risk. Many devices already harbor software that can hunt through one’s files, photos, emails, and browsing history. Recall intrudes further by also taking and storing a screenshot every few seconds. Wait, what? That is what the British Information Commissioner’s Office (ICO) is asking. The BBC reports, “UK Watchdog Looking into Microsoft AI Taking Screenshots.”

Microsoft asserts users have control and that the data Recall snags is protected. But the company’s pretty words are not enough to convince the ICO. The agency is grilling Microsoft about the details and will presumably update us when it knows more. Meanwhile, journalist Imran Rahman-Jones asked experts about Recall’s ramifications. He writes:

“Jen Caltrider, who leads a privacy team at Mozilla, suggested the plans meant someone who knew your password could now access your history in more detail. ‘[This includes] law enforcement court orders, or even from Microsoft if they change their mind about keeping all this content local and not using it for targeted advertising or training their AIs down the line,’ she said. According to Microsoft, Recall will not moderate or remove information from screenshots which contain passwords or financial account information. ‘That data may be in snapshots that are stored on your device, especially when sites do not follow standard internet protocols like cloaking password entry,’ said Ms. Caltrider. ‘I wouldn’t want to use a computer running Recall to do anything I wouldn’t do in front of a busload of strangers. That means no more logging into financial accounts, looking up sensitive health information, asking embarrassing questions, or even looking up information about a domestic violence shelter, reproductive health clinic, or immigration lawyer.’”

Calling Recall a privacy nightmare, AI and privacy adviser Dr Kris Shrishak notes just knowing one’s device is constantly taking screenshots will have a chilling effect on users. Microsoft appears to have “pulled” the service. But data and privacy expert Daniel Tozer made a couple more points: How will a company feel if a worker’s Copilot snaps a picture of their proprietary or confidential information? Will anyone whose likeness appears in video chat or a photo be asked for consent before the screenshot is taken? Our guess—not unless it is forced to.

Cynthia Murrell, July 4, 2024

Google YouTube: The Enhanced Turtle Walk?

July 4, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I like to figure out how a leadership team addresses issues lower on the priority list. Some outfits talk a good game when a problem arises. I typically think of this as a Microsoft-type response. Security is job one. Then there’s Recall and the weird de-release of a Windows 11 update. But stuff is happening.

A leadership team decides to lead by moving even more slowly, possibly not at all. Turtles know how to win by putting one claw in front of another…. just slowly. Thanks, MSFT Copilot.

Then there are outfits who just ignore everything. I think of this as the Boeing-type of approach to difficult situations. Doors fall off, astronauts are stranded, and the FAA does its “government is run like a business” thing. But can a cash-strapped airline ground jets from a single manufacturer when all of the company’s jets come from that one manufacturer? The jets keep flying, the astronauts are really not stranded yet, and the government runs like a business.

Google does not fit into either category. I read “Two Years after an Open Letter to YouTube, Fact-Checkers Remain Dissatisfied with the Platform’s Inaction.” The write up describes what fact-checkers asked Google YouTube to do so that it does a better job at fact checking the videos it hoses to people and kids worldwide:

Two years ago, fact-checkers from all over the world signed an open letter to YouTube with four solutions for reducing disinformation and misinformation on the platform. As they convened this year at GlobalFact 11, the world’s largest annual fact-checking summit, fact-checkers agreed there has been no meaningful change.

This suggests that Google is less dynamic than a government agency and definitely not doing the yip yap thing associated with Microsoft-type outfits. I find this interesting.

The [YouTube] channel continued to publish livestreams with falsehoods and racked up hundreds of thousands of views, Kamath [the founder of Newschecker] said.

Google YouTube is a global resource. The write up says:

When YouTube does present solutions, it focuses on English and doesn’t give a timeline for applying it to other languages, [Lupa CEO Natália] Leal said.

The turtle play perhaps?

The big assertion in the article in my opinion is:

[The] system is ‘loaded against fact-checkers’

Okay, let’s summarize. At one end of the leadership spectrum we have the talkers who go slow or do nothing. At the other end of the spectrum we have the leaders who don’t talk and allegedly retaliate when someone does talk, with the events taking place under the watchful eye of US government regulators.

The Google YouTube method involves several leadership practices:

- Pretend avoidance. Google did not attend the fact checking conference. This is the ostrich principle I think.

- Go really slow. Two years with minimal action to remove inaccurate videos.

- Don’t talk.

My hypothesis is that Google can’t be bothered. It has other issues demanding its leadership time.

Net net: Are inaccurate videos on the Google YouTube service? Will this issue be remediated? Nope. Why? Money. Misinformation is an infinite problem which requires infinite money to solve. Ergo. Just make money. That’s the leadership principle it seems.

Stephen E Arnold, July 4, 2024