Old Problem, New Consequences: AI and Data Quality

August 6, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

Grab a business card from several years ago. Just for laughs, send an email to the address on the card or dial one of the numbers printed on it. What happens? Does the email bounce? Does the person you called answer? In my experience, the business cards I gathered at conferences in 2021 are useless. The number rings in space or a non-human voice says, “The number has been disconnected.” The emails go into a black hole. I had one of my colleagues pull 100 random cards from the files, and based on my experience, I would peg the share that still work at fewer than 30 percent. In 24 months, 70 percent of the data are invalid. An optimist would say, “You have 30 people you can contact.” A pessimist would say, “Wow, you lost 70 contacts.” A 20-something whiz kid at one of the big time AI companies would say, “Good enough.”

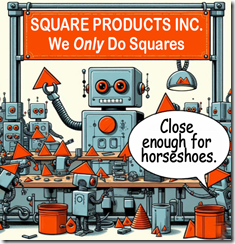

An automated data factory purports to manufacture squares. What does it do? Triangles are good enough and close enough for horseshoes. Does the factory call the triangles squares? Of course, it does. Thanks, MSFT Copilot. Security is Job One today I hear.

I read “Data Quality: The Unseen Villain of Machine Learning.” The write up states:

Too often, data scientists are the people hired to “build machine learning models and analyze data,” but bad data prevents them from doing anything of the sort. Organizations put so much effort and attention into getting access to this data, but nobody thinks to check if the data going “in” to the model is usable. If the input data is flawed, the output models and analyses will be too.

Okay, that’s a reasonable statement. But this passage strikes me as a bit orthogonal to the observations I have made:

It is estimated that data scientists spend between 60 and 80 percent of their time ensuring data is cleansed, in order for their project outcomes to be reliable. This cleaning process can involve guessing the meaning of data and inferring gaps, and they may inadvertently discard potentially valuable data from their models. The outcome is frustrating and inefficient as this dirty data prevents data scientists from doing the valuable part of their job: solving business problems. This massive, often invisible cost slows projects and reduces their outcomes.

The painful reality, in my experience, consists of three factors:

- Data quality depends on the knowledge and resources available to a subject matter expert. A data quality expert might define quality as consistent data; that is, the name field has a name. The SME figures out if the data are in line with other data and what is off base.

- The time required to “ensure” data quality is rarely available. There are interruptions, Zooms, and automated calendars that ping a person for a meeting. Data quality is easily killed by time suffocation.

- The volume of data begs for automated procedures and, of course, AI. The problem is that the range of errors related to validity is sufficiently broad to allow “flawed” data to enter a system. Good enough creates interesting consequences.
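
The gap between "consistent" and "valid" in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the contact records, the `last_verified` field, the loose email pattern, and the 730-day (roughly 24-month) staleness threshold are all hypothetical and do not come from the write up. The age check is a crude proxy, too. It flags records nobody has confirmed recently, and it cannot tell whether the address still works.

```python
import re
from datetime import date

# Hypothetical contact records; every name, address, and date is invented.
CONTACTS = [
    {"name": "A. Example", "email": "a.example@example.com", "last_verified": date(2021, 6, 1)},
    {"name": "B. Sample", "email": "b.sample@example.org", "last_verified": date(2024, 5, 15)},
    {"name": "", "email": "not-an-address", "last_verified": date(2024, 7, 1)},
]

# Deliberately loose pattern: it checks the shape of an address, nothing more.
EMAIL_SHAPE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def is_consistent(record):
    """Format-level check: the name field has a name, the email looks like an email."""
    return bool(record["name"].strip()) and bool(EMAIL_SHAPE.match(record["email"]))


def is_stale(record, today, max_age_days=730):
    """Crude validity proxy (an assumption): no one confirmed the record within max_age_days."""
    return (today - record["last_verified"]).days > max_age_days


def passes_format_but_stale(records, today):
    """Names of records an automated format check accepts but that may no longer be valid."""
    return [r["name"] for r in records if is_consistent(r) and is_stale(r, today)]


print(passes_format_but_stale(CONTACTS, date(2024, 8, 6)))  # ['A. Example']
```

The first record is the business card from 2021: it passes every format check and is still likely to bounce. Only someone who knows the data, the SME in the first bullet, can confirm whether it is still good.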

The write up says:

Data quality shouldn’t be a case of waiting for an issue to occur in production and then scrambling to fix it. Data should be constantly tested, wherever it lives, against an ever-expanding pool of known problems. All stakeholders should contribute and all data must have clear, well-defined data owners. So, when a data scientist is asked what they do, they can finally say: build machine learning models and analyze data.

This statement makes clear why flawed data remain flawed. The fix, according to some, is synthetic data. Are these data of high quality? It depends on what one means by “quality.” Today the benchmark is good enough. Good enough produces outputs that are not. But who knows? Not the harried person looking for something, anything, to put in a presentation, journal article, or podcast.

Stephen E Arnold, August 6, 2024