Copilot and Hackers: Security Issues Noted

August 12, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The online publication Cybernews ran a story I found interesting. Its title suggests something about Black Hat USA 2024 attendees I have not considered. Here’s the headline:

Black Hat USA 2024: Microsoft’s Copilot Is Freaking Some Researchers Out

Wow. Hackers (black, gray, white, and multi-hued) are “freaking out.” As defined by the estimable Urban Dictionary, “freaking” means:

Obscene dancing which simulates sex by the grinding the of the genitalia with suggestive sounds/movements. often done to pop or hip hop or rap music

No kidding? At Black Hat USA 2024?

Thanks, Microsoft Copilot. Freak out! Oh, your dance moves are good enough.

The article reports:

Despite Microsoft’s claims, cybersecurity researcher Michael Bargury demonstrated how Copilot Studio, which allows companies to build their own AI assistant, can be easily abused to exfiltrate sensitive enterprise data. We also met with Bargury during the Black Hat conference to learn more. “Microsoft is trying, but if we are honest here, we don’t know how to build secure AI applications,” he said. His view is that Microsoft will fix vulnerabilities and bugs as they arise, letting companies using their products do so at their own risk.

Wait. I thought Microsoft had tied cash to security work. I thought security was Job #1 at the company which recently accused Delta Airlines of using outdated technology and failing its customers. Is that the Microsoft that Mr. Bargury is suggesting has zero clue how to make smart software secure?

With MSFT Copilot turning up in places that surprise me, perhaps the Microsoft great AI push is creating more problems. The SolarWinds glitch was exciting for some, but if Mr. Bargury is correct, cyber security life will be more and more interesting.

Stephen E Arnold, August 12, 2024

Apple Does Not Just Take Money from Google

August 12, 2024

In an apparent snub to Nvidia, reports MacRumors, “Apple Used Google Tensor Chips to Develop Apple Intelligence.” The decision to go with Google’s TPUv5p chips over Nvidia’s hardware is surprising, since Nvidia has been dominating the AI processor market. (Though some suggest that will soon change.) Citing Apple’s paper on the subject, writer Hartley Charlton reveals:

“The paper reveals that Apple utilized 2,048 of Google’s TPUv5p chips to build AI models and 8,192 TPUv4 processors for server AI models. The research paper does not mention Nvidia explicitly, but the absence of any reference to Nvidia’s hardware in the description of Apple’s AI infrastructure is telling and this omission suggests a deliberate choice to favor Google’s technology. The decision is noteworthy given Nvidia’s dominance in the AI processor market and since Apple very rarely discloses its hardware choices for development purposes. Nvidia’s GPUs are highly sought after for AI applications due to their performance and efficiency. Unlike Nvidia, which sells its chips and systems as standalone products, Google provides access to its TPUs through cloud services. Customers using Google’s TPUs have to develop their software within Google’s ecosystem, which offers integrated tools and services to streamline the development and deployment of AI models. In the paper, Apple’s engineers explain that the TPUs allowed them to train large, sophisticated AI models efficiently. They describe how Google’s TPUs are organized into large clusters, enabling the processing power necessary for training Apple’s AI models.”

Over the next two years, Apple says, it plans to spend $5 billion on AI server enhancements. The paper gives a nod to ethics, promising no private user data is used to train its AI models. Instead, it uses publicly available web data and licensed content, curated to protect user privacy. That is good. Now what about the astronomical power and water consumption? Apple has no reassuring words for us there. Is it because Apple is paying Google, not just taking money from Google?

Cynthia Murrell, August 12, 2024

Podcasts 2024: The Long Tail Is a Killer

August 9, 2024

![green dino](https://arnoldit.com/wordpress/wp-content/uploads/2024/07/green-dino_thumb_thumb_thumb_thumb_t2_thumb.gif) This essay is the work of a dumb humanoid. No smart software required.

One of my Laws of Online is that the big get bigger. Those who are small go nowhere.

My laws have not been popular since I started promulgating them in the early 1980s. But they are useful to me. Consider the write up “Golden Spike: Podcasting Saw A 22% Rise In Ad Spending In Q2 [2024].” The information in the article, if on the money, appears to support the Arnold Law articulated in the first sentence of this blog post.

The long tail can be a killer. Thanks, MSFT Copilot. How’s life these days? Oh, that’s too bad.

The write up contains an item of information which is not surprising to those who paid attention in a good middle school or in a second year economics class. (I know. Snooze time for many students.) The main idea is that a small number of items account for a large proportion of the total occurrences.

Here’s what the article reports:

Unsurprisingly, podcasts in the top 500 attracted the majority of ad spend, with these shows garnering an average of $252,000 per month each. However, the profits made by series down the list don’t have much to complain about – podcasts ranked 501 to 3000 earned about $30,000 monthly. Magellan found seven out of the top ten advertisers from the first quarter continued their heavy investment in the second quarter, with one new entrant making its way onto the list.

This means that of the estimated three to four million podcasts, the power law nails where the advertising revenue goes.

I mention this because when I go to the gym I listen to some of the podcasts on the Leo Laporte TWIT network. At one time, the vision was to create the CNN of the technology industry. Now the podcasts seem to be the voice of the podcasts which cannot generate sufficient money from advertising to pay the bills. Therefore, hasta la vista staff, dedicated studio, and presumably some other expenses associated with a permanent studio.

Other podcasts will be hit by the stinging long tail. The question becomes, “How do these 2.9 million podcasts make money?”

Here’s what I have noticed in the last few months:

- Podcasters (video and voice) just quit. I assume they get a job or move in with friends. Van life is too expensive due to the cost of fuel, food, and maintenance now that advertising is chasing the winners in the long tail game.

- Some beg for subscribers.

- Some point people to their Buy Me a Coffee or Patreon page, among other similar community support services.

- Some sell T shirts. One popular technology podcaster sells a $60 screwdriver. (I need that.)

- Some just whine. (No, I won’t single out the winning whiner.)

If I were teaching math, this podcast advertising data would make an interesting example of the power law. Too bad most will be impotent to change its impact on podcasting.
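For anyone who wants to turn the quoted figures into that classroom example, here is a minimal sketch. It assumes both dollar amounts in the quote are per-show monthly averages, and it covers only the 3,000 ranked shows the article mentions; the tier names are my own labels, not Magellan's.

```python
# Figures come from the quoted passage; the tier names are hypothetical labels.
TIERS = {
    "top_500": {"shows": 500, "avg_monthly_usd": 252_000},
    "ranks_501_to_3000": {"shows": 2_500, "avg_monthly_usd": 30_000},
}


def tier_totals(tiers):
    """Total monthly ad spend per tier: number of shows times average per show."""
    return {name: t["shows"] * t["avg_monthly_usd"] for name, t in tiers.items()}


def share_of_tracked_spend(tiers):
    """Each tier's fraction of the spend across the ranked shows only."""
    totals = tier_totals(tiers)
    tracked = sum(totals.values())
    return {name: total / tracked for name, total in totals.items()}


totals = tier_totals(TIERS)
shares = share_of_tracked_spend(TIERS)
for name in TIERS:
    print(f"{name}: ${totals[name]:,} per month, {shares[name]:.1%} of tracked spend")
```

Under those assumptions, one sixth of the ranked shows collects roughly five eighths of the ranked money, and the millions of unranked podcasts do not appear in the calculation at all.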

Stephen E Arnold, August 9, 2024

Can AI Models Have Abandonment Issues?

August 9, 2024

Gee, it seems the next big thing may now be just … the next thing. Citing research from Gartner, Windows Central asks, “Is GenAI a Dying Fad? A New Study Predicts 30% of Investors Will Jump Ship by 2025 After Proof of Concept.” This is on top of a Reuters Institute report released in May that concluded public “interest” in AI is all hype and very few people are actually using the tools. Writer Kevin Okemwa specifies:

“[Gartner] suggests ‘at least 30% of generative AI (GenAI) projects will be abandoned after proof of concept by the end of 2025.’ The firm attributes its predictions to poor data quality, a lack of guardrails to prevent the technology from spiraling out of control, and high operation costs with no clear path to profitability.”

For example, the article reminds us, generative AI leader OpenAI is rumored to be facing bankruptcy. Gartner Analyst Rita Sallam notes that, while executives are anxious for the promised returns on AI investments, many companies struggle to turn these projects into profits. Okemwa continues:

“Gartner’s report highlights the challenges key players have faced in the AI landscape, including their inability to justify the substantial resources ranging from $5 million to $20 million without a defined path to profitability. ‘Unfortunately, there is no one size fits all with GenAI, and costs aren’t as predictable as other technologies,’ added Sallam. According to Gartner’s report, AI requires ‘a high tolerance for indirect, future financial investment criteria versus immediate return on investment (ROI).’”

That must come as a surprise to those who banked on AI hype and expected massive short-term gains. Oh well. So, what will the next next big thing be?

Cynthia Murrell, August 9, 2024

DeepMind Explains Imagination, Not the Google Olympic Advertisement

August 8, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

I admit it. I am suspicious of Google “announcements,” ArXiv papers, and revelations about the quantumly supreme outfit. I keep remembering the Google VP dead on a yacht with a special contract worker. I know about the Googler who tried to kill herself because a dalliance with a Big Time Google executive went off the rails. I know about the baby making among certain Googlers in the legal department. I know about the behaviors which the US Department of Justice described as “monopolistic.”

When I read “What Bosses Miss about AI,” I thought immediately about Google’s recent mass market televised advertisement about uses of Google artificial intelligence. The set up is that a father (obviously interested in his progeny) turned to Google’s generative AI to craft an electronic message to the humanoid. I know “quality time” is often tough to accommodate, but an email?

The Googler who allegedly wrote the cited essay has a different take on how to use smart software. First, most big-time thinkers are content with AI performing cost-reduction activities. AI is less expensive than a humanoid. These entities require health care, retirement, a shoulder upon which to cry (a key function for personnel in the human relations department), and time off.

Another type of big-time thinker grasps the idea that smart software can make processes more efficient. The write up describes this as the “do what we do, just do it better” approach to AI. The assumption is that the process is neutral, and it can be improved. Imagine the value of AI to Vlad the Impaler!

The third category of really Big Thinker is the leader who can use AI for imagination. I like the idea of breaking a chaotic mass of use cases into categories anchored to the Big Thinkers who use the technology.

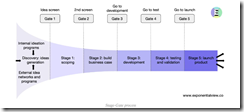

However, I noted what I think is unintentional irony in the write up. This chart shows the non-AI approach to doing what leadership is supposed to do:

What happens when a really Big Thinker uses AI to zip through this type of process? The acceleration is delivered from AI. In this Googler’s universe, I think one can assume Google’s AI plays a modest role. Here’s the payoff paragraph:

Traditional product development processes are designed based on historical data about how many ideas typically enter the pipeline. If that rate is constant or varies by small amounts (20% or 50% a year), your processes hold. But the moment you 10x or 100x the front of that pipeline because of a new scientific tool like AlphaFold or a generative AI system, the rest of the process clogs up. Stage 1 to Stage 2 might be designed to review 100 items a quarter and pass 5% to Stage 2. But what if you have 100,000 ideas that arrive at Stage 1? Can you even evaluate all of them? Do the criteria used to pass items to Stage 2 even make sense now? Whether it is a product development process or something else, you need to rethink what you are doing and why you are doing it. That takes time, but crucially, it takes imagination.
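The arithmetic in that passage is easy to sketch. The numbers below are the ones the quote uses (100 items reviewed per quarter, 5 percent passed along, 100,000 incoming ideas); the function names are hypothetical, and the model assumes review capacity stays fixed, which is my simplification and not a claim from the essay.

```python
def quarters_to_review(ideas, capacity_per_quarter):
    """Quarters needed to review every idea at a fixed capacity (ceiling division)."""
    return -(-ideas // capacity_per_quarter)


def passed_to_stage_2(ideas, pass_rate_percent):
    """Items forwarded to Stage 2 if the same pass rate is applied to all ideas."""
    return ideas * pass_rate_percent // 100


CAPACITY = 100   # Stage 1 reviews per quarter, from the quote
PASS_RATE = 5    # percent passed to Stage 2, from the quote

for ideas in (100, 100_000):
    quarters = quarters_to_review(ideas, CAPACITY)
    forwarded = passed_to_stage_2(ideas, PASS_RATE)
    print(f"{ideas:,} ideas: {quarters:,} quarters to review, {forwarded:,} sent to Stage 2")
```

At the old capacity, 100,000 ideas would take 1,000 quarters, or 250 years, to review, and Stage 2 would receive 5,000 items instead of 5. That is the clog the quoted passage describes.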

Let’s think about this advice and consider the imagination component of the Google Olympics’ advertisement.

- Google implemented a process, spent money, did “testing,” ran the advert, and promptly withdrew it. Why? The ad was annoying to humanoids.

- Google’s “imagination” did not work. Perhaps this is a failure of the Google AI and the Google leadership? The advert succeeded in making Google the focal point of some good, old-fashioned, quite humanoid humor. Laughing at Google AI is certainly entertaining, but it appears to have been something that Google’s leadership could not “imagine.”

- The Google AI obviously reflects Google engineering choices. The parent who must turn to Google AI to demonstrate love, parental affection, and support to one’s child is, in my opinion, quite Googley. Whether the action is human or not might be an interesting topic for a coffee shop discussion. For non-Googlers, the idea of talking about what many perceived as stupid, insensitive, and inhumane is probably a non-starter. Just post on social media and move on.

Viewed in a larger context, the cited essay makes it clear that Googlers embrace AI. Googlers see others’ reaction to AI as ranging from doltish to informed. Google liked the advertisement well enough to pay other companies to show the message.

I suggest the following: Google leadership should ask several AI systems if proposed advertising copy can be more economical. That’s a Stage 1 AI function. Then Google leadership should ask several AI systems how the process of creating the ideas for an advertisement can be improved. That’s a Stage 2 AI function. And, finally, Google leadership should ask, “What can we do to prevent bonkers problems resulting from trying to pretend we understand people who know nothing and care less about the three ‘stages’ of AI understanding?”

Will that help out the Google? I don’t need to ask an AI system. I will go with my instinct. The answer is, “No.”

That’s one of the challenges Google faces. The company seems unable to help itself do anything other than sell ads, promote its AI system, and cruise along in quantumly supremeness.

Stephen E Arnold, August 8, 2024

Thoughts about the Dark Web

August 8, 2024

This essay is the work of a dumb humanoid. No smart software required.

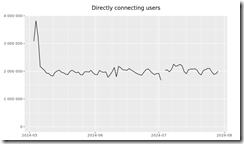

The Dark Web. Wow. YouTube offers a number of tell-all videos about the Dark Web. Articles explain the topics one can find on certain Dark Web fora. What’s forgotten is that the number of users of the Dark Web has been chugging along, neither gaining tens of millions of users nor losing tens of millions of users. Why? Here’s a traffic chart from the outfit that sort of governs The Onion Router:

Source: https://metrics.torproject.org/userstats-relay-country.html

The chart is not the snappiest item on the sprawling Torproject.org Web site, but the message seems to be that TOR has been bouncing around two million users this year. Go back in time and the number has increased, but not much. Online statistics, particularly those associated with obfuscation software, are mushy. Let’s toss in another couple million users to account for alternative obfuscation services. What happens? We are not in the tens of millions.

Our research suggests that the stability of TOR usage is due to several factors:

- The hard core bad actors comprise a small percentage of the TOR usage and probably do more outside of TOR than within it. In September 2024 I will be addressing this topic at a cyber fraud conference.

- The number of entities indexing the “Dark Web” remains relatively stable. Sure, some companies drop out of this data harvesting but the big outfits remain and their software looks a lot like a user, particularly with some of the wonky verification Dark Web sites use to block automated collection of data.

- Regular Internet users don’t pay much attention to TOR, including those with the one-click access browsers like Brave.

- Human investigators are busy looking and interacting, but the number of these professionals also remains relatively stable.

To sum up, most people know little about the Dark Web. When these individuals figure out how to access a Web site advertising something exciting like stolen credit cards or other illegal products and services, they are unaware of a simple fact: An investigator from some country may be operating like a bad actor to find a malefactor. By the way, the Dark Web is not as big as some cyber companies assert. The actual number of truly bad Dark Web sites is fewer than 100, based on what my researchers tell me.

A very “good” person approaches an individual who looks like a very tough dude. The very “good” person has a special job for the tough dude. Surprise! Thanks, MSFT Copilot. Good enough and you should know what certain professionals look like.

I read “Former Pediatrician Stephanie Russell Sentenced in Murder Plot.” The story is surprisingly not that unique. The reason I noted a female pediatrician’s involvement in the Dark Web is that she lives about three miles from my office. The story is that the good doctor visited the Dark Web and hired a hit man to terminate an individual. (Don’t doctors know how to terminate as part of their studies?)

The write up reports:

A Louisville judge sentenced former pediatrician Stephanie Russell to 12 years in prison Wednesday for attempting to hire a hitman to kill her ex-husband multiple times.

I love the somewhat illogical phrase “kill her ex-husband multiple times.”

Russell pleaded guilty April 22, 2024, to stalking her former spouse and trying to have him killed amid a protracted custody battle over their two children. By accepting responsibility and avoiding a trial, Russell would have expected a lighter prison sentence. However, she again tried to find a hitman, this time asking inmates to help with the search, prosecutors alleged in court documents asking for a heftier prison sentence.

One rumor circulating at the pub which is a popular lunch spot near the doctor’s former office is that she used the Dark Web and struck up an online conversation with one of the investigators monitoring such activity.

Net net: The Dark Web is indeed interesting.

Stephen E Arnold, August 8, 2024

Train AI on Repetitive Data? Sure, Cheap, Good Enough, But, But, But

August 8, 2024

We already know that AI algorithms are only as smart as the data that trains them. If the data models are polluted with bias such as racism and sexism, the algorithms will deliver polluted results. We’ve also learned that some of these models are biased because of innocent ignorance. Nature has revealed that AI algorithms have yet another weakness: “AI Models Collapse When Trained On Recursively Generated Data.”

Generative text AI aka large language models (LLMs) are already changing the global landscape. While generative AI is still in its infancy, AI developers are already designing the next generation. There’s one big problem: LLMs. The first versions of ChatGPT were trained on data models that scraped content from the Internet. GPT continues to train on models using the same scraping methods, but it’s creating a problem:

“If the training data of most future models are also scraped from the web, then they will inevitably train on data produced by their predecessors. In this paper, we investigate what happens when text produced by, for example, a version of GPT forms most of the training dataset of following models. What happens to GPT generations GPT-{n} as n increases? We discover that indiscriminately learning from data produced by other models causes ‘model collapse’—a degenerative process whereby, over time, models forget the true underlying data distribution, even in the absence of a shift in the distribution over time.”

The generative AI algorithms are learning from copies of copies. Over time the integrity of the information fails. The research team behind the Nature paper discovered that model collapse is inevitable even with the most ideal conditions. The team did discover two possibilities to explain model collapse: intentional data poisoning and task-free continual learning. Those don’t explain recursive data collapse with models free of those events.
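The copies-of-copies idea can be seen in a toy simulation. This is only a sketch and not the experiment from the Nature paper: a one-dimensional Gaussian stands in for a "model," each generation is fitted solely to samples drawn from the previous generation, and the sample size, generation count, and seed are arbitrary assumptions chosen for illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian to its own samples, generation after generation.

    Returns the fitted standard deviation at each generation, starting
    from the "true" distribution with mean 0 and standard deviation 1.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [sigma]
    for _ in range(generations):
        # The only training data is output from the previous generation.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


history = simulate_collapse()
print(f"spread at generation 0: {history[0]:.3f}")
print(f"spread at generation {len(history) - 1}: {history[-1]:.3e}")
```

Each refit loses a little of the tails, and the losses compound, so the fitted spread drifts toward zero and the later generations forget what the original distribution looked like.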

The team concluded that the best way for generative text AI algorithms to learn was continual interaction learning from humans. In other words, the LLMs need constant, new information created by humans to replicate their behavior. It’s simple logic when you think about it.

Whitney Grace, August 8, 2024

The Customer Is Not Right. The Customer Is the Problem!

August 7, 2024

This essay is the work of a dinobaby. Unlike some folks, no smart software improved my native ineptness.

The CrowdStrike misstep (more like a trivial event such as losing the cap to a Bic pen or misplacing an eraser) seems to be morphing into insights about customer problems. I pointed out that CrowdStrike in 2022 suggested it wanted to become a big enterprise player. The company has moved toward that goal, and it has succeeded in capturing considerable free marketing as well.

Two happy high-technology customers learn that they broke their system. The good news is that the savvy vendor will sell them a new one. Thanks, MSFT Copilot. Good enough.

The interesting failure of an estimated 8.5 million customers’ systems made CrowdStrike a household name. Among some airline passengers, creative people added more colorful language. Delta Airlines has retained a big time law firm. The idea is to sue CrowdStrike for a misstep that caused concession sales at many airports to go up. Even Panda Chinese looks quite tasty after hours spent in an airport choked with excited people, screaming babies, and stressed out over achieving business professionals.

“Microsoft Claims Delta Airlines Declined Help in Upgrading Technology After Outage” reports that like CrowdStrike, Microsoft’s attorneys want to make quite clear that Delta Airlines is the problem. Like CrowdStrike, Microsoft tried repeatedly to offer a helping hand to the airline. The airline ignored that meritorious, timely action.

Like CrowdStrike, Delta is the problem, not CrowdStrike or Microsoft whose systems were blindsided by that trivial update issue. The write up reports:

Mark Cheffo, a Dechert partner [another big-time law firm] representing Microsoft, told Delta’s attorney in a letter that it was still trying to figure out how other airlines recovered faster than Delta, and accused the company of not updating its systems. “Our preliminary review suggests that Delta, unlike its competitors, apparently has not modernized its IT infrastructure, either for the benefit of its customers or for its pilots and flight attendants,” Cheffo wrote in the letter, NBC News reported. “It is rapidly becoming apparent that Delta likely refused Microsoft’s help because the IT system it was most having trouble restoring — its crew-tracking and scheduling system — was being serviced by other technology providers, such as IBM … and not Microsoft Windows,” he added.

The language in the quoted passage, if accurate, is interesting. For instance, there is the comparison of Delta to other airlines which “recovered faster.” Delta was not able to recover faster. One can conclude that Delta’s slowness is the reason the airline was dead on the hot tarmac longer than more technically adept outfits. Among customers grounded by the CrowdStrike misstep, Delta was the problem. That Microsoft, as outstanding as its systems are, wants to make darned sure that Delta’s allegations of corporate malfeasance go nowhere fast oozes from this characterization and comparison.

Also, Microsoft’s big-time attorney has conducted a “preliminary review.” No in-depth study of fouling up the inner workings of Microsoft’s software is needed. The big-time lawyers have determined that “Delta … has not modernized its IT infrastructure.” Okay, that’s good. Attorneys are skillful evaluators of another firm’s technological infrastructure. I did not know big-time attorneys had this capability, but as a dinobaby, I try to learn something new every day.

Plus the quoted passage makes clear that Delta did not want help from either CrowdStrike or Microsoft. But the reason is clear: Delta Airlines relied on other firms like IBM. Imagine. IBM, the mainframe people, the former love buddy of Microsoft in the OS/2 days, and the creator of the TV game show phenomenon Watson.

As interesting as this assertion that Delta is to blame for making some airports absolute delights during the misstep is, it seems to me that CrowdStrike and Microsoft do not want to be in court having to explain the global impact of misplacing that ballpoint pen cap.

The other interesting facet of the approach is the idea that the best defense is a good offense. I find the approach somewhat amusing. The customer, not the people licensing software, is responsible for its problems. These vendors made an effort to help. The customer, who screwed up its own Rube Goldberg machine, did not accept these generous offers of help. Therefore, the customer caused the financial downturn, relying on outfits like the laughable IBM.

Several observations:

- The “customer is at fault” is not surprising. End user licensing agreements protect the software developer, not the outfit who pays to use the software.

- For CrowdStrike and Microsoft, a loss in court to Delta Airlines will stimulate other inept customers to seek redress from these outstanding commercial enterprises. Delta’s litigation must be stopped and quickly using money and legal methods.

- None of the yip-yap about “fault” pays much attention to the people who were directly affected by the trivial misstep. Customers, regardless of the position in the food chain of revenue, are the problem. The vendors are innocent, and they have rights too just like a person.

For anyone looking for a new legal matter to follow, the CrowdStrike Microsoft versus Delta Airlines dispute may be a replacement for assorted murders, sniping among politicians, and disputes about “get out of jail free cards.” The vloggers and the poohbahs have years of interactions to observe and analyze. Great stuff. I like the customer is the problem twist too.

Oh, I must keep in mind that I am at fault when a high-technology outfit delivers low-technology.

Stephen E Arnold, August 7, 2024

Publishers Perplexed with Perplexity

August 7, 2024

In an about-face, reports Engadget, “Perplexity Will Put Ads in its AI Search Engine and Share Revenue with Publishers.” The ads part we learned about in April, but this revenue sharing bit is new. Is it a response to recent accusations of unauthorized scraping and plagiarism? Nah, the firm insists, the timing is just a coincidence. While Perplexity won’t reveal how much of the pie it will share with publishers, the company’s chief business officer Dmitry Shevelenko described it as a “meaningful double-digit percentage.” Engadget Senior Editor Pranav Dixit writes:

“‘[Our revenue share] is certainly a lot more than Google’s revenue share with publishers, which is zero,’ Shevelenko said. ‘The idea here is that we’re making a long-term commitment. If we’re successful, publishers will also be able to generate this ancillary revenue stream.’ Perplexity, he pointed out, was the first AI-powered search engine to include citations to sources when it launched in August 2022.”

Defensive much? Dixit reminds us Perplexity redesigned that interface to feature citations more prominently after Forbes criticized it in June.

Several AI companies now have deals to pay major publishers for permission to scrape their data and feed it to their AI models. But Perplexity does not train its own models, so it is taking a piece-work approach. It will also connect advertisements to searches. We learn:

“Perplexity’s revenue-sharing program, however, is different: instead of writing publishers large checks, Perplexity plans to share revenue each time the search engine uses their content in one of its AI-generated answers. The search engine has a ‘Related’ section at the bottom of each answer that currently shows follow-up questions that users can ask the engine. When the program rolls out, Perplexity plans to let brands pay to show specific follow-up questions in this section. Shevelenko told Engadget that the company is also exploring more ad formats such as showing a video unit at the top of the page. ‘The core idea is that we run ads for brands that are targeted to certain categories of query,’ he said.”

The write-up points out the firm may have a tough time breaking into an online ad business dominated by Google and Meta. Will publishers hand over their content in the hope Perplexity is on the right track? Launched in 2022, the company is based in San Francisco.

Cynthia Murrell, August 7, 2024

AI Research: A New and Slippery Cost Center for the Google

August 7, 2024

This essay is the work of a dumb humanoid. No smart software required.

A week or so ago, I read “Scaling Exponents Across Parameterizations and Optimizers.” The write up made crystal clear that Google’s DeepMind can cook up a test, throw bodies at it, and generate a bit of “gray” literature. The objective, in my opinion, was three-fold. [1] The paper makes clear that DeepMind is thinking about its smart software’s weaknesses and wants to figure out what to do about them. And [2] DeepMind wants to keep up the flow of PR – Marketing which says, “We are really the Big Dogs in this stuff. Good luck catching up with the DeepMind deep researchers.” Note: The third item appears after the numbers.

I think the paper reveals a third and unintended consequence. This issue is made more tangible by an entity named 152334H and captured in “Calculating the Cost of a Google DeepMind Paper.” (Oh, 152334 is a deep blue black color if anyone cares.)

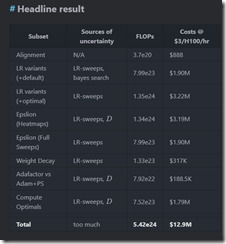

That write up presents calculations supporting this assertion:

How to burn US$10,000,000 on an arXiv preprint

The write up included this table presenting the costs to replicate what the xx Googlers and DeepMinders did to produce the ArXiv gray paper:

Notice, please, that the estimate is nearly $13 million. Anyone want to verify the Google results? What am I hearing? Crickets.

The gray paper’s 11 authors had to run the draft by review leadership and a lawyer or two. Once okayed, the document was converted to the arXiv format, and we have the findings to improve our understanding of how much work goes into the achievements of the quantumly supreme Google.

This number of $12 million and change brings me to item [3]. The paper illustrates why Google has a tough time controlling its costs. The paper is not “marketing,” because it is R&D. Some of the expense can be shuffled around. But in my book, the research is overhead, but it is not counted like the costs of cubicles for administrative assistants. It is science; it is a cost of doing business. Suck it up, you buttercups, in accounting.

The write up illustrates why Google needs as much money as it can possibly grab. These costs which are not really nice, tidy costs have to be covered. With more than 150,000 people working on projects, the cost of “gray” papers is a trigger for more costs. The compute time has to be paid for. Hello, cloud customers. The “thinking time” has to be paid for because coming up with great research is open ended and may take weeks, months, or years. One could not rush Einstein. One cannot rush Google wizards in the AI realm either.

The point of this blog post is to create a bit of sympathy for the professionals in Google’s accounting department. Those folks have a tough job figuring out how to cut costs. One cannot prevent 11 people from burning through computer time. The costs just hockey stick. Consequently the quantumly supreme professionals involved in Google cost control look for simpler, more comprehensible ways to generate sufficient cash to cover what are essentially “surprise” costs. These tools include magic wand behavior over payments to creators, smart commission tables to compensate advertising partners, and demands for more efficiency from Googlers who are not thinking big thoughts about big AI topics.

Net net: Have some awareness of how tough it is to be quantumly supreme. One has to keep the PR and Marketing messaging on track. One has to notch breakthroughs, insights, and innovations. What about that glue on the pizza thing? Answer: What?

Stephen E Arnold, August 7, 2024