Microsoft Explains Who Is at Fault If Copilot Smart Software Does Dumb Things

September 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Those Windows Central experts have delivered a doozy of a write up. “Microsoft Says OpenAI’s ChatGPT Isn’t Better than Copilot; You Just Aren’t Using It Right, But Copilot Academy Is Here to Help” explains:

Avid AI users often boast about ChatGPT’s advanced user experience and capabilities compared to Microsoft’s Copilot AI offering, although both chatbots are based on OpenAI’s technology. Earlier this year, a report disclosed that the top complaint about Copilot AI at Microsoft is that “it doesn’t seem to work as well as ChatGPT.”

I think I understand. Microsoft uses OpenAI, other smart software, and home brew code to deliver Copilot in apps, the browser, and Azure services. However, users have reported that Copilot doesn’t work as well as ChatGPT. That’s interesting. Hallucination-capable software processed by the Microsoft engineering legions is allegedly inferior to ChatGPT.

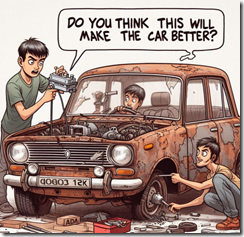

Enthusiastic young car owners replace individual parts. But the old car remains an old, rusty vehicle. Thanks, MSFT Copilot. Good enough. No, I don’t want to attend a class to learn how to use you.

Who is responsible? The answer certainly surprised me. Here’s what the Windows Central wizards offer:

A Microsoft employee indicated that the quality of Copilot’s response depends on how you present your prompt or query. At the time, the tech giant leveraged curated videos to help users improve their prompt engineering skills. And now, Microsoft is scaling things a notch higher with Copilot Academy. As you might have guessed, Copilot Academy is a program designed to help businesses learn the best practices when interacting and leveraging the tool’s capabilities.

I think this means that the user is at fault, not Microsoft’s refactored version of OpenAI’s smart software. The fix is for the user to learn how to write prompts. Microsoft is not responsible. But OpenAI’s implementation of ChatGPT is perceived as better. Furthermore, training to use ChatGPT is left to third parties. I hope I am close to the pin on this summary. OpenAI just puts Strawberries in front of hungry users and lets them gobble up ChatGPT output. Microsoft fixes up ChatGPT and users are allegedly not happy. Therefore, Microsoft puts the burden on the user to learn how to interact with the Microsoft version of ChatGPT.

I thought smart software was intended to make work easier and more efficient. Why do I have to go to school to learn Copilot when I can just pound text or a chunk of data into ChatGPT, click a button, and get an output? Not even a Palantir boot camp will lure me to the service. Sorry, pal.

My hypothesis is that Microsoft is a couple of steps away from creating something designed for regular users. In its effort to “improve” ChatGPT, the experience of using Copilot makes the user’s life more miserable. I think Microsoft’s own engineering practices act like a stuck brake on an old Lada. The vehicle has problems, so installing a new master cylinder does not improve the automobile.

Crazy thinking: That’s what the write up suggests to me.

Stephen E Arnold, September 23, 2024