Content Conversion: Search and AI Vendors Downplay the Task

November 19, 2024

No smart software. Just a dumb dinobaby. Oh, the art? Yeah, MidJourney.


Marketers and PR people often have degrees in political science, communications, or art history. This academic foundation means that some of these professionals can listen to a presentation and struggle to figure out what’s a horse, what’s horse feathers, and what’s horse output.

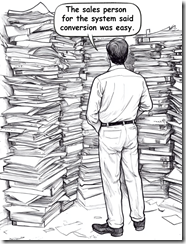

Consequently, many organizations engaged in “selling” enterprise search, smart software, and fusion-capable intelligence systems downplay or just fib about how darned easy it is to take “content” and shove it into the Fancy Dan smart software. The pitch goes something like this: “We have filters that can handle 90 percent of the organization’s content. Word, PowerPoint, Excel, Portable Document Format (PDF), HTML, XML, and data from any system that can export tab delimited content. Just import and let our system increase your ability to analyze vast amounts of content. Yada yada yada.”

Thanks, Midjourney. Good enough.

The catch is that real-life content is often a problem. I am not going to trot out my list of content problem children. Instead I want to ask a question: If dealing with content is a slam dunk, why do companies like IBM and Oracle sustain specialized tools to convert Content Type A into Content Type B?

The answer is that content processing is an essential step because [a] structured and unstructured content can exist in different versions. Figuring out the one that is least wrong and most timely is tricky. [b] Humans love mobile devices, laptops, home computers, photos, videos, and audio. Furthermore, how does one get those types of content from a source not located in an organization’s office (assuming it has one) and into the content processing system? The answer is, “Money, time, persuasion, and knowledge of which employee has what.” Finding a unicorn at the Kentucky Derby is more likely. [c] Specialized systems employ lingo like “Export as” and provide some file types. Yeah. The problem is that the output may not contain everything that is in the specialized software program. Examples range from computational chemistry systems to those nifty AutoCAD-type drawing systems to slick electronic trace routing solutions to DaVinci Resolve video systems, which can happily pull “content” from numerous places on a proprietary network setup. Yeah, no problem.

Evidence of how big this content conversion issue is appears in the IBM write up “A New Tool to Unlock Data from Enterprise Documents for Generative AI.” If the content conversion work is trivial, why is IBM wasting time and brainpower figuring out something like making a PowerPoint file smart software friendly?

The reason is that as big outfits get “into” smart software, the people working on the project find that the exception folder gets filled up. Some documents and content types don’t convert. If a boss asks, “How do we know the data in the AI system are accurate?”, the hapless IT person looking at the exception folder either lies or says in a professional voice, “We don’t have a clue.”

IBM’s write up says:

IBM’s new open-source toolkit, Docling, allows developers to more easily convert PDFs, manuals, and slide decks into specialized data for customizing enterprise AI models and grounding them on trusted information.

But one piece of software cannot do the job. That’s why IBM reports:

The second model, TableFormer, is designed to transform image-based tables into machine-readable formats with rows and columns of cells. Tables are a rich source of information, but because many of them lie buried in paper reports, they’ve historically been difficult for machines to parse. TableFormer was developed for IBM’s earlier DeepSearch project to excavate this data. In internal tests, TableFormer outperformed leading table-recognition tools.

Why are these tools needed? Here’s IBM’s rationale:

Researchers plan to build out Docling’s capabilities so that it can handle more complex data types, including math equations, charts, and business forms. Their overall aim is to unlock the full potential of enterprise data for AI applications, from analyzing legal documents to grounding LLM responses on corporate policy documents to extracting insights from technical manuals.

Based on my experience, the paragraph translates as, “This document conversion stuff is a killer problem.”

When you hear a trendy enterprise search or enterprise AI vendor talk about the wonders of its system, be sure to ask about document conversion. Here are a few questions to put the spotlight on what often becomes a black hole of costs:

- If I process 1,000 pages of PDFs, mostly text but with some charts and graphs, what’s the error rate?

- If I process 1,000 engineering drawings with embedded product and vendor data, what percentage of the content is parsed for the search or AI system?

- If I process 1,000 non-text objects like videos and iPhone images, what is the time required and the metadata accuracy for the converted objects?

- Where do unprocessable source objects go? To an exception folder, the trash bin, or my inbox for me to fix up?
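
For readers who want numbers instead of a vendor's assurances, the questions above can be turned into a small audit. What follows is a minimal sketch in Python, not any vendor's method: `audit_conversion` and `toy_convert` are hypothetical names invented for illustration, and `toy_convert` is a stand-in that you would replace with a call into whatever conversion filter is actually being evaluated. The sketch assumes a converter either returns text or raises an error.

```python
from collections import Counter
from pathlib import Path
from typing import Callable, Iterable


def audit_conversion(paths: Iterable[Path], convert: Callable[[Path], str]) -> dict:
    """Run `convert` on each path and tally outcomes by file extension.

    `convert` is an assumption: any callable that returns extracted text
    or raises an exception when it cannot handle the file.
    """
    attempted = Counter()
    failed = Counter()
    exceptions = []  # in-memory stand-in for the "exception folder"

    for path in paths:
        ext = path.suffix.lower() or "(none)"
        attempted[ext] += 1
        try:
            text = convert(path)
            # An empty result is treated as a failure, not a success.
            if not text.strip():
                raise ValueError("converter returned no text")
        except Exception as err:
            failed[ext] += 1
            exceptions.append((path, str(err)))

    failure_rate = {ext: failed[ext] / attempted[ext] for ext in attempted}
    return {
        "attempted": dict(attempted),
        "failed": dict(failed),
        "failure_rate": failure_rate,
        "exceptions": exceptions,
    }


def toy_convert(path: Path) -> str:
    """Hypothetical converter: pretends drawings and video cannot be parsed."""
    if path.suffix.lower() in {".dwg", ".mp4"}:
        raise ValueError("unsupported format")
    return f"text extracted from {path.name}"


sample = [Path("policy.pdf"), Path("deck.pptx"), Path("bracket.dwg"), Path("demo.mp4")]
report = audit_conversion(sample, toy_convert)

for ext, rate in sorted(report["failure_rate"].items()):
    print(f"{ext}: {rate:.0%} failed")
for path, reason in report["exceptions"]:
    print(f"exception folder: {path} ({reason})")
```

A tally like this only counts the files that fail loudly. It says nothing about a drawing or slide deck that “converts” but leaves half its content behind, which is the harder thing to measure and the one worth pressing the vendor on.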

Have fun asking questions.

Stephen E Arnold, November 19, 2024