The Fatal Flaw in Rules-Based Smart Software

December 17, 2024

This blog post is the work of an authentic dinobaby. No smart software was used.

As a dinobaby, I have to remember the past. Does anyone know how the “smart” software in AskJeeves worked? At one time, before the cute logo and the company followed the path of many, many other breakthrough search firms, AskJeeves used hand-crafted rules. (Oh, the reference to breakthrough is a bit of an insider joke with which I won’t trouble you.) A user would search for “weather 94401”, and the system would use the weather rule to “look up” the zip code for Foster City, California, and deliver the answer. Alternatively, when I ran the query, I could have looked out my window. AskJeeves went down a path painfully familiar to other smart software companies today: customer service. AskJeeves was acquired by IAC Corp., which moved away from the rules-based system that was “revolutionizing” search in the late 1990s.

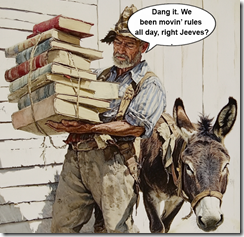

Rules-based wranglers keep busy a-fussin’ and a-changin’ all the dang time. The patient mule Jeeves just wants lunch. Thanks, MidJourney, good enough.

I read “Certain Names Make ChatGPT Grind to a Halt, and We Know Why.” The essay presents information about how the wizards at OpenAI solve problems their smart software creates. The fix is to channel the “rules-based approach,” which was pretty darned exciting decades ago. Like AskJeeves’ approach, the use of hand-crafted rules creates several problems. The cited essay focuses on the use of “rules” to avoid legal hassles created when smart software just makes stuff up.

I want to highlight several other problems with rules-based decision systems, which are far older in computer years than AskJeeves’ marketing success in 1996. Here are a few that may lurk within the OpenAI and ChatGPT smart software:

- Rules have to be created by a human in response to something another (often unpredictable) human did. Smart software gets something wrong, like saying a person is in jail or dead when he is free and undead, and someone has to write a rule to patch it.

- Rules have to be maintained. As with legacy code, setting and forgetting can have darned exciting consequences after the original rule creator changes jobs or falls into the category “in jail” or “dead.”

- Rules work with a limited set of bounded questions and answers. Rules fail when applied to the fast-changing and weird linguistic behavior of humans. If a “rule” does not know a word like “debanking”, the system will struggle, crash, or return zero results. Bummer.

- Rules seem like a great idea until someone calculates how many rules are needed, how much it costs to create a rule, and how much maintenance rules require (typically based on the cost of creating a rule in the first place). To keep the math simple, rules are expensive.
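To make the bounded-questions problem concrete, here is a minimal Python sketch of a hand-crafted rules engine. It is hypothetical: the `RULES` table, the `DICTIONARY` vocabulary, and the `answer()` function are invented for illustration and do not reflect how AskJeeves or OpenAI actually built anything. A query shaped like “weather 94401” matches a rule; a new word like “debanking” falls through and returns nothing until a human writes another entry.

```python
import re
from typing import Optional

# Hypothetical frozen vocabulary written by a human rule author.
DICTIONARY = {
    "search": "to look for something",
    "weather": "the state of the atmosphere",
}

# Hypothetical hand-crafted rules: each pairs a regex with a handler.
# Illustration only; not AskJeeves' or OpenAI's actual code.
RULES = [
    # "weather <5-digit zip>" -> canned answer mentioning the zip code
    (re.compile(r"^weather\s+(\d{5})$", re.IGNORECASE),
     lambda m: f"Forecast for zip code {m.group(1)}"),
    # "define <word>" -> dictionary lookup; unknown words yield None
    (re.compile(r"^define\s+(\w+)$", re.IGNORECASE),
     lambda m: DICTIONARY.get(m.group(1).lower())),
]


def answer(query: str) -> Optional[str]:
    """Return the first matching rule's answer, or None if no rule fits."""
    for pattern, handler in RULES:
        match = pattern.match(query.strip())
        if match:
            return handler(match)
    return None  # zero results: no human anticipated this query


print(answer("weather 94401"))     # Forecast for zip code 94401
print(answer("define debanking"))  # None: word missing from the vocabulary
print(answer("is it raining?"))    # None: no rule covers this phrasing
```

Every gap, whether a new word or a new phrasing, means another hand-written entry, which is where the creation and maintenance costs pile up.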

I liked the cited essay about OpenAI. It reminds me how darned smart today’s developers of smart software are. This dinobaby loved the article. What a great anecdote! I want to say, “OpenAI should have ‘asked Jeeves.’” I won’t. I will point out that IBM Watson, the Jeopardy winner version, was rules-based. In fact, rules are still around, and, like a patient donkey, they still carry the cost burden.

Stephen E Arnold, December 17, 2024