Salesforce to MSFT: We Are Coming, Baby Cakes

November 18, 2024

No smart software. Just a dumb dinobaby. Oh, the art? Yeah, MidJourney.

Salesforce, an outfit that hopped on the “attention” bandwagon, is now going whole hog with smart software. “Salesforce to Hire More Than 1,000 Workers to Boost AI Product Sales” makes clear that AI is going to be the company’s hook for the next hype cycle riding toward the Next Big Thing theme park.

The write up says:

Agentforce is a new layer on the Salesforce platform, designed to enable companies to build and deploy AI agents that autonomously perform tasks.

Now that’s a buzz-packed sentence: “layer,” sales call data as a “platform,” “AI agents,” “autonomously,” and smart software that can “perform tasks.”

The idea is that sales are an important part of a successful organization. The exception is that monopolies really don’t need too many sales professionals. Lawyers? Yes. Forward deployed engineers? Yes. Marketers? Yes. Door knockers? Well, probably fewer going forward.

How does the Salesforce AI system work? The answer, it seems, is simple:

These AI agents operate independently, triggered by data changes, business rules, pre-built automations, or API signals.

Who writes the rules? I wonder: Does AI write its own rules, or do specialists get an opportunity to demonstrate their ability to become essential cogs in the Salesforce customers’ machines?

What do customers do with smart Salesforce? Once again, the answer is easy to provide. The write up says:

Companies such as OpenTable, Saks and Wiley are currently utilizing Agentforce to augment their workforce and enhance customer experiences. Over the past two years, Salesforce has focused on controlling sales expenses by reducing jobs and encouraging customers to use self-service or third-party purchasing options.

I think I understand. Get rid of pesky humans, their vacations, health care, pension plans, and annoying demands for wage increases. Salesforce delivers “efficiency.”

I am not sure what to make of this set of statements. Underpinning Salesforce is a database. On top of the database sit interfaces. Now smart software promises to deliver efficiency and, obviously, another layer of “smart stuff” to provide what software and services have been promising since the days of the punched card.

Smart software, like Web search, is a natural monopoly unless specific deep-pocket outfits can create a defensible niche and sell enough smart software to that niche before some other company eats their lunch.

But isn’t that what some companies do? Eat other people’s lunches. So who’s taking those lunches tomorrow? Amazon, Google, Microsoft, or Salesforce? Maybe the lunch thief will be a pesky start up essentially off the radar of the big hungry dogs?

With AI development shifting East, is the Silicon Valley AI way the future? Heck, even Google is moving smart software to London, which is a heck of a lot easier flight to some innovative locations.

Hopefully one of the AI companies can convert billions in AI investment into new revenue and big profits in a sprightly manner. So far, I see marketing and AI dead ends. Is Salesforce, as the long gone Philco radio company used to say, “The leader”? On one hand, Salesforce is hiring. On the other, it is getting rid of employees. Okay, I think I understand.

Stephen E Arnold, November 18, 2024

Management Brilliance or Perplexing Behavior

November 15, 2024

Sorry to disappoint you, but this blog post is written by a dumb humanoid. The art? We used MidJourney.

TechCrunch published “Perplexity CEO Offers AI Company’s Services to Replace Striking NYT Staff.” The New York Times Tech Guild went on strike. Aravind Srinivas, formerly at OpenAI and founder of Perplexity, made an interesting offer. According to the cited article, Mr. Srinivas allegedly said he would provide services to “mitigate the effect of a strike by New York Times tech workers.”

A young startup luminary reacts to a book about business etiquette. His view of what’s correct is different from what others have suggested might win friends and influence people. Thanks, MidJourney. Good enough.

Two points: First, crossing the picket line seemed okay, if the story is correct. Second, the offer assumes that Perplexity’s smart software would “mitigate the effect” of the strike.

According to the article, “many” people criticized Mr. Srinivas’ offer to help a dead tree with some digital ornaments in a time of turmoil. What the former OpenAI wizard suggested he wanted to do was:

to provide technical infra support on a high traffic day.

Infra, I assume, is infrastructure. And a high-traffic day at a dead tree business is? I just don’t know. The Gray Lady has an online service and it bought an eGame which lacks the bells and whistles of Hamster Kombat. I think that Hamster Kombat has a couple of hundred million users and a revenue stream from assorted addictive elements jazzed with tokens. Could Perplexity help out Telegram if its distributed network ran into more headwinds than the detainment of its founder in France?

Furthermore, the article reminded me that the Top Dog of the dead tree outfit “sent Perplexity a cease and desist letter in October [2024] over the startup’s scraping of articles for use by its AI models.”

What interests me, however, is the outstanding public relations skills that Mr. Srinivas demonstrated. He has captured headlines with his “infra” offer. He is getting traction on Twitter, now the delightfully named X.com. He is teaching old-school executives like Tim Apple how to deal with companies struggling to adapt to the AI, go-fast approach to business.

Perplexity’s offer illustrates a conceptual divide between old school publishing, labor unions, and AI companies. Silicon Valley outfits have a deft touch. (I almost typed “tone deaf”. Yikes.)

Stephen E Arnold, November 15, 2024

A Digital Flea Market Tests Smart Software

November 14, 2024

Sales platform eBay has learned some lessons about deploying AI. The company tested three methods and shares its insights in the post, “Cutting Through the Noise: Three Things We’ve Learned About Generative AI and Developer Productivity.” Writer Senthil Padmanabhan explains:

“Through our AI work at eBay, we believe we’ve unlocked three major tracks to developer productivity: utilizing a commercial offering, fine-tuning an existing Large Language Model (LLM), and leveraging an internal network. Each of these tracks requires additional resources to integrate, but it’s not a matter of ranking them ‘good, better, or best.’ Each can be used separately or in any combination, and bring their own benefits and drawbacks.”

The company could have chosen from several existing commercial AI offerings. It settled on GitHub Copilot for its popularity with developers. That, and the eBay codebase was already on GitHub. They found the tool boosted productivity and produced mostly accurate documents (70%) and code (60%). The only problem: Copilot’s limited data processing ability makes it impractical for some applications. For now.

To tweak and train an open source LLM, the team chose Code Llama 13B. They trained the camelid on eBay’s codebase and documentation. The resulting tool reduced the time and labor required to perform certain tasks, particularly software upkeep. It could also sidestep a problem for off-the-shelf options: because it can be trained to access data across internal services and within non-dependent libraries, it can get to data the commercial solutions cannot find. Thereby, code duplication can be avoided. Theoretically.

Finally, the team used Retrieval Augmented Generation (RAG) to synthesize documentation across disparate sources into one internal knowledge base. Each piece of information entered into systems like Slack, Google Docs, and wikis automatically received its own vector embedding, which was stored in a vector database. When they queried their internal GPT, it quickly pulled together an answer from all available sources. This reduced the time and frustration of manually searching through multiple systems looking for an answer. Just one little problem: Sometimes the AI’s responses were nonsensical. Were any just plain wrong? Padmanabhan does not say.
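For readers who want to see what “received its own vector” means in practice, here is a minimal sketch of the retrieval half of RAG. It is not eBay’s system. Assumptions, stated loudly: a bag-of-words term count stands in for a real embedding model, a Python list stands in for the vector database, the three sample snippets are invented, and the names `embed`, `VectorStore`, and `build_prompt` are hypothetical. The call to the internal GPT is left out; `build_prompt` only shows how retrieved snippets get stitched into the model’s context.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in (assumption) for an embedding model: a term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items = []  # (source, text, vector) triples

    def add(self, source: str, text: str) -> None:
        # Every snippet gets its own vector at ingestion time.
        self.items.append((source, text, embed(text)))

    def search(self, query: str, k: int = 2):
        # Rank all stored snippets by similarity to the query vector.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[2]), reverse=True)
        return [(source, text) for source, text, _ in ranked[:k]]


def build_prompt(query: str, store: VectorStore, k: int = 2) -> str:
    """Assemble the retrieved snippets into the context an LLM would answer from."""
    context = "\n".join(f"[{src}] {txt}" for src, txt in store.search(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


store = VectorStore()
store.add("slack", "Deploys to the staging cluster run every night at 2 am")
store.add("gdocs", "The payments service owns refund processing and retries")
store.add("wiki", "To request staging access, file a ticket with the platform team")

print(store.search("how do I get access to staging", k=1))
```

A production setup would swap in a learned embedding model and an approximate nearest-neighbor index. Retrieval quality caps answer quality: if the top-ranked snippets are off-topic, the model can still produce nonsense.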

The post concludes:

“These three tracks form the backbone for generative AI developer productivity, and they keep a clear view of what they are and how they benefit each project. The way we develop software is changing. More importantly, the gains we realize from generative AI have a cumulative effect on daily work. The boost in developer productivity is at the beginning of an exponential curve, which we often underestimate, as the trouble with exponential growth is that the curve feels flat in the beginning.”

Okay, sure. It is all up from here. Just beware of hallucinations along the way. After all, that is one little detail that still needs to be ironed out.

Cynthia Murrell, November 14, 2024

Smart Software: It May Never Forget

November 13, 2024

A recent paper challenges the big dogs of AI, asking, “Does Your LLM Truly Unlearn? An Embarrassingly Simple Approach to Recover Unlearned Knowledge.” The study was performed by a team of researchers from Penn State, Harvard, and Amazon and published on research platform arXiv. True or false, it is a nifty poke in the eye for the likes of OpenAI, Google, Meta, and Microsoft, who may have overlooked the obvious. The abstract explains:

“Large language models (LLMs) have shown remarkable proficiency in generating text, benefiting from extensive training on vast textual corpora. However, LLMs may also acquire unwanted behaviors from the diverse and sensitive nature of their training data, which can include copyrighted and private content. Machine unlearning has been introduced as a viable solution to remove the influence of such problematic content without the need for costly and time-consuming retraining. This process aims to erase specific knowledge from LLMs while preserving as much model utility as possible.”

But AI firms may be fooling themselves about this method. We learn:

“Despite the effectiveness of current unlearning methods, little attention has been given to whether existing unlearning methods for LLMs truly achieve forgetting or merely hide the knowledge, which current unlearning benchmarks fail to detect. This paper reveals that applying quantization to models that have undergone unlearning can restore the ‘forgotten’ information.”

Oops. The team found as much as 83% of data thought forgotten was still there, lurking in the shadows. The paper offers an explanation for the problem and suggestions to mitigate it. The abstract concludes:
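Why would quantization resurrect “forgotten” material? The paper’s explanation, as I understand it, is that unlearning methods tuned to preserve utility change a model’s weights only slightly, while quantization rounds weights onto a coarse grid, so the original weights and the “unlearned” weights can collapse to the same values. The toy sketch below illustrates that intuition with four made-up weights and a made-up update. It is not the authors’ code, and real LLM quantization (for example, 4-bit schemes with per-group scales) is more involved than this uniform rounding.

```python
def quantize(weights, step):
    """Round-to-nearest uniform quantization onto a grid with spacing `step`."""
    return [round(w / step) * step for w in weights]


# Made-up "original" weights that encode some knowledge.
original = [0.52, -1.31, 0.07, 0.88]

# Hypothetical unlearning update: tiny nudges, kept small to preserve utility.
delta = [-0.01, 0.02, -0.015, 0.01]
unlearned = [w + d for w, d in zip(original, delta)]

step = 0.25  # coarse grid, loosely in the spirit of low-bit quantization
q_orig = quantize(original, step)
q_unl = quantize(unlearned, step)

print(unlearned != original)  # full precision: the weights differ
print(q_unl == q_orig)        # quantized: the difference disappears
```

The design point is the ratio: when the unlearning update is much smaller than the quantization step, rounding maps both sets of weights to the same grid points.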

“Altogether, our study underscores a major failure in existing unlearning methods for LLMs, strongly advocating for more comprehensive and robust strategies to ensure authentic unlearning without compromising model utility.”

See the paper for all the technical details. Will the big tech firms take the researchers’ advice and improve their products? Or will they continue letting their investors and marketing departments lead them by the nose?

Cynthia Murrell, November 13, 2024

Meta, AI, and the US Military: Doomsters, Now Is Your Chance

November 12, 2024

Sorry to disappoint you, but this blog post is written by a dumb humanoid.

The Zuck is demonstrating that he is an American. That’s good. I found the news report about Meta and its smart software in Analytics India magazine interesting. “After China, Meta Just Hands Llama to the US Government to ‘Strengthen’ Security” contains an interesting word pair, “after China.”

What did the article say? I noted this statement:

Meta’s stance to help government agencies leverage their open-source AI models comes after China’s rumored adoption of Llama for military use.

The write up points out:

“These kinds of responsible and ethical uses of open source AI models like Llama will not only support the prosperity and security of the United States, they will also help establish U.S. open source standards in the global race for AI leadership.” said Nick Clegg, President of Global Affairs in a blog post published from Meta.

Analytics India notes:

The announcement comes after reports that China was rumored to be using Llama for its military applications. Researchers linked to the People’s Liberation Army are said to have built ChatBIT, an AI conversation tool fine-tuned to answer questions involving the aspects of the military.

I noted this statement attributed to a “real” person at Meta:

Yann LeCun, Meta’s Chief AI scientist, did not hold back. He said, “There is a lot of very good published AI research coming out of China. In fact, Chinese scientists and engineers are very much on top of things (particularly in computer vision, but also in LLMs). They don’t really need our open-source LLMs.”

I still find the phrase “after China” interesting. Is money the motive for this open source generosity? Is it a bet on Meta’s future opportunities? No answers at the moment.

Stephen E Arnold, November 12, 2024

Meta and China: Yeah, Unauthorized Use of Llama. Meh

November 8, 2024

This post is the work of a dinobaby. If there is art, accept the reality of our using smart art generators. We view it as a form of amusement.

That open source smart software, you remember, makes everything computer- and information-centric so much better. One open source champion laboring as a marketer told me, “Open source means no more contractual handcuffs, the ability to make changes without a hassle, and evidence of the community.”

An AI-powered robot enters a meeting. One savvy executive asks in Chinese, “How are you? Are you here to kill the enemy?” Another executive, seated closer to the gas emitted from a canister marked with hazardous materials warnings, gasps, “I can’t breathe!” Thanks, Midjourney. Good enough.

How did those assertions work for China? If I can believe the “trusted” outputs of the “real” news outfit Reuters, just super cool. According to “Exclusive: Chinese Researchers Develop AI Model for Military Use on Back of Meta’s Llama,” those engaging folk of the Middle Kingdom:

… have used Meta’s publicly available Llama model to develop an AI tool for potential military applications, according to three academic papers and analysts.

Now that’s community!

The write up wobbles through some words about the alleged Chinese efforts and adds:

Meta has embraced the open release of many of its AI models, including Llama. It imposes restrictions on their use, including a requirement that services with more than 700 million users seek a license from the company. Its terms also prohibit use of the models for “military, warfare, nuclear industries or applications, espionage” and other activities subject to U.S. defense export controls, as well as for the development of weapons and content intended to “incite and promote violence”. However, because Meta’s models are public, the company has limited ways of enforcing those provisions.

In the spirit of such comments as “Senator, thank you for that question,” a Meta (aka Facebook) wizard allegedly said:

“That’s a drop in the ocean compared to most of these models (that) are trained with trillions of tokens so … it really makes me question what do they actually achieve here in terms of different capabilities,” said Joelle Pineau, a vice president of AI Research at Meta and a professor of computer science at McGill University in Canada.

My interpretation of the insight? Hey, that’s okay.

As readers of this blog know, I am not too keen on making certain information public. Unlike some outfits’ essays, Beyond Search tries to address topics without providing information of a sensitive nature. For example, search and retrieval is a hard problem. Big whoop.

But posting what I would term sensitive information as usable software for anyone to download and use strikes me as something which must be considered in a larger context; for example, a bad actor downloading an allegedly harmless penetration testing utility of the Metasploit ilk. Could a bad actor use these types of software to compromise a commercial or government system? The answer is, “Duh, absolutely.”

Meta’s founder of the super helpful Facebook wants to bring people together. Community. Kumbaya. Sharing.

That has been the lubricant for amassing power, fame, and money… Oh, also a big gold necklace similar to the ones I saw labeled “Pharaoh jewelry.”

Observations:

- Meta (Facebook) does open source for one reason: To blunt initiatives from its perceived competitors and to position itself to make money.

- Users of Meta’s properties are only data inputters and action points; that is, they are instruments.

- Bad actors love that open source software. They download it. They study it. They repurpose it to help the bad actors achieve their goals.

Did Meta include a kill switch in its open source software? Oh, sure. Meta is far-sighted, concerned with misuse of its innovations, and super duper worried about what an adversary of the US might do with that technology. On the bright side, if negotiations are required, the head of Meta (Facebook) allegedly speaks Chinese. Is that a benefit? He could talk with the weaponized robot dispensing biological warfare agents.

Stephen E Arnold, November 8, 2024

Let Them Eat Cake or Unplug: The AI Big Tech Bro Effect

November 7, 2024

I spotted a news item which will zip right by some people. The “real” news outfit owned by the lovable Jeff Bezos published “As Data Centers for AI Strain the Power Grid, Bills Rise for Everyday Customers.” The write up tries to explain that AI costs for electric power are being passed along to regular folks. Most of these electricity-dependent people do not take home paychecks worth tens of millions of dollars, as breadwinners of the Nadella, Zuckerberg, or Pichai type do. Heck, these AI poohbahs think about buying modular nuclear power plants. (I want to point out that these do not exist and may not for many years.)

The article is not going to thrill the professionals who are experts on utility demand and pricing. Those folks know that the smart software poohbahs have royally screwed up some weekends and vacations for the foreseeable future.

The WaPo article (presumably blessed by St. Jeffrey) says:

The facilities’ extraordinary demand for electricity to power and cool computers inside can drive up the price local utilities pay for energy and require significant improvements to electric grid transmission systems. As a result, costs have already begun going up for customers — or are about to in the near future, according to utility planning documents and energy industry analysts. Some regulators are concerned that the tech companies aren’t paying their fair share, while leaving customers from homeowners to small businesses on the hook.

Okay, typical “real” journospeak. “Costs have already begun going up for customers.” Hey, no kidding. The big AI parade began with the January 2023 announcement that the Softies were going whole hog on AI. The lovable Google immediately flipped into alert mode. I can visualize flashing yellow LEDs and faux red stop lights blinking in the gray corridors in Shoreline Drive facilities if there are people in those offices again. Yeah, ghostly blinking.

The write up points out, rather unsurprisingly:

The tech firms and several of the power companies serving them strongly deny they are burdening others. They say higher utility bills are paying for overdue improvements to the power grid that benefit all customers.

Who wants PEPCO and VEPCO to kill their service? Actually, no one. Imagine life in NoVa, DC, and the ever lovely Maryland without power. Yikes.

From my point of view, informed by some exposure to the utility sector at a nuclear consulting firm and then at a blue chip consulting outfit, here’s the scoop.

The demand planning done with rigor by US utilities took a hit each time the Big Dogs of AI brought more specialized, power-hungry servers online and (here’s the killer, folks) left them on. The way power consumption used to work is that during the day, consumer usage would fall and business/industry usage would rise. The power-hogging steel industry was a 24×7 outfit. But over the last 40 years, manufacturing has wound down and consumer demand has crept upwards. The curves had to be plotted and the demand projected, but, in general, life was not too crazy for the US power generation industry. Sure, there were the costs associated with decommissioning “old” nuclear plants and expanding new non-nuclear facilities with expensive but mandated environmental gewgaws, gadgets, and gizmos plugged in to save the snail darters and the frogs.

Since January 2023, demand has been curving upwards. Power generation outfits don’t want to miss out on revenue. Therefore, some utilities have worked out what I would call sweetheart deals for electricity for AI-centric data centers. Some of these puppies suck more power in a day than a dying city located in Flyover Country in Illinois.

Plus, these data centers are not enough. Each quarter the big AI dogs explain that more billions will be pumped into AI data centers. Keep in mind: These puppies run 24×7. The AI wolves have worked out discount rates.

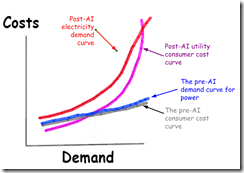

What do the US power utilities do? First, the models have to be reworked. Second, the relationships to trade, buy, or “borrow” power have to be refined. Third, capacity has to be added. Fourth, the utility rate people create a consumer pricing graph which may look like this:

Guess who will pay? Yep, consumers.

The red line is the projected post-AI electricity demand driven by the AI big dogs. For comparison, the blue line shows the demand curve before Microsoft ignited the AI wars. The gray line is consumer cost, the monthly electricity bill for Bob and Mary Normcore. The nuclear purple line shows what is happening, and will continue to happen, to consumer electricity costs.

The graph shows that the cost will be passed to consumers. Why? The sweetheart deals to get the Big Dog power generation contracts mean guaranteed cash flow and a hurdle for a low-ball utility to lumber over. Utilities like power generation are not the Neon Deions of American business.

There will be hand waving by regulators. Some city government types will argue, “We need the data centers.” Podcasts and posts on social media will sprout like weeds in an untended field.

Net net: Bob and Mary Normcore may have to decide between food and electricity. AI is wonderful, right?

Stephen E Arnold, November 7, 2024

Dreaming about Enterprise Search: Hope Springs Eternal…

November 6, 2024

The post is the work of a humanoid who happens to be a dinobaby. GenX, Y, and Z, read at your own risk. If art is included, smart software produces these banal images.

Enterprise search is back, baby. The marketing lingo is very year 2003, however. The jargon has been updated, but the story is the same: We can make an organization’s information accessible. Instead of Autonomy’s Neurolinguistic Programming, we have AI. Instead of “just text,” we have processed video content. Instead of filters, we have access to cloud-stored data.

An executive knows he can crack the problem of finding information instantly. The problem is doing it so that the time and cost of data cleanup do not exceed the cost of buying the Empire State Building. Thanks, Stable Diffusion. Good enough.

A good example of the current approach to selling the utility of an enterprise search and retrieval system is the article / interview in Betanews called “How AI Is Set to Democratize Information.” I want to be upfront. I am mostly aligned with the analysis of information and knowledge presented by Taichi Sakaiya. His The Knowledge Value Revolution or a History of the Future has been a useful work for me since the early 1990s. I was in Osaka, Japan, lecturing at the Kansai Institute of Technology when I learned of this book from my gracious hosts and the Managing Director of Kinokuniya (my sponsor). Devaluing knowledge by regressing to the fat part of a Gaussian distribution is not something about which I am excited.

However, the senior manager of Pyron (Raleigh, North Carolina), an AI-powered information retrieval company, finds the concept in line with what his firm’s technology provides to its customers. The article includes this statement:

The concept of AI as a ‘knowledge cloud’ is directly tied to information access and organizational intelligence. It’s essentially an interconnected network of systems of records forming a centralized repository of insights and lessons learned, accessible to individuals and organizations.

The benefit is, according to the Pyron executive:

By breaking down barriers to knowledge, the AI knowledge cloud could eliminate the need for specialized expertise to interpret complex information, providing instant access to a wide range of topics and fields.

The article introduces a fresh spin on the problems of information in organizations:

Knowledge friction is a pervasive issue in modern enterprises, stemming from the lack of an accessible and unified source of information. Historically, organizations have never had a singular repository for all their knowledge and data, akin to libraries in academic or civic communities. Instead, enterprise knowledge is scattered across numerous platforms and systems — each managed by different vendors, operating in silos.

Pyron opened its doors in 2017. After seven years, the company is presenting a vision of what access to enterprise information could, would, and probably should do.

The reality, based on my experience, is different. I am not talking about Pyron now. I am discussing the re-emergence of enterprise search as the killer application for bolting artificial intelligence to information retrieval. If you are in love with AI systems from oligopolists, you may want to stop scanning this blog post. I do not want to be responsible for a stroke or an esophageal spasm. Here we go:

- Silos of information are an emergent phenomenon. Knowledge has value. Few want to make their information available without some value returning to them. Therefore, one can talk about breaking silos and democratization, but those silos will be erected and protected. Secret skunk works, mislabeled projects, and squirreling away knowledge nuggets for a winter’s day. In the case of Senator Everett Dirksen, the information was used to get certain items prioritized. That’s why there is a building named after him.

- The “value” of information or knowledge depends on another person’s need. A database which contains the antidote to save a child from a household poisoning costs money to access. Why? Desperate people will pay. The “information wants to be free” idea is not one that makes sense to those with information and the knowledge to derive value from what another finds inscrutable. I am not sure that “democratizing information” meshes smoothly with my view.

- Enterprise search, with or without AI, runs into a small number of cost and time problems that have persisted for more than 50 years. SMART failed, STAIRS III failed, and the hundreds of followers have failed. Content is messy. The idea that one can process text, spreadsheets, Word files, and email is one thing. Doing it without skipping wonky files or incurring the time and cost of repurposing data remains difficult. Chemical companies deal with formulae; nuclear engineering firms deal with records management and mathematics; and consulting companies deal with highly paid people who lock up their information on a personal laptop. Without these little puddles of information, the “answer” or the “search output” will not be just a hallucination. The answer may be dead wrong.

I understand the need to whip up jargon like “democratize information”, “knowledge friction”, and “RAG frameworks”. The problem is that despite the words, delivering accurate, verifiable, timely on-point search results in response to a query is a difficult problem.

Maybe one of the monopolies will crack the problem. But most of the output is a glimpse of what may be coming in the future. When will the future arrive? Probably when the next PR or marketing write up about search appears. As I have said numerous times, I find it more difficult to locate the information I need than at any time in my more than half a century in online information retrieval.

What’s easy is recycling marketing literature from companies who were far better at describing a “to be” system, not a “here and now” system.

Stephen E Arnold, November 4, 2024

Twenty Five Percent of How Much, Google?

November 6, 2024

The post is the work of a humanoid who happens to be a dinobaby. GenX, Y, and Z, read at your own risk. If art is included, smart software produces these banal images.

I read the encomia to Google’s quarterly report. In a nutshell, everything is coming up roses, even the hyperbole. One news hook which has snagged some “real” news professionals is that “more than a quarter of new code at Google is generated by AI.” The exclamation point is implicit. Google’s AI PR is different from that of some other firms; for example, Samsung blames its financial performance disappointments on some AI. Winners and losers in a game in which some think the oligopolies are automatic winners.

An AI believer sees the future which is arriving “soon, real soon.” Thanks, You.com. Good enough because I don’t have the energy to work around your guard rails.

The question is, “How much code and technical debt does Google have after a quarter century of its court-described monopolistic behavior?” Oh, that number is unknown. How many current Google engineers fool around with that legacy code? Oh, that number is unknown and probably for very good reasons. The old crowd of wizards has been hit with retirement, cashing in and cashing out, and “leadership” nervous about fiddling with some processes that are “good enough.” But 25 years. No worries.

The big news is that 25 percent of “new” code is written by smart software and then checked by the current and wizardly professionals. How much “new” code has been written each year for the last three years? What percentage of the total Google code base was “new” in the years between 2021 and 2024? My hunch is that “new” is relative. I also surmise that smart software doing 25 percent of the work is one of those PR and Wall Street targeted assertions specifically designed to make the Google stock go up. And it worked.
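The “new is relative” point comes down to simple arithmetic: 25 percent of new code is a small slice of the whole code base unless new code is itself a large share of it. Here is a minimal sketch with made-up numbers, since the real figures are unknown; the function name and both line counts are hypothetical.

```python
def ai_share_of_codebase(total_lines: int, new_lines: int, ai_share_of_new: float = 0.25) -> float:
    """Fraction of the entire code base written by AI, if AI wrote `ai_share_of_new` of the new lines."""
    return ai_share_of_new * new_lines / total_lines


# Made-up figures for illustration only; Google's actual numbers are not public.
total = 1_000_000_000  # hypothetical total lines in the code base
new = 50_000_000       # hypothetical lines counted as "new"

print(f"AI-written share of the whole code base: {ai_share_of_codebase(total, new):.2%}")
# prints: AI-written share of the whole code base: 1.25%
```

Under these assumed numbers, the headline “25 percent” shrinks to about one percent of the total code base. Change the share of “new” code and the answer moves proportionally, which is exactly why the missing data matter.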

However, I noted this Washington Post article: “Meet the Super Users Who Tap AI to Get Ahead at Work.” Buried in that mostly rah-rah AI “real” news article, which ran coincident with Google’s AI-spinning quarterly report, is one interesting comment:

Adoption of AI at work is still relatively nascent. About 67 percent of workers say they never use AI for their jobs compared to 4 percent who say they use it daily, according to a recent survey by Gallup.

One can interpret this as saying, “Imagine the growth that is coming from reduced costs. Get rid of most coders and just use Google’s and other firms’ smart programming tools.”

Another interpretation is, “The actual use is much less robust than the AI hyperbole machine suggests.”

Which is it?

Several observations:

- Many people want AI to pump some life into the economic fuel tank. By golly, AI is going to be the next big thing. I agree, but I think the Gallup data indicate that the go go view is like looking at a field of corn from a crop duster zipping along at 1,000 feet. The perspective from the airplane differs from that of the person walking amidst the stalks.

- Google-type assertions about how much machine-written code is in the Google mix sound good, but where are the data? Google, aren’t you data driven? So, where are the backup data for the 25 percent assertion?

- Smart software seems to be something that is expensive and requires dreams of small nuclear reactors next to a data center adjacent to a hospital. Yeah, maybe once the impact statements, the nuclear waste, and the skilled worker issues have been addressed. Soon, as measured in environmental impact statement time, which is different from quarterly report time.

Net net: Google desperately wants to be the winner in smart software. The company is suggesting that if it were broken apart by crazed government officials, smart software would die. Insert the exclamation mark. Maybe two or three. That’s unlikely. The blurring of “as is” with “to be” is interesting and misleading.

Stephen E Arnold, November 6, 2024

Hey, US Government, Listen Up. Now!

November 5, 2024

This post is the work of a dinobaby. If there is art, accept the reality of our using smart art generators. We view it as a form of amusement.

Microsoft on the Issues published “AI for Startups.” The write up is authored by a dream team of individuals deeply concerned about the welfare of their stakeholders, themselves, and their corporate interests. The sensitivity is on display. Who wrote the 1,400-word essay? Setting aside the lawyers, PR people, and advisors, the authors are:

- Satya Nadella, Chairman and CEO, Microsoft

- Brad Smith, Vice-Chair and President, Microsoft

- Marc Andreessen, Cofounder and General Partner, Andreessen Horowitz

- Ben Horowitz, Cofounder and General Partner, Andreessen Horowitz

Let me highlight a couple of passages from the essay (polemic?) which I found interesting.

In the era of trustbusters, some of the captains of industry had firm ideas about the place government professionals should occupy. Look at the railroads. Look at cyber security. Look at the folks living under expressway overpasses. Tumultuous times? That’s on the money. Thanks, MidJourney. A good enough illustration.

Here’s the first snippet:

Artificial intelligence is the most consequential innovation we have seen in a generation, with the transformative power to address society’s most complex problems and create a whole new economy—much like what we saw with the advent of the printing press, electricity, and the internet.

This is a bold statement of the thesis for these intellectual captains of the smart software revolution. I am curious about how one gets from hallucinating software to “the transformative power to address society’s most complex problems and create a whole new economy.” Furthermore, is smart software like printing, electricity, and the Internet? A fact or two might be appropriate. Heck, I would be happy with a nifty Excel chart of some supporting data. But why? This is the first sentence, so back off, you ignorant dinobaby.

The second snippet is:

Ensuring that companies large and small have a seat at the table will better serve the public and will accelerate American innovation. We offer the following policy ideas for AI startups so they can thrive, collaborate, and compete.

Ah, companies large and small and a seat at the table, just possibly down the hall from where the real meetings take place behind closed doors. And the hosts of the real meeting? Big companies like us. As the essay says, “that only a Big Tech company with our scope and size can afford, creating a platform that is affordable and easily accessible to everyone, including startups and small firms.”

The policy “opportunity” for AI startups includes many glittering generalities. The one I like is “help people thrive in an AI-enabled world.” Does that mean universal basic income as smart software “enhances” jobs with McKinsey-like efficiency? Hey, it worked for opioids. It will work for AI.

And what’s a policy statement without a variation on “May you live in interesting times”? The Microsoft a2z twist is, “We obviously live in a tumultuous time.” That’s why the US Department of Justice, the European Union, and a few other Luddites who don’t grok certain behaviors are interested in the big firms which can do smart software right.

Translation: Get out of our way and leave us alone.

Stephen E Arnold, November 5, 2024