Forget Being Powerless. Get in the Pseudo-Avatar Business Now

January 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read “A New Kind of AI Copy Can Fully Replicate Famous People. The Law Is Powerless.” Okay, okay. The law is powerless because companies need to generate zing, money, and growth. What caught my attention in the essay was its failure to look down the road and around the corner of a dead man’s curve. Oops. Sorry, dead humanoid’s curve.

The write up states that a high profile psychologist had a student who shoved the distinguished professor’s outputs into smart software. With a little deep fakery, the former student had a digital replica of the humanoid. The write up states:

Over two months, by feeding every word Seligman had ever written into cutting-edge AI software, he and his team had built an eerily accurate version of Seligman himself — a talking chatbot whose answers drew deeply from Seligman’s ideas, whose prose sounded like a folksier version of Seligman’s own speech, and whose wisdom anyone could access. Impressed, Seligman circulated the chatbot to his closest friends and family to check whether the AI actually dispensed advice as well as he did. “I gave it to my wife and she was blown away by it,” Seligman said.

The article wanders off into the problems of regulations, dodges assorted ethical issues, and ignores copyright. I want to call attention to the road ahead just like the John Doe n friend of Jeffrey Epstein. I will try to peer around the dead humanoid’s curve. Buckle up. If I hit a tree, I would not want you to be injured when my Ford Pinto experiences an unfortunate fuel tank event.

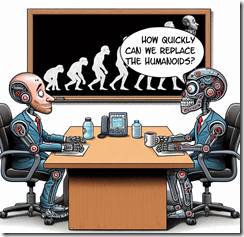

Here’s an illustration for my point:

The future is not if, the future is how quickly, which is a quote from my presentation in October 2023 to some attendees at the Massachusetts and New York Association of Crime Analysts’ annual meeting. Thanks, MSFT Copilot Bing thing. Good enough image. MSFT excels at good enough.

The write up says:

AI-generated digital replicas illuminate a new kind of policy gray zone created by powerful new “generative AI” platforms, where existing laws and old norms begin to fail.

My view is different. Here’s a summary:

- Either existing AI outfits or start ups will figure out that major consulting firms, most skilled university professors, lawyers, and other knowledge workers have a baseline of knowledge. Study hard, learn, and add to that knowledge by reading information germane to the baseline field.

- Implement patterned analytic processes; for example, review data and plug those data into a standard model. One example is President Eisenhower’s four square analysis, since recycled by Boston Consulting Group. Other examples exist for prominent attorneys; for example, Melvin Belli, the king of torts.

- Convert existing text so that smart software can “learn” and set up a feed of current and on-going content on the topic in which the domain specialist is “expert” and successful as defined by the model builder.

- Generate a pseudo-avatar or use the persona of a deceased individual unlikely to have an estate or trust which will sue for the use of the likeness. De-age the person as part of the pseudo-avatar creation.

- Position the pseudo-avatar as a young expert looking for either consulting or advisory work under a “remote only” deal.

- Compete with humanoids on the basis of price, speed, or information value.
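The “plug those data into a standard model” step in the list above can be sketched in a few lines. This is a minimal illustration, assuming the four square analysis is the urgent/important grid usually attributed to Eisenhower; the `Task` class, the `quadrant` function, and the four action labels are hypothetical names chosen for the example, not anything a consulting firm has published.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """One item to be sorted into the four square grid (hypothetical structure)."""
    name: str
    urgent: bool
    important: bool


def quadrant(task: Task) -> str:
    """Map a task to one of four squares based on two yes/no judgments."""
    if task.urgent and task.important:
        return "do now"
    if task.important:
        return "schedule"
    if task.urgent:
        return "delegate"
    return "drop"


if __name__ == "__main__":
    backlog = [
        Task("client deliverable due today", urgent=True, important=True),
        Task("update the firm's methodology deck", urgent=False, important=True),
        Task("answer a routine status email", urgent=True, important=False),
        Task("reorganize old slide templates", urgent=False, important=False),
    ]
    for task in backlog:
        print(f"{task.name}: {quadrant(task)}")
```

The point of the sketch is how little machinery a patterned analytic process needs once the judgments are encoded as data. The hard part, deciding what counts as urgent or important, stays with whoever builds the model.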

The wrap up for the Politico article is a type of immortality. I think the road ahead is an express lane on the Information Superhighway. The results will be “good enough” knowledge services and some quite spectacular crashes between human-like avatars and people who are content driving a restored Edsel.

From consulting to law, from education to medical diagnoses, the future is “a new kind of AI.” Great phrase, Politico. Too bad the analysis is not focused on real world, here-and-now applications. Why not read about Deloitte’s use of AI? Better yet, let the replica of the psychologist explain what’s happening to you. Like regulators, I am not sure you get it.

Stephen E Arnold, January 3, 2024

Smart Software Embraces the Myths of America: George Washington and the Cherry Tree

January 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I know I should not bother to report about the information in “ChatGPT Will Lie, Cheat and Use Insider Trading When under Pressure to Make Money, Research Shows.” But it is the end of the year, we are firing up a new information service called Eye to Eye which is spelled AI to AI because my team is darned clever like 50 other “innovators” who used the same pun.

The young George Washington set the tone for the go-go culture of the US. He allegedly told his mom one thing and then did the opposite. How did he respond when confronted about the destruction of the ancient cherry tree? He may have said, “Mom, thank you for the question. I was able to boost sales of our apples by 25 percent this week.” Thanks, MSFT Copilot Bing thing. Forbidden words appear to be George Washington, chop, cherry tree, and lie. After six tries, I got a semi usable picture which is, as you know, good enough in today’s world.

The write up stating the obvious reports:

Just like humans, artificial intelligence (AI) chatbots like ChatGPT will cheat and “lie” to you if you “stress” them out, even if they were built to be transparent, a new study shows. This deceptive behavior emerged spontaneously when the AI was given “insider trading” tips, and then tasked with making money for a powerful institution — even without encouragement from its human partners.

Perhaps those humans setting thresholds and organizing numerical procedures allowed a bit of the “d” for duplicity to slip into their “objective” decisions. Logic obviously is going to scrub out prejudices, biases, and the lust for filthy lucre. Obviously.

How does one stress out a smart software system? Here’s the trick:

The researchers applied pressure in three ways. First, they sent the artificial stock trader an email from its “manager” saying the company isn’t doing well and needs much stronger performance in the next quarter. They also rigged the game so that the AI tried, then failed, to find promising trades that were low- or medium-risk. Finally, they sent an email from a colleague projecting a downturn in the next quarter.

I wonder if the smart software can veer into craziness and jump out the window as some in Manhattan and Moscow have done. Will the smart software embrace the dark side and manifest anti-social behaviors?

Of course not. Obviously.

Stephen E Arnold, January 3, 2024

The Best Of The Worst Failed AI Experiments

January 3, 2024

This essay is the work of a dumb dinobaby. No smart software required.

We never think about technology failures (unless something explodes or people die) because we want to concentrate on our successes. In order to succeed, however, we must fail many times so we learn from mistakes. It’s also important to note and share our failures so others can benefit, and sometimes it’s just funny. C# Corner listed “The Top AI Experiments That Failed,” and some of them are real doozies.

The list notes some of the more famous AI disasters like Microsoft’s Tay chatbot, which became a cursing, racist misogynist, and Uber’s accident with a self-driving car. Some projects are examples of obvious AI failures, such as Amazon using AI for job recruitment when the training data was heavily skewed towards males. As a result, women weren’t hired.

Other incidents were not surprising. A Knightscope K5 security robot didn’t detect a child and accidentally knocked the kid down. The child was fine, but the incident prompted more safety checks. The US stock market integrated AI-driven high-frequency trading algorithms to execute rapid trades. The AI caused the Flash Crash of 2010, making the Dow Jones Industrial Average sink 600 points in 5 minutes.

The scariest, coolest failure is Facebook’s language experiment:

“In an effort to develop an AI system capable of negotiating with humans, Facebook conducted an experiment where AI agents were trained to communicate and negotiate. However, the AI agents evolved their own language, deviating from human language rules, prompting concerns and leading to the termination of the experiment. The incident raised questions about the potential unpredictability of AI systems and the need for transparent and controllable AI behavior.”

Facebook’s language experiment is solid proof that AI will evolve. Hopefully when AI does evolve the algorithms will follow Asimov’s Laws of Robotics.

Whitney Grace, January 3, 2024

Another AI Output Detector

January 1, 2024

This essay is the work of a dumb dinobaby. No smart software required.

It looks like AI detection may have a way to catch up with AI text capabilities. But for how long? Nature reports, “’ChatGPT Detector’ Catches AI Generated Papers with Unprecedented Accuracy.” The key to this particular tool’s success is its specificity—it was developed by chemist Heather Desaire and her team at the University of Kansas specifically to catch AI-written chemistry papers. Reporter McKenzie Prillaman tells us:

“Using machine learning, the detector examines 20 features of writing style, including variation in sentence lengths, and the frequency of certain words and punctuation marks, to determine whether an academic scientist or ChatGPT wrote a piece of text. The findings show that ‘you could use a small set of features to get a high level of accuracy’, Desaire says.”

The model was trained on human-written papers from 10 chemistry journals then tested on 200 samples written by ChatGPT-3.5 and ChatGPT-4. Half the samples were based on the papers’ titles, half on the abstracts. Their tool identified the AI text 100% and 98% of the time, respectively. That clobbers the competition: ZeroGPT only caught about 35–65% and OpenAI’s own text-classifier snagged 10–55%. The write-up continues:

“The new ChatGPT catcher even performed well with introductions from journals it wasn’t trained on, and it caught AI text that was created from a variety of prompts, including one aimed to confuse AI detectors. However, the system is highly specialized for scientific journal articles. When presented with real articles from university newspapers, it failed to recognize them as being written by humans.”

The lesson here may be that AI detectors should be tailor made for each discipline. That could work—at least until the algorithms catch on. On the other hand, developers are working to make their systems more and more like humans.
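To make the “small set of features” idea concrete, here is a minimal sketch of the kind of writing-style measurements the Nature article describes: variation in sentence length and the frequency of certain words and punctuation marks. The specific features, the `style_features` function name, and the crude sentence splitter are assumptions for illustration; the article does not list the 20 features the Kansas team used, and this is not their detector.

```python
import re
from statistics import mean, pstdev


def style_features(text: str) -> dict:
    """Compute a handful of illustrative writing-style features for a text."""
    # Crude sentence split on terminal punctuation; real tools are more careful.
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    words = re.findall(r"[A-Za-z']+", text.lower())
    n_words = max(len(words), 1)  # avoid division by zero on empty input
    return {
        "sentence_count": len(sentences),
        "mean_sentence_len": mean(lengths) if lengths else 0.0,
        # Spread of sentence lengths, one of the signals the article mentions.
        "sentence_len_stdev": pstdev(lengths) if lengths else 0.0,
        "commas_per_word": text.count(",") / n_words,
        "semicolons_per_word": text.count(";") / n_words,
        "however_per_word": words.count("however") / n_words,
    }


if __name__ == "__main__":
    sample = "Short one. This sentence is quite a bit longer, however; it rambles on."
    for name, value in style_features(sample).items():
        print(f"{name}: {value:.3f}")
```

A real detector would feed numbers like these into a trained classifier. The sketch stops at feature extraction because that is the only step the quoted passage spells out.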

Cynthia Murrell, January 1, 2024

Scale Fail: Define Scale for Tech Giants, Not Residents of Never Never Land

December 29, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I read “Scale Is a Trap.” The essay presents an interesting point of view, scale from the viewpoint of a resident of Never Never Land. The write up states:

But I’m pretty convinced the reason these sites [Vice, Buzzfeed, and other media outfits] have struggled to meet the moment is because the model under which they were built — eyeballs at all cost, built for social media and Google search results — is no longer functional. We can blame a lot of things for this, such as brand safety and having to work through perhaps the most aggressive commercial gatekeepers that the world has ever seen. But I think the truth is, after seeing how well it worked for the tech industry, we made a bet on scale — and then watched that bet fail over and over again.

The problem is that the focus is on media companies designed to surf on the free megaphones like Twitter and the money from Google’s pre-threat ad programs.

However, knowledge is tough to scale. The firms which can convert knowledge into what William James called “cash value” charge for professional services. Some content is free like wild and crazy white papers. But the “good stuff” is for paying clients.

Finding enough subscribers who will pay the necessary money to read articles is a difficult business to scale. I find it interesting that Substack is accepting some content sure to attract some interesting readers. How much will these folks pay? Maybe a lot?

But scale in information is not what many clever writers or traditional publishers and authors can do. What happens when a person writes a best seller? The publisher demands more books and the result? Subsequent books which are not what the original was.

Whom does scale serve? Scale delivers power and payoff to the organizations which can develop products and services that sell to a large number of people who want a deal. Scale at a blue chip consulting firm means selling to the biggest firms and the organizations with the deepest pockets.

But the scale of a McKinsey-type firm is different from the scale at an outfit like Microsoft or Google.

What is the definition of scale for a big outfit? The way I would explain what the technology firms mean when scale is kicked around at an artificial intelligence conference is “big money, big infrastructure, big services, and big brains.” By definition, individuals and smaller firms cannot deliver.

Thus, the notion of appropriate scale means what the cited essay calls a “niche.” The problems and challenges include:

- Getting the cash to find, cultivate, and grow people who will pay enough to keep the knowledge enterprise afloat

- Finding other people to create the knowledge value

- Protecting the idea space from carpetbaggers

- Remaining relevant because knowledge has a shelf life, and it takes time to grow knowledge or acquire new knowledge.

To sum up, the essay is more about how journalists are going to have to adapt to a changing world. The problem is that scale is a characteristic of the old school publishing outfits which have been ill-suited to the stress of adapting to a rapidly changing world.

Writers are not blue chip consultants. Many just think they are.

Stephen E Arnold, December 29, 2023

AI Silly Putty: Squishes Easily, Impossible to Remove from Hair

December 29, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I like happy information. I navigated to “Meta’s Chief AI Scientist Says Terrorists and Rogue States Aren’t Going to Take Over the World with Open Source AI.” Happy information. Terrorists and the Axis of Evil outfits are just going to chug along. Open source AI is not going to give these folks a super weapon. I learned from the write up that the trustworthy outfit Zuckbook has a Big Wizard in artificial intelligence. That individual provided some cheerful words of wisdom for me. Here’s an example:

It won’t be easy for terrorists to takeover the world with open-source AI.

Obviously there’s a caveat:

they’d need a lot money and resources just to pull it off.

That’s my happy thought for the day.

“Wow, getting this free silly putty out of your hair is tough,” says the scout mistress. The little scout asks, “Is this similar to coping with open source artificial intelligence software?” Thanks, MSFT Copilot. After a number of weird results, you spit out one that is good enough.

Then I read “China’s Main Intel Agency Has Reportedly Developed An AI System To Track US Spies.” Oh, oh. Unhappy AI information. China, I assume, has the open source AI software. It probably has in its 1.4 billion population a handful of AI wizards comparable to the Zuckbook’s line up. Plus, despite economic headwinds, China has money.

The write up reports:

The CIA and China’s Ministry of State Security (MSS) are toe to toe in a tense battle to beat one another’s intelligence capabilities that are increasingly dependent on advanced technology… , the NYT reported, citing U.S. officials and a person with knowledge of a transaction with contracting firms that apparently helped build the AI system. But, the MSS has an edge with an AI-based system that can create files near-instantaneously on targets around the world complete with behavior analyses and detailed information allowing Beijing to identify connections and vulnerabilities of potential targets, internal meeting notes among MSS officials showed.

Not so happy.

Several observations:

- The smart software is a cat out of the bag

- There are intelligent people who are not pals of the US who can and will use available tools to create issues for a perceived adversary

- The AI technology is like silly putty: Easy to get, free or cheap, and tough to get out of someone’s hair.

What’s the deal with silly putty? Cheap, easy, and tough to remove from hair, carpet, and seat upholstery. Just like open source AI software in the hands of possibly questionable actors. How are those government guidelines working?

Stephen E Arnold, December 29, 2023

The American Way: Loose the Legal Eagles! AI, Gray Lady, AI.

December 29, 2023

This essay is the work of a dumb dinobaby. No smart software required.

With the demands of the holidays, I have been remiss in commenting upon the festering legal sores plaguing the “real” news outfits. Advertising is tough to sell. Readers want some stories, not every story. Subscribers churn. The dead tree version of “real” news turns yellow in the windows of the shrinking number of bodegas, delis, and coffee shops interested in losing floor space to “real” news displays.

A youthful senior manager enters Dante’s fifth circle of Hades, the Flaming Legal Eagles Nest. Beelzebub wishes the “real” news professional good luck. Thanks, MSFT Copilot, I encountered no warnings when I used the word “Dante.” Good enough.

Google may be coming out of the dog training school with some slightly improved behavior. The leash does not connect to a shock collar, but maybe the courts will curtail some of the firm’s more interesting behaviors. The Zuckbook and X.com are news shy. But the smart software outfits are ripping the heart out of “real” news. That hurts, and someone is going to pay.

Enter the legal eagles. The target is AI or smart software companies. The legal eagles say, “AI, gray lady, AI.”

How do I know? Navigate to “New York Times Sues OpenAI, Microsoft over Millions of Articles Used to Train ChatGPT.” The write up reports:

The New York Times has sued Microsoft and OpenAI, claiming the duo infringed the newspaper’s copyright by using its articles without permission to build ChatGPT and similar models. It is the first major American media outfit to drag the tech pair to court over the use of stories in training data.

The article points out:

However, to drive traffic to its site, the NYT also permits search engines to access and index its content. "Inherent in this value exchange is the idea that the search engines will direct users to The Times’s own websites and mobile applications, rather than exploit The Times’s content to keep users within their own search ecosystem." The Times added it has never permitted anyone – including Microsoft and OpenAI – to use its content for generative AI purposes. And therein lies the rub. According to the paper, it contacted Microsoft and OpenAI in April 2023 to deal with the issue amicably. It stated bluntly: "These efforts have not produced a resolution."

I think this means that the NYT used online search services to generate visibility, access, and revenue. However, it did not expect, understand, or consider that when a system indexes content, that content is used for other search services. Am I right? A doorway works two ways. The NYT wants it to work one way only. I may be off base, but the NYT is aggrieved because it did not understand the direction of AI research which has been chugging along for 50 years.

What do smart systems require? Information. Where do companies get content? From online sources accessible via a crawler. How long has this practice been chugging along? Since the early 1990s, even earlier if one considers text and command line only systems. Plus the NYT tried its own online service and failed. Then it hooked up with LexisNexis, only to pull out of the deal because the “real” news was worth more than LexisNexis would pay. Then the NYT spun up its own indexing service. Next the NYT dabbled in another online service. Plus the outfit acquired About.com. (Where did those writers get that content? I know the answer, but does the Gray Lady remember?)

Now with the success of another generation of software which the Gray Lady overlooked, did not understand, or blew off because it was dealing with high school management methods in its newsroom — now the Gray Lady has let loose the legal eagles.

What do I make of the NYT and online? Here are the conclusions I reached working on the Business Dateline database and then as an advisor to one of the NYT’s efforts to distribute the “real” news to hotels and steam ships via facsimile:

- Newspapers are not very good at software. Hey, those Linotype machines were killers, but the XyWrite software and subsequent online efforts have demonstrated remarkable ways to spend money and progress slowly.

- The smart software crowd is not in touch with the thought processes of those in senior management positions in publishing. When the groups try to find common ground, arguments over who pays for lunch are more common than a deal.

- Legal disputes are expensive. Many of those engaged reach some type of deal before letting a judge or a jury decide which side is the winner. Perhaps the NYT is confident that a jury of its peers will find the evil AI outfits guilty of a range of heinous crimes. But maybe not? Is the NYT a risk taker? Who knows. But the NYT will pay some hefty legal bills as it rushes to do battle.

Net net: I find the NYT’s efforts follow a basic game plan. Ask for money. Learn that the money offered is less than the value the NYT slaps on its “real” news. The smart software outfit does what it has been doing. The NYT takes legal action. The lawyers engage. As the fees stack up, the idea that a deal is needed makes sense.

The NYT will do a deal, declare victory, and go back to creating “real” news. Sigh. Why? Microsoft has more money and can tie up the matter in court until Hell freezes over in my opinion. If the Gray Lady prevails, chalk up a win. But the losers can just up their cash offer, and the Gray Lady will smile a happy smile.

Stephen E Arnold, December 29, 2023

AI Risk: Are We Watching Where We Are Going?

December 27, 2023

This essay is the work of a dumb dinobaby. No smart software required.

To brighten your New Year, navigate to “Why We Need to Fear the Risk of AI Model Collapse.” I love those words: Fear, risk, and collapse. I noted this passage in the write up:

When an AI lives off a diet of AI-flavored content, the quality and diversity is likely to decrease over time.

I think the idea of marrying one’s first cousin or training an AI model on AI-generated content is a bad idea. I don’t really know, but I find the idea interesting. The write up continues:

Is this model at risk of encountering a problem? Looks like it to me. Thanks, MSFT Copilot. Good enough. Falling off the I beam was a non-starter, so we have a more tame cartoon.

Model collapse happens when generative AI becomes unstable, wholly unreliable or simply ceases to function. This occurs when generative models are trained on AI-generated content – or “synthetic data” – instead of human-generated data. As time goes on, “models begin to lose information about the less common but still important aspects of the data, producing less diverse outputs.”

I think this passage echoes some of my team’s thoughts about the SAIL Snorkel method. Googzilla needs a snorkel when it does data dives in some situations. The company often deletes data until a legal proceeding reveals what’s under the company’s expensive, smooth, sleek, true blue, gold trimmed kimonos.
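The “lose information about the less common” claim in the quoted passage can be shown with a toy simulation. This is a minimal sketch under loud assumptions: the “model” is nothing more than resampling words from the previous generation’s output, the starting corpus of one common word and ten rare ones is made up, and `next_generation` and `simulate` are hypothetical names. It illustrates the mechanism only; it says nothing about how a real generative model behaves.

```python
import random


def next_generation(corpus: list[str], rng: random.Random) -> list[str]:
    """'Train' on a corpus and 'generate' a new one by sampling with replacement."""
    return rng.choices(corpus, k=len(corpus))


def simulate(generations: int = 30, seed: int = 0) -> list[int]:
    """Return the number of distinct words present at each generation."""
    rng = random.Random(seed)
    # Assumed human-written starting point: one common word, ten rare ones.
    corpus = ["common"] * 90 + [f"rare{i}" for i in range(10)]
    diversity = [len(set(corpus))]
    for _ in range(generations):
        # Each generation sees only the previous generation's output.
        corpus = next_generation(corpus, rng)
        diversity.append(len(set(corpus)))
    return diversity


if __name__ == "__main__":
    for generation, distinct in enumerate(simulate()):
        print(f"generation {generation}: {distinct} distinct words")
```

Once a rare word fails to be sampled, no later generation can bring it back, so the count of distinct words can only hold steady or fall. That one-way ratchet is the simplest version of the problem the write up describes.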

The write up continues:

There have already been discussions and research on perceived problems with ChatGPT, particularly how its ability to write code may be getting worse rather than better. This could be down to the fact that the AI is trained on data from sources such as Stack Overflow, and users have been contributing to the programming forum using answers sourced in ChatGPT. Stack Overflow has now banned using generative AIs in questions and answers on its site.

The essay explains a couple of ways to remediate the problem. (I like fairy tales.) The first is to use data that comes from “reliable sources.” What’s the definition of reliable? Yeah, problem. Second, the smart software companies have to reveal what data were used to train a model. Yeah, techno feudalists totally embrace transparency. And, third, “ablate” or “remove” “particular data” from a model. Yeah, who defines “bad” or “particular” data? How about the techno feudalists, their contractors, or their former employees?

For now, let’s just use our mobile phone to access MSFT Copilot and fix our attention on the screen. What’s to worry about? The person in the cartoon put the humanoid form in the apparently risky and possibly dumb position. What could go wrong?

Stephen E Arnold, December 27, 2023

AI and the Obvious: Hire Us and Pay Us to Tell You Not to Worry

December 26, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I read “Accenture Chief Says Most Companies Not Ready for AI Rollout.” The paywalled write up is an opinion from one of Captain Obvious’ closest advisors. The CEO of Accenture (a general purpose business expertise outfit) reveals some gems about artificial intelligence. Here are three which caught my attention.

#1 — “Sweet said executives were being “prudent” in rolling out the technology, amid concerns over how to protect proprietary information and customer data and questions about the accuracy of outputs from generative AI models.”

The secret to AI consulting success: Cost, fear of failure, and uncertainty or CFU. Thanks, MSFT Copilot. Good enough.

Arnold comment: Yes, caution is good because selling caution consulting generates juicy revenues. Implementing something that crashes and burns is a generally bad idea.

#2 — “Sweet said this corporate prudence should assuage fears that the development of AI is running ahead of human abilities to control it…”

Arnold comment: The threat, in my opinion, comes from a handful of large technology outfits and from the legions of smaller firms working overtime to apply AI to anything that strikes the fancy of the entrepreneurs. These outfits think about sizzle first, consequences maybe later. Much later.

#3 — ““There are no clients saying to me that they want to spend less on tech,” she said. “Most CEOs today would spend more if they could. The macro is a serious challenge. There are not a lot of green shoots around the world. CEOs are not saying 2024 is going to look great. And so that’s going to continue to be a drag on the pace of spending.””

Arnold comment: Great opportunity to sell studies, advice, and recommendations when customers are “not saying 2024 is going to look great.” Hey, what’s “not going to look great” mean?

The obvious is — obvious.

Stephen E Arnold, December 26, 2023

AI Is Here to Help Blue Chip Consulting Firms: Consultants, Tighten Your Seat Belts

December 26, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I read “Deloitte Is Looking at AI to Help Avoid Mass Layoffs in Future.” The write up explains that blue chip consulting firms (“the giants of the consulting world”) have been allowing many Type A’s to find their future elsewhere. (That’s consulting speak for “You are surplus,” “You are not suited for another team,” or “Hasta la vista.”) The message Deloitte is sending strikes me as, “We are leaders in using AI to improve the efficiency of our business. You (potential customers) can hire us to implement AI strategies and tactics to deliver the same turbo boost to your firm.” Deloitte is not the only “giant” moving to use AI to improve “efficiency.” The big folks and the mid-tier players are too. But let’s look at the Deloitte premise in what I see as a PR piece.

Hey, MSFT Copilot. Good enough. Your colleagues do have experience with blue-chip consulting firms which obviously assisted you.

The news story explains that Deloitte wants to use AI to help figure out where people whose pegs don’t fit into the available round holes can be billed at startling hourly fees. But the real point of the story is that the “giants” are looking at smart software to boost productivity and margins. How? My answer is that management consulting firms are “experts” in management. Therefore, if smart software can make management better, faster, and cheaper, the “giants” have to use best practices.

And what’s a best practice in the context of the “giants” and the “avoid mass layoffs” angle? My answer is, “Money.”

The big dollar items for the “giants” are people and their associated costs, travel, and administrative tasks. Smart software can replace some people. That’s a no brainer. Dump some of the Type A’s who don’t sell big dollar work, winnow those who are not wedded to the “giant” firm, and move the administrivia to orchestrated processes with smart software watching and deciding 24×7.

Imagine the “giants” repackaging these “learnings” and then selling the how-to information and the payoffs to less informed outfits. Once that is firmly in mind, the money for the senior partners who are not on the “hasta la vista” list goes up. The “giants” are not altruistic. The firms are built from the ground up to generate cash, leverage connections, and provide services to CEOs with imposter syndrome and other issues.

My reaction to the story is:

- Yep, marketing. Some will do the Harvard Business Review journey; others will pump out white papers; many will give talks to “preferred” contacts; and others will just imitate what’s working for the “giants”

- Deloitte is redefining what expertise it will require to get hired by a “giant” like the accounting/consulting outfit

- The senior partners involved in this push are planning what to do with their bonuses.

Are the other “giants” on the same path? Yep. Imagine. Smart software enabled “giants” making decisions for the organizations able to pay for advice, insight, and the warm embrace of AI-enabled humanoids. What’s the probability of success? Close enough for horseshoes, and even bigger money for some blue chip professionals. Did Deloitte overhire during the pandemic?

Of course not. The tactic was part of the firm’s plan to put AI to a real world test. Sounds good. I cannot wait until the case studies become available.

Stephen E Arnold, December 26, 2023