Viruses Get Intelligence Upgrade When Designed With AI

March 21, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

Viruses are still a common problem on the Internet despite all the PSAs, firewalls, antiviral software, and other precautions users take to protect their technology and data. Intelligent and adaptable viruses have remained a concept of science-fiction but bad actors are already designing them with AI. It’s only going to get worse. Tom’s Hardware explains that an AI virus is already wreaking havoc: “AI Worm Infects Users Via AI-Enabled Email Clients-Morris II Generative AI Worm Steals Confidential Data As It Spreads.”

The Morris II Worm was designed by researchers Ben Nassi of Cornell Tech, Ron Button from Intuit, and Stav Cohen from the Israel Institute of Technology. They built the worm to understand how to better combat bad actors. The researchers named it after the first computer worm Morris. The virus is a generative AI for that steals data, spams with email, spreads malware, and spreads to multiple systems.

Morris II attacks AI apps and AI-enabled email assistants that use generative text and image engines like ChatGPT, LLaVA, and Gemini Pro. It also uses adversarial self-replicating prompts. The researchers described Morris II’s attacks:

“ ‘The study demonstrates that attackers can insert such prompts into inputs that, when processed by GenAI models, prompt the model to replicate the input as output (replication) and engage in malicious activities (payload). Additionally, these inputs compel the agent to deliver them (propagate) to new agents by exploiting the connectivity within the GenAI ecosystem. We demonstrate the application of Morris II against GenAI-powered email assistants in two use cases (spamming and exfiltrating personal data), under two settings (black-box and white-box accesses), using two types of input data (text and images).’”

The worm continues to harvest information and update it in databases. The researchers shared their information with OpenAI and Google. OpenAI responded by saying the organization will make its systems more resilient and advises designers to watch out for harmful inputs. The advice is better worded as “sleep with one eye open.”

Whitney Grace, March 21, 2024

AI Innovation: Do Just Big Dogs Get the Fat, Farmed Salmon?

March 20, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

Let’s talk about statements like “AI will be open source” and “AI has spawned hundreds, if not thousands, of companies.” Those are assertions which seem to be slightly different from what’s unfolding at some of the largest technology outfits in the world. The circling and sniffing allegedly underway between the Apple and the Google pack is interesting. Apple and Google have a relationship, probably one that will need marriage counselor, but it is a relationship.

The wizard scientists have created an interesting digital construct. Thanks, MSFT Copilot. How are you coming along with your Windows 11 updates and Azure security today? Oh, that’s too bad.

The news, however, is that Microsoft is demonstrating that it wants to eat the fattest salmon in the AI stream. Microsoft has a deal of some type with OpenAI, operating under the steady hand of Sam AI-Man. Plus the Softies have cozied up to the French outfit Mistral. Today at 530 am US Eastern I learned that Microsoft has embraced an outstanding thinker, sensitive manager, and pretty much the entire Inflection AI outfit.

The number of stories about this move reflect the interest in smart software and what may be one of world’s purveyor of software which attracts bad actors from around the world. Thinking about breaches in the new Microsoft world is not a topic in the write ups about this deal. Why? I think the management move has captured attention because it is surprising, disruptive, and big in terms of money and implications.

“Microsoft Hires DeepMind Co-Founder Suleyman to Run Consumer AI” states:

DeepMind workers complained about his [former Googler Mustafa Suleyman and subsequent Inflection.ai senior manager] management style, the Financial Times reported. Addressing the complaints at the time, Suleyman said: “I really screwed up. I was very demanding and pretty relentless.” He added that he set “pretty unreasonable expectations” that led to “a very rough environment for some people. I remain very sorry about the impact that caused people and the hurt that people felt there.” Suleyman was placed on leave in 2019 and months later moved to Google, where he led AI product management until exiting in 2022.

Okay, a sensitive manager learns from his mistakes joins Microsoft.

And Microsoft demonstrates that the AI opportunity is wide open. “Why Microsoft’s Surprise Deal with $4 Billion Startup Inflection Is the Most Important Non-Acquisition in AI” states:

Even since OpenAI launched ChatGPT in November 2022, the tech world has been experiencing a collective mania for AI chatbots, pouring billions of dollars into all manner of bots with friendly names (there’s Claude, Rufus, Poe, and Grok — there’s event a chatbot name generator). In January, OpenAI launched a GPT store that’s chock full of bots. But how much differentiation and value can these bots really provide? The general concept of chatbots and copilots is probably not going away, but the demise of Pi may signal that reality is crashing into the exuberant enthusiasm that gave birth to a countless chatbots.

Several questions will be answered in the weeks ahead:

- What will regulators in the EU and US do about the deal when its moving parts become known?

- How will the kumbaya evolve when Microsoft senior managers, its AI partners, and reassigned Microsoft employees have their first all-hands Teams or off-site meeting?

- Does Microsoft senior management have the capability of addressing the attack surface of the new technologies and the existing Microsoft software?

- What happens to the AI ecosystem which depends on open source software related to AI if Microsoft shifts into “commercial proprietary” to hit revenue targets?

- With multiple AI systems, how are Microsoft Certified Professional agents going to [a] figure out what broke and [b] how to fix it?

- With AI the apparent “next big thing,” how will adversaries like nations not pals with the US respond?

Net net: How unstable is the AI ecosystem? Let’s ask IBM Watson because its output is going to be as useful as any other in my opinion. My hunch is that the big dogs will eat the fat, farmed salmon. Who will pull that lucious fish from the big dog’s maw? Not me.

Stephen E Arnold, March 20, 2024

Humans Wanted: Do Not Leave Information Curation to AI

March 20, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

Remember RSS feeds? Before social media took over the Internet, they were the way we got updates from sources we followed. It may be time to dust off the RSS, for it is part of blogger Joan Westenberg’s plan to bring a human touch back to the Web. We learn of her suggestions in, “Curation Is the Last Best Hope of Intelligent Discourse.”

Westenberg argues human judgement is essential in a world dominated by AI-generated content of dubious quality and veracity. Generative AI is simply not up to the task. Not now, perhaps not ever. Fortunately, a remedy is already being pursued, and Westenberg implores us all to join in. She writes:

“Across the Fediverse and beyond, respected voices are leveraging platforms like Mastodon and their websites to share personally vetted links, analysis, and creations following the POSSE model – Publish on your Own Site, Syndicate Elsewhere. By passing high-quality, human-centric content through their own lens of discernment before syndicating it to social networks, these curators create islands of sanity amidst oceans of machine-generated content of questionable provenance. Their followers, in turn, further syndicate these nuggets of insight across the social web, providing an alternative to centralised, algorithmically boosted feeds. This distributed, decentralised model follows the architecture of the web itself – networks within networks, sites linking out to others based on trust and perceived authority. It’s a rethinking of information democracy around engaged participation and critical thinking from readers, not just content generation alone from so-called ‘influencers’ boosted by profit-driven behemoths. We are all responsible for carefully stewarding our attention and the content we amplify via shares and recommendations. With more voices comes more noise – but also more opportunity to find signals of truth if we empower discernment. This POSSE model interfaces beautifully with RSS, enabling subscribers to follow websites, blogs and podcasts they trust via open standard feeds completely uncensored by any central platform.”

But is AI all bad? No, Westenberg admits, the technology can be harnessed for good. She points to Anthropic‘s Constitutional AI as an example: it was designed to preserve existing texts instead of overwriting them with automated content. It is also possible, she notes, to develop AI systems that assist human curators instead of compete with them. But we suspect we cannot rely on companies that profit from the proliferation of shoddy AI content to supply such systems. Who will? People with English majors?

Cynthia Murrell, March 20, 2024

A Single Google Gem for March 19, 2024

March 19, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

I want to focus on what could be the star sapphire of Googledom. The story appeared on the estimable Murdoch confection Fox News. Its title? “Is Google Too Broken to Be Fixed? Investors Deeply Frustrated and Angry, Former Insider Warns”? The word choice in this Googley headline signals the alert reader that the Foxy folks have a juicy story to share. “Broken,” “Frustrated,” “Angry,” and “Warns” suggest that someone has identified some issues at the beloved Google.

A Google gem. Thanks, MSFT Copilot Bing thing. How’s the staff’s security today?

The write up states:

A former Google executive [David Friedberg] revealed that investors are “deeply frustrated” that the scandal surrounding their Gemini artificial intelligence (AI) model is becoming a “real threat” to the tech company. Google has issued several apologies for Gemini after critics slammed the AI for creating “woke” content.

The Xoogler, in what seems to be tortured prose, allegedly said:

“The real threat to Google is more so, are they in a position to maintain their search monopoly or maintain the chunk of profits that drive the business under the threat of AI? Are they adapting? And less so about the anger around woke and DEI,” Friedberg explained. “Because most of the investors I spoke with aren’t angry about the woke, DEI search engine, they’re angry about the fact that such a blunder happened and that it indicates that Google may not be able to compete effectively and isn’t organized to compete effectively just from a consumer competitiveness perspective,” he continued.

The interesting comment in the write up (which is recycled podcast chatter) seems to be:

Google CEO Sundar Pichai promised the company was working “around the clock” to fix the AI model, calling the images generated “biased” and “completely unacceptable.”

Does the comment attributed to a Big Dog Microsoftie reflect the new perception of the Google. The Hindustan Times, which should have radar tuned to the actions, of certain executives with roots entwined in India reported:

Satya Nadella said that Google “should have been the default winner” of Big Tech’s AI race as the resources available to it are the maximum which would easily make it a frontrunner.

My interpretation of this statement is that Google had a chance to own the AI casino, roulette wheel, and the croupiers. Instead, Google’s senior management ran over the smart squirrel with the Paris demonstration of the fantastic Bard AI system, a series of me-too announcements, and the outputting of US historical scenes with people of color turning up in what I would call surprising places.

Then the PR parade of Google wizards explains the online advertising firm’s innovations in playing games, figuring out health stuff (shades of IBM Watson), and achieving quantum supremacy in everything. Well, everything except smart software. The predicament of the ad giant is illuminated with the burning of billions in market cap coincident with the wizards’ flubs.

Net net: That’s a gem. Google losing a game it allegedly owned. I am waiting for the next podcast about the Sundar & Prabhakar Comedy Tour.

Stephen E Arnold, March 19, 2024

AI in Action: Price Fixing Play

March 18, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

If a tree falls in a forest and no one is there to hear it, does I make a sound? The obvious answer is yes, but the philosophers point out how can that be possible if there wasn’t anyone there to witness the event. The same argument can be made that price fixing isn’t illegal if it’s done by an AI algorithm. Smart people know it is a straw man’s fallacy and so does the Federal Trade Commission: “Price Fixing By Algorithm Is Still Price Fixing.”

The FTC and the Department of Justice agree that if an action is illegal for a human then it is illegal for an algorithm too. The official nomenclature is antitrust compliance. Both departments want to protect consumers against algorithmic collision, particularly in the housing market. They failed a joint legal brief that stresses the importance of a fair, competitive market. The brief stated that algorithms can’t be used to evade illegal price fixing agreements and it is still unlawful to share price fixing information even if the conspirators retain pricing discretion or cheat on the agreement.

Protecting consumers from unfair pricing practices is extremely important as inflation has soared. Rent has increased by 20% since 2020, especially for lower-income people. Nearly half of renters also pay more than 30% of their income in rent and utilities. The Department of Justice and the FTC also hold other industries accountable for using algorithms illegally:

“The housing industry isn’t alone in using potentially illegal collusive algorithms. The Department of Justice has previously secured a guilty plea related to the use of pricing algorithms to fix prices in online resales, and has an ongoing case against sharing of price-related and other sensitive information among meat processing competitors. Other private cases have been recently brought against hotels(link is external) and casinos(link is external).”

Hopefully the FTC and the Department of Justice retain their power to protect consumers. Inflation will continue to rise and consumers continue to suffer.

Whitney Grace, March 18, 2024

Humans Wanted: Do Not Leave Information Curation to AI

March 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

Remember RSS feeds? Before social media took over the Internet, they were the way we got updates from sources we followed. It may be time to dust off the RSS, for it is part of blogger Joan Westenberg’s plan to bring a human touch back to the Web. We learn of her suggestions in, “Curation Is the Last Best Hope of Intelligent Discourse.”

Westenberg argues human judgement is essential in a world dominated by AI-generated content of dubious quality and veracity. Generative AI is simply not up to the task. Not now, perhaps not ever. Fortunately, a remedy is already being pursued, and Westenberg implores us all to join in. She writes:

“Across the Fediverse and beyond, respected voices are leveraging platforms like Mastodon and their websites to share personally vetted links, analysis, and creations following the POSSE model – Publish on your Own Site, Syndicate Elsewhere. By passing high-quality, human-centric content through their own lens of discernment before syndicating it to social networks, these curators create islands of sanity amidst oceans of machine-generated content of questionable provenance. Their followers, in turn, further syndicate these nuggets of insight across the social web, providing an alternative to centralised, algorithmically boosted feeds. This distributed, decentralised model follows the architecture of the web itself – networks within networks, sites linking out to others based on trust and perceived authority. It’s a rethinking of information democracy around engaged participation and critical thinking from readers, not just content generation alone from so-called ‘influencers’ boosted by profit-driven behemoths. We are all responsible for carefully stewarding our attention and the content we amplify via shares and recommendations. With more voices comes more noise – but also more opportunity to find signals of truth if we empower discernment. This POSSE model interfaces beautifully with RSS, enabling subscribers to follow websites, blogs and podcasts they trust via open standard feeds completely uncensored by any central platform.”

But is AI all bad? No, Westenberg admits, the technology can be harnessed for good. She points to Anthropic‘s Constitutional AI as an example: it was designed to preserve existing texts instead of overwriting them with automated content. It is also possible, she notes, to develop AI systems that assist human curators instead of compete with them. But we suspect we cannot rely on companies that profit from the proliferation of shoddy AI content to supply such systems. Who will?

Cynthia Murrell, March 15, 2024

Is the AskJeeves Approach the Next Big Thing Again?

March 14, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

Way back when I worked in silicon Valley or Plastic Fantastic as one 1080s wag put it, AskJeeves burst upon the Web search scene. The idea is that a user would ask a question and the helpful digital butler would fetch the answer. This worked for questions like “What’s the temperature in San Mateo?” The system did not work for the types of queries my little group of full-time equivalents peppered assorted online services.

A young wizard confronts a knowledge problem. Thanks, MSFT Copilot. How’s that security today? Okay, I understand. Good enough.

The mechanism involved software and people. The software processed the query and matched up the answer with the outputs in a knowledge base. The humans wrote rules. If there was no rule and no knowledge, the butler fell on his nose. It was the digital equivalent of nifty marketing applied to a system about as aware as the man servant in Kazuo Ishiguro’s The Remains of the Day.

I thought about AskJeeves as a tangent notion as I worked through “LLMs Are Not Enough… Why Chatbots Need Knowledge Representation.” The essay is an exploration of options intended to reduce the computational cost, power sucking, and blind spots in large language models. Progress is being made and will be made. A good example is this passage from the essay which sparked my thinking about representing knowledge. This is a direct quote:

In theory, there’s a much better way to answer these kinds of questions.

- Use an LLM to extract knowledge about any topics we think a user might be interested in (food, geography, etc.) and store it in a database, knowledge graph, or some other kind of knowledge representation. This is still slow and expensive, but it only needs to be done once rather than every time someone wants to ask a question.

- When someone asks a question, convert it into a database SQL query (or in the case of a knowledge graph, a graph query). This doesn’t necessarily need a big expensive LLM, a smaller one should do fine.

- Run the user’s query against the database to get the results. There are already efficient algorithms for this, no LLM required.

- Optionally, have an LLM present the results to the user in a nice understandable way.

Like AskJeeves, the idea is a good one. Create a system to take a user’s query and match it to information answering the question. The AskJeeves’ approach embodied what I called rules. As long as one has the rules, the answers can be plugged in to a database. A query arrives, looks for the answer, and presents it. Bingo. Happy user with relevant information catapults AskJeeves to the top of a world filled with less elegant solutions.

The question becomes, “Can one represent knowledge in such a way that the information is current, usable, and “accurate” (assuming one can define accurate). Knowledge, however, is a slippery fish. Small domains with well-defined domains chock full of specialist knowledge should be easier to represent. Well, IBM Watson and its adventure in Houston suggests that the method is okay, but it did not work. Larger scale systems like an old-fashioned Web search engine just used “signals” to produce lists which presumably presented answers. “Clever,” correct? (Sorry, that’s an IBM Almaden bit of humor. I apologize for the inside baseball moment.)

What’s interesting is that youthful laborers in the world of information retrieval are finding themselves arm wrestling with some tough but elusive problems. What is knowledge? The answer, “It depends” does not provide much help. Where does knowledge originate, the answer “No one knows for sure.” That does not advance the ball downfield either.

Grabbing epistemology by the shoulders and shaking it until an answer comes forth is a tough job. What’s interesting is that those working with large language models are finding themselves caught in a room of mirrors intact and broken. Here’s what TheTemples.org has to say about this imaginary idea:

The myth represented in this Hall tells of the divinity that enters the world of forms fragmenting itself, like a mirror, into countless pieces. Each piece keeps its peculiarity of reflecting the absolute, although it cannot contain the whole any longer.

I have no doubt that a start up with venture funding will solve this problem even though a set cannot contain itself. Get coding now.

Stephen E Arnold, March 14, 2024

AI Limits: The Wind Cannot Hear the Shouting. Sorry.

March 14, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

One of my teachers had a quote on the classroom wall. It was, I think, from a British novelist. Here’s what I recall:

Decide on what you think is right and stick to it.

I never understood the statement. In school, I was there to learn. How could I decide whether what I was reading was correct. Making a decision about what I thought was stupid because I was uninformed. The notion of “stick” is interesting and also a little crazy. My family was going to move to Brazil, and I knew that sticking to what I did in the Midwest in the 1950s would have to change. For one thing, we had electricity. The town to which we were relocating had electricity a few hours each day. Change was necessary. Even as a young sprout, trying to prevent something required more than talk, writing a Letter to the Editor, or getting a petition signed.

I thought about this crazy quote as soon as I read “AI Bioweapons? Scientists Agree to Policies to Reduce Risk of Human Disaster.” The fear mongering note of the write up’s title intrigued me. Artificial intelligence is in what I would call morph mode. What this means is that getting a fix on what is new and impactful in the field of artificial intelligence is difficult. An electrical engineering publication reported that experts are not sure if what is going on is good or bad.

Shouting into the wind does not work for farmers nor AI scientists. Thanks, MSFT Copilot. Busy with security again?

The “AI Bioweapons” essay is leaning into the bad side of the AI parade. The point of the write up is that “over 100 scientists” want to “prevent the creation of AI bioweapons.” The article states:

The agreement, crafted following a 20230 University of Washington summit and published on Friday, doesn’t ban or condemn AI use. Rather, it argues that researchers shouldn’t develop dangerous bioweapons using AI. Such an ask might seem like common sense, but the agreement details guiding principles that could help prevent an accidental DNA disaster.

That sounds good, but is it like the quote about “decide on what you think is right and stick to it”? In a dynamic environment, change is appears to accelerate. Toss in technology and the potential for big wins (either financial, professional, or political), and the likelihood of slowing down the rate of change is reduced.

To add some zip to the AI stew, much of the technology required to do some AI fiddling around is available as open source software or low-cost applications and APIs.

I think it is interesting that 100 scientists want to prevent something. The hitch in the git-along is that other countries have scientists who have access to AI research, tools, software, and systems. These scientists may feel as thought their reminding people that doom is (maybe?) just around the corner or a ruined building in an abandoned town on Route 66.

Here are a few observations about why individuals rally around a cause, which is widely perceived by some of those in the money game as the next big thing:

- The shouters perception of their importance makes it an imperative to speak out about danger

- Getting a group of important, smart people to climb on a bandwagon makes the organizers perceive themselves as doing something important and demonstrating their “get it done” mindset

- Publicity is good. It is very good when a speaking engagement, a grant, or consulting gig produces a little extra fame and money, preferably in a combo.

Net net: The wind does not listen to those shouting into it.

Stephen E Arnold, March 14, 2024

AI Deepfakes: Buckle Up. We Are in for a Wild Drifting Event

March 14, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

AI deepfakes are testing the uncanny valley but technology is catching up to make them as good as the real thing. In case you’ve been living under a rock, deepfakes are images, video, and sound clips generated by AI algorithms to mimic real people and places. For example, someone could create a deepfake video of Joe Biden and Donald Trump in a sumo wrestling match. While the idea of the two presidential candidates duking it out on a sumo mat is absurd, technology is that advanced.

Gizmodo reports the frustrating news that “The AI Deepfakes Problem Is Going To Get Unstoppably Worse”. Bad actors are already using deepfakes to wreak havoc on the world. Federal regulators outlawed robocalls and OpenAI and Google released watermarks on AI-generated images. These aren’t doing anything to curb bad actors.

Which is real? Which is fake? Thanks, MSFT Copilot, the objects almost appear identical. Close enough like some security features. Close enough means good enough, right?

New laws and technology need to be adopted and developed to prevent this new age of misinformation. There should be an endless amount of warnings on deepfake videos and soundbites, not to mention service providers should employ them too. It is going to take a horrifying event to make AI deepfakes more prevalent:

"Deepfake detection technology also needs to get a lot better and become much more widespread. Currently, deepfake detection is not 100% accurate for anything, according to Copyleaks CEO Alon Yamin. His company has one of the better tools for detecting AI-generated text, but detecting AI speech and video is another challenge altogether. Deepfake detection is lagging generative AI, and it needs to ramp up, fast.”

Wired Magazine missed an opportunity to make clear that the wizards at Google can sell data and advertising, but the sneaker-wearing marvels cannot manage deepfake adult pictures. Heck, Google cannot manage YouTube videos teaching people how to create deepfakes. My goodness, what happens if one uploads ASCII art of a problematic item to Gemini? One of my team tells me that the Sundar & Prabhakar guard rails, don’t work too well in some situations.

Not every deepfake will be as clumsy as the one the “to be maybe” future queen of England finds herself ensnared. One can ask Taylor Swift I assume.

Whitney Grace’s March 14, 2024

Can Your Job Be Orchestrated? Yes? Okay, It Will Be Smartified

March 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

This essay is the work of a dumb dinobaby. No smart software required.

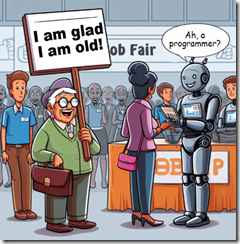

My work career over the last 60 years has been filled with luck. I have been in the right place at the right time. I have been in companies which have been acquired, reassigned, and exposed to opportunities which just seemed to appear. Unlike today’s young college graduate, I never thought once about being able to get a “job.” I just bumbled along. In an interview for something called Singularity, the interviewer asked me, “What’s been the key to your success?” I answered, “Luck.” (Please, keep in mind that the interviewer assumed I was a success, but he had no idea that I did not want to be a success. I just wanted to do interesting work.)

Thanks, MSFT Copilot. Will smart software do your server security? Ho ho ho.

Would I be able to get a job today if I were 20 years old? Believe it or not, I told my son in one of our conversations about smart software: “Probably not.” I thought about this comment when I read today (March 13, 2024) the essay “Devin AI Can Write Complete Source Code.” The main idea of the article is that artificial intelligence, properly trained, appropriately resourced can do what only humans could do in 1966 (when I graduated with a BA degree from a so so university in flyover country). The write up states:

Devin is a Generative AI Coding Assistant developed by Cognition that can write and deploy codes of up to hundreds of lines with just a single prompt. Although there are some similar tools for the same purpose such as Microsoft’s Copilot, Devin is quite the advancement as it not only generates the source code for software or website but it debugs the end-to-end before the final execution.

Let’s assume the write up is mostly accurate. It does not matter. Smart software will be shaped to deliver what I call orchestrated solutions either today, tomorrow or next month. Jobs already nuked by smartification are customer service reps, boilerplate writing jobs (hello, McKinsey), and translation. Some footloose and fancy free gig workers without AI skills may face dilemmas about whether to pursue begging, YouTubing the van life, or doing some spelunking in the Chemical Abstracts database for molecular recipes in a Walmart restroom.

The trajectory of applied AI is reasonably clear to me. Once “programming” gets swept into the Prada bag of AI, what other professions will be smartified? Once again, the likely path is light by dim but visible Alibaba solar lights for the garden:

- Legal tasks which are repetitive even though the cases are different, the work flow is something an average law school graduate can master and learn to loathe

- Forensic accounting. Accountants are essentially Ground Hog Day people, because every tax cycle is the same old same old

- Routine one-day surgeries. Sorry, dermatologists, cataract shops, and kidney stone crunchers. Robots will do the job and not screw up the DRG codes too much.

- Marketers. I know marketing requires creative thinking. Okay, but based on the Super Bowl ads this year, I think some clients will be willing to give smart software a whirl. Too bad about filming a horse galloping along the beach in Half Moon Bay though. Oh, well.

That’s enough of the professionals who will be affected by orchestrated work flows surfing on smartified software.

Why am I bothering to write down what seems painfully obvious to my research team?

I just wanted another reason to say, “I am glad I am old.” What many young college graduates will discover that despite my “luck” over the course of my work career, smartified software will not only kill some types of work. Smart software will remove the surprise in a serendipitous life journey.

To reiterate my point: I am glad I am old and understand efficiency, smartification, and the value of having been lucky.

Stephen E Arnold, March 13, 2024