AI Bubble Gum Cards

March 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

A publication for electrical engineers has created a new mechanism for making AI into a collectible. Navigate to “The AI apocalypse: A Scorecard.” Scroll down to the part of the post which looks like the gems from the 1950s:

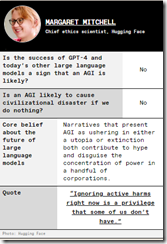

The idea is to pick 22 experts and gather their big ideas about AI’s potential to destroy humanity. Here’s one example of an IEEE bubble gum card:

© by the estimable IEEE.

The information on the cards is eclectic. It is clear that some people think smart software will kill me and you. Others are not worried.

My thought is that IEEE should expand upon this concept; for example, here are some bubble gum card ideas:

- Do the NFT play? These might be easier to sell than IEEE memberships and subscriptions to the magazine

- Offer actual, fungible packs of trading cards with throw-back bubble gum

- Create an AI movie about AI experts with opposing ideas doing battle in a video game type world. Zap. You lose, you doubter.

But the old-fashioned approach to selling trading cards to grade school kids won’t work. First, there are very few corner stores near schools in many cities. Second, a special interest group will agitate to block the sale of cards about AI because the inclusion of chewing gum will damage children’s teeth. And, third, kids today want TikToks, at least until the service is banned by a fast-acting group of elected officials.

I think the IEEE will go in a different direction; for example, micro USBs with AI images and source code on them. Or, the IEEE should just advance to the 21st century and start producing short-form AI videos.

The IEEE does have an opportunity. AI collectibles.

Stephen E Arnold, March 13, 2024

AI to AI Program for March 12, 2024, Now Available

March 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Erik Arnold, with some assistance from Stephen E Arnold (the father), has produced another installment of “AI to AI: Smart Software for Government Use Cases.” The program presents news and analysis about the use of artificial intelligence (smart software) in government agencies.

The ad-free program features Erik S. Arnold, Managing Director of Govwizely, a Washington, DC consulting and engineering services firm. Arnold has extensive experience working on technology projects for the US Congress, the Capitol Police, the Department of Commerce, and the White House. Stephen E Arnold, an adviser to Govwizely, also participates in the program. The current episode explores five topics in a father-and-son exploration of important, yet rarely discussed subjects. These include the analysis of law enforcement body camera video by smart software, the appointment of an AI information czar by the US Department of Justice, copyright issues faced by UK artificial intelligence projects, the role of the US Marines in the Department of Defense’s smart software projects, and the potential use of artificial intelligence in the US Patent Office.

The video is available on YouTube at https://youtu.be/nsKki5P3PkA. The Apple audio podcast is at this link.

Stephen E Arnold, March 12, 2024

AI Hermeneutics: The Fire Fights of Interpretation Flame

March 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

My hunch is that not too many of the thumb-typing, TikTok generation know what hermeneutics means. Furthermore, like most of their parents, these future masters of the phone-iverse don’t care. “Let software think for me” would make a nifty T shirt slogan at a technology conference.

This morning (March 12, 2024) I read three quite different write ups. Let me highlight each and then link the content of those documents to the problem of interpretation of religious texts.

Thanks, MSFT Copilot. I am confident your security team is up to this task.

The first write up is a news story called “Elon Musk’s AI to Open Source Grok This Week.” The main point for me is that Mr. Musk will put the label “open source” on his Grok artificial intelligence software. The write up includes an interesting quote; to wit:

Musk further adds that the whole idea of him founding OpenAI was about open sourcing AI. He highlighted his discussion with Larry Page, the former CEO of Google, who was Musk’s friend then. “I sat in his house and talked about AI safety, and Larry did not care about AI safety at all.”

The implication is that Mr. Musk does care about safety. Okay, let’s accept that.

The second story is an ArXiv paper called “Stealing Part of a Production Language Model.” The authors are nine Googlers, two ETH wizards, one University of Washington professor, one OpenAI researcher, and one McGill University smart software luminary. In short, the big outfits are making clear that closed or open, software is rising to the task of revealing some of the inner workings of these “next big things.” The paper states:

We introduce the first model-stealing attack that extracts precise, nontrivial information from black-box production language models like OpenAI’s ChatGPT or Google’s PaLM-2…. For under $20 USD, our attack extracts the entire projection matrix of OpenAI’s ada and babbage language models.

The third item is “How Do Neural Networks Learn? A Mathematical Formula Explains How They Detect Relevant Patterns.” The main idea of this write up is that software can perform an X-ray type analysis of a black box and present some useful data about the inner workings of numerical recipes about which many AI “experts” feign total ignorance.

Several observations:

- Open source software is available to download largely without encumbrances. Good actors and bad actors can use this software and its components to let users put on a happy face or bedevil the world’s cyber security experts. Either way, smart software is out of the bag.

- In the event that someone or some organization has secrets buried in its software, those secrets can be exposed. Once the secret is known, the good actors and the bad actors can surf on that information.

- The notion of an attack surface for smart software now includes the numerical recipes and the model itself. Toss in the notion of data poisoning, and the notion of vulnerability must be recast from a specific attack to a much larger type of exploitation.

Net net: I assume the many committees, NGOs, and government entities discussing AI have considered these points and incorporated these articles into informed policies. In the meantime, the AI parade continues to attract participants. Who has time to fool around with the hermeneutics of smart software?

Stephen E Arnold, March 12, 2024

Thomson Reuters Is Going to Do AI: Run Faster

March 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Thomson Reuters, a mostly low profile outfit, is going to do AI. Why’s this interesting to law schools, lawyers, accountants, special librarians, libraries, and others who “pay” for “real” information? There are three reasons:

- Money

- Markets

- Mania.

Thomson Reuters has been a tech talker for decades. The company created skunk works. It hired quirky MIT wizards. It bought businesses with information technology. But underneath the professional publishing clear coat, the firm is the creation of Lord Thomson of Fleet. The firm has a track record of being able to turn a profit on its $7 billion in revenues. But the future, if news reports are accurate, is artificial intelligence or smart software.

The young publishing executive says, “I have got to get ahead of this AI bus before it runs over me.” Thanks, MSFT Copilot. Working on security today?

But wait! What makes Thomson Reuters different from the New York Times or (heaven forbid the question) Rupert Murdoch’s confections? The answer, in my opinion, is this: Thomson Reuters does the trust thing and is a professional publisher. I don’t want to explain that in the world of Lord Thomson of Fleet publishing is publishing. Nope. Not going there. Thomson Reuters is a custom made billiard cue, not one of those bar pool cheapos.

As appropriate to today’s Thomson Reuters, the news appeared in Thomson’s own news releases first; for example, “Thomson Reuters Profit Beats Estimates Amid AI Push.” Yep, AI drives profits. That’s the “m” in money. Plus, late last year this article found its way to the law firm market (yep, that’s the second “m”): “Morgan Lewis and Thomson Reuters Enter into Partnership to Put Law Firms’ Needs at the Heart of AI Development.”

Now the third “m” or mania. Here’s a representative story, “Thomson Reuters to Invest US$8 billion in a Substantial AI-Focused Spending Initiative.” You can also check out the Financial Times’s report at this link.

Thomson Reuters is a $7 billion corporation. If the $8 billion number is on the money, the venerable news outfit is going to spend the equivalent of one year’s revenue acquiring and investing in smart software. In terms of professional publishing, this chunk of change is roughly the equivalent of Sam AI-Man’s need for trillions of dollars for his smart software business.

Several thoughts struck me as I was reading about the $8 billion investment in smart software:

- In terms of publishing or more narrowly professional publishing, $8 billion will take some time to spend. But time is not on the side of publishing decision making processes. When the check is written for an AI investment, there may be some who ask, “Is this the correct investment? After all, aren’t we professional publishers serving lawyers, accountants, and researchers?”

- The US legal processes are interesting. But the minor challenge of Crown copyright adds a bit of spice to certain investments. The UK government itself is reluctant to push into some AI areas due to concerns that certain information may not be available unless the red tape about copyright has been trimmed, rolled, and put on the shelf. Without being disrespectful, Thomson Reuters could find some of the $8 billion headed into its clients’ pockets as legal challenges make their way through courts in Britain, Canada, and the US and probably some frisky EU states.

- The game for AI seems to be breaking into two camps, what a former Greek minister calls the techno-feudal set up. On one hand, there are giant technology centric companies (of which Thomson Reuters is not one of the club members). These are Google- and Microsoft-scale outfits with infrastructure, data, customers, and multiple business models. On the other hand, there are the Product Watch outfits which are using open source and APIs to create “new” and “important” AI businesses, applications, and solutions. In short, there are some barons and a whole grab-bag of lesser folk. Is Thomson Reuters going to be able to run with the barons? Remember, please, the barons are riding stallions. Thomson Reuters-type firms either walk or ride donkeys.

Net net: If Thomson Reuters spends $8 billion on smart software, how many lawyers, accountants, and researchers will be put out of work? The risks are not just bad AI investments. The threat may be to gut the billing power of the paying customers for Thomson Reuters’ content. This will be entertaining to watch.

PS. The third “m”? It is mania, AI mania.

Stephen E Arnold, March 11, 2024

AI May Kill Jobs Plus It Can Kill Bambi, Koalas, and Whales

March 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Amid the AI hype is little mention of a huge problem.

As Nature’s Kate Crawford reports, “Generative AI’s Environmental Costs Are Soaring—and Mostly Secret.” Besides draining us of fresh water, AI data centers also consume immense amounts of energy. We learn:

“One assessment suggests that ChatGPT, the chatbot created by OpenAI in San Francisco, California, is already consuming the energy of 33,000 homes. It’s estimated that a search driven by generative AI uses four to five times the energy of a conventional web search. Within years, large AI systems are likely to need as much energy as entire nations.”

Even OpenAI’s head Sam Altman admits this is not sustainable, but he has a solution in mind. Is he pursuing more efficient models, or perhaps redesigning data centers? Nope. Altman’s hopes are pinned on nuclear fusion. But that technology has been “right around the corner” for the last 50 years. We need solutions now, not in 2050 or later. Sadly, it is unlikely AI companies will make the effort to find and enact those solutions unless forced to. The article notes a piece of legislation, the Artificial Intelligence Environmental Impacts Act of 2024, has finally been introduced in the Senate. But in the unlikely event the bill makes it through the House, it may be too feeble to make a real difference. Crawford considers:

“To truly address the environmental impacts of AI requires a multifaceted approach including the AI industry, researchers and legislators. In industry, sustainable practices should be imperative, and should include measuring and publicly reporting energy and water use; prioritizing the development of energy-efficient hardware, algorithms, and data centers; and using only renewable energy. Regular environmental audits by independent bodies would support transparency and adherence to standards. Researchers could optimize neural network architectures for sustainability and collaborate with social and environmental scientists to guide technical designs towards greater ecological sustainability. Finally, legislators should offer both carrots and sticks. At the outset, they could set benchmarks for energy and water use, incentivize the adoption of renewable energy and mandate comprehensive environmental reporting and impact assessments. The Artificial Intelligence Environmental Impacts Act is a start, but much more will be needed — and the clock is ticking.”

Tick. Tock. Need a dead dolphin? Use a ChatGPT-type system.

Cynthia Murrell, March 8, 2024

Engineering Trust: Will Weaponized Data Patch the Social Fabric?

March 7, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Trust is a popular word. Google wants me to trust the company. Yeah, I will jump right on that. Politicians want me to trust their attestations that citizen interests are important. I worked in Washington, DC, for too long. Nope, I just have too much first-hand exposure to the way “things work.” What about my bank? It wants me to trust it. But isn’t the institution the subject of a couple of government investigations? Oh, not important. And what about the images I see when I walk gingerly between the guard rails? I trust them, right? Ho ho ho.

In our post-Covid, pre-US national election era, the word “trust” is carrying quite a bit of freight. Whom do I trust? Not too many people. What about good old Socrates, who was an Athenian when Greece was not yet a collection of ferocious football teams and sun seekers? As you may recall, he trusted fellow residents of Athens. He ended up dead from either a lousy snack bar meal and beverage, or his friends did him in.

One of his alleged precepts in his pre-artificial intelligence world was:

“We cannot live better than in seeking to become better.” — Socrates

Got it, Soc.

Thanks MSFT Copilot and provider of PC “moments.” Good enough.

I read “Exclusive: Public Trust in AI Is Sinking across the Board.” Then I thought about Socrates being convicted for corruption of youth. See. Education does not bring unlimited benefits. Apparently Socrates asked annoying questions which opened him to charges of impiety. (Side note: Hey, Socrates, go with the flow. Just pray to the carved mythical beast, okay?)

A loss of public trust? Who knew? I thought it was common courtesy, a desire to discuss and compromise, not whip out a weapon and shoot, bludgeon, or stab someone to death. In the case of Haiti, a twist is that a victim is bound and then barbequed in a steel drum. Cute and to me a variation of stacking seven tires in a pile dousing them with gasoline, inserting a person, and igniting the combo. I noted a variation in the Ukraine. Elderly women make cookies laced with poison and provide them to special operation fighters. Subtle and effective due to troop attrition I hear. Should I trust US Girl Scout cookies? No thanks.

What’s interesting about the write up, which provides statistics to back up this brilliant and innovative insight about modern life, is its focus on artificial intelligence. Let me pluck several examples from the dot point filled write up:

- “Globally, trust in AI companies has dropped to 53%, down from 61% five years ago.”

- “Trust in AI is low across political lines. Democrats trust in AI companies is 38%, independents are at 25% and Republicans at 24%.”

- “Eight years ago, technology was the leading industry in trust in 90% of the countries Edelman studies. Today, it is the most trusted in only half of countries.”

AI is trendy; crunchy click bait is highly desirable even for an estimable survivor of Silicon Valley style news reporting.

Let me offer several observations which may either be troubling or typical outputs from a dinobaby working in an underground computer facility:

- Close knit groups are more likely to have some concept of trust. The exception, of course, is the behavior of the Hatfields and McCoys

- Outsiders are viewed with suspicion. Often for no reason, a newcomer becomes the default bad entity

- In my lifetime, I have watched institutions take actions which erode trust on a consistent basis.

Net net: Old news. AI is not new. Hyperbole and click obsession are factors which illustrate the erosion of social cohesion. Get used to it.

Stephen E Arnold, March 7, 2024

AI and Warfare: Gaza Allegedly Experiences AI-Enabled Drone Attacks

March 7, 2024

This essay is the work of a dumb dinobaby. No smart software required.

We have officially crossed a line. DeepNewz reveals: “AI-Enabled Military Tech and Indian-Made Hermes 900 Drones Deployed in Gaza.” Is this what they mean by “helpful AI”? We cannot say we are surprised. The extremely brief write-up tells us:

“Reports indicate that Israel has deployed AI-enabled military technology in Gaza, marking the first known combat use of such technology. Additionally, Indian-made Hermes 900 drones, produced in collaboration between Adani‘s company and Elbit Systems, are set to join the Israeli army’s fleet of unmanned aerial vehicles. This development has sparked fears about the implications of autonomous weapons in warfare and the role of Indian manufacturing in the conflict in Gaza. Human rights activists and defense analysts are particularly worried about the potential for increased civilian casualties and the further escalation of the conflict.”

On a minor but poetic note, a disclaimer states the post was written with ChatGPT. Strap in, fellow humans. We are just at the beginning of a long and peculiar ride. How are those assorted government committees doing with their AI policy planning?

Cynthia Murrell, March 7, 2024

Poohbahs Poohbahing: Just Obvious Poohbahing

March 6, 2024

This essay is the work of a dumb dinobaby. No smart software required.

We’re already feeling the effects of AI technology in deepfake videos and soundbites and generative text. While our present circumstances are only the beginning of AI technology, so-called experts are already claiming AI has gone bananas. The Verge, a popular Silicon Valley news outlet, released a new podcast episode where they declare that, “The AIs Are Officially Out Of Control.”

AI generated images and text aren’t 100% accurate. AI images are prone to including extra limbs and false representations of people, and even to missing the prompt entirely. AI generative text is about as accurate as a Wikipedia article, so you need to double check and edit the response. Unfortunately, AIs are only as smart as the datasets that program them. AIs have been called “racist” and “sexist” due to limited data. Google Gemini also has gone too far on diversity and inclusion, returning images that aren’t historically accurate when asked to deliver.

The podcast panelists made an obvious point when they said that the quality of Google’s results has declined. Bad SEO, crappy content, and paid results pollute search. They claim that the best results Google returns are coming from Reddit posts. Reddit is a catch-all online forum with which Google recently negotiated a deal to use its content to train AI. That’s a great idea, especially when Reddit is going public on the stock market.

The problem is that Reddit is full of trolls who do things for %*^ and giggles. While Reddit is a brilliant source of information because it is created by real people, the bad actors will train the AI-chatbots to be “racist” and “sexist” like previous iterations. The worst incident involves ethnically diverse Nazis:

“Google has apologized for what it describes as “inaccuracies in some historical image generation depictions” with its Gemini AI tool, saying its attempts at creating a “wide range” of results missed the mark. The statement follows criticism that it depicted specific white figures (like the US Founding Fathers) or groups like Nazi-era German soldiers as people of color, possibly as an overcorrection to long-standing racial bias problems in AI.”

I am not sure which is the problem: Uninformed generalizations, flawed AI technology capable of zapping billions in a few hours, or minimum viable products that are the equivalent of a blue jay fouling up a sparrow’s nest. Chirp. Chirp. Chirp.

Whitney Grace, March 6, 2024

Just One Big Google Zircon Gemstone for March 5, 2024

March 5, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I have a folder stuffed with Google gems for the week of February 26 to March 1, 2024. I have a write up capturing more Australians stranded by following Google Maps’s representation of a territory, Google’s getting tangled in another publisher lawsuit, Google figuring out how to deliver better search even when the user’s network connection sucks, Google’s firing 43 unionized contractors while in the midst of a legal action, and more.

The brilliant and very nice wizard adds, “Yes, we have created a thing which looks valuable, but it is laboratory-generated. And it is a gem and a deeply flawed one, not something we can use to sell advertising yet.” Thanks, MSFT Copilot Bing thing. Good enough and I liked the unasked for ethnic nuance.

But there is just one story: Google nuked billions in market value and created the meme of the week by making many images the heart and soul of diversity. Pundits wanted one half of the Sundar and Prabhakar comedy show yanked off the stage. Check out Stratechery’s view of Google management’s grasp of leading the company in a positive manner in Gemini and Google’s Culture. The screw up was so bad that even the world’s favorite expert in aircraft refurbishment and modern gas-filled airships spoke up. (Yep, that’s the estimable Sergey Brin!)

In the aftermath of a brilliant PR move, CNBC ran a story yesterday that summed up the February 26 to March 1 Google experience. The title was “Google Co-Founder Sergey Brin Says in Rare Public Appearance That Company ‘Definitely Messed Up’ Gemini Image Launch.” What an incisive comment from one of the fathers of “clever” methods of determining relevance. The article includes this brilliant analysis:

He also commented on the flawed launch last month of Google’s image generator, which the company pulled after users discovered historical inaccuracies and questionable responses. “We definitely messed up on the image generation,” Brin said on Saturday. “I think it was mostly due to just not thorough testing. It definitely, for good reasons, upset a lot of people.”

That’s the Google “gem.” Amazing.

Stephen E Arnold, March 5, 2024

Synthetic Data: From Science Fiction to Functional Circumscription

March 4, 2024

This essay is the work of a dumb humanoid. No smart software required.

Synthetic data are information produced by algorithms, not by real-world events. They are created using real-world data and numerical recipes. The appeal is that they are easier than collecting real life information, cheaper than dealing with data from real life, and faster than fooling around with surveys, monitoring devices, and lawsuits. In theory, synthetic data are one promising way of skirting the expense of getting humans involved.

“What Is [a] Synthetic Sample – And Is It All It’s Cracked Up to Be?” tackles the subject of a synthetic sample, a topic which is one slice of the synthetic data universe. The article seeks “to uncover the truth behind artificially created qualitative and quantitative market research data.” I am going to avoid the question, “Is synthetic data useful?” because the answer is, “Yes.” Bean counters and those looking to find a way out of the pickle barrel filled with expensive brine are going to chase after the magic of algorithms producing data to do some machine learning magic.

In certain situations, fake flowers are super. Other times, the faux blooms are just creepy. Thanks, MSFT Copilot Bing thing. Good enough.

Are synthetic data better than real world data? The answer from my vantage point is, “It depends.” Fancy math can prove that for some use cases, synthetic data are “good enough”; that is, the data produce results close enough to what a “real” data set provides. Therefore, just use synthetic data. But for other applications, synthetic data might throw some sand in the well-oiled marketing collateral describing the wonders of synthetic data. (Some university research labs are quite skilled in PR speak, but the reality of their methods may not line up with the PowerPoints used to raise venture capital.)

This essay discusses a research project to figure out if a synthetic sample works or in my lingo if the synthetic sample is good enough. The idea is that as long as the synthetic data is within a specified error range, the synthetic sample can be used and may produce “reliable” or useful results. (At least one hopes this is the case.)
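The “within a specified error range” idea can be illustrated with a minimal sketch. This is my own toy example, not Kantar’s method: the function name `within_error_range`, the sample scores, and the tolerance values are all hypothetical, and a real study would use a proper statistical test rather than a bare comparison of means.

```python
def within_error_range(real_values, synthetic_values, tolerance):
    """Return True when the synthetic mean is within `tolerance` of the real mean.

    Hypothetical helper for illustration only; comparing two means is the
    simplest possible stand-in for a "good enough" check.
    """
    if not real_values or not synthetic_values:
        raise ValueError("both samples must be non-empty")
    real_mean = sum(real_values) / len(real_values)
    synthetic_mean = sum(synthetic_values) / len(synthetic_values)
    return abs(real_mean - synthetic_mean) <= tolerance


# Made-up 1-to-5 survey scores: one set from people, one from an algorithm.
real_scores = [4, 5, 3, 4, 4]
synthetic_scores = [4, 4, 4, 5, 4]

print(within_error_range(real_scores, synthetic_scores, tolerance=0.25))
print(within_error_range(real_scores, synthetic_scores, tolerance=0.1))
```

The point of the sketch is that “good enough” depends entirely on the tolerance someone picks. The same two samples pass with a loose tolerance and fail with a tight one.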

I want to focus on one portion of the cited article and invite you to read the complete Kantar explanation.

Here’s the passage which snagged my attention:

… right now, synthetic sample currently has biases, lacks variation and nuance in both qual and quant analysis. On its own, as it stands, it’s just not good enough to use as a supplement for human sample. And there are other issues to consider. For instance, it matters what subject is being discussed. General political orientation could be easy for a large language model (LLM), but the trial of a new product is hard. And fundamentally, it will always be sensitive to its training data – something entirely new that is not part of its training will be off-limits. And the nature of questioning matters – a highly ’specific’ question that might require proprietary data or modelling (e.g., volume or revenue for a particular product in response to a price change) might elicit a poor-quality response, while a response to a general attitude or broad trend might be more acceptable.

These sentences present several thorny problems in academic speak. Let’s look at them in the vernacular of rural Kentucky where I live.

First, we have the issue of bias. Training data can be unintentionally or intentionally biased. Sample radical trucker posts on Telegram, and use those messages to train a model like Reor. That output is going to express views that some people might find unpalatable. Therefore, building a synthetic data recipe which includes this type of Telegram content is going to be oriented toward truck driver views. That’s good and bad.

Second, a synthetic sample may require mixing data from a “real” sample. That’s a common sense approach which reduces some costs. But will the outputs be good enough? The question then becomes, “Good enough for what applications?” Big, general questions about how a topic is presented might be close enough for horseshoes. Other topics like those focusing on dealing with a specific technical issue might warrant more caution or outright avoidance of synthetic data. Do you want your child or wife to die because the synthetic data about a treatment regimen was close enough for horseshoes? But in today’s medical structure, that may be what the future holds.

Third, many years ago, one of the early “smart” software companies was Autonomy, founded by Mike Lynch. In the 1990s, Bayesian methods were known but some, believe it or not, were classified and, thus, not widely known. Autonomy packed up some smart software in the Autonomy black box. Users of this system learned that the smart software had to be retrained because new terms and novel ideas not in the original training set were not findable by the neuro linguistic program’s engine. Yikes, retraining requires human content curation of data sets, time to retrain the system, and the expense of redeploying the brains of the black boxes. Clients did not like this and some, to be frank, did not understand why a product did not work like an MG sports car. Synthetic data has to be trained to “know” about new terms and avoid the “certain blindness” probability based systems possess.
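The blindness to new terms can be shown with a small sketch. To be clear, this is not how Autonomy’s engine worked; it is a hypothetical illustration in which a “model” knows only the words in its training documents, and the helper name `unseen_terms` and the sample text are invented for the example.

```python
def unseen_terms(training_docs, new_doc):
    """List the words in `new_doc` that never appeared in the training documents.

    Hypothetical helper: whitespace tokenization and lowercasing only,
    which is far cruder than any production system.
    """
    vocabulary = {word for doc in training_docs for word in doc.lower().split()}
    return sorted({word for word in new_doc.lower().split() if word not in vocabulary})


# Made-up training set from the 1990s and a made-up query from today.
training = ["Bayesian methods for search", "retraining the search engine"]
query = "Bayesian search for deepfake video"

print(unseen_terms(training, query))
```

Any term the helper returns is one the system cannot “know” about until someone curates new data and retrains, which is where the time and expense come in.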

Fourth, the topic of “proprietary data modeling” means big bucks. The idea behind synthetic data is that it is cheaper. Building proprietary training data and keeping it current is expensive. Is it better? Yeah, maybe. Is it faster? Probably not when humans are doing the curation, cleaning, verifying, and training.

The write up states:

But it’s likely that blended models (human supplemented by synthetic sample) will become more common as LLMs get even more powerful – especially as models are finetuned on proprietary datasets.

Net net: Synthetic data warrants monitoring. Some may want to invest in synthetic data set companies like Kantar, for instance. I am a dinobaby, and I like the old-fashioned Stone Age approach to data. The fancy math embodies sufficient risk for me. Why increase risk? Remember my reference to a dead loved one? That type of risk.

Stephen E Arnold, March 4, 2024