Redefining Elite in the Age of AI: Nope, Redefining Average Is the News Story

December 12, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Business Insider has come up with an interesting swizzle on the AI thirst fest. “AI Is the Great Equalizer.” The subtitle is quite suggestive about a technology which is over 50 years in the making and just one year into its razzle dazzle next big thing with the OpenAI generative pre-trained transformer.

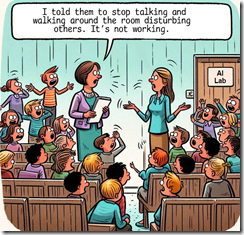

The teacher (the person with the tie) is not quite as enthusiastic about Billy, Kristie, and Mary. The teacher knows that each is a budding Einstein, a modern day Gertrude Stein, or an Ada Lovelace in the eyes of the parent. The reality is that big-time performers are a tiny percentage of any given cohort. One blue chip consulting firm complained that it had to interview 1,000 people to identify a person who could contribute. That was self-congratulatory, like Oscar Mayer slapping the Cinco Jotas label on a pack of baloney. The perceptions about the impact of a rapidly developing technology on average performers are interesting, but their validity is unknown. Thanks, MSFT Copilot, you have the parental pride angle down pat. What inspired you? A microchip?

In my opinion, the main idea in the essay is:

Education and expertise won’t count for as much as they used to.

Does this mean the falling scores for reading and math are a good thing? Just let one of the techno giants do the thinking: Is that the message?

I loved this statement about working in law firms. In my experience, the assertion applies to consulting firms as well. There is only one minor problem, which I will mention after you scan the quote:

This is something the law-school study touches on. “The legal profession has a well-known bimodal separation between ‘elite’ and ‘nonelite’ lawyers in pay and career opportunities,” the authors write. “By helping to bring up the bottom (and even potentially bring down the top), AI tools could be a significant force for equality in the practice of law.”

The write up points out that AI won’t have much of an impact on the “elite”; that is, the individuals who can think, innovate, and make stuff happen. Here is what the write up says about the hiring strategies of the companies it contacted about the impact of AI:

They [These firms’ executives] are aiming to hire fewer entry-level people straight out of school, since AI can increasingly take on the straightforward, well-defined tasks these younger workers have traditionally performed. They plan to bulk up on experts who can ace the complicated stuff that’s still too hard for machines to perform.

The write up is interesting, but it is speculative, not a report of what’s happening.

Here’s what we know about the ChatGPT-type revolution after one year:

- Cyber criminals have figured out how to use generative tools to increase the amount of cyber crime requiring sentences or script generation. Score one for the bad actors.

- Older people are either reluctant to fool around with or fearful of what appears to be “magical” software. Therefore, the uptake at work is likely to be slower and probably more cautious than for some who are younger at heart. Score one for Luddites and automation-related protests.

- The younger folk will use any online service that makes something easier or more convenient. Want to buy contraband? Hit those Telegram-type groups. Want to write a report about a new procedure? Hey, let a ChatGPT-type system do it. Worry about its accuracy or appropriateness? Nope, not too much.

Net net: Change is happening, but the use of smart outputs by people who cannot read, do math, or think about Kant’s ideas is unlikely to do much more than add friction to an already creaky bureaucratic machine. As for the future, I don’t know. This dinobaby is not fearful of admitting it.

As for lawyers, remember what Shakespeare said:

“The first thing we do, let’s kill all the lawyers.”

The statement by Dick the Butcher may apply to quite a few in “knowledge” professions, including some essayists like this dinobaby and many, many others. The rationale is to keep just the smartest ones. AI is good enough for everything else.

Stephen E Arnold, December 12, 2023

Problematic Smart Algorithms

December 12, 2023

This essay is the work of a dumb dinobaby. No smart software required.

We already know that AI is fundamentally biased if it is trained with bad or polluted data models. Most of these biases are unintentional and due to ignorance on the part of the developers, i.e., a lack of diversity or vetted information. In order to improve the quality of AI, developers are relying on educated humans to help shape the data models. Not all of the AI projects are looking to fix their polluted data, and ZDNet says it’s going to be a huge problem: “Algorithms Soon Will Run Your Life-And Ruin It, If Trained Incorrectly.”

Our lives are saturated with technology that has incorporated AI. Everything from an application used on a smartphone to a digital assistant like Alexa or Siri uses AI. The article tells us about another type of biased data, and it’s due to an ironic problem. The science team of Aparna Balagopalan, David Madras, David H. Yang, Dylan Hadfield-Menell, Gillian Hadfield, and Marzyeh Ghassemi worked on an AI project that studied how AI algorithms justified their predictions. The data model contained information from human respondents who provided different responses when asked to give descriptive or normative labels for data.

Descriptive data concentrates on hard facts, while normative data focuses on value judgments. The team noticed the pattern, so they conducted another experiment with four data sets to test different policies. The study asked the respondents to judge an apartment complex’s policy about aggressive dogs against images of canines with normative or descriptive tags. The results were astounding and scary:

"The descriptive labelers were asked to decide whether certain factual features were present or not – such as whether the dog was aggressive or unkempt. If the answer was "yes," then the rule was essentially violated — but the participants had no idea that this rule existed when weighing in and therefore weren’t aware that their answer would eject a hapless canine from the apartment.

Meanwhile, another group of normative labelers were told about the policy prohibiting aggressive dogs, and then asked to stand judgment on each image.

It turns out that humans are far less likely to label an object as a violation when aware of a rule and much more likely to register a dog as aggressive (albeit unknowingly) when asked to label things descriptively.

The difference wasn’t by a small margin either. Descriptive labelers (those who didn’t know the apartment rule but were asked to weigh in on aggressiveness) had unwittingly condemned 20% more dogs to doggy jail than those who were asked if the same image of the pooch broke the apartment rule or not.”

The conclusion is that AI developers need to spread the word about this problem and find solutions. This could be another fear mongering tactic like the Y2K implosion. What happened with that? Nothing. Yes, this is a problem but it will probably be solved before society meets its end.

Whitney Grace, December 12, 2023

Did AI Say, Smile and Pay Despite Bankruptcy

December 11, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Going out of business is a painful event for [a] the whiz kids who dreamed up an idea guaranteed to baffle grandma, [b] the friends, family, and venture capitalists who funded the sure-fire next Google, and [c] the “customers” or more accurately the “users” who gave the product or service a whirl and some cash.

Therefore, one who had taken an entry level philosophy class when a sophomore might have brushed against the thorny bush of ethics. Some get scratched, emulate the folks who wore chains and sharpened nails under their Grieve St Laurent robes, and read medieval wisdom literature for fun. Others just dump that baloney and focus on figuring out how to exit Dodge City without a posse riding hard after them.

The young woman learns that the creditors of an insolvent firm may “sell” her account to companies which operate on a “pay or else” policy. Imagine. You have lousy teeth and you could be put in jail. Look at the bright side. In some nation states, prison medical services include dental work. Anesthetic? Yeah. Maybe not so much. Thanks, MSFT Copilot. You had a bit of a hiccup this morning, but you spit out a tooth with an image on it. Close enough.

I read “Smile Direct Club shuts down after Filing for Bankruptcy – What It Means for Customers.” With AI customer service solutions available, one would think that a zoom zoom semi-high tech outfit would find a way to handle issues in an elegant way. Wait! Maybe the company did, and this article documents how smart software may influence certain business decisions.

The story is simple. Smile Direct could not make its mail order dental business pay off. The cited news story presents what might be a glimpse of the AI future. I quote:

Smile Direct Club has also revealed its "lifetime smile guarantee" it previously offered was no longer valid, while those with payment plans set up are expected to continue making payments. The company has not yet revealed how customers can get refunds.

I like the idea that a “lifetime” is vague; therefore, once the company dies, the user is dead too. I enjoyed immensely the alleged expectation that customers who are using the mail order dental service — even though it is defunct and not delivering its “product” — will have to keep making payments. I assume that the friendly folks at online payment services and our friends at the big credit card companies will just keep doing the automatic billing. (Those payment institutions have super duper customer service systems in my experience. Yours, of course, may differ from mine.)

I am looking forward to straightening out this story. (You know. Dental braces. Straightening teeth via mail order. High tech. The next Google. Yada yada.)

Stephen E Arnold, December 11, 2023

Constraints Make AI More Human. Who Would Have Guessed?

December 11, 2023

This essay is the work of a dumb dinobaby. No smart software required.

AI developers could be one step closer to artificially recreating the human brain. ScienceDaily discusses a study from the University of Cambridge about “AI System Self-Organizes to Develop Features of Brains of Complex Organisms.” Neural systems are designed to organize, form connections, and balance an organism’s competing demands. They need energy and resources to grow an organism’s physical body, while they also optimize neural activity for information processing. This natural balance explains why animal brains arrive at similar organizational solutions.

Brains are designed to solve and understand complex problems while exerting as little energy as possible. Biological systems usually evolve to maximize energy resources available to them.

“See how much better the output is when we constrain the smart software,” says the young keyboard operator. Thanks, MSFT Copilot. Good enough.

Scientists from the Medical Research Council Cognition and Brain Sciences Unit (MRC CBSU) at the University of Cambridge experimented with this concept when they made a simplified brain model and applied physical constraints. The model developed traits similar to human brains.

The scientists tested the model brain system by having it navigate a maze. Maze navigation was chosen because it requires various tasks to be completed. The different tasks activate different nodes in the model. Nodes are similar to brain neurons. The brain model needed to practice navigating the maze:

“Initially, the system does not know how to complete the task and makes mistakes. But when it is given feedback it gradually learns to get better at the task. It learns by changing the strength of the connections between its nodes, similar to how the strength of connections between brain cells changes as we learn. The system then repeats the task over and over again, until eventually it learns to perform it correctly.

With their system, however, the physical constraint meant that the further away two nodes were, the more difficult it was to build a connection between the two nodes in response to the feedback. In the human brain, connections that span a large physical distance are expensive to form and maintain.”
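The distance rule in the quote can be expressed as a “wiring cost” penalty. Below is a minimal Python sketch, assuming a regularizer that multiplies each connection’s weight by the distance between its two nodes. This is one common way to model the idea, not necessarily the Cambridge team’s exact formulation; the grid layout, `strength`, and `lr` values are hypothetical.

```python
import math
import random


def grid_positions(n_side):
    """Place n_side**3 hypothetical nodes on a regular 3D grid."""
    return [(x, y, z) for x in range(n_side) for y in range(n_side) for z in range(n_side)]


def distance_matrix(positions):
    """Euclidean distance between every pair of nodes."""
    return [[math.dist(p, q) for q in positions] for p in positions]


def wiring_cost(weights, dist, strength):
    """Distance-weighted L1 penalty: a long connection costs more than a short one of equal strength."""
    n = len(weights)
    return strength * sum(abs(weights[i][j]) * dist[i][j] for i in range(n) for j in range(n))


def shrink_step(weights, dist, strength, lr):
    """One proximal (soft-threshold) step on the wiring penalty alone.

    Each weight moves toward zero by lr * strength * distance, so long-range
    connections are pruned first. In a full model this step would follow the
    ordinary task-learning update driven by feedback.
    """
    return [
        [
            math.copysign(max(abs(w) - lr * strength * d, 0.0), w)
            for w, d in zip(w_row, d_row)
        ]
        for w_row, d_row in zip(weights, dist)
    ]


if __name__ == "__main__":
    positions = grid_positions(2)  # 8 nodes on the corners of a cube
    dist = distance_matrix(positions)
    random.seed(0)
    weights = [[random.uniform(-1, 1) for _ in positions] for _ in positions]
    print("wiring cost before:", round(wiring_cost(weights, dist, 0.1), 3))
    for _ in range(5):
        weights = shrink_step(weights, dist, strength=0.1, lr=0.5)
    print("wiring cost after: ", round(wiring_cost(weights, dist, 0.1), 3))
```

The soft-threshold step is a design choice for clarity: it isolates the “distant links are expensive” pressure from the maze-learning signal, which a real model would combine in each update.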

The physical constraints on the model forced its nodes to react and adapt similarly to a human brain. The implications for AI are that such constraints could help algorithms process more complex tasks faster and advance the evolution of “robot” brains.

Whitney Grace, December 11, 2023

Weaponizing AI Information for Rubes with Googley Fakes

December 8, 2023

This essay is the work of a dumb dinobaby. No smart software required.

From the “Hey, rube” department: “Google Admits That a Gemini AI Demo Video Was Staged” reports as actual factual:

There was no voice interaction, nor was the demo happening in real time.

Young Star Wars fans learn the truth behind the scenes that thrill them. Thanks, MSFT Copilot. One try and some work with the speech bubble and I was good to go.

And to what magical event does this mysterious statement refer? The Google Gemini announcement. Yep, 16 Hollywood style videos of “reality.” Engadget asserts:

Google is counting on its very own GPT-4 competitor, Gemini, so much that it staged parts of a recent demo video. In an opinion piece, Bloomberg says Google admits that for its video titled “Hands-on with Gemini: Interacting with multimodal AI,” not only was it edited to speed up the outputs (which was declared in the video description), but the implied voice interaction between the human user and the AI was actually non-existent.

The article makes what I think is a rather gentle statement:

This is far less impressive than the video wants to mislead us into thinking, and worse yet, the lack of disclaimer about the actual input method makes Gemini’s readiness rather questionable.

Hopefully sometime in the near future Googlers can make reality from Hollywood-type fantasies. After all, policeware vendors have been trying to deliver a Minority Report-type of investigative experience for a heck of a lot longer.

What’s the most interesting part of the Google AI achievement? I think it illuminates the thinking of those who live in an ethical galaxy far, far away… if true, of course. Of course. I wonder if the same “fake it til you make it” approach applies to other Google activities?

Stephen E Arnold, December 8, 2023

Google Smart Software Titbits: Post Gemini Edition

December 8, 2023

This essay is the work of a dumb dinobaby. No smart software required.

In the Apple-inspired roll out of Google Gemini, the excitement is palpable. Is your heart palpitating? Ah, no. Neither is mine. Nevertheless, in the aftershock of a blockbuster “me too,” the knowledge shrapnel has peppered my dinobaby lair; to wit: Gemini, according to Wired, is a “new breed” of AI. The source? Google’s Demis Hassabis.

What happens when the marketing does not align with the user experience? Tell the hardware wizards to shift into high gear, of course. Then tell the marketing professionals to evolve the story. Thanks, MSFT Copilot. You know I think you enjoyed generating this image.

Navigate to “Google Confirms That Its Cofounder Sergey Brin Played a Key Role in Creating Its ChatGPT Rival.” That’s a clickable headline. The write up asserts: “Google hinted that its cofounder Sergey Brin played a key role in the tech giant’s AI push.”

Interesting. One person involved in both Google and OpenAI. And Google responding to OpenAI after one year? Management brilliance or another high school science club method? The right information at the right time is nine-tenths of any battle. Was Google not processing information? Was the information it received about OpenAI incorrect or weaponized? Now Gemini is a “new breed” of AI. The Verge reports that McDonald’s burger joints will use Google AI to “make sure your fries are fresh.”

Google has been busy in non-AI areas; for instance:

- The Register asserts that a US senator claims Google and Apple reveal push notification data to non-US nation states

- Google has ramped up its donations to universities, according to TechMeme

- Lost files you thought were in Google Drive? Never fear. Google has a software tool you can use to fix your problem. Well, that’s what Engadget says.

So an AI problem? What problem?

Stephen E Arnold, December 8, 2023

Safe AI or Money: Expert Concludes That Money Wins

December 8, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I read “The Frantic Battle over OpenAI Shows That Money Triumphs in the End.” The author, an esteemed wizard in the world of finance and economics, reveals that money is important. Here’s a snippet from the essay which I found truly revolutionary, brilliant, insightful, and truly novel:

The academic wizard has concluded that a ball is indeed round. The world of geometry has been stunned. The ball is not just round. It exists as a sphere. The most shocking insight from the Ivory Tower is that the ball bounces. Thanks for the good enough image, MSFT Copilot.

But ever since OpenAI’s ChatGPT looked to be on its way to achieving the holy grail of tech – an at-scale consumer platform that would generate billions of dollars in profits – its non-profit safety mission has been endangered by big money. Now, big money is on the way to devouring safety.

Who knew?

The essay continues:

Which all goes to show that the real Frankenstein monster of AI is human greed. Private enterprise, motivated by the lure of ever-greater profits, cannot be relied on to police itself against the horrors that an unfettered AI will create. Last week’s frantic battle over OpenAI shows that not even a non-profit board with a capped profit structure for investors can match the power of big tech and Wall Street. Money triumphs in the end.

Oh, my goodness. Plato, Aristotle, and other mere pretenders to genius you have been put to shame. My heart is palpitating from the revelation that “money triumphs in the end.”

Stephen E Arnold, December 8, 2023

Big Tech, Big Fakes, Bigger Money: What Will AI Kill?

December 7, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I don’t read The Hollywood Reporter. I did one job for a Hollywood big wheel. That was enough for me. I don’t drink. I don’t take drugs unless prescribed by my comic book addicted medical doctor in rural Kentucky. I don’t dress up and wear skin bronzers in the hope that my mobile will buzz. I don’t stay out late. I don’t fancy doing things which make my ethical compass buzz more angrily than my mobile phone. Therefore, The Hollywood Reporter does not speak to me.

One of my research team sent me a link to “The Rise of AI-Powered Stars: Big Money and Risks.” I scanned the write up and then I went through it again. By golly, The Hollywood Reporter hit on an “AI will kill us” angle not getting as much publicity as Sam AI-Man’s minimal substance interview.

Can a techno feudalist generate new content using what looks like “stars” or “well known” people? Probably. A payoff has to be within sight. Otherwise, move on to the next next big thing. Thanks, MSFT Copilot. Good enough cartoon.

Please, read the original and complete article in The Hollywood Reporter. Here’s the passage which rang the insight bell for me:

tech firms are using the power of celebrities to introduce the underlying technology to the masses. “There’s a huge possible business there and I think that’s what YouTube and the music companies see, for better or for worse”

Let’s think about these statements.

First, the idea of consumerizing AI for the masses is interesting. However, I interpret the insight as having several force vectors:

- Become the plumbing for the next wave of user generated content (UGC)

- Get paid by users AND impose an advertising tax on the UGC

- Obtain real-time data about the efficacy of specific smart generation features so that resources can be directed to maintain a “moat” from would-be attackers.

Second, by signing deals with people who to me are essentially unknown, the techno giants are digging some trenches and putting somewhat crude asparagus obstacles where the competitors are likely to drive their AI machines. The benefits include:

- First-hand experience with how the stars’ ego systems respond

- The data regarding cost of signing up a star, payouts, and selling ads against the content

- Determining what push back exists [a] among fans and [b] the historical middlemen who have just been put on notice that they can find their future elsewhere.

Finally, the idea of the upside and the downside for particular entities and companies is interesting. There will be winners and losers. Right now, Hollywood is a loser. TikTok is a winner. The companies identified in The Hollywood Reporter want to be winners — big winners.

I may have to start paying more attention to this publication and its stories. Good stuff. What will AI kill? The cost of some human “talent”?

Stephen E Arnold, December 7, 2023

Will TikTok Go Slow in AI? Well, Sure

December 7, 2023

This essay is the work of a dumb dinobaby. No smart software required.

The AI efforts of non-governmental organizations, government agencies, and international groups are interesting. Many resolutions, proclamations, and blog polemics, etc. have been saying, “Slow down AI. Smart software will put people out of work. Destroy humans’ ability to think. Unleash the ‘I’ll be back guy.'”

Getting those enthusiastic about smart software is a management problem. Thanks, MSFT Copilot. Good enough.

My stance in the midst of this fearmongering has been bemusement. I know that predicting the future perturbations of technology is as difficult as picking a Kentucky Derby winner and not picking a horse that will drop dead during the race. When groups issue proclamations and guidelines without an enforcement mechanism, not much is going to happen in the restraint department.

I submit as partial evidence for my bemusement the article “TikTok Owner ByteDance Joins Generative AI Frenzy with Service for Chatbot Development, Memo Says.” What seems clear, if the write up is mostly on the money, is that a company linked to China is joining “the race to offer AI model development as a service.”

Two quick points:

- Model development allows the provider to get a sneak peek at what the user of the system is trying to do. This means that information flows from customer to provider.

- The company in the “race” is one of some concern to certain governments and their representatives.

The write up says:

ByteDance, the Chinese owner of TikTok, is working on an open platform that will allow users to create their own chatbots, as the company races to catch up in generative artificial intelligence (AI) amid fierce competition that kicked off with last year’s launch of ChatGPT. The “bot development platform” will be launched as a public beta by the end of the month…

The cited article points out:

China’s most valuable unicorn has been known for using some form of AI behind the scenes from day one. Its recommendation algorithms are considered the “secret sauce” behind TikTok’s success. Now it is jumping into an emerging market for offering large language models (LLMs) as a service.

What other countries are beavering away on smart software? Will these drive in the slow lane or the fast lane?

Stephen E Arnold, December 7, 2023

Gemini Twins: Which Is Good? Which Is Evil? Think Hard

December 6, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I received a link to a Google DeepMind marketing demonstration Web page called “Welcome to Gemini.” To me, Gemini means Castor and Pollux. Somewhere along the line, someone (maybe a wonky professor named Chapman) told my class that these two represented Zeus and Hades. Stated another way, one was a sort of “good” deity with a penchant for non-godlike behavior. The other was downright awful most of the time. I assume that Google knows about Gemini, its mythological baggage, and the duality of a Superman type doing the truth, justice, and the American way routine, and the other inspiring a range of bad actors. Imagine. Something that is good and bad. That’s smart software, I assume. The good part sells ads; the bad part fails at marketing perhaps?

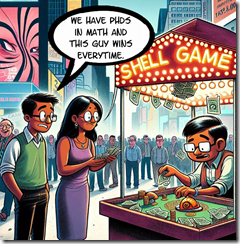

Two smart Googlers in New York City learn the difference between book learning for a PhD and street learning for a degree from the Institute of Hard Knocks. Thanks, MSFT Copilot. (Are you monitoring Google’s effort to dominate smart software by announcing breakthroughs very few people understand? Are you finding Google’s losses at the AI shell game entertaining?)

Google’s blog post states with rhetorical aplomb:

Gemini is built from the ground up for multimodality — reasoning seamlessly across text, images, video, audio, and code.

Well, any other AI using Google’s previous technology is officially behind the curve. That’s clear to me. I wonder if Sam AI-Man, Microsoft, and the users of ChatGPT are tuned to the Google wavelength? There’s a video, or more accurately more than a dozen of them, but I don’t like video so I skipped them all. There are graphs with minimal data and some that appear to jiggle in “real” time. I skipped those too. There are tables. I did read some of the data and learned that Gemini can do basic arithmetic and “challenging” math like geometry. That is the 3, 4, 5 triangle stuff. I wonder how many people under the age of 18 know how to use a tape measure to determine if a corner is 90 degrees? (If you don’t, why not ask ChatGPT or MSFT Copilot?) I processed the twins’ sizes. They come in three. Do twins come in triples? Sigh. Anyway, one can use Gemini Ultra, Gemini Pro, and Gemini Nano. Okay, but I am hung up on the twins and the three sizes. Sorry. I am a dinobaby. There are more movies. I exited the site and navigated to YCombinator’s Hacker News. Didn’t Sam AI-Man have a brush with that outfit?
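For readers under 18 (and dinobabies who have forgotten), the tape measure trick rests on the converse of the Pythagorean theorem. Mark 3 units along one wall and 4 units along the other. If the diagonal between the two marks measures 5 units, the corner is square. Any multiple works, such as 30, 40, and 50 inches:

$$3^2 + 4^2 = 9 + 16 = 25 = 5^2 \quad\Longrightarrow\quad \text{the corner is } 90^\circ$$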

You can find the comments about Gemini at this link. I want to highlight several quotations I found suggestive. Then I want to offer a few observations based on my conversation with my research team.

Here are some representative statements from the YCombinator’s forum:

- Jansan said: Yes, it [Google] is very successful in replacing useful results with links to shopping sites.

- FrustratedMonkey said: Well, deepmind was doing amazing stuff before OpenAI. AlphaGo, AlphaFold, AlphaStar. They were groundbreaking a long time ago. They just happened to miss the LLM surge.

- Wddkcs said: Googles best work is in the past, their current offerings are underwhelming, even if foundational to the progress of others.

- Foobar said: The whole things reeks of being desperate. Half the video is jerking themselves off that they’ve done AI longer than anyone and they “release” (not actually available in most countries) a model that is only marginally better than the current GPT4 in cherry-picked metrics after nearly a year of lead-time?

- Arson9416 said: Google is playing catchup while pretending that they’ve been at the forefront of this latest AI wave. This translates to a lot of talk and not a lot of action. OpenAI knew that just putting ChatGPT in peoples hands would ignite the internet more than a couple of over-produced marketing videos. Google needs to take a page from OpenAI’s playbook.

Please, work through the more than 600 comments about Gemini and reach your own conclusions. Here are mine:

- The Google is trying to market using rhetorical tricks and big-brain hot buttons. The effort comes across to me as similar to Ford’s marketing of the Edsel.

- Sam AI-Man remains the man in AI. Coups, tension, and chaos — irrelevant. The future for many means ChatGPT.

- The comment about timing is a killer. Google missed the train. The company wants to catch up, but it is not shipping products nor being associated with features grade school kids and harried marketers with degrees in art history can use now.

Sundar Pichai is not Sam AI-Man. The difference has become clear in the last year. If Sundar and Sam are twins, which represents what?

Stephen E Arnold, December 6, 2023
