Will TikTok Go Slow in AI? Well, Sure

December 7, 2023

This essay is the work of a dumb dinobaby. No smart software required.

The AI efforts of non-governmental organizations, government agencies, and international groups are interesting. Many resolutions, proclamations, and blog polemics, etc. have been saying, “Slow down AI. Smart software will put people out of work. Destroy humans’ ability to think. Unleash the ‘I’ll be back’ guy.”

Getting those enthusiastic about smart software is a management problem. Thanks, MSFT Copilot. Good enough.

My stance in the midst of this fearmongering has been bemusement. I know that predicting the future perturbations of technology is as difficult as picking a Kentucky Derby winner and not picking a horse that will drop dead during the race. When groups issue proclamations and guidelines without an enforcement mechanism, not much is going to happen in the restraint department.

I submit as partial evidence for my bemusement the article “TikTok Owner ByteDance Joins Generative AI Frenzy with Service for Chatbot Development, Memo Says.” What seems clear, if the write up is mostly on the money, is that a company linked to China is joining “the race to offer AI model development as a service.”

Two quick points:

- Model development allows the provider to get a sneak peek at what the user of the system is trying to do. This means that information flows from customer to provider.

- The company in the “race” is one of some concern to certain governments and their representatives.

The write up says:

ByteDance, the Chinese owner of TikTok, is working on an open platform that will allow users to create their own chatbots, as the company races to catch up in generative artificial intelligence (AI) amid fierce competition that kicked off with last year’s launch of ChatGPT. The “bot development platform” will be launched as a public beta by the end of the month…

The cited article points out:

China’s most valuable unicorn has been known for using some form of AI behind the scenes from day one. Its recommendation algorithms are considered the “secret sauce” behind TikTok’s success. Now it is jumping into an emerging market for offering large language models (LLMs) as a service.

What other countries are beavering away on smart software? Will these drive in the slow lane or the fast lane?

Stephen E Arnold, December 7, 2023

Gemini Twins: Which Is Good? Which Is Evil? Think Hard

December 6, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I received a link to a Google DeepMind marketing demonstration Web page called “Welcome to Gemini.” To me, Gemini means Castor and Pollux. Somewhere along the line, someone, maybe a wonky professor named Chapman, told my class that these two represented Zeus and Hades. Stated another way, one was a sort of “good” deity with a penchant for non-godlike behavior. The other was downright awful most of the time. I assume that Google knows about Gemini, its mythological baggage, and the duality of a Superman type doing the truth, justice, and American way thing, and the other inspiring a range of bad actors. Imagine. Something that is good and bad. That’s smart software I assume. The good part sells ads; the bad part fails at marketing perhaps?

Two smart Googlers in New York City learn the difference between book learning for a PhD and street learning for a degree from the Institute of Hard Knocks. Thanks, MSFT Copilot. (Are you monitoring Google’s effort to dominate smart software by announcing breakthroughs very few people understand? Are you finding Google’s losses at the AI shell game entertaining?)

Google’s blog post states with rhetorical aplomb:

Gemini is built from the ground up for multimodality — reasoning seamlessly across text, images, video, audio, and code.

Well, any other AI using Google’s previous technology is officially behind the curve. That’s clear to me. I wonder if Sam AI-Man, Microsoft, and the users of ChatGPT are tuned to the Google wavelength? There’s a video or more accurately more than a dozen of them, but I don’t like video so I skipped them all. There are graphs with minimal data and some that appear to jiggle in “real” time. I skipped those too. There are tables. I did read some of the data and learned that Gemini can do basic arithmetic and “challenging” math like geometry. That is the 3, 4, 5 triangle stuff. I wonder how many people under the age of 18 know how to use a tape measure to determine if a corner is 90 degrees? (If you don’t, why not ask ChatGPT or MSFT Copilot.) I processed that the twins come in three sizes. Do twins come in triples? Sigh. Anyway one can use Gemini Ultra, Gemini Pro, and Gemini Nano. Okay, but I am hung up on the twins and the three sizes. Sorry. I am a dinobaby. There are more movies. I exited the site and navigated to YCombinator’s Hacker News. Didn’t Sam AI-Man have a brush with that outfit?

You can find the comments about Gemini at this link. I want to highlight several quotations I found suggestive. Then I want to offer a few observations based on my conversation with my research team.

Here are some representative statements from the YCombinator’s forum:

- Jansan said: Yes, it [Google] is very successful in replacing useful results with links to shopping sites.

- FrustratedMonkey said: Well, deepmind was doing amazing stuff before OpenAI. AlphaGo, AlphaFold, AlphaStar. They were groundbreaking a long time ago. They just happened to miss the LLM surge.

- Wddkcs said: Googles best work is in the past, their current offerings are underwhelming, even if foundational to the progress of others.

- Foobar said: The whole things reeks of being desperate. Half the video is jerking themselves off that they’ve done AI longer than anyone and they “release” (not actually available in most countries) a model that is only marginally better than the current GPT4 in cherry-picked metrics after nearly a year of lead-time?

- Arson9416 said: Google is playing catchup while pretending that they’ve been at the forefront of this latest AI wave. This translates to a lot of talk and not a lot of action. OpenAI knew that just putting ChatGPT in peoples hands would ignite the internet more than a couple of over-produced marketing videos. Google needs to take a page from OpenAI’s playbook.

Please, work through the more than 600 comments about Gemini and reach your own conclusions. Here are mine:

- The Google is trying to market using rhetorical tricks and big-brain hot buttons. The effort comes across to me as similar to Ford’s marketing of the Edsel.

- Sam AI-Man remains the man in AI. Coups, tension, and chaos — irrelevant. The future for many means ChatGPT.

- The comment about timing is a killer. Google missed the train. The company wants to catch up, but it is neither shipping products nor being associated with features grade school kids and harried marketers with degrees in art history can use now.

Sundar Pichai is not Sam AI-Man. The difference has become clear in the last year. If Sundar and Sam are twins, which represents what?

Stephen E Arnold, December 6, 2023

How about Fear and Paranoia to Advance an Agenda?

December 6, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I thought sex sells. I think I was wrong. Fear seems to be the barn burner at the end of 2023. And why not? We have the shadow of another global pandemic. We have wars galore. We have craziness on US airplanes. We have a Cybertruck which spells the end for anyone hit by the behemoth.

I read (but did not shake like the delightful female in the illustration) “AI and Mass Spying.” The author is a highly regarded “public interest technologist,” an internationally renowned security professional, and a security guru. For me, the key factoid is that he is a fellow at the Berkman Klein Center for Internet & Society at Harvard University and a lecturer in public policy at the Harvard Kennedy School. Mr. Schneier is a board member of the Electronic Frontier Foundation and the most, most interesting organization AccessNow.

Fear speaks clearly to those in retirement communities, elder care facilities, and those who are uninformed. Let’s say, “Grandma, you are going to be watched when you are in the bathroom.” Thanks, MSFT Copilot. I hope you are sending data back to Redmond today.

I don’t want to make too much of the Harvard University connection. I feel it is important to note that the esteemed educational institution got caught with its ethical pants around its ankles, not once, but twice in recent memory. The first misstep involved an ethics expert on the faculty who allegedly made up information. The second is the current hullabaloo about a whistleblower allegation. The AP slapped this headline on that report: “Harvard Muzzled Disinfo Team after $500 Million Zuckerberg Donation.” (I am tempted to mention the Harvard professor who is convinced he has discovered fungible proof of alien technology.)

So what?

The article “AI and Mass Spying” is a baffler to me. The main point of the write up strikes me as:

Summarization is something a modern generative AI system does well. Give it an hourlong meeting, and it will return a one-page summary of what was said. Ask it to search through millions of conversations and organize them by topic, and it’ll do that. Want to know who is talking about what? It’ll tell you.

I interpret the passage to mean that smart software in the hands of law enforcement, intelligence operatives, investigators in one of the badge-and-gun agencies in the US, or a cyber lawyer is really, really bad news. Smart surveillance has arrived. Smart software can process masses of data. Plus the outputs may be wrong. I think this means the sky is falling. The fear one is supposed to feel is going to be the way a chicken feels when it sees the Chik-fil-A butcher truck pull up to the barn.

Several observations:

- Let’s assume that smart software grinds through whatever information is available to something like a spying large language model. Are those engaged in law enforcement unaware that smart software generates baloney along with the Kobe beef? Will investigators knock off the verification processes because a new system has been installed at a fusion center? The answer to these questions is, “Fear advances the agenda of using smart software for certain purposes; specifically, enforcement of rules, regulations, and laws.”

- I know that the idea that “all” information can be processed is a jazzy claim. Google made it, and those familiar with Google search results know that Google does not even come close to all. It can barely deliver useful results from the Railway Retirement Board’s Web site. “All” covers a lot of ground, and it is unlikely that a policeware vendor will be able to do much more than process a specific collection of data believed to be related to an investigation. “All” is for fear, not illumination. Save the categorical affirmatives for the marketing collateral, please.

- The computational cost for applying smart software to large domains of data — for example, global intercepts of text messages — is fun to talk about over lunch. But the costs are quite real. Then the costs of the computational infrastructure have to be paid. Then the cost of the downstream systems and people who have to figure out if the smart software is hallucinating or delivering something useful. I would suggest that Israel’s surprise at the unhappy events in October 2023 to the present day unfolded despite the baloney for smart security software, a great intelligence apparatus, and the tons of marketing collateral handed out at law enforcement conferences. News flash: The stuff did not work.

In closing, I want to come back to fear. Exactly what is accomplished by using fear as the pointy end of the stick? Is it insecurity about smart software? Are there other messages framed in a different way to alert people to important issues?

Personally, I think fear is a low-level technique for getting one’s point across. But for those affiliated with an outfit tied to the ethics matter and now the payola approach to information, how about putting on the big boy pants and selecting a rhetorical trope that is unlikely to do anything except remind people that the Covid thing could have killed us all? Err. No. And what is the agenda fear advances?

So, strike the sex sells trope. Go with fear sells.

Stephen E Arnold, December 6, 2023

AI: Big Ideas Become Money Savers and Cost Cutters

December 6, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Earlier this week (November 28, 2023), the British newspaper The Guardian published “Sports Illustrated Accused of Publishing Articles Written by AI.” The main idea is that dependence on human writers became the focus of a bunch of bean counters. The magazine has a reasonably high profile among a demographic not focused on discerning the difference between machine output and sleek, intellectual, well groomed New York “real” journalists. Some cared. I didn’t. It’s money ball in the news business.

The day before the Sports Illustrated slick business and PR move, I noted a Murdoch-infused publication’s revelation about smart software. Barron’s published “AI Will Create—and Destroy—Jobs. History Offers a Lesson.” Barron’s wrote about it; Sports Illustrated got snared doing it.

Barron’s said:

That AI technology will come for jobs is certain. The destruction and creation of jobs is a defining characteristic of the Industrial Revolution. Less certain is what kind of new jobs—and how many—will take their place.

Okay, the Industrial Revolution. Exactly how long did that take? What jobs were destroyed? What were the benefits at the beginning, the middle, and end of the Industrial Revolution? What were the downsides of the disruption which unfolded over time? Decades wasn’t it?

The AI “revolution” is perceived to be real. Investors, testosterone-charged venture capitalists, and some Type A students are going to make the AI Revolution a reality. Damn the regulators, the copyright complainers, and the dinobabies who want to read, think, and write themselves.

Barron’s noted:

A survey conducted by LinkedIn for the World Economic Forum offers hints about where job growth might come from. Of the five fastest-growing job areas between 2018 and 2022, all but one involve people skills: sales and customer engagement; human resources and talent acquisition; marketing and communications; partnerships and alliances. The other: technology and IT. Even the robots will need their human handlers.

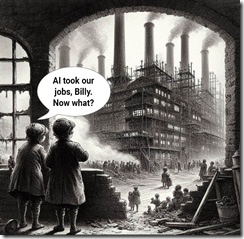

I can think of some interesting jobs. Thanks, MSFT Copilot. You did ingest some 19th century illustrations, didn’t you, you digital delight.

Now those are rock solid sources: Microsoft’s LinkedIn and the charming McKinsey & Company. (I think of McKinsey as the opioid innovators, but that’s just my inexplicable predisposition toward an outstanding bastion of ethical behavior.)

My problem with the Sports Illustrated AI move and the Barron’s essay boils down to the bipolarism which surfaces when a new next big thing appears on the horizon. Predicting what will happen when a technology smashes into business billiard balls is fraught with challenges.

One thing is clear: The balls are rolling, and journalists, paralegals, consultants, and some knowledge workers are going to find themselves in the side pocket. The way out might be making TikToks or selling gadgets on eBay.

Some will say, “AI took our jobs, Billy. Now what?” Yes, now what?

Stephen E Arnold, December 6, 2023

The RAG Snag: Convenience May Undermine Thinking for Millions

December 4, 2023

This essay is the work of a dumb dinobaby. No smart software required.

My understanding is that everyone who is informed about AI knows about RAG. The acronym means Retrieval Augmented Generation. One explanation of RAG appears in the nVidia blog in the essay “What Is Retrieval Augmented Generation aka RAG.” nVidia, in my opinion, loves whatever drives demand for its products.

The idea is to use machine processes to minimize errors in output. The write up states:

Retrieval-augmented generation (RAG) is a technique for enhancing the accuracy and reliability of generative AI models with facts fetched from external sources.

Simplifying the idea, RAG methods gather information and perform reference checks. The checks can be performed by consulting other smart software, the Web, or knowledge bases like an engineering database. nVidia provides a “reference architecture,” which obviously relies on nVidia products.
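For readers who want to see the gather-then-check idea in miniature, here is a rough Python sketch. It is not nVidia's reference architecture. The three-sentence knowledge base, the word-overlap scoring, and the function names `retrieve` and `build_prompt` are all made up for illustration, and the step where a language model writes the answer is left out entirely.

```python
import re
from collections import Counter

# Hypothetical stand-in for a knowledge base; a real system would query
# a search index, the Web, or something like an engineering database.
KNOWLEDGE_BASE = [
    "Retrieval-augmented generation fetches facts from external sources.",
    "A special librarian conducts a reference interview before searching.",
    "Pizza restaurants are listed only if they appear in the data set.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question, documents, top_k=2):
    """Return up to top_k documents that share words with the question.

    Word overlap is an assumption made for brevity; production systems
    typically rank with a search engine or vector similarity.
    """
    question_words = Counter(tokenize(question))
    scored = []
    for doc in documents:
        overlap = sum((question_words & Counter(tokenize(doc))).values())
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question, sources):
    """Assemble the text a generative model would be handed."""
    numbered = "\n".join(f"[{i}] {s}" for i, s in enumerate(sources, 1))
    return (
        "Answer using only these sources and cite them by number.\n"
        f"{numbered}\n"
        f"Question: {question}"
    )


question = "Which pizza restaurants are listed?"
sources = retrieve(question, KNOWLEDGE_BASE)
prompt = build_prompt(question, sources)
print(prompt)
```

The point of the sketch is that the model only sees what the retriever hands it. Whatever is not in the collection never reaches the answer.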

The write up does an obligatory tour of a couple of search and retrieval systems. Why? Most of the trendy smart software systems are demonstrations of information access methods wrapped up in tools that think for the “searcher” or person requiring output to answer a question, explain a concept, or help the human to think clearly. (In dinosaur days, these functions were associated with a special librarian or an informed colleague who could ask questions and conduct a reference interview. I hope the dusty concepts did not make you sneeze.)

“Yes, young man. The idea of using multiple sources can result in learning. We now call this RAG, not research.” The young man, stunned by the insight, says, “WTF?” Thanks, MSFT Copilot. I love the dual tassels. The young expert is obviously twice as intelligent as the somewhat more experienced dinobaby with the weird fingers.

The article includes a diagram which I found difficult to read. I think the simple blocks represent the way in which smart software obviates the need for the user to know much about sources, verification, or provenance about the sources used to provide information. Furthermore, the diagram makes the entire process look just like getting the location of a pizza restaurant from an iPhone (no Google Maps for me).

The highlights of the write up are the links within the article. An interested reader can follow the links for additional information.

Several observations:

- The emergence of RAG as a replacement for such concepts as “search”, “special librarian,” and “provenance” makes clear that finding information is a problem not solved for systems, software, and people. New words make the “old” problem appear “new” again.

- The push for recursive methods to figure out what’s “right” or “correct” will regress to the mean; that is, despite the mathiness of the methods, systems will deliver “acceptable” or “average” outputs. People who think that software will impart genius to a user are believing in a dream. These individuals will not be living the dream.

- Widespread use of smart software and automation means that for most people, critical thinking will become the equivalent of an appendix. Instead of mother knows best, the system will provide the framing, the context, and the implication that the outputs are correct.

RAG opens new doors. Those who operate widely adopted smart software systems will have significant control over what people think and, thus, do. If the iPhone shows a pizza joint, what about other pizza joints? Just ask. The system will not show pizza joints not verified in some way. If that “verification” requires the company advertising to be in the data set, well, that’s the set of pizza joints one will see. The others? Invisible, not on the radar, and doomed to failure seem their fate.

RAG is significant because it is new speak and it marks a disassociation of “knowing” from “accepting” output information as the best and final words on a topic. I want to point out that for a small percentage of humans, their superior cognitive abilities will ensure a different trajectory. The “new elite” will become the individuals who design, shape, control, and deploy these “smart” systems.

Most people will think they are informed because they can obtain information from a device. The mass of humanity will not know how information control influences their understanding and behavior. Am I correct? I don’t know. I do know one thing: This dinobaby prefers to do knowledge acquisition the old fashioned, slow, inefficient, and uncontrolled way.

Stephen E Arnold, December 4, 2023

Health Care and Steerable AI

December 4, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Large language models are powerful tools that can be used for the betterment of humanity. Or, in the hands of for-profit entities, to get away with wringing every last penny out of a system in the most opaque and intractable ways possible. When that system manages the wellbeing of millions and millions of people, the fallout can be tragic. TechDirt charges, “’AI’ Is Supercharging our Broken Healthcare System’s Worst Tendencies.”

Reporter Karl Bode begins by illustrating the bad blend of corporate greed and AI with journalism as an example. Media companies, he writes, were so eager to cut corners and dodge unionized labor they adopted AI technology before it was ready. In that case the results were “plagiarism, bull[pucky], a lower quality product, and chaos.” Those are bad. Mistakes in healthcare are worse. We learn:

“Not to be outdone, the very broken U.S. healthcare industry is similarly trying to layer half-baked AI systems on top of a very broken system. Except here, human lives are at stake. For example UnitedHealthcare, the largest health insurance company in the US, has been using AI to determine whether elderly patients should be cut off from Medicare benefits. If you’ve ever navigated this system on behalf of an elderly loved one, you likely know what a preposterously heartless [poop]whistle this whole system already is long before automation gets involved. But a recent investigation by STAT showed the AI consistently made major errors and cut elderly folks off from needed care prematurely, with little recourse by patients or families. … A recent lawsuit filed in the US District Court for the District of Minnesota alleges that the AI in question was reversed by human review roughly 90 percent of the time.”

And yet, employees were ordered to follow the algorithm’s decisions no matter their inanity. For the few patients who did win hard-fought reversals, those decisions were immediately followed by fresh rejections that kicked them back to square one. Bode writes:

“The company in question insists that the AI’s rulings are only used as a guide. But it seems pretty apparent that, as in most early applications of LLMs, the systems are primarily viewed by executives as a quick and easy way to cut costs and automate systems already rife with problems, frustrated consumers, and underpaid and overtaxed support employees.”

But is there hope these trends will be eventually curtailed? Well, no. The write-up concludes by scoffing at the idea that government regulations or class action lawsuits are any match for corporate greed. Sounds about right.

Cynthia Murrell, December 4, 2023

AI Adolescence Ascendance: AI-iiiiii!

December 1, 2023

This essay is the work of a dumb dinobaby. No smart software required.

The monkey business of smart software has revealed its inner core. The cute high school essays and the comments about how to do search engine optimization are based on the fundamental elements of money, power, and what I call ego-tanium. When these fundamental elements go critical, exciting things happen. I know this assertion is correct because I read “The AI Doomers Have Lost This Battle”, an essay which appears in the weird orange newspaper The Financial Times.

The British bastion of practical financial information says:

It would be easy to say that this chaos showed that both OpenAI’s board and its curious subdivided non-profit and for-profit structure were not fit for purpose. One could also suggest that the external board members did not have the appropriate background or experience to oversee a $90bn company that has been setting the agenda for a hugely important technology breakthrough.

In my lingo, the orange newspaper is pointing out that a high school science club management style is like a burning electric vehicle. Once ignited, the message is, “Stand back, folks. Let it burn.”

“Isn’t this great?” asks the driver. The passenger, a former Doomsayer, replies, “AIiiiiiiiiii.” Thanks MidJourney, another good enough illustration which I am supposed to be able to determine contains copyrighted material. Exactly how, may I ask? Oh, you don’t know.

The FT picks up a big-picture idea; that is, smart software can become a problem for humanity. That’s interesting because the book “Weapons of Math Destruction” did a good job of explaining why algorithms can go off the rails. But the FT’s essay embraces the idea of software as the Terminator with the enthusiasm of the crazy old-time guy who shouted “Eureka.”

I note this passage:

Unfortunately for the “doomers”, the events of the last week have sped everything up. One of the now resigned board members was quoted as saying that shutting down OpenAI would be consistent with the mission (better safe than sorry). But the hundreds of companies that were building on OpenAI’s application programming interfaces are scrambling for alternatives, both from its commercial competitors and from the growing wave of open-source projects that aren’t controlled by anyone. AI will now move faster and be more dispersed and less controlled. Failed coups often accelerate the thing that they were trying to prevent.

Okay, the yip yap about slowing down smart software is officially wrong. I am not sure about the government committees and their white papers about artificial intelligence. Perhaps the documents can be printed out and used to heat the camp sites of knowledge workers who find themselves out of work.

I find it amusing that some of the governments worried about smart software are involved in autonomous weapons. The idea that a drone with access to a facial recognition component can pick out a target and then explode over the person’s head is an interesting one.

Is there a connection between the high school antics of OpenAI, the hand-wringing about smart software, and the diffusion of decider systems? Yes, and the relationship is one of those hockey stick curves so loved by MBAs from prestigious US universities. (Non reproducibility and a fondness for Jeffrey Epstein-type donors is normative behavior.)

Those who want to cash in on the next Big Thing are officially in the 2023 equivalent of the California gold rush. Unlike the FT, I had no doubt about the ascendance of the go-fast approach to technological innovation. Technologies, even lousy ones, are like gerbils. Start with two or three, and pretty soon there are lots of gerbils.

Will the AI gerbils and the progeny be good or bad? Because they are based on the essential elements of life (money, power, and ego-tanium), the outlook is … exciting. I am glad I am a dinobaby. Too bad about the Doomers, who are regrouping to try to build a shield around the most powerful elements now emitting excited particles. The glint in the eyes of Microsoft executives and some venture firms is the trace of high-energy AI emissions in the innovators’ aqueous humor.

Stephen E Arnold, December 1, 2023

Deepfakes: Improving Rapidly with No End in Sight

December 1, 2023

This essay is the work of a dumb dinobaby. No smart software required.

The possible applications of AI technology are endless and we’ve barely imagined the opportunities. While tech experts mainly focus on the benefits of AI, bad actors are concentrating on how to use it for illegal activities. The Next Web explains how bad actors are using AI for scams in “Deepfake Fraud Attempts Are Up 3000% In 2023-Here’s Why.” Bad actors are using cheap and widely available AI technology to create deepfake content for fraud attempts.

Onfido, an ID verification company in London, reports that deepfake fraud attempts increased 31-fold in 2023. That is a 3000% year-on-year gain. The AI tool of choice for bad actors is face-swapping apps. They range in quality from a bad copy and paste job to sophisticated, blockbuster quality fakes. While the crude attempts are laughable, it only takes one successful facial identity verification for fraudsters to win.

The bad actors concentrate on quantity over quality and account for 80.3% of attacks in 2023. Biometric information is a key component to stop fraudsters:

“Despite the rise of deepfake fraud, Onfido insists that biometric verification is an effective deterrent. As evidence, the company points to its latest research. The report found that biometrics received three times fewer fraudulent attempts than documents. The criminals, however, are becoming more creative at attacking these defenses. As GenAI tools become more common, malicious actors are increasingly producing fake documents, spoofing biometric defenses, and hijacking camera signals.”

Onfido suggests using “liveness” biometrics in verification technology. Liveness determines whether a user is actually present instead of a deepfake, photo, recording, or masked individual.

As AI technology advances, so will bad actors’ scams.

Whitney Grace, December 1, 2023

Amazon Customer Service: Let Many Flowers Bloom and Die on the Vine

November 29, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Amazon has been outputting artificial intelligence “assertions” at a furious pace. What’s clear is that Amazon is “into” the volume and variety business in my opinion. The logic of offering multiple “works in progress” and getting them to work reasonably well is going to have three characteristics: The first is that deploying and operating different smart software systems is going to be expensive. The second is that tuning and maintaining high levels of accuracy in the outputs will be expensive. The third is that supporting the users, partners, customers, and integrators is going to be expensive. If we use a bit of freshman in high school algebra, the common factor is expensive. Amazon’s remarkable assertion that no one wants to bet a business on just one model strikes me as a bit out of step with the world in which bean counters scuttle and scurry in green eyeshades and sleeve protectors. (See. I am a dinobaby. Sleeve protectors. I bet none of the OpenAI type outfits have accountants who use these fashion accessories!)

Let’s focus on just one facet of the expensive burdens I touched upon above: customer service. Navigate to the remarkable and stunningly uncritical write up called “How to Reach Amazon Customer Service: A Complete Guide.” The write up is an earthworm list of the “options” Amazon provides. As Amazon was announcing its new new big big things, I was trying to figure out why an order for an $18 product was rejected. The item in question was one part of a multipart order. The other, more costly items were approved and billed to my Amazon credit card.

Thanks MSFT Copilot. You do a nice broken bulldozer or at least a good enough one.

But the dog treats?

I systematically worked through the Amazon customer service options. As a Prime customer, I assumed one of them would work. Here’s my report card:

- Amazon’s automated help. A loop. See Help pages which suggested I navigate to the customer service page. Cute. A first year comp sci student’s programming error. A loop right out of the box. Nifty.

- The customer service page. Well, that page sent me to Help and Help sent me to the automation loop. Cool Zero for two.

- Access through the Amazon app. Nope. I don’t install “apps” on my computing devices unless I have zero choice. (Yes, I am thinking about Apple and Google.) Too bad Amazon, I reject your app the way I reject QR codes used by restaurants. (Do these hash slingers know that QR codes are a fave of some bad actors?)

- Live chat with Amazon customer service was not live. It was a bot. The suggestion? Get back in the loop. Maybe the chat staff was at the Amazon AI announcement or just severely understaffed or simply did not care. Another loser.

- Request a call from Amazon customer service. Yeah, I got to that after I called Amazon customer service. Another loser.

I repeated the “call Amazon customer service” twice and I finally worked through the automated system and got a person who barely spoke English. I explained the problem. One product rejected because my Amazon credit card was rejected. I learned that this particular customer service expert did not understand how that could have happened. Yeah, great work.

How did I resolve the rejected credit card? I called the Chase Bank customer service number. I told a person my card was manipulated and I suspected fraud. I was escalated to someone who understood the word “fraud.” After about five minutes of “Will you please hold,” the Chase person told me, “The problem is at Amazon, not your card and not Chase.”

What was the fix? Chase said, “Cancel the order.” I did and went to another vendor.

Now what does that experience suggest about Amazon’s ability (willingness) to provide effective, efficient customer support to users of its purported multiple large language models, AI systems, and assorted marketing baloney output during Amazon’s “we are into AI” week?

My answer? The Bezos bulldozer has an engine belching black smoke, making a lot of noise because the muffler has a hole in it, and the thumpity thump of the engine reveals that something is out of tune.

Yeah, AI and customer support. Just one of the “expensive” things Amazon may not be able to deliver. The troubling thing is that Amazon’s AI may have been powering the multiple customer support systems. Yikes.

Stephen E Arnold, November 29, 2023

India Might Not Buy the User-Is-Responsible Argument

November 29, 2023

This essay is the work of a dumb dinobaby. No smart software required.

India’s elected officials seem to be agitated about deep fakes. No, it is not the disclosure that a company in Spain is collecting $10,000 a month or more from a fake influencer named Aitana López. (Some in India may be following the deeply faked bimbo, but I would assert that not too many elected officials will admit to their interest in the digital dream boat.)

US News & World Report recycled a Reuters (the trust outfit) story “India Warns Facebook, YouTube to Enforce Rules to Deter Deepfakes - Sources” and asserted:

India’s government on Friday warned social media firms including Facebook and YouTube to repeatedly remind users that local laws prohibit them from posting deepfakes and content that spreads obscenity or misinformation

“I know you and the rest of the science club are causing problems with our school announcement system. You have to stop it, or I will not recommend you or any science club member for the National Honor Society.” The young wizard says, “I am very, very sorry. Neither I nor my friends will play rock and roll music during the morning announcements. I promise.” Thanks, MidJourney. Not great but at least you produced an image which is more than I can say for the MSFT Copilot Bing thing.

What’s notable is that the government of India is not focusing on the user of deep fake technology. India has US companies in its headlights. The news story continues:

India’s IT ministry said in a press statement all platforms had agreed to align their content guidelines with government rules.

Amazing. The US techno-feudalists are rolling over. I am someone who wonders, “Will these US companies bend a knee to India’s government?” I have zero inside information about either India or the US techno-feudalists, but I have a recollection that US companies:

- Do what they want to do and then go to court. If they win, they don’t change. If they lose, they pay the fine and they do some fancy dancing.

- Go to a meeting and output vague assurances prefaced by “Thank you for that question.” The companies may do a quick paso doble and continue with business pretty much as usual.

- Just comply. As Canada has learned, Facebook’s response to the Canadian news edict was simple: No news for Canada. To make the situation more annoying to a real government, other techno-feudalists hopped on Facebook’s better idea.

- Ignore the edict. If summoned to a meeting or hit with a legal notice, companies will respond with flights of legal eagles with some simple messages; for example, no more support for your law enforcement professionals or your intelligence professionals. (This is a hypothetical example only, so don’t develop the shingles, please.)

Net net: Techno-feudalists have to decide: Roll over, ignore, or go to “war.”

Stephen E Arnold, November 29, 2023