Amazon Rekognition: Great but…

November 9, 2018

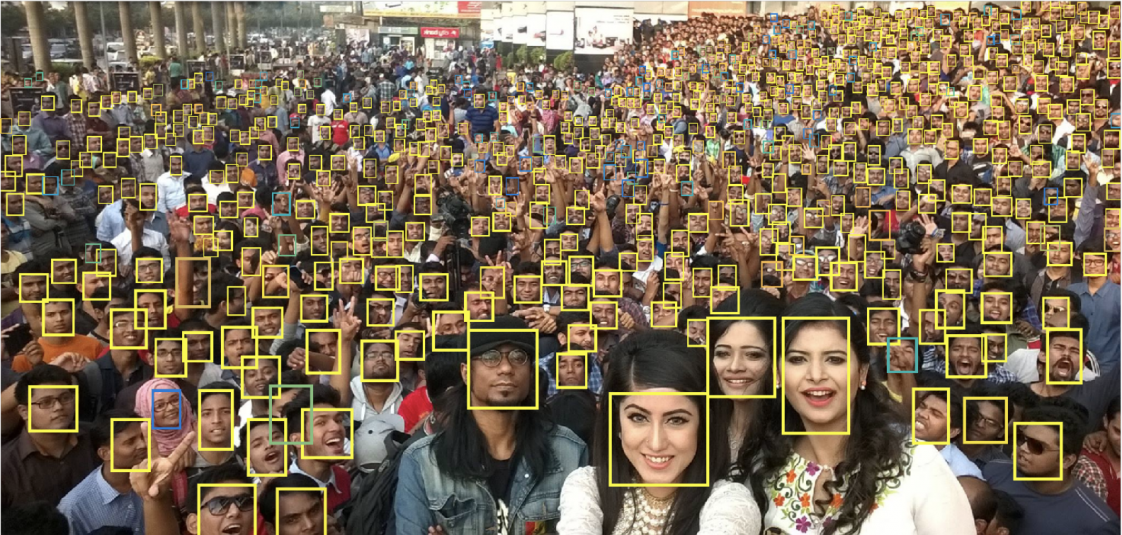

I have been following the Amazon response to employee demands to cut off the US government. Put that facial recognition technology on “ice.” The issue is an intriguing one; for example, Rekognition plugs into DeepLens. DeepLens connects with Sagemaker. The construct allows some interesting policeware functions. Ah, you didn’t know that? Some info is available if you view the October 30 and November 6, 2018, DarkCyber. Want more info? Write benkent2020 at yahoo dot com.

How realistic is 99 percent accuracy? Pretty realistic when one has one image and a bounded data set against which to compare a single image of of adequate resolution and sharpness.

What caught my attention was the “real” news in “Amazon Told Employees It Would Continue to Sell Facial Recognition Software to Law Enforcement.” I am less concerned about the sales to the US government. I was drawn to these verbal perception shifters:

- under fire. [Amazon is taking flak from its employees who don’t want Amazon technology used by LE and similar services.]

- track human beings [The assumption is tracking is bad until the bad actor tracked is trying to kidnap your child, then tracking is wonderful. This is the worse type of situational reasoning.]

- send them back into potentially dangerous environments overseas. [Are Central and South America overseas, gentle reader?]

These are hot buttons.

But I circled in pink this phrase:

Rekognition is research proving the system is deeply flawed, both in terms of accuracy and regarding inherent racial bias.

Well, what does one make of the statement that Rekognition is powerful but has fatal flaws?

Want proof that Rekognition is something more closely associated with Big Lots than Amazon Prime? The write up states:

The American Civil Liberties Union tested Rekognition over the summer and found that the system falsely identified 28 members of Congress from a database of 25,000 mug shots. (Amazon pushed back against the ACLU’s findings in its study, with Matt Wood, its general manager of deep learning and AI, saying in a blog post back in July that the data from its test with the Rekognition API was generated with an 80 percent confidence rate, far below the 99 percent confidence rate it recommends for law enforcement matches.)

Yeah, 99 percent confidence. Think about that. Pretty reasonable, right? Unfortunately 99 percent is like believing in the tooth fairy, just in terms of a US government spec or Statement of Work. Reality for the vast majority of policeware systems is in the 75 to 85 percent range. Pretty good in my book because these are achievable accuracy percentages. The 99 percent stuff is window dressing and will be for years to come.

Also, Amazon, the Verge points out, is not going to let folks tinker with the Rekognition system to determine how accurate it really is. I learned:

The company has also declined to participate in a comprehensive study of algorithmic bias run by the National Institute of Standards and Technology that seeks to identify when racial and gender bias may be influencing a facial recognition algorithm’s error rate.

Yep, how about those TREC accuracy reports?

My take on this write up is that Amazon is now in the sites of the “real” journalists.

Perhaps the Verge would like Amazon to pull out of the JEDI procurement?

Great idea for some folks.

Perhaps the Verge will dig into the other components of Rekognition and then plot the improvements in accuracy when certain types of data sets are used in the analysis.

Facial recognition is not the whole cloth. Rekognition is one technology thread which needs a context that moves beyond charged language and accuracy rates which are in line with those of other advanced systems.

Amazon’s strength is not facial recognition. The company has assembled a policeware construct. That’s news.

Stephen E Arnold, November 9, 2018

Analytics: From Predictions to Prescriptions

October 19, 2018

I read an interesting essay originating at SAP. The article’s title: “The Path from Predictive to Prescriptive Analytics.” The idea is that outputs from a system can be used to understand data. Outputs can also be used to make “predictions”; that is, guesses or bets on likely outcomes in the future. Prescriptive analytics means that the systems tell or wire actions into an output. Now the output can be read by a human, but I think the key use case will be taking the prescriptive outputs and feeding them into other software systems. In short, the system decides and does. No humans really need be involved.

The write up states:

There is a natural progression towards advanced analytics – it is a journey that does not have to be on separate deployments. In fact, it is enhanced by having it on the same deployment, and embedding it in a platform that brings together data visualization, planning, insight, and steering/oversight functions.

What is the optimal way to manage systems which are dictating actions or just automatically taking actions?

The answer is, quite surprisingly, a bit of MBA consultantese: Governance.

The most obvious challenge with regards to prescriptive analytics is governance.

Several observations:

- Governance is unlikely to provide the controls which prescriptive systems warrant. Evidence is that “governance” in some high technology outfits is in short supply.

- Enhanced automation will pull prescriptive analytics into wide use. The reasons are one you have heard before: Better, faster, cheaper.

- Outfits like the Google and In-Q-Tel funded Recorded Future and DarkTrace may have to prepare for new competition; for example, firms which specialize in prescription, not prediction.

To sum up, interesting write up. perhaps SAP will be the go to player in plugging prescriptive functions into their software systems?

Stephen E Arnold, October 19, 2018

Free Data Sources

October 19, 2018

We were plowing through our research folder for Beyond Search. We overlooked the article “685 Outstanding Free Data Sources For 2017.” If you need a range of data sources related to such topics as government data, machine learning, and algorithms, you might want to bookmark this listing.

Stephen E Arnold, October 19, 2018

Algorithms Are Neutral. Well, Sort of Objective Maybe?

October 12, 2018

I read “Amazon Trained a Sexism-Fighting, Resume-Screening AI with Sexist Hiring data, So the Bot Became Sexist.” The main point is that if the training data are biased, the smart software will be biased.

No kidding.

The write up points out:

There is a “machine learning is hard” angle to this: while the flawed outcomes from the flawed training data was totally predictable, the system’s self-generated discriminatory criteria were surprising and unpredictable. No one told it to downrank resumes containing “women’s” — it arrived at that conclusion on its own, by noticing that this was a word that rarely appeared on the resumes of previous Amazon hires.

Now the company discovering that its smart software became automatically biased was Amazon.

That’s right.

The same Amazon which has invested significant resources in its SageMaker machine learning platform. This is part of the infrastructure which will, Amazon hopes, will propel the US Department of Defense forward for the next five years.

Hold on.

What happens if the system and method produces wonky outputs when a minor dust up is automatically escalated?

Discriminating in hiring is one thing. Fluffing a global matter is a another.

Do the smart software systems from Google, IBM, and Microsoft have similar tendencies? My recollection is that this type of “getting lost” has surfaced before. Maybe those innovators pushing narrowly scoped rule based systems were on to something?

Stephen E Arnold, October 11, 2018

Smart Software: There Are Only a Few Algorithms

September 27, 2018

I love simplicity. The write up “The Algorithms That Are Currently Fueling the Deep Learning Revolution” certainly makes deep learning much simpler. Hey, learn these methods and you too can fire up your laptop and chop Big Data down to size. Put digital data into the digital juicer and extract wisdom.

Ah, simplicity.

The write up explains that there are four algorithms that make deep learning tick. I like this approach because it does not require one to know that “deep learning” means. That’s a plus.

The algorithms are:

- Back propagation

- Deep Q Learning

- Generative adversarial network

- Long short term memory

Are these algorithms or are these suitcase words?

The view from Harrod’s Creek is that once one looks closely at these phrase one will discover multiple procedures, systems and methods, and math slightly more complex than tapping the calculator on one’s iPhone to get a sum. There is, of course, the issue of data validation, bandwidth, computational resources, and a couple of other no-big-deal things.

Be a deep learning expert. Easy. Just four algorithms.

Stephen E Arnold, September 27, 2018

IBM Embraces Blockchain for Banking: Is Amazon in the Game Too?

September 9, 2018

IBM recently announced the creation of LedgerConnect, a Blockchain powered banking service. This is an interesting move for a company that previously seemed to waver on whether it wanted to associate with this technology most famous for its links to cryptocurrency. However, the pairing actually makes sense, as we discovered in a recent IT Pro Portal story, “IBM Reveals Support Blockchain App Store.”

According to an IBM official:

“On LedgerConnect financial institutions will be able to access services in areas such as, but not limited to, know your customer processes, sanctions screening, collateral management, derivatives post-trade processing and reconciliation and market data. By hosting these services on a single, enterprise-grade network, organizations can focus on business objectives rather than application development, enabling them to realize operational efficiencies and cost savings across asset classes.”

This, in addition, to recent news that some of the biggest banks on the planet are already using Blockchain for a variety of needs. This includes the story that the Agricultural Bank of China has started issuing large loans using the technology. In fact, out of the 26 publicly owned banks in China, nearly half are using Blockchain. IBM looks pretty conservative when you think of it like that, which is just where IBM likes to be.

Amazon supporst Ethereum, HyperLedger, and a host of other financial functions. For how long? Years.

Patrick Roland, September 9, 2018

Algorithms Can Be Interesting

September 8, 2018

Navigate to “As Germans Seek News, YouTube Delivers Far-Right Tirades” and consider the consequences of information shaping. I have highlighted a handful of statements from the write up to prime your critical thinking pump. Here goes.

I circled this statement in true blue:

…[a Berlin-based digital researcher] scraped YouTube databases for information on every Chemnitz-related video published this year. He found that the platform’s recommendation system consistently directed people toward extremist videos on the riots — then on to far-right videos on other subjects.

I noted:

[The researcher] found that the platform’s recommendation system consistently directed people toward extremist videos on the riots — then on to far-right videos on other subjects.

The write up said:

A YouTube spokeswoman declined to comment on the accusations, saying the recommendation system intended to “give people video suggestions that leave them satisfied.”

The newspaper story revealed:

Zeynep Tufekci, a prominent social media researcher at the University of North Carolina at Chapel Hill, has written that these findings suggest that YouTube could become “one of the most powerful radicalizing instruments of the 21st century.”

With additional exploration, the story asserts a possible mathematical idiosyncrasy:

… The YouTube recommendations bunched them all together, sending users through a vast, closed system composed heavily of misinformation and hate.

You may want to read the original write up and consider the implications of interesting numerical recipes’ behavior.

Smart Software: Just Keep Adding Layers between the Data and the Coder

September 6, 2018

What could be easier? Clicking or coding.

Give up. Clicking wins.

A purist might suggest that training smart software requires an individual with math and data analysis skills. A modern hippy dippy approach is to suggest that pointing and clicking is the way of the future.

Amazon is embracing that approach and other firms are too.

I read “Baidu Launches EZDL, an AI Model Training Platform That Requires No Coding Experience.” Even in China where technical talent is slightly more abundant than in Harrod’s Creek, Kentucky, is on the bandwagon.

I learned:

Baidu this week launched an online tool in beta — EZDL — that makes it easy for virtually anyone to build, design, and deploy artificial intelligence (AI) models without writing a single line of code.

Why slog through courses? Point and click. The future.

There’s not much detail in the write up, but I get the general idea of what’s up from this passage from the write up:

To train a model, EZDL requires 20-100 images, or more than 50 audio files, assigned to each label, and training takes between 15 minutes and an hour. (Baidu claims that more than two-thirds of models get accuracy scores higher than 90 percent.) Generated algorithms can be deployed in the cloud and accessed via an API, or downloaded in the form of a software development kit that supports iOS, Android, and other operating systems.

Oh, oh. The “API” buzzword is in the paragraph, so life is not completely code free.

Baidu, like Amazon, has a bit of the competitive spirit. The write up explains:

Baidu’s made its AI ambitions clear in the two years since it launched Baidu Brain, its eponymous platform for enterprise AI. The company says more than 600,000 developers are currently using Brain 3.0 — the newest version, released in July 2018 — for 110 AI services across 20 industries.

What could go wrong? Nothing, I assume. Absolutely nothing.

Stephen E Arnold, September 6, 2018

Online with Smart Software, Robots, and Obesity

September 1, 2018

I recall a short article called “A Starfish-Killing, Artificially Intelligent Robot Is Set to Patrol the Great Barrier Reef.”The story appeared in 2016. I clipped this item a few days ago: “Centre for Robotic Vision Uses Bots to Cull Starfish.” The idea is that environmental protection becomes easier with killer robots.

Now combine that technology application with “Artificial Intelligence Spots Obesity from Space.” The main idea is that smart software can piece together items of data to figure out who is fat and where fat people live.

What happens if a clever tinkerer hooks together robots which can take action to ensure termination with smart software able to identify a target.

I mention this technology confection because the employees who object to an employer’s technology may be behind the curve. The way technology works is that innovations work a bit like putting Lego blocks together. Separate capabilities can be combined in interesting ways.

Will US employees’ refusal to work on certain projects act like a stuck brake on a rental car?

Worth thinking about before a killer satellite identifies a target and makes an autonomous decision about starfish or other entities. Getting online has interesting applications.

Why search when one can target via algorithms?

Stephen E Arnold, September 1, 2018

A Glimpse of Random

August 30, 2018

I found “The Unreasonable Effectiveness of Quasirandom Sequences” interesting. Random number generators are important to certain cyber analytics systems. The write up puts the spotlight on the R2 method. Without turning a blog post into a math lesson, I want to suggest that you visit the source document and look at how different approaches to random number generation appear when graphed. My point is that the selection of a method and then the decision to seed a method with a particular value can have an impact on how the other numerical recipes behave when random numbers are fed into a process. The outputs of a system in which the user has great confidence may, in fact, be constructs and one way to make sense of data. What’s this mean? Pinpointing algorithmic “bias” is a difficult job. It is often useful to keep in mind that decisions made by a developer or math whiz for what seems like a no brainer process can have a significant impact on outputs.

Stephen E Arnold, August 30, 2018