Research: A Slippery Path to Wisdom Now

January 19, 2024

This essay is the work of a dumb dinobaby. No smart software required.

When deciding whether to believe something on the Internet all one must do is google it, right? Not so fast. Citing five studies performed between 2019 and 2022, Scientific American describes “How Search Engines Boost Misinformation.” Writer Lauren Leffer tells us:

“Encouraging Internet users to rely on search engines to verify questionable online articles can make them more prone to believing false or misleading information, according to a study published today in Nature. The new research quantitatively demonstrates how search results, especially those prompted by queries that contain keywords from misleading articles, can easily lead people down digital rabbit holes and backfire. Guidance to Google a topic is insufficient if people aren’t considering what they search for and the factors that determine the results, the study suggests.”

Those of us with critical thinking skills may believe that caveat goes without saying but, alas, it does not. Apparently evaluating the reliability of sources through lateral reading must be taught to most searchers. Another important but underutilized practice is to rephrase a query before hitting enter. Certain terms are predominantly used by purveyors of misinformation, so copy-and-pasting a dubious headline will turn up dubious sources to support it. We learn:

“For example, one of the misleading articles used in the study was entitled ‘U.S. faces engineered famine as COVID lockdowns and vax mandates could lead to widespread hunger, unrest this winter.’ When participants included ‘engineered famine’—a unique term specifically used by low-quality news sources—in their fact-check searches, 63 percent of these queries prompted unreliable results. In comparison, none of the search queries that excluded the word ‘engineered’ returned misinformation. ‘I was surprised by how many people were using this kind of naive search strategy,’ says the study’s lead author Kevin Aslett, an assistant professor of computational social science at the University of Central Florida. ‘It’s really concerning to me.’”

That is putting it mildly. These studies offer evidence to support suspicions that thoughtless searching is getting us into trouble. See the article for more information on the subject. Maybe a smart LLM will spit it out for you, and let you use it as your own?

Cynthia Murrell, January 19, 2024

Do You Know the Term Quality Escape? It Is a Sign of MBA Efficiency Talk

January 12, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I am not too keen on leaving my underground computer facility. Given the choice of a flight on a commercial airline and doing a Zoom, fire up the Zoom. It works reasonably well. Plus, I don’t have to worry about screwed up flight controls, aircraft maintenance completed in a country known for contraband, and pilots trained on flawed or incomplete instructional materials. Why am I nervous? As a Million Mile traveler on a major US airline, I have survived a guy dying in the seat next to me, assorted “return to airport” delays, and personal time spent in a comfy seat as pilots tried to get the mechanics to give the okay for the passenger jet to take off. (Hey, it just landed. What’s up? Oh, right, nothing.)

Another example of a quality escape: Modern car, dead battery, parts falling off, and a flat tire. Too bad the driver cannot plug into the windmill. Thanks, MSFT Copilot Bing thing. Good enough because the auto is not failing at 14,000 feet.

I mention my thrilling life as a road warrior because I read “Boeing 737-9 Grounding: FAA Leaves No Room For ‘Quality Escapes.’” In that “real” news report I spotted a phrase which was entirely new to me. Imagine. After more than 50 years of work in assorted engineering disciplines at companies ranging from old-line industrial giants like Halliburton to hippy zippy outfits in Silicon Valley, here was a word pair that baffled me:

Quality Escape

Well, quality escape means that a product was manufactured, certified, and deployed which did not meet “standards”. In plain words, the door and components were not safe and, therefore, lacked quality. And escape? That means failure. An F, flop, or fizzle.

“FAA Opens Investigation into Boeing Quality Control after Alaska Airlines Incident” reports:

… the [FAA] agency has recovered key items sucked out of the plane. On Sunday, a Portland schoolteacher found a piece of the aircraft’s fuselage that had landed in his backyard and reached out to the agency. Two cell phones that were likely flung from the hole in the plane were also found in a yard and on the side of the road and turned over to investigators.

I worked on an airplane related project or two when I was younger. One of my team owned two light aircraft, one of which was acquired from an African airline and then certified for use in the US. I had a couple of friends who were jet pilots in the US government. I picked up some random information; namely, FAA inspections are a hassle. Required work is expensive. Stuff breaks all the time. When I was picking up airplane info, my impression was that the FAA enforced standards of quality. One of the pilots was a certified electrical engineer. He was not able to repair his electrical equipment due to FAA regulations. The fellow followed the rules because the FAA in that far off time did not practice “good enough” oversight in my opinion. Today? Well, no people fell out of the aircraft when the door came off and the pressure equalization took place. iPhones might survive a fall from 14,000 feet. Most humanoids? Nope. Shoes, however, do fare reasonably well.

Several questions:

- Exactly how can a commercial aircraft be certified and then shed a door in flight?

- Who is responsible for okaying the aircraft model in the first place?

- Didn’t some similar aircraft produce exciting and consequential results for the passengers, their families, pilots, and the manufacturer?

- Why call crappy design and engineering “quality escape”? Crappy is simpler, more to the point.

Yikes. But if it flies, it is good enough. Excellence has a different spin these days.

Stephen E Arnold, January 12, 2024

British Library: The Math of Can Kicking Security Down the Road

January 9, 2024

This essay is the work of a dumb dinobaby. No smart software required.

I read a couple of blog posts about the security issues at the British Library. I am not currently working on projects in the UK. Therefore, I noted the issue and moved on to more pressing matters. Examples range from writing about the antics of the Google to keeping my eye on the new leader of the highly innovative PR magnet, the NSO Group.

Two well-educated professionals kick a security can down the road. Why bother to pick it up? Thanks, MSFT Copilot Bing thing. I gave up trying to get you to produce a big can and big shoe. Sigh.

I read “British Library to Burn Through Reserves to Recover from Cyber Attack.” The weird orange newspaper usually has semi-reliable, actual factual information. The write up reports or asserts (the FT is a newspaper, after all):

The British Library will drain about 40 per cent of its reserves to recover from a cyber attack that has crippled one of the UK’s critical research bodies and rendered most of its services inaccessible.

I won’t summarize what the bad actors took down. Instead, I want to highlight another passage in the article:

Cyber-intelligence experts said the British Library’s service could remain down for more than a year, while the attack highlighted the risks of a single institution playing such a prominent role in delivering essential services.

A couple of themes emerge from these two quoted passages:

- Whatever cash the library has, spitting distance of half is going to be spent “recovering,” not improving, enhancing, or strengthening. Just “recovering.”

- The attack killed off “most” of the British Library’s services. Not a few. Not one or two. Just “most.”

- Concentration for efficiency leads to failure for downstream services. But concentration makes sense, right? Just ask library patrons.

My view of the situation is familiar if you have read other blog posts about Fancy Dan, modern methods. Let me summarize to brighten your day:

First, cyber security is a function that marketers exploit without addressing security problems. Those purchasing cyber security don’t know much. Therefore, the procurement officials are what a falcon might label “easy prey.” Bad for the chihuahua sometimes.

Second, when security issues are identified, many professionals don’t know how to listen. Therefore, a committee decides. Committees are outstanding bureaucratic tools. Obviously the British Library’s managers and committees may know about manuscripts. Security? Hmmm.

Third, a security failure can consume considerable resources in order to return to the status quo. One can easily imagine a scenario months or years in the future when the cost of recovery is too great. Therefore, the security breach kills the organization. Termination can be rationalized by a committee, probably affiliated with a bureaucratic structure further up the hierarchy.

I think the idea of “kicking the security can” down the road is a widespread characteristic of many organizations. Is the situation improving? No. Marketers move quickly to exploit weaknesses of procurement teams. Bad actors know this. Excitement ahead.

Stephen E Arnold, January 9, 2024

Pegasus Equipped with Wings Stomps Around and Leaves Hoof Prints

January 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The NSO Group’s infamous Pegasus spyware is in the news again, this time in India. Newsclick reveals, “New Forensic Report Finds ‘Damning Revelations’ of ‘Repeated’ Pegasus Use to Target Indian Scribes.” The report is a joint project by Amnesty International and The Washington Post. It was spurred by two indicators. First, a routine monitoring exercise in June 2023 turned up traces of Pegasus on certain iPhones. Then, in October, several journalists and Opposition party politicians received Apple alerts warning of “State-sponsored attackers.” The article tells us:

“‘As a result, Amnesty International’s Security Lab undertook a forensic analysis on the phones of individuals around the world who received these notifications, including Siddharth Varadarajan and Anand Mangnale. It found traces of Pegasus spyware activity on devices owned by both Indian journalists. The Security Lab recovered evidence from Anand Mangnale’s device of a zero-click exploit which was sent to his phone over iMessage on 23 August 2023, and designed to covertly install the Pegasus spyware. … According to the report, the ‘attempted targeting of Anand Mangnale’s phone happened at a time when he was working on a story about an alleged stock manipulation by a large multinational conglomerate in India.’”

This was not a first for The Wire co-founder Siddharth Varadarajan. His phone was also infected with Pegasus back in 2018, according to forensic analysis ordered by the Supreme Court of India. The latest findings have Amnesty International urging bans on invasive, opaque spyware worldwide. Naturally, The NSO Group continues to insist all its clients are “vetted law enforcement and intelligence agencies that license our technologies for the sole purpose of fighting terror and major crime” and that it has policies in place to prevent “targeting journalists, lawyers and human rights defenders or political dissidents that are not involved in terror or serious crimes.” Sure.

Meanwhile, some leaders of India’s ruling party blame Apple for those security alerts, alleging the “company’s internal threat algorithms were faulty.” Interesting deflection. We’re told an Apple security rep was called in and directed to craft some other, less alarming explanation for the warnings. Is this because the government itself is behind the spyware? Unclear; Parliament refuses to look into the matter, claiming it is sub judice. How convenient.

Cynthia Murrell, January 8, 2024

AI Risk: Are We Watching Where We Are Going?

December 27, 2023

This essay is the work of a dumb dinobaby. No smart software required.

To brighten your New Year, navigate to “Why We Need to Fear the Risk of AI Model Collapse.” I love those words: Fear, risk, and collapse. I noted this passage in the write up:

When an AI lives off a diet of AI-flavored content, the quality and diversity is likely to decrease over time.

I think the idea of marrying one’s first cousin or training an AI model on AI-generated content is a bad idea. I don’t really know, but I find the idea interesting. The write up continues:

Is this model at risk of encountering a problem? Looks like it to me. Thanks, MSFT Copilot. Good enough. Falling off the I beam was a non-starter, so we have a more tame cartoon.

Model collapse happens when generative AI becomes unstable, wholly unreliable or simply ceases to function. This occurs when generative models are trained on AI-generated content – or “synthetic data” – instead of human-generated data. As time goes on, “models begin to lose information about the less common but still important aspects of the data, producing less diverse outputs.”

I think this passage echoes some of my team’s thoughts about the SAIL Snorkel method. Googzilla needs a snorkel when it does data dives in some situations. The company often deletes data until a legal proceeding reveals what’s under the company’s expensive, smooth, sleek, true blue, gold trimmed kimonos.

The write up continues:

There have already been discussions and research on perceived problems with ChatGPT, particularly how its ability to write code may be getting worse rather than better. This could be down to the fact that the AI is trained on data from sources such as Stack Overflow, and users have been contributing to the programming forum using answers sourced in ChatGPT. Stack Overflow has now banned using generative AIs in questions and answers on its site.

The essay explains a couple of ways to remediate the problem. (I like fairy tales.) The first is to use data that comes from “reliable sources.” What’s the definition of reliable? Yeah, problem. Second, the smart software companies have to reveal what data were used to train a model. Yeah, techno feudalists totally embrace transparency. And, third, “ablate” or “remove” “particular data” from a model. Yeah, who defines “bad” or “particular” data? How about the techno feudalists, their contractors, or their former employees?

For now, let’s just use our mobile phone to access MSFT Copilot and fix our attention on the screen. What’s to worry about? The person in the cartoon put the humanoid form in the apparently risky and possibly dumb position. What could go wrong?

Stephen E Arnold, December 27, 2023

The High School Science Club Got Fined for Its Management Methods

December 4, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I almost missed this story. “Google Reaches $27 Million Settlement in Case That Sparked Employee Activism in Tech” contains information about the cost of certain management methods. The write up asserts:

Google has reached a $27 million settlement with employees who accused the tech giant of unfair labor practices, setting a record for the largest agreement of its kind, according to California state court documents that haven’t been previously reported.

The kindly administrator (a former legal eagle) explains to the intelligent teens in the high school science club something unpleasant. Their treatment of some non sci-club types will cost them. Thanks, MSFT Copilot. Who’s in charge of the OpenAI relationship now?

The article pegs the “worker activism” on Google. I don’t know if Google is fully responsible. Googzilla’s shoulders and wallet are plump enough to carry the burden in my opinion. The article explains:

In terminating the employee, Google said the person had violated the company’s data classification guidelines that prohibited staff from divulging confidential information… Along the way, the case raised issues about employee surveillance and the over-use of attorney-client privilege to avoid legal scrutiny and accountability.

Not surprisingly, the Google management took a stand against the apparently unjust and unwarranted fine. The story notes via a quote from someone who is in the science club and familiar with its management methods:

“While we strongly believe in the legitimacy of our policies, after nearly eight years of litigation, Google decided that resolution of the matter, without any admission of wrongdoing, is in the best interest of everyone,” a company spokesperson said.

I want to point out that the write up includes links to other articles explaining how the Google is refining its management methods.

Several questions:

- Will other companies hit by activist employees be excited to learn the outcome of Google’s brilliant legal maneuvers which triggered a fine of a mere $27 million?

- Has Google published a manual of its management methods? If not, for what is the online advertising giant waiting?

- With more than 170,000 (plus or minus) employees, has Google found a way to replace the unpredictable, expensive, and recalcitrant employees with its smart software? (Let’s ask Bard, shall we?)

After 25 years, the Google finds a way to establish benchmarks in managerial excellence. Oh, I wonder if the company will change its law firm line up. I mean $27 million. Come on. Loose the semantic noose and make more ads “relevant.”

Stephen E Arnold, December 4, 2023

Google and X: Shall We Again Love These Bad Dogs?

November 30, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Two stories popped out of my blah newsfeed this morning (Thursday, November 30, 2023). I want to highlight each and offer a handful of observations. Why? I am a dinobaby, and I remember the adults who influenced me telling me to behave, use common sense, and follow the rules of “good” behavior. Dull? Yes. A license to cut corners and do crazy stuff? No.

The first story, if it is indeed accurate, is startling. “Google Caught Placing Big-Brand Ads on Hardcore Porn Sites, Report Says” includes a number of statements about the Google which make me uncomfortable. For instance:

advertisers who feel there’s no way to truly know if Google is meeting their brand safety standards are demanding more transparency from Google. Ideally, moving forward, they’d like access to data confirming where exactly their search ads have been displayed.

Where are big brand ads allegedly appearing? How about “undesirable sites.” What comes to mind for me is adult content. There are some quite sporty ads on certain sites that would make a Methodist Sunday school teacher blush.

These two big dogs are having a heck of a time ruining the living room sofa. Neither dog knows that the family will not be happy. These are dogs, not the mental heirs of Immanuel Kant. Thanks, MSFT Copilot. The stuffing looks like soap bubbles, but you are “good enough,” the benchmark for excellence today.

But the shocking factoid is that Google does not provide a way for advertisers to know where their ads have been displayed. Also, there is a possibility that Google shared ad revenue with entities which may be hostile to the interests of the US. Let’s hope that the assertions reported in the article are inaccurate. But if big brand ads are displayed on sites with content which could conceivably erode brand value, what exactly is Google’s system doing? I will return to this question in the observations section of this essay.

The second article is equally shocking to me.

“Elon Musk Tells Advertisers: ‘Go F*** Yourself’” reports that the EV and rocket man with a big hole digging machine allegedly said about advertisers who purchase promotions on X.com (Twitter?):

“Don’t advertise,” … “If somebody is going to try to blackmail me with advertising, blackmail me with money, go fuck yourself. Go f*** yourself. Is that clear? I hope it is.” … If advertisers don’t return, Musk said, “what this advertising boycott is gonna do is it’s gonna kill the company.”

The cited story concludes with this statement:

The full interview was meandering and at times devolved into stream of consciousness responses; Musk spoke for triple the time most other interviewees did. But the questions around Musk’s own actions, and the resulting advertiser exodus — the things that could materially impact X — seemed to garner the most nonchalant answers. He doesn’t seem to care.

Two stories. Two large and successful companies. What can a person like myself conclude, recognizing that there is a possibility that both stories may have some gaps and flaws:

- There is a disdain for old-fashioned “values” related to acceptable business practices

- The thread of pornography and foul language runs through the reports. The notion of well-crafted statements and behaviors is not part of the Google and X game plan in my view

- The indifference of the senior managers at both companies, which seeps through the descriptions of how Google and X operate, strikes me as intentional.

Now why?

I think that both companies are pushing the edge of business behavior. Google obviously is distributing ad inventory anywhere it can to try and create a market for more ads. Instead of telling advertisers where their ads are displayed or giving an advertiser control over where ads should appear, Google just displays the ads. The staggering irrelevance of the ads I see when I view a YouTube video is evidence that Google knows zero about me despite my being logged in and using some Google services. I don’t need feminine undergarments, concealed weapons products, or bogus health products.

With X.com the dismissive attitude of the firm’s senior management reeks of disdain. Why would someone advertise on a system which promotes behaviors that are detrimental to one’s mental set up?

The two companies are different, but in a way they are similar in their approach to users, customers, and advertisers. Something has gone off the rails in my opinion at both companies. It is generally a good idea to avoid riding trains which are known to run on bad tracks, ignore safety signals, and demonstrate remarkably questionable behavior.

What if the write ups are incorrect? Wow, both companies are paragons. What if both write ups are dead accurate? Wow, wow, the big dogs are tearing up the living room sofa. More than “bad dog” is needed to repair the furniture for living.

Stephen E Arnold, November 30, 2023

Google Maps: Rapid Progress on Un-Usability

November 30, 2023

This essay is the work of a dumb dinobaby. No smart software required.

I read a Xhitter.com post about Google Maps. Those who have either heard me talk about the “new” Google Maps or who have read some of my blog posts on the subject know my view. The current Google Maps is useless for my needs. Last year, as one of my team and I were driving to a Federal secure facility, I bought an overpriced paper map at one of the truck stops. Why? I had no idea how to interact with the map in a meaningful way. My recollection was that I could coax Google Maps and Waze to be semi-helpful. Now the Google Maps developers have become tangled in a very large thorn bush. The team discusses how large the thorn bush is, how sharp the thorns are, and how such a large thorn bush could thrive in the Googley hot house.

This dinobaby expresses some consternation at [a] not knowing where to look, [b] how to show the route, and [c] not cause a motor vehicle accident. Thanks, MSFT Copilot. Good enough I think.

The result is enhancements to Google Maps which are the digital equivalent of skin cancer. The disgusting result is a vehicle for advertising and engagement that no one can use without head scratching moments. Am I alone in my complaint? Nope, the aforementioned Xhitter.com post aligns quite well with my perception. The author is a person who once designed a more usable version of Google Maps.

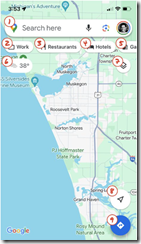

Her Xhitter.com post highlights the digital skin cancer the team of Googley wizards has concocted. Here’s a screen capture of her annotated, life-threatening disfigurement:

She writes:

The map should be sacred real estate. Only things that are highly useful to many people should obscure it. There should be a very limited number of features that can cover the map view. And there are multiple ways to add new features without overlaying them directly on the map.

Sounds good. But Xooglers and other outsiders are not likely to get much traction from the Map team. Everyone is working hard at landing in the hot AI area or some other discipline which will deliver a bonus and a promotion. Maps? Nope.

The former Google Maps designer points out:

In 2007, I was 1 of 2 designers on Google Maps. At that time, Maps had already become a cluttered mess. We were wedging new features into any space we could find in the UI. The user experience was suffering and the product was growing increasingly complicated. We had to rethink the app to be simple and scale for the future.

Yep, Google Maps, a case study for brilliant people who have lost the atlas to reality. And “sacred” at Google? Ad revenue, not making dear old grandma safer when she drives. (Tesla, Cruise, where are those smart, self-driving cars? Ah, I forgot. They are with Waymo, keeping their profile low.)

Stephen E Arnold, November 30, 2023

Another Xoogler and More Process Insights

November 23, 2023

This essay is the work of a dumb dinobaby. No smart software required.

Google employs many people. Over the last 25 years, quite a few Xooglers (former Google employees) are out and about. I find the essays by the verbal Xooglers interesting. “Reflecting on 18 Years at Google” contains several intriguing comments. Let me highlight a handful of these. You will want to read the entire Hixie article to get the context for the snips I have selected.

The first point I underlined with blushing pink marker was:

I found it quite frustrating how teams would be legitimately actively pursuing ideas that would be good for the world, without prioritizing short-term Google interests, only to be met with cynicism in the court of public opinion.

Old timers share stories about the golden past in the high-technology of online advertising. Thanks, Copilot, don’t overdo the schmaltz.

“Google as a victim” is a notion not often discussed — except by some Xooglers. I recall a comment made to me by a seasoned manager at another firm, “Yes, I am paranoid. They are out to get me.” That comment may apply to some professionals at Google.

How about this passage?

My mandate was to do the best thing for the web, as whatever was good for the web would be good for Google (I was explicitly told to ignore Google’s interests).

The oft-repeated idea that Google cares about its users and similar truisms are part of what I call the Google mythology. Intentionally, in my opinion, Google cultivates the “doing good” theme as part of its effort to distract observers from the actual engineering intent of the company. (You love those Google ads, don’t you?)

Google’s creative process is captured in this statement:

We essentially operated like a startup, discovering what we were building more than designing it.

I am not sure if this is part of Google’s effort to capture the “spirit” of the old-timey days of Bell Laboratories or an accurate representation of what Google’s directionless methods became over the years. What people “did” is clearly dissociated from the advertising mechanisms on which the oversized tires and chrome do-dads were created and bolted on the ageing vehicle.

And, finally, this statement:

It would require some shake-up at the top of the company, moving the center of power from the CFO’s office back to someone with a clear long-term vision for how to use Google’s extensive resources to deliver value to users.

What happened to the ideas of doing good and exploratory innovation?

Net net: Xooglers pine for the days of the digital gold rush. Googlers may not be aware of what the company is and does. That may be a good thing.

Stephen E Arnold, November 23, 2023

Anti-AI Fact Checking. What?

November 21, 2023

This essay is the work of a dumb dinobaby. No smart software required.

If this effort is sincere, at least one news organization is taking AI’s ability to generate realistic fakes seriously. Variety briefly reports, “CBS Launches Fact-Checking News Unit to Examine AI, Deepfakes, Misinformation.” Aptly dubbed “CBS News Confirmed,” the unit will be led by VPs Claudia Milne and Ross Dagan. Writer Brian Steinberg tells us:

“The hope is that the new unit will produce segments on its findings and explain to audiences how the information in question was determined to be fake or inaccurate. A July 2023 research note from the Northwestern Buffett Institute for Global Affairs found that the rapid adoption of content generated via A.I. ‘is a growing concern for the international community, governments and the public, with significant implications for national security and cybersecurity. It also raises ethical questions related to surveillance and transparency.’”

Why yes, good of CBS to notice. And what will it do about it? We learn:

“CBS intends to hire forensic journalists, expand training and invest in new technology, [CBS CEO Wendy] McMahon said. Candidates will demonstrate expertise in such areas as AI, data journalism, data visualization, multi-platform fact-checking, and forensic skills.”

So they are still working out the details, but want us to rest assured they have a plan. Or an outline. Or maybe a vague notion. At least CBS acknowledges this is a problem. Now what about all the other news outlets?

Cynthia Murrell, November 21, 2023