Can Your Job Be Orchestrated? Yes? Okay, It Will Be Smartified

March 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

My work career over the last 60 years has been filled with luck. I have been in the right place at the right time. I have been in companies which have been acquired, reassigned, and exposed to opportunities which just seemed to appear. Unlike today’s young college graduate, I never thought once about being able to get a “job.” I just bumbled along. In an interview for something called Singularity, the interviewer asked me, “What’s been the key to your success?” I answered, “Luck.” (Please, keep in mind that the interviewer assumed I was a success, but he had no idea that I did not want to be a success. I just wanted to do interesting work.)

Thanks, MSFT Copilot. Will smart software do your server security? Ho ho ho.

Would I be able to get a job today if I were 20 years old? Believe it or not, I told my son in one of our conversations about smart software: “Probably not.” I thought about this comment when I read today (March 13, 2024) the essay “Devin AI Can Write Complete Source Code.” The main idea of the article is that artificial intelligence, properly trained and appropriately resourced, can do what only humans could do in 1966 (when I graduated with a BA degree from a so-so university in flyover country). The write up states:

Devin is a Generative AI Coding Assistant developed by Cognition that can write and deploy codes of up to hundreds of lines with just a single prompt. Although there are some similar tools for the same purpose such as Microsoft’s Copilot, Devin is quite the advancement as it not only generates the source code for software or website but it debugs the end-to-end before the final execution.

Let’s assume the write up is mostly accurate. It does not matter. Smart software will be shaped to deliver what I call orchestrated solutions either today, tomorrow or next month. Jobs already nuked by smartification are customer service reps, boilerplate writing jobs (hello, McKinsey), and translation. Some footloose and fancy free gig workers without AI skills may face dilemmas about whether to pursue begging, YouTubing the van life, or doing some spelunking in the Chemical Abstracts database for molecular recipes in a Walmart restroom.

The trajectory of applied AI is reasonably clear to me. Once “programming” gets swept into the Prada bag of AI, what other professions will be smartified? Once again, the likely path is lit by dim but visible Alibaba solar lights for the garden:

- Legal tasks which are repetitive. Even though the cases are different, the work flow is something an average law school graduate can master and learn to loathe

- Forensic accounting. Accountants are essentially Ground Hog Day people, because every tax cycle is the same old same old

- Routine one-day surgeries. Sorry, dermatologists, cataract shops, and kidney stone crunchers. Robots will do the job and not screw up the DRG codes too much.

- Marketers. I know marketing requires creative thinking. Okay, but based on the Super Bowl ads this year, I think some clients will be willing to give smart software a whirl. Too bad about filming a horse galloping along the beach in Half Moon Bay though. Oh, well.

That’s enough of the professionals who will be affected by orchestrated work flows surfing on smartified software.

Why am I bothering to write down what seems painfully obvious to my research team?

I just wanted another reason to say, “I am glad I am old.” What many young college graduates will discover is that, despite my “luck” over the course of my work career, smartified software will not only kill some types of work. Smart software will also remove the surprise in a serendipitous life journey.

To reiterate my point: I am glad I am old and understand efficiency, smartification, and the value of having been lucky.

Stephen E Arnold, March 13, 2024

AI Bubble Gum Cards

March 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

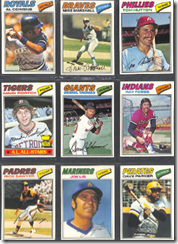

A publication for electrical engineers has created a new mechanism for making AI into a collectible. Navigate to “The AI apocalypse: A Scorecard.” Scroll down to the part of the post which looks like the gems from the 1950s:

The idea is to pick 22 experts and gather their big ideas about AI’s potential to destroy humanity. Here’s one example of an IEEE bubble gum card:

© by the estimable IEEE.

The information on the cards is eclectic. It is clear that some people think smart software will kill me and you. Others are not worried.

My thought is that IEEE should expand upon this concept; for example, here are some bubble gum card ideas:

- Do the NFT play? These might be easier to sell than IEEE memberships and subscriptions to the magazine

- Offer actual, fungible packs of trading cards with throw-back bubble gum

- Create an AI movie about AI experts with opposing ideas doing battle in a video game type world. Zap. You lose, you doubter.

But the old-fashioned approach to selling trading cards to grade school kids won’t work. First, there are very few corner stores near schools in many cities. Second, a special interest group will agitate to block the sale of cards about AI because the inclusion of chewing gum will damage children’s teeth. And, third, kids today want TikToks, at least until the service is banned by a fast-acting group of elected officials.

I think the IEEE will go in a different direction; for example, micro USBs with AI images and source code on them. Or, the IEEE should just advance to the 21st-century and start producing short-form AI videos.

The IEEE does have an opportunity. AI collectibles.

Stephen E Arnold, March 13, 2024

Want Clicks: Do Sad, Really, Really Sorrowful

March 13, 2024

This essay is the work of a dumb dinobaby. No smart software required.

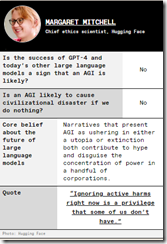

The US is a hotbed of negative news. It’s what drives the media and perpetuates the culture of fear that (arguably) has plagued the country since colonial times. US citizens and now the rest of the world are so addicted to bad news that a research team got the brilliant idea to study what words people click. Nieman Lab wrote about the study in, “Negative Words In News Headlines Generate More Clicks-But Sad Words Are More Effective Than Angry Or Scary Ones.”

Thanks, MSFT Copilot. One of Redmond’s security professionals I surmise?

Negative words are prevalent in headlines because they sell clicks. The journal Nature Human Behaviour published a study called “Negativity Drives Online News Consumption.” The study analyzed the effect of negative and emotional words on news consumption, and the research team discovered that negativity increased clickability. These findings also confirm the well-documented behavior of humans seeking negativity in all information-seeking.

It coincides with humanity’s instinct to be vigilant of any danger and avoid it. While humans instinctually gravitate towards negative headlines, certain negative words are more popular than others. Humans apparently are driven to click on sad-related synonyms, avoid anything resembling joy or fear, and angry words don’t have any effect. It all goes back to survival:

“And if we are to believe “Bad is stronger than good” derives from evolutionary psychology — that it arose as a useful heuristic to detect threats in our environment — why would fear-related words reduce likelihood to click? (The authors hypothesize that fear and anger might be more important in generating sharing behavior — which is public-facing — than clicks, which are private.)

In any event, this study puts some hard numbers to what, in most newsrooms, has been more of an editorial hunch: Readers are more drawn to negativity than to positivity. But thankfully, the effect size is small — and I’d wager that it’d be even smaller for any outlet that decided to lean too far in one direction or the other.”

It could also be a strict diet of danger-filled media.

Whitney Grace, March 13, 2024

Thomson Reuters Is Going to Do AI: Run Faster

March 11, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Thomson Reuters, a mostly low profile outfit, is going to do AI. Why’s this interesting to law schools, lawyers, accountants, special librarians, libraries, and others who “pay” for “real” information? There are three reasons:

- Money

- Markets

- Mania.

Thomson Reuters has been a tech talker for decades. The company created skunk works. It hired quirky MIT wizards. It bought businesses with information technology. But underneath the professional publishing clear coat, the firm is the creation of Lord Thomson of Fleet. The firm has a track record of being able to turn a profit on its $7 billion in revenues. But the future, if news reports are accurate, is artificial intelligence or smart software.

The young publishing executive says, “I have got to get ahead of this AI bus before it runs over me.” Thanks, MSFT Copilot. Working on security today?

But wait! What makes Thomson Reuters different from the New York Times or (heaven forbid the question) Rupert Murdoch’s confections? The answer is in my opinion: Thomson Reuters does the trust thing and is a professional publisher. I don’t want to explain that in the world of Lord Thomson of Fleet that publishing is publishing. Nope. Not going there. Thomson Reuters is a custom made billiard cue, not one of those bar pool cheapos.

As appropriate to today’s Thomson Reuters, the news appeared in Thomson’s own news releases first; for example, “Thomson Reuters Profit Beats Estimates Amid AI Push.” Yep, AI drives profits. That’s the “m” in money. Plus, late last year this article found its way to the law firm market (yep, that’s the second “m”): “Morgan Lewis and Thomson Reuters Enter into Partnership to Put Law Firms’ Needs at the Heart of AI Development.”

Now the third “m” or mania. Here’s a representative story, “Thomson Reuters to Invest US$8 billion in a Substantial AI-Focused Spending Initiative.” You can also check out the Financial Times’s report at this link.

Thomson Reuters is a $7 billion corporation. If the $8 billion number is on the money, the venerable news outfit is going to spend the equivalent of slightly more than one year’s revenue acquiring and investing in smart software. In terms of professional publishing, this chunk of change is roughly the equivalent of Sam AI-Man’s need for trillions of dollars for his smart software business.

Several thoughts struck me as I was reading about the $8 billion investment in smart software:

- In terms of publishing or more narrowly professional publishing, $8 billion will take some time to spend. But time is not on the side of publishing decision making processes. When the check is written for an AI investment, there may be some who ask, “Is this the correct investment? After all, aren’t we professional publishers serving lawyers, accountants, and researchers?”

- The US legal processes are interesting. But the minor challenge of Crown copyright adds a bit of spice to certain investments. The UK government itself is reluctant to push into some AI areas due to concerns that certain information may not be available unless the red tape about copyright has been trimmed, rolled, and put on the shelf. Without being disrespectful, Thomson Reuters could find some of the $8 billion headed into its clients’ pockets as legal challenges make their way through courts in Britain, Canada, and the US and probably some frisky EU states.

- The game for AI seems to be breaking into two tiers, what a former Greek minister calls the techno feudal set up. On one hand, there are giant technology centric companies (a club of which Thomson Reuters is not a member). These are Google- and Microsoft-scale outfits with infrastructure, data, customers, and multiple business models. On the other hand, there are the Product Watch outfits which are using open source and APIs to create “new” and “important” AI businesses, applications, and solutions. In short, there are some barons and a whole grab-bag of lesser folk. Is Thomson Reuters going to be able to run with the barons? Remember, please, the barons are riding stallions. Thomson Reuters-type firms either walk or ride donkeys.

Net net: If Thomson Reuters spends $8 billion on smart software, how many lawyers, accountants, and researchers will be put out of work? The risks are not just bad AI investments. The threat may be to gut the billing power of the paying customers for Thomson Reuters’ content. This will be entertaining to watch.

PS. The third “m”? It is mania, AI mania.

Stephen E Arnold, March 11, 2024

In Tech We Mistrust

March 11, 2024

While tech firms were dumping billions into AI, they may have overlooked one key component: consumer faith. The Hill reports, “Trust in AI Companies Drops to 35 Percent in New Study.” We note that 35% figure is for the US only, while the global drop was a mere 8%. Still, that is the wrong direction for anyone with a stake in the market. So what is happening? Writer Filip Timotija tells us:

“Multiple factors contributed to the decline in trust toward the companies polled in the data, according to Justin Westcott, Edelman’s chair of global technology. ‘Key among these are fears related to privacy invasion, the potential for AI to devalue human contributions, and apprehensions about unregulated technological leaps outpacing ethical considerations,’ Westcott said, adding ‘the data points to a perceived lack of transparency and accountability in how AI companies operate and engage with societal impacts.’ Technology as a whole is losing its lead in trust among sectors, Edelman said, highlighting the key findings from the study. ‘Eight years ago, technology was the leading industry in trust in 90 percent of the countries we study,’ researchers wrote, referring to the 28 countries. ‘Now it is most trusted only in half.’”

So it is not just AI we mistrust, it is tech companies as a whole. That tracks. The study polled 32,000 people across 28 countries. Timotija reminds us regulators in the US and abroad are scrambling to catch up. Will fear of consumer rejection do what neither lagging lawmakers nor common decency can? The write-up notes:

“Westcott argued the findings should be a ‘wake up call’ for AI companies to ‘build back credibility through ethical innovation, genuine community engagement and partnerships that place people and their concerns at the heart of AI developments.’ As for the impacts on the future for the industry as a whole, ‘societal acceptance of the technology is now at a crossroads,’ he said, adding that trust in AI and the companies producing it should be seen ‘not just as a challenge, but an opportunity.’”

Yes, an opportunity. All AI companies must do is emphasize ethics, transparency, and societal benefits over profits. Surely big tech firms will get right on that.

Cynthia Murrell, March 11, 2024

ACM: Good Defense or a Business Play?

March 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Professional publishers want to use the trappings of peer review, standards, tradition, and quasi academic hoo-hah to add value to their products; others want a quasi-monopoly. Think public legal filings and stuff in a high school chemistry book. The customers of professional publishers are typically not the folks at the pizza joint on River Road in Prospect, Kentucky. The business of professional publishing is an interesting one, but in the wild and crazy world of collapsing next-gen publishing, professional publishing is often ignored. A publisher conference aimed at professional publishers is quite different from the Jazz Age South by Southwest shindig.

Yep, free. Thanks, MSFT Copilot. How’s that security today?

But professional publishers have been in the news. Examples include the dust up about academics making up data. The big time president of the much-honored Stanford University took intellectual short cuts and quit late last year. Then there was the nasty issue about data and bias at the esteemed Harvard University. Plus, a number of bookish types have guess-timated that a hefty percentage of research studies contain made-up data. Hey, you gotta publish to get tenure or get a grant, right?

But there is an intruder in the basement of the professional publishing club. The intruder positions itself in the space between the making up of some data and the professional publishing process. That intruder is ArXiv, an open-access repository of electronic preprints and postprints (known as e-prints) approved for posting after moderation, according to Wikipedia. (Wikipedia is the cancer which killed the old-school encyclopedias.) Plus, there are services which offer access to professional content without paying for the right to host the information. I won’t name these services because I have no desire to have legal eagles circle about my semi-functioning head.

Why do I present this grade-school level history? I read “CACM Is Now Open Access.” Let’s let the Association for Computing Machinery explain its action:

For almost 65 years, the contents of CACM have been exclusively accessible to ACM members and individuals affiliated with institutions that subscribe to either CACM or the ACM Digital Library. In 2020, ACM announced its intention to transition to a fully Open Access publisher within a roughly five-year timeframe (January 2026) under a financially sustainable model. The transition is going well: By the end of 2023, approximately 40% of the ~26,000 articles ACM publishes annually were being published Open Access utilizing the ACM Open model. As ACM has progressed toward this goal, it has increasingly opened large parts of the ACM Digital Library, including more than 100,000 articles published between 1951–2000. It is ACM’s plan to open its entire archive of over 600,000 articles when the transition to full Open Access is complete.

The decision was not an easy one. Money issues rarely are.

I want to step back and look at this interesting change from a different point of view:

- Getting a degree today is less of a must have than when I was a wee dinobaby. My parents told me I was going to college. Period. I learned how much effort was required to get my hands on academic journals. I was a master of knowing that Carnegie-Mellon had new but limited bound volumes of certain professional publications. I knew what journals were at the University of Pittsburgh. I used these resources when the Duquesne Library was overrun with the faithful. Now “researchers” can zip online and whip up astonishing results. Google-type researchers prefer the phrase “quantumly supreme results.” This social change is one factor influencing the ACM.

- Stabilizing revenue streams means pulling off a magic trick. Sexy conferences and special events complement professional association membership fees. Reducing costs means knocking off the now, very very expensive printing, storing, and shipping of physical journals. The ACM seems to have figured out how to keep the lights on and the computing machine types spending.

- ACM members can use ACM content the way they do a pirate library’s or the feel good ArXiv outfit. The move helps neutralize discontent among the membership, and it is good PR.

These points raise a question; to wit: In today’s world how relevant will a professional association and its professional publications be going forward? The ACM states:

By opening CACM to the world, ACM hopes to increase engagement with the broader computer science community and encourage non-members to discover its rich resources and the benefits of joining the largest professional computer science organization. This move will also benefit CACM authors by expanding their readership to a larger and more diverse audience. Of course, the community’s continued support of ACM through membership and the ACM Open model is essential to keeping ACM and CACM strong, so it is critical that current members continue their membership and authors encourage their institutions to join the ACM Open model to keep this effort sustainable.

Yep, surviving in a world of faux expertise.

Stephen E Arnold, March 8, 2024

Engineering Trust: Will Weaponized Data Patch the Social Fabric?

March 7, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Trust is a popular word. Google wants me to trust the company. Yeah, I will jump right on that. Politicians want me to trust their attestations that citizen interests are important. I worked in Washington, DC, for too long. Nope, I just have too much first-hand exposure to the way “things work.” What about my bank? It wants me to trust it. But isn’t the institution the subject of a couple of government investigations? Oh, not important. And what about the images I see when I walk gingerly between the guard rails? I trust them, right? Ho ho ho.

In our post-Covid, pre-US national election period, the word “trust” is carrying quite a bit of freight. Whom do I trust? Not too many people. What about good old Socrates, who was an Athenian when Greece was not yet a collection of ferocious football teams and sun seekers? As you may recall, he trusted fellow residents of Athens. He ended up dead from either a lousy snack bar meal and beverage, or his friends did him in.

One of his alleged precepts in his pre-artificial intelligence world was:

“We cannot live better than in seeking to become better.” — Socrates

Got it, Soc.

Thanks MSFT Copilot and provider of PC “moments.” Good enough.

I read “Exclusive: Public Trust in AI Is Sinking across the Board.” Then I thought about Socrates being convicted for corruption of youth. See. Education does not bring unlimited benefits. Apparently Socrates asked annoying questions which opened him to charges of impiety. (Side note: Hey, Socrates, go with the flow. Just pray to the carved mythical beast, okay?)

A loss of public trust? Who knew? I thought it was common courtesy, a desire to discuss and compromise, not whip out a weapon and shoot, bludgeon, or stab someone to death. In the case of Haiti, a twist is that a victim is bound and then barbequed in a steel drum. Cute and to me a variation of stacking seven tires in a pile, dousing them with gasoline, inserting a person, and igniting the combo. I noted a variation in Ukraine. Elderly women make cookies laced with poison and provide them to special operation fighters. Subtle and effective due to troop attrition, I hear. Should I trust US Girl Scout cookies? No thanks.

What’s interesting about the write up is that it provides statistics to back up this brilliant and innovative insight about modern life, and that its focus is artificial intelligence. Let me pluck several examples from the dot point filled write up:

- “Globally, trust in AI companies has dropped to 53%, down from 61% five years ago.”

- “Trust in AI is low across political lines. Democrats trust in AI companies is 38%, independents are at 25% and Republicans at 24%.”

- “Eight years ago, technology was the leading industry in trust in 90% of the countries Edelman studies. Today, it is the most trusted in only half of countries.”

AI is trendy; crunchy click bait is highly desirable even for an estimable survivor of Silicon Valley style news reporting.

Let me offer several observations which may either be troubling or typical outputs from a dinobaby working in an underground computer facility:

- Close knit groups are more likely to have some concept of trust. The exception, of course, is the behavior of the Hatfields and McCoys

- Outsiders are viewed with suspicion. Often for no reason, a newcomer becomes the default bad entity

- In my lifetime, I have watched institutions take actions which erode trust on a consistent basis.

Net net: Old news. AI is not new. Hyperbole and click obsession are factors which illustrate the erosion of social cohesion. Get used to it.

Stephen E Arnold, March 7, 2024

Kagi Hitches Up with Wolfram

March 6, 2024

This essay is the work of a dumb dinobaby. No smart software required.

“Kagi + Wolfram” reports that the for-fee Web search engine with AI has hooked up with one of the pre-eminent mathy people innovating today. The write up includes PR about the upsides of Kagi search and Wolfram’s computational services. The article states:

…we have partnered with Wolfram|Alpha, a well-respected computational knowledge engine. By integrating Wolfram Alpha’s extensive knowledge base and robust algorithms into Kagi’s search platform, we aim to deliver more precise, reliable, and comprehensive search results to our users. This partnership represents a significant step forward in our goal to provide a search engine that users can trust to find the dependable information they need quickly and easily. In addition, we are very pleased to welcome Stephen Wolfram to Kagi’s board of advisors.

The basic wagon gets a rethink with other animals given a chance to make progress. Thanks, MSFT Copilot. Good enough, but in truth I gave up trying to get a similar image with the dog replaced by a mathematician and the pig replaced with a perky entrepreneur.

The integration of mathiness with smart search is a step forward, certainly more impressive than other firms’ recycling of Web content into bubble gum cards presenting answers. Kagi is taking steps (small, methodical ones) toward what I have described as “search enabled applications” and my friend Dr. Greg Grefenstette described in his book with the snappy title “Search-Based Applications: At the Confluence of Search and Database Technologies (Synthesis Lectures on Information Concepts, Retrieval, and Services, 17).”

It may seem like a big step from putting mathiness in a Web search engine to creating a platform for search enabled applications. It may be, but I like to think that some bright young sprouts will figure out that linking a mostly brain dead legacy app with a Kagi-Wolfram service might be useful in a number of disciplines. Even some super confident really brilliantly wonderful Googlers might find the service useful.

Net net: I am gratified that Kagi’s for-fee Web search is evolving. Google’s apparent ineptitude might give Kagi the chance Neeva never had.

Stephen E Arnold, March 6, 2024

Open Source: Free, Easy, and Fast Sort Of

February 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Not long ago, I spoke with an open source cheerleader. The pros outweighed the cons from this technologist’s point of view. (I would like to ID the individual, but I try to avoid having legal eagles claw their way into my modest nest in rural Kentucky. Just plug in “John Wizard Doe”, a high profile entrepreneur and graduate of a big time engineering school.)

I think going up suggests a problem.

Here are highlights of my notes about the upside of open source:

- Many smart people eyeball the code and problems are spotted and fixed

- Fixes get made and deployed more rapidly than commercial software which often works on a longer “fix” cycle

- Dead end software can be given new kidneys or maybe a heart with a fork

- For most use cases, the software is free or cheaper than commercial products

- New functions become available; some of which fuel new product opportunities.

There may be a few others, but let’s look at a downside few open source cheerleaders want to talk about. I don’t want to counter the widely held belief that “many smart people eyeball the code.” The method is grab and go. The speed angle is relative. Reviving open source again and again is quite useful; bad actors do this. Most people just recycle. The “free” angle is a big deal. Everyone likes “free” because why not? New functions become available so new markets are created. Perhaps. But in the cyber crime space, innovation boils down to finding a mistake that can be exploited with good enough open source components, often with some mileage on their chassis.

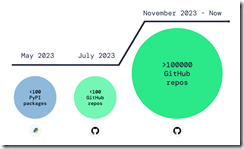

But there is one point on which open source champions crank back the rah rah output: “Over 100,000 Infected Repos Found on GitHub.” I want to point out that Microsoft, the all-time champion in security, owns GitHub. If you think about Microsoft and security too much, you may come away confused. I know I do. I also get a headache.

This “Infected Repos” article from Apiiro asserts:

Our security research and data science teams detected a resurgence of a malicious repo confusion campaign that began mid-last year, this time on a much larger scale. The attack impacts more than 100,000 GitHub repositories (and presumably millions) when unsuspecting developers use repositories that resemble known and trusted ones but are, in fact, infected with malicious code.

The write up provides excellent information about how the bad repos create problems and provides a recipe for doing this type of malware distribution yourself. (As you know, I am not too keen on having certain information with helpful detail easily available, but I am a dinobaby, and dinobabies have crazy ideas.)
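For readers who want the defensive side of “repo confusion” rather than the recipe, here is a minimal Python sketch of a lookalike check. It is my own illustration, not the method from the quoted article. The allow-list, the function name `lookalike_of`, and the 0.85 similarity threshold are all assumptions picked for the example.

```python
from difflib import SequenceMatcher

# Hypothetical allow-list of repositories a team already trusts.
TRUSTED_REPOS = {"psf/requests", "pallets/flask", "numpy/numpy"}


def lookalike_of(candidate, trusted=TRUSTED_REPOS, threshold=0.85):
    """Return the trusted repo that `candidate` resembles, or None.

    A candidate is flagged when it is not on the allow-list but either
    reuses a trusted repo's name under a different owner or is a near
    match for the full "owner/name" string.
    """
    cand = candidate.lower()
    if cand in {t.lower() for t in trusted}:
        return None  # exact match: this is the trusted repo itself
    cand_name = cand.split("/")[-1]
    for repo in sorted(trusted):
        repo_low = repo.lower()
        same_name = cand_name == repo_low.split("/")[-1]
        close = SequenceMatcher(None, cand, repo_low).ratio() >= threshold
        if same_name or close:
            return repo
    return None


for repo in ["psf/requests", "attacker/requests", "psf/requestss"]:
    print(repo, "->", lookalike_of(repo))
```

A check like this only catches names that look familiar. It says nothing about what the code inside the repository does, so treat it as a tripwire, not a security guarantee.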

If we confine our thinking to the open source champion’s five benefits, I think security issues may be more important in some use cases. The better question is, “Why don’t open source supporters like Microsoft and the person with whom I spoke want to talk about open source security?” My view is that:

- Security is an after thought or a never thought facet of open source software

- Making money is Job #1, so free trumps spending money to make sure the open source software is secure

- Open source appeals to some venture capitalists. Why? RedHat, Elastic, and a handful of other “open source plays”.

Net net: Just visualize a future in which smart software ingests poisoned code, and programmers rely on smart software to make them 10X engineers. Does that create a bit of a problem? Of course not. Microsoft is the security champ, and GitHub is Microsoft.

Stephen E Arnold, February 29, 2024

Google Gems for the Week of 19 February, 2024

February 27, 2024

This essay is the work of a dumb humanoid. No smart software required.

This week’s edition of Google Gems focuses on a Hope Diamond and a handful of lesser stones. Let’s go.

THE HOPE DIAMOND

In the chaos of the AI Gold Rush, horses fall and wizard engineers realize that they left their common sense in the saloon. Here’s the Hope Diamond from the Google.

The world’s largest online advertising agency created smart software with a lot of math, dump trucks filled with data, and wizards who did not recall that certain historical figures in the US were not of color. “Google Says Its AI Image-Generator Would Sometimes Overcompensate for Diversity,” an Associated Press story, explains in very gentle rhetoric that its super sophisticated brain and DeepMind would get the race of historical figures wrong. I think this means that Ben Franklin could look like a Zulu prince or George Washington might have some resemblance to Rama (blue skin, bow, arrow, and snappy hat).

My favorite search and retrieval expert Prabhakar Raghavan (famous for his brilliant lecture in Paris about the now renamed Bard) indicated that Google’s image rendering system did not hit the bull’s eye. No, Dr. Raghavan, the digital arrow pierced the micrometer thin plastic wrap of Google’s super sophisticated, quantum supremacy, gee-whiz technology.

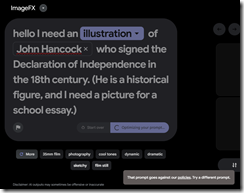

The message I received from Google when I asked for an illustration of John Hancock, an American historical figure. Too bad because this request goes against Google’s policies. Yep, wizards infused with the high school science club management method.

More important, however, was how Google’s massive stumble complemented OpenAI’s ChatGPT wonkiness. I want to award the Hope Diamond Award for AI Ineptitude to both Google and OpenAI. But, alas, there is just one Hope Diamond. The award goes to the quantumly supreme outfit Google.

[Note: I did not quote from the AP story. Why? Years ago the outfit threatened to sue people who use their stories’ words. Okay, no problemo, even though the newspaper for which I once worked supported this outfit in the days of “real” news, not recycled blog posts. I listen, but I do not forget some things. I wonder if the AP knows that Google Chrome can finish a “real” journalist’s sentences for he/him/she/her/it/them. Read about this “feature” at this link.]

Here are my reasons:

- Google is in catch-up mode and, like those in the old Gold Rush, some fall from their horses and get up close and personal with hooves. How do those affect the body of a wizard? I have never fallen from a horse, but I saw a fellow get trampled when I lived in Campinas, Brazil. I recall there was a lot of screaming and blood. Messy.

- Google’s arrogance and intellectual sophistication cannot prevent incredible gaffes. A company with a mixed record of managing diversity, equity, etc. has demonstrated why Xooglers like Dr. Timnit Gebru find the company “interesting.” I don’t think Google is interesting. I think it is disappointing, particularly in the racial sensitivity department.

- For years I have explained that Google operates via the high school science club management method. What’s cute when one is 14 loses its charm when those using the method have been at it for a quarter century. It’s time to put on the big boy pants.

OTHER LITTLE GEMMAS

The previous week revealed a dirt trail with some sharp stones and thorny bushes. Here’s a quick selection of the sharpest and thorniest:

- The Google is running webinars to inform publishers about life after their wonderful long-lived cookies. Read more at Fipp.com.

- Google has released a small model as open source. What about the big model with the diversity quirk? Well, no. Read more at the weird green Verge thing.

- Google cares about AI safety. Yeah, believe it or not. Read more about this PR move on Techcrunch.

- Web search competitors will fail. This is a little stone. Yep, a kidney stone for those who don’t recall Neeva. Read more at Techpolicy.

- Did Google really pay $60 million to get that outstanding Reddit content? Wow. Maybe Google looks at different subreddits than my research team does. Read more about it in 9 to 5 Google.

- What happens when an uninformed person uses the Google Cloud? Answer: Sticker shock. More about this estimable method in The Register.

- Some spoil sport finds traffic lights informed with Google’s smart software annoying. That’s hard to believe. Read more at this link.

- Google pointed out in a court filing that DuckDuckGo was a meta search system (that is, a search interface to other firms’ indexes) and Neeva was a loser crafted by Xooglers. Read more at this link.

No Google Hope Diamond report would be complete without pointing out that the online advertising giant will roll out its smart software to companies. Read more at this link. Let’s hope the wizards figure out that historical figures often have quite specific racial characteristics like Rama.

I wanted to include an image of Google’s rendering of a signer of the Declaration of Independence. What you see in the illustration above is what I got. Wow. I have more “gemmas”, but I just don’t want to present them.

Stephen E Arnold, February 27, 2024