Clippy, How Is Copilot? Oh, Too Bad

December 8, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

In most of my jobs, rewards landed on my desk when I sold something. When the firms silly enough to hire me rolled out a product, I cannot remember one that failed. The sales professionals were the early warning system for many of our consulting firm’s clients. Management provided money to a product manager or R&D whiz with a great idea. Then a product or new service idea emerged, often at a company event. Some were modest, but others featured bells and whistles. One such roll out had a big name person who was a former adviser to several presidents. These firms were either lucky or well managed. Product dogs, diseased ferrets, and outright losers were identified early and the efforts redirected.

Two sales professionals realize that their prospects resist Microsoft’s agentic pawing. Mortgages must be paid. Sneakers must be purchased. Food has to be put on the table. Sales are needed, not push backs. Thanks, Venice.ai. Good enough.

But my employers were in tune with what their existing customer base wanted. Climbing a tall tree and going out on a limb were not common occurrences. Even Apple, which resides in a peculiar type of commercial bubble, recognizes a product that does not sell. A recent example is the itsy bitsy, teeny weenie mobile thingy. Apple bounced back with the Granny Scarf designed to hold any mobile phone. The thin and light model is not killed; it’s just not everywhere like the old reliable orange iPhone.

Sales professionals talk to prospects and customers. If something is not selling, the sales people report, “Problemo, boss.”

In the companies which employed me, the sales professionals knew what was coming and could mention it in appropriate terms to those in the target market. This happened before the product or service was in production or available to clients. My employers (Halliburton, Booz, Allen, and a couple of others held in high esteem) had the R&D, the market signals, the early warning system for bad ideas, and the refinement or improvement mechanism working in a reliable way.

I read “Microsoft Drops AI Sales Targets in Half after Salespeople Miss Their Quotas.” The headline suggested three things to me instantly:

- The pre-sales early warning radar system did not exist or it was broken

- The sales professionals said in numbers, “Boss, this Copilot AI stuff is not selling.”

- Microsoft committed billions of dollars and significant, expensive professional staff time to something that prospects and customers do not rush to write checks for, use, or tell their friends about as the next big thing.

The write up says:

… one US Azure sales unit set quotas for salespeople to increase customer spending on a product called Foundry, which helps customers develop AI applications, by 50 percent. Less than a fifth of salespeople in that unit met their Foundry sales growth targets. In July, Microsoft lowered those targets to roughly 25 percent growth for the current fiscal year. In another US Azure unit, most salespeople failed to meet an earlier quota to double Foundry sales, and Microsoft cut their quotas to 50 percent for the current fiscal year. The sales figures suggest enterprises aren’t yet willing to pay premium prices for these AI agent tools. And Microsoft’s Copilot itself has faced a brand preference challenge: Earlier this year, Bloomberg reported that Microsoft salespeople were having trouble selling Copilot to enterprises because many employees prefer ChatGPT instead.

Microsoft appears to have listened to the feedback. The adjustment, however, does not address the failure to implement the type of marketing probing process used by Halliburton and Booz, Allen: Microsoft implemented the “think it and it will become real” approach. The thinking in this case is that software can perform human work roles in a way that is equivalent to or better than a human’s execution.

I may be a dinobaby, but I figured out quickly that smart software has been for the last three years a utility. It is not quite useless, but it is not sufficiently robust to do the work that I do. Other people are on the same page with me.

My take away from the lower quotas is that Microsoft should have a rethink. The OpenAI bet, the AI acquisitions, the death march to put software that makes mistakes in applications millions use in quite limited ways, and the crazy publicity output to sell Copilot are sending Microsoft leadership both audio and visual alarms.

Plus, OpenAI has copied Google’s weird Red Alert. Since Microsoft has skin in the game with OpenAI, perhaps Microsoft should open its eyes and check out the beacons and listen to the klaxons ringing in Softieland sales meetings and social media discussions about Microsoft AI? Just a thought. (That Telegram virtual AI data center service looks quite promising to me. Telegram’s management is avoiding the Clippy-type error. Telegram may fail, but that outfit is paying GPU providers in TONcoin, not actual fiat currency. The good news is that MSFT can make Azure AI compute available to Telegram and get paid in TONcoin. Sounds like a plan to me.)

Stephen E Arnold, December 8, 2025

Telegram’s Cocoon AI Hooks Up with AlphaTON

December 5, 2025

[This post is a version of an alert I sent to some of the professionals for whom I have given lectures. It is possible that the entities identified in this short report will alter their messaging and delete their Telegram posts. However, the thrust of this announcement is directionally correct.]

As part of its rapid expansion into decentralized artificial intelligence, Telegram announced a deal with AlphaTON Capital Corp. The Telegram post revealed that AlphaTON would be a flagship infrastructure and financial partner. The announcement was posted to the Cocoon Group within hours of AlphaTON getting clear of U.S. SEC “baby shelf” financial restrictions. AlphaTON promptly launched a $420.69 million securities push. Telegram and AlphaTON either acted in a coincidental way or Pavel Durov moved to make clear his desire to build a smart, Telegram-anchored financial service.

AlphaTON, a Nasdaq microcap formerly known as Portage Biotech, rebranded in September 2025. The “new” AlphaTON claims to be deploying Nvidia B200 GPU clusters to support Cocoon, Telegram’s confidential-compute AI network. The company’s pivot from oncology to crypto-finance and AI infrastructure was sudden. Plus, AlphaTON’s CEO Brittany Kaiser (best known for Cambridge Analytica) has allegedly interacted with Russian political and business figures during earlier data-operations ventures. If the allegations are accurate, Ms. Kaiser has connections to Russia-linked influence and financial networks. Telegram is viewed by some organizations like Kucoin as a reliable operational platform for certain financial activities.

Telegram has positioned AlphaTON as a partner and developer in the Telegram ecosystem. Firms like Huione Guarantee allegedly used Telegram for financial maneuvers that resulted in criminal charges. Other alleged uses of the Telegram platform have included other illegal activities identified in the more than a dozen criminal charges for which Pavel Durov awaits trial in France. Telegram’s instant promotion of AlphaTON, combined with the firm’s new ability to raise hundreds of millions, points to a coordinated strategy to build an AI-enabled financial services layer using Cocoon’s VAIC or virtual artificial intelligence complex.

The message seems clear. Telegram is not merely launching a distributed AI compute service; it is enabling a low latency, secrecy enshrouded AI-crypto financial construct. Telegram and AlphaTON both see an opportunity to profit from a fusion of distributed AI, cross jurisdictional operation, and a financial pay off from transactions at scale. For me and my research team, the AlphaTON tie-up signals that Telegram’s next frontier may blend decentralized AI, speculative finance, and actors operating far from traditional regulatory guardrails.

In my monograph “Telegram Labyrinth” (available only to law enforcement, US intelligence officers, and cyber attorneys in the US), I explain why Telegram requires close monitoring and a new generation of intelware software. Yesterday’s tools were not designed for what Telegram is deploying itself and with its partners. Thank you.

Stephen E Arnold, December 5, 2025, 10:34 am US Eastern time

Apple Misses the AI Boat Again

December 4, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

Apple and Telegram have a characteristic in common. Neither recognized the AI boomlet that began in 2020 or so. Apple was thinking about Granny scarfs that could hold an iPhone and working out ways to cope with its dependence on Chinese manufacturing. Telegram was struggling with the US legal system and trying to create a programming language that a mere human could use to code a distributed application.

Apple’s ship has sailed, and it may dock at Google’s Gemini private island or it could decide to purchase an isolated chunk of real estate and build its de-perplexing AI system at that location.

Thanks, MidJourney. Good enough.

I thought about missing a boat or a train. The reason? I read “Apple AI Chief John Giannandrea Retiring After Siri Delays.” I simply don’t know who has been responsible for Apple AI. Siri did not work when I looked at it on my wife’s iPhone many years ago. Apparently it doesn’t work today. Could that be a factor in the leadership changes at the Tim Apple outfit?

The write up states:

Giannandrea will serve as an advisor between now and 2026, with former Microsoft AI researcher Amar Subramanya set to take over as vice president of AI. Subramanya will report to Apple engineering chief Craig Federighi, and will lead Apple Foundation Models, ML research, and AI Safety and Evaluation. Subramanya was previously corporate vice president of AI at Microsoft, and before that, he spent 16 years at Google.

Apple will probably have a person who knows some people to call at Softie and Google headquarters. However, when will the next AI boat arrive? Apple excelled at announcing AI, but no boat arrived. Telegram has an excuse; for example, its owner Pavel Durov has been embroiled in legal hassles and arm wrestling with the reality that developing complex applications for the Telegram platform is too difficult. One would have thought that Apple could have figured out a way to improve Siri, but it apparently was lost in a reality distortion field. Telegram didn’t because Pavel Durov was in jail in Paris, then confined to the country, and had to report to the French judiciary like a truant school boy. Apple just failed.

The write up says:

Giannandrea’s departure comes after Apple’s major iOS 18 Siri failure. Apple introduced a smarter, “Apple Intelligence” version of Siri at WWDC 2024, and advertised the functionality when marketing the iPhone 16. In early 2025, Apple announced that it would not be able to release the promised version of Siri as planned, and updates were delayed until spring 2026. An exodus of Apple’s AI team followed as Apple scrambled to improve Siri and deliver on features like personal context, onscreen awareness, and improved app integration. Apple is now rumored to be partnering with Google for a more advanced version of Siri and other Apple Intelligence features that are set to come out next year.

My hunch is that grafting AI into the bizarro world of the iPhone and other Apple computing devices may be a challenge. Telegram’s solution is to not do hardware. Apple is now an outfit distinguishing itself by missing the boat. When does the next one arrive?

Stephen E Arnold, December 4, 2025

A New McKinsey Report Introduces New Jargon for Its Clients

December 3, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I read “Agents, Robots, and Us: Skill Partnerships in the Age of AI.” The write up explains that lots of employees will be terminated. Think of machines displacing seamstresses. AI is going to do that to jobs, lots of jobs.

I want to focus on a different aspect of the McKinsey Global Institute Report (a PR and marketing entity not unlike Telegram’s TON Foundation in my view).

Thanks, Venice.ai. Your cartoon contains neither females nor minorities. That’s definitely a good enough approach. But you have probably done a number on a few graphic artists.

First, the report offers you the potential client an opportunity to use McKinsey’s AI chatbot. The service is a test, but I have a hunch that it is not much of a test. The technology will be deployed so that McKinsey can terminate those who underperform in certain work related tasks. The criteria for keeping one’s job at a blue chip consulting firm vary from company to company. But those who don’t quit to find greener or at least less crazy pastures will now work under the watchful eye of McKinsey AI. It takes a great deal of time to write a meaningful analysis of a colleague’s job performance. Let AI do it with exceptions made for “special” hires of course. Give it a whirl.

Second, the report offers what I call consultant facts. These are statements which link the painfully obvious with a rationale. Let me give you an example from this pre-Thanksgiving sales document. McKinsey states:

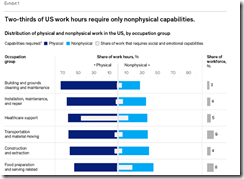

Two thirds of US work hours require only nonphysical capabilities

The painfully obvious: Most professional work is not “physical.” That means 67 percent of an employee’s fully loaded cost can be shifted to smart or semi-smart, good enough AI agentic systems. Then the obvious and the implication of financial benefits are supported by a truly blue chip chart. I know because as you can see, the graphics are blue. Here’s a segment of the McKinsey graph:

Notice that the chart is presented so that a McKinsey professional can explain the nested bar charts and expand on such data as “5 percent of a health care workforce can be agentized.” Will that resonate with hospital administrators working for a roll up of individual hospitals? That’s big money. Get the AI working in one facility and then roll it out. Boom. An upside that seems credible. That’s the key to the consultant facts. Additional analysis is needed to tailor these initial McKinsey graph data to a specific use case. As a sales hook, this works and has worked for decades. Fish never understand hooks with plastic bait. Deer never quite master automobiles and headlights.

Third, the report contains sales and marketing jargon for 2026 and possibly beyond. McKinsey hopes for decades to come, I think. Here’s a small selection of the words that will be used, recycled, and surfaced in lectures by AI experts to quite large crowds of conference attendees:

AI adjacent capabilities

AI fluency

Embodied AI

HMC or human machine collaboration

High prevalence skills

Human-agent-robot roles

technical automation potential

If you cannot define these, you need to hire McKinsey. If you want to grow as a big time manager, send that email or FedEx with a handwritten note on your engraved linen stationery.

Fourth, some humans will be needed. McKinsey wants to reassure its clients that software cannot replace the really valuable human. What do you think makes a really valuable worker beyond AI fluency? [a] A professional who signed off on a multi-million McKinsey consulting contract? [b] A person who helped McKinsey consultants get the needed data and interviews from an otherwise secretive client with compartmentalized and secure operating units? [c] A former McKinsey consultant now working for the firm to which McKinsey is pitching an AI project?

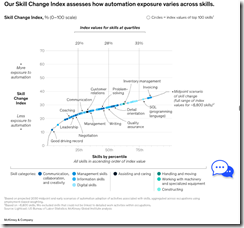

Fifth, the report introduces a new global index. The data in this free report is unlikely to be free in the future. McKinsey clients can obtain these data. This new global index is called the Skills Change Index. Here’s an example. You can get a bit more marketing color in the cited report. Just feast your eyes on this consultant fact packed chart:

Several comments. The weird bubble in the right hand page’s margin is your link to the McKinsey AI system. Give it a whirl, please. Look at the wonderland of information in a single chart presented in true blue, “just the facts, ma’am” style. The hawk-eyed will see that “leadership” seems immune to AI. Obviously senior management smart enough to hire McKinsey will be AI fluent and know the score or at least the projected financial payoff resulting from terminating staff who fail to up their game when robots do two thirds of the knowledge workers’ tasks.

Why has McKinsey gone to such creative lengths to create an item like this marketing collateral? Multiple teams labored on this online brochure. Graphic designers went through numerous versions of the sliding panels. McKinsey knows there is money in those AI studies. The firm will apply its intellectual method to the wizards who are writing checks to AI companies to build big data centers. Even Google is hedging its bets by packaging its data centers as providers to super wary customers like NATO. Any company can benefit from AI fluency-centric efficiency inputs. Well, not any. The reason is that only companies who can pay McKinsey fees qualify to be clients.

The 11 people identified as the authors have completed the equivalent of a death march. Congratulations. I applaud you. At some point in your future career, you can look back on this document and take pride in providing a road map for companies eager to dump human workers for good enough AI systems. Perhaps one of you will be able to carry a sign in a major urban area that calls attention to your skills? You can look back and tell your friends and family, “I was part of this revolution.” So Happy holidays to you, McKinsey, and to the other blue chip firms exploiting good enough smart software.

Stephen E Arnold, December 3, 2025

Meta: Flying Its Flag for Moving Fast and Breaking Things

December 3, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Meta, a sporty outfit, is the subject of an interesting story in “Press Gazette,” an online publication. The article “News Publishers File Criminal Complaint against Mark Zuckerberg Over Scam Ads” asserts:

A group of news publishers have filed a police complaint against Meta CEO Mark Zuckerberg over scam Facebook ads which steal the identities of journalists. Such promotions have been widespread on the Meta platform and include adverts which purport to be authored by trusted names in the media.

Thanks, MidJourney. Good enough, the gold standard for art today.

I can anticipate the outputs from some Meta adherents; for example, “We are really, really sorry.” or “We have specific rules against fraudulent behavior and we will take action to address this allegation.” Or, “Please, contact our legal representative in Sweden.”

The write up does not speculate as I just did in the preceding paragraph. The article takes a different approach, reporting:

According to Utgivarna: “These ads exploit media companies and journalists, cause both financial and psychological harm to innocent people, while Meta earns large sums by publishing the fraudulent content.” According to internal company documents, reported by Reuters, Meta earns around $16bn per year from fraudulent advertising. Press Gazette has repeatedly highlighted the use of well-known UK and US journalists to promote scam investment groups on Facebook. These include so-called pig-butchering schemes, whereby scammers win the trust of victims over weeks or months before persuading them to hand over money. [Emphasis added by Beyond Search]

On November 22, 2025, Time Magazine ran this allegedly accurate story “Court Filings Allege Meta Downplayed Risks to Children and Misled the Public.” In that article, the estimable social media company found itself in the news. That write up states:

Sex trafficking on Meta platforms was both difficult to report and widely tolerated, according to a court filing unsealed Friday. In a plaintiffs’ brief filed as part of a major lawsuit against four social media companies, Instagram’s former head of safety and well-being Vaishnavi Jayakumar testified that when she joined Meta in 2020 she was shocked to learn that the company had a “17x” strike policy for accounts that reportedly engaged in the “trafficking of humans for sex.”

I find it interesting that Meta is referenced in legal issues involving two particularly troublesome problems in many countries around the world. The one two punch is sex trafficking and pig butchering. I — probably incorrectly — interpret these two allegations as kiddie crime and theft. But I am a dinobaby, and I am not savvy to the ways of the BAIT (big AI tech)-type companies. Getting labeled as a party to sex trafficking and pig butchering is quite interesting to me. Happy holidays to Meta’s PR and legal professionals. You may be busy and 100 percent billable over the holidays and into the new year.

Several observations may be warranted:

- There are some frisky BAIT outfits in Silicon Valley. Meta may well be competing for the title as the Most Frisky Firm (MFF). I wonder what the prize is?

- Meta was annoyed with a “tell all” book written by a former employee. Meta’s push back seemed a bit of a tell to me. Perhaps some of the information hit too close to the leadership of Meta? Now we have sex and fraud allegations. So…

- How will Facebook, Instagram, and WhatsApp innovate in ad sales once Meta’s AI technology is fully deployed? Will AI, for example, block ad sales that are questionable? AI does make errors, which might be a useful angle for Meta going forward.

Net net: Perhaps some journalist with experience in online crime will take a closer look at Meta. I smell smoke. I am curious about the fire.

Stephen E Arnold, December 3, 2025

Open Source Now for Rich Peeps

December 3, 2025

Once upon a time, open source was the realm of startups in a niche market. Nolan Lawson wrote about “The Fate Of ‘Small’ Open Source” on his blog Read The Tea Leaves. He explains that more developers are using AI in their work and it’s a step ahead of how coding used to be done. He observed a societal change that has been happening since the invention of the Internet: “I do think it’s a future where we prize instant answers over teaching and understanding.”

Old-fashioned research is now an art that few decide to master except in some circumstances. However, that doesn’t help the open source libraries that built the foundation of modern AI and most systems. Lawson waxes poetic about the ending of an era and what’s the point of doing something new in an old language. He uses a lot of big words and tech speak that most people won’t understand, but I did decipher that he’s upset that big corporations and AI chatbots are taking away the work.

He remains hopeful though:

“So if there’s a conclusion to this meandering blog post (excuse my squishy human brain; I didn’t use an LLM to write this), it’s just that: yes, LLMs have made some kinds of open source obsolete, but there’s still plenty of open source left to write. I’m excited to see what kinds of novel and unexpected things you all come up with.”

My squishy brain comprehends that the future is as bleak as the present, but it’s all relative and depends on how we decide to make it.

Whitney Grace, December 3, 2025

AI-Yai-Yai: Two Wizards Unload on What VCs and Consultants Ignore

December 2, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I read “Ilya Sutskever, Yann LeCun and the End of Just Add GPUs.” The write up is unlikely to find too many accelerationists printing it out and handing it out to their pals at Philz Coffee. What does this indigestion maker say? Let’s take a quick look.

The write up says:

Ilya Sutskever – co-founder of OpenAI and now head of Safe Superintelligence Inc. – argued that the industry is moving from an “age of scaling” to an “age of research”. At the same time, Yann LeCun, VP & Chief AI Scientist at Meta, has been loudly insisting that LLMs are not the future of AI at all and that we need a completely different path based on “world models” and architectures like JEPA. [Beyond Search note, added because the author of the article was apparently making assumptions about what readers know: JEPA is shorthand for Joint Embedding Predictive Architecture. The idea is to find a recipe to let machines learn about the world in a way a human does.]

I like to try to make things simple. Simple things are easier for me to remember. This passage means: Dead end. New approaches needed. Your interpretation may be different. I want to point out that my experience with LLMs in the past few months has left me with a sense that a “No Outlet” sign is ahead.

Thanks, Venice.ai. The signs are pointing in weird directions, but close enough for horse shoes.

Let’s take a look at another passage in the cited article.

“The real bottleneck [is] generalization. For Sutskever, the biggest unsolved problem is generalization. Humans can:

learn a new concept from a handful of examples

transfer knowledge between domains

keep learning continuously without forgetting everything

Models, by comparison, still need:

huge amounts of data

careful evals (sic) to avoid weird corner-case failures

extensive guardrails and fine-tuning

Even the best systems today generalize much worse than people. Fixing that is not a matter of another 10,000 GPUs; it needs new theory and new training methods.”

I assume “generalization” to AI wizards has this freight of meaning. For me, this is a big word way of saying, “Current AI models don’t work or perform like humans.” I do like the clarity of “needs new theory and training methods.” The “old” way of training has not made too many pals among those who hold copyright in my opinion. The article calls this “new recipes.”

Yann LeCun points out:

LLMs, as we know them, are not the path to real intelligence.

Yann LeCun likes world models. They have these attributes:

- “learn by watching the world (especially video)

- build an internal representation of objects, space and time

- can predict what will happen next in that world, not just what word comes next”

What’s the fix? You can navigate to the cited article and read the punch line to the experts’ views of today’s AI.

Several observations are warranted:

- Lots of money is now committed to what strikes these experts as dead ends

- The move fast and break things believers are in a spot where they may be going too fast to stop when the “Dead End” sign comes into view

- AI companies are likely demonstrating that they can wish, think, and believe they have the next big thing while operating with a willing suspension of disbelief.

I wonder if the positions presented in this article provide some insight into Google’s building dedicated AI data centers for big buck, security conscious clients like NATO and Pavel Durov’s decision to build the SETI-type of system he has announced.

Stephen E Arnold, December 2, 2025

Palantir Channels Moses, Blue Chip Consulting Baloney, and PR

December 2, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

Palantir Technologies is a company in search of an identity. You may know the company latched on to the Lord of the Rings as a touchstone. The Palantir team adopted the “seeing stone.” The idea was that its technology could do magical things. There are several hundred companies with comparable technology. Datawalk has suggested that its system is the equivalent of Palantir’s. Is this true? I don’t know, but when one company is used by another company to make sales, it suggests that Palantir has done something of note.

I am thinking about Palantir because I did a small job for i2 Ltd. when Mike Hunter still was engaged with the firm. Shortly after this interesting work, I learned that Palantir was engaged in litigation with i2 Ltd. The allegations included Palantir’s setting up a straw man company to license i2 Ltd.’s Analyst’s Notebook software development kit. i2 was the ur-intelware. Many of the companies marketing link analysis, analytics focused on making sense of call logs, and other arcana of little interest to most people are relatives of i2. Some acknowledge this bloodline. Others, particularly young intelware company employees working trade shows, just look confused if I mention i2 Ltd. Time is like sandpaper. Facts get smoothed, rounded, or worn to invisibility.

We have an illustration output by MidJourney. It shows a person dressed in a wardrobe that is out of step with traditional business attire. The machine-generated figure is trying to convince potential customers that the peculiarly garbed speaker can be trusted. The sign would have been viewed as good marketing centuries ago. Today it is just peculiar, possibly desperate on some level.

I read “Palantir Uses the ‘5 Whys’ Approach to Problem Solving — Here’s How It Works.” What struck me about the article is that Palantir’s CEO Alex Karp is recycling business school truisms as the insights that have powered the company to record government contracts. Toyota was one of the first companies to focus on asking “why questions.” That firm tried to approach selling automobiles in a way different from the American auto giants. US firms were the world leaders when Toyota was cranking out cheap vehicles. The company pushed songs, communal exercise, and ideas different from the chrome trim crowd in Detroit; for example, humility, something called genchi genbutsu or go and see first hand, employee responsibility regardless of paygrade, continuous improvement (usually not adding chrome trim), and thinking beyond quarterly results. To an American, Mr. Toyoda’s ideas were nutso.

The write up reports:

Karp is a firm believer in the Five Whys, a simple system that aims to uncover the root cause of an issue that may not be immediately apparent. The process is straightforward. When an issue arises, someone asks, “Why?” Whatever the answer may be, they ask “why?” again and again until they have done so five times. “We have found is that those who are willing to chase the causal thread, and really follow it where it leads, can often unravel the knots that hold organizations back” …

The article adds this bit of color:

Palantir’s culture is almost as iconoclastic as its leader.

We have the Lord of the Rings, we have a Japanese auto company’s business method, and we have the CEO as an iconoclast.

Let’s think about this type of PR. Obviously Palantir and its formal and informal “leadership” want to be more than an outfit known for ending up in court as a result of a less-than-intelligent end run around an outfit whose primary market was law enforcement and intelligence professionals. Palantir is in the money or at least money from government contracts, and it rarely mentions its long march to today’s apparent success. The firm was founded in May 2003. After a couple of years, Palantir landed its first customer: The US Central Intelligence Agency.

The company ingested about $3 billion in venture funding and reported its first profitable quarter in 2022. That’s 19 years, one interesting legal dust up, and numerous attempts to establish long-term relationships with its “customers.” Palantir did some work for do-good outfits. It tried its hand at commercial projects. But the firm remained anchored to government agencies in the US and the UK.

But something was lacking. The article is part of a content marketing campaign to make the firm’s CEO a luminary among technology leaders. Thus, we have myth building blocks like the Five Whys. These are not exactly intellectual home runs. The method is not proprietary. The method breaks down in many engagements. People don’t know why something happened. Consultants or forward deployed engineers scurry around trying to figure out what’s going on. At some blue chip consulting firms, trotting out Toyota’s precepts as a way to deal with social media cyber security threats might result in the client saying, “No, thanks. We need a less superficial approach.”

I am not going to get a T shirt that says, “The knots that hold organizations back.” I favor

From my point of view, there are a couple of differences between Toyota in its “why” era and Palantir today; for instance, Toyota was into measured, mostly disciplined process improvement. Palantir is more like the “move fast, break things” Silicon Valley outfit. Toyota was reasonably transparent about its processes. I did see the lights out factory near the Tokyo airport which was off limits to Kentucky people like me. Palantir is in my mind associated with faux secrecy, legal paperwork, and those i2-related sealed documents.

Net net: Palantir’s myth making PR campaign is underway. I have no doubt it will work for many people. Good for them.

Stephen E Arnold, December x, 2025

China Smart US Dumb: An AI Content Marketing Push?

December 1, 2025

Another dinobaby post. No AI unless it is an image. This dinobaby is not Grandma Moses, just Grandpa Arnold.

I have been monitoring the China Smart, US Dumb campaign for some time. Most of the methods are below the radar; for example, YouTube videos featuring industrious people who seem to be similar to the owner of the Chinese restaurant not far from my office or posts on social media that remind me of the number of Chinese patents achieved each year. Sometimes influencers tout the wonders of a China-developed electric vehicle. None of these sticks out like a semi mainstream media push.

Thanks, Venice.ai, not exactly the hutong I had in mind but close enough for chicken kung pao in Kentucky.

However, that background “China Smart, US Dumb” messaging may be cranking up. I don’t know for sure, but this NBC News (not the Miss Now news) report caught my attention. Here’s the title:

The subtitle is snappier than Girl Fixes Generator, but you judge for yourself:

AI Startups Are Seeing Record Valuations, But Many Are Building on a Foundation of Cheap, Free-to-Download Chinese AI Models.

The write up states:

Surveying the state of America’s artificial intelligence landscape earlier this year, Misha Laskin was concerned. Laskin, a theoretical physicist and machine learning engineer who helped create some of Google’s most powerful AI models, saw a growing embrace among American AI companies of free, customizable and increasingly powerful “open” AI models.

We have a Xoogler who is concerned. What troubles the wizardly Misha Laskin? NBC News intones in a Stone Phillips tone:

Over the past year, a growing share of America’s hottest AI startups have turned to open Chinese AI models that increasingly rival, and sometimes replace, expensive U.S. systems as the foundation for American AI products.

Ever cautious, NBC News asserts:

The growing embrace could pose a problem for the U.S. AI industry. Investors have staked tens of billions on OpenAI and Anthropic, wagering that leading American artificial intelligence companies will dominate the world’s AI market. But the increasing use of free Chinese models by American companies raises questions about how exceptional those models actually are — and whether America’s pursuit of closed models might be misguided altogether.

Bingo! The theme is China smart and the US “misguided.” And not just misguided, but “misguided altogether.”

NBC News slams the point home with more force than the generator repairing Asian female closes the generator’s housing:

in the past year, Chinese companies like Deepseek and Alibaba have made huge technological advancements. Their open-source products now closely approach or even match the performance of leading closed American models in many domains, according to metrics tracked by Artificial Analysis, an independent AI benchmarking company.

I know from personal conversations that most of the people with whom I interact don’t care. Most just accept the belief that the US is chugging along. Not doing great. Not doing terribly. Just moving along. Therefore, I don’t expect you, gentle reader, to think much of this NBC News report.

That’s why the China Smart, US Dumb messaging is effective. But this single example raises the question, “What’s the next major messaging outlet to cover this story?”

Stephen E Arnold, December 1, 2025

AI ASICs: China May Have Plans for AI Software and AI Hardware

December 1, 2025

Another dinobaby original. If there is what passes for art, you bet your bippy, that I used smart software. I am a grandpa but not a Grandma Moses.

I try to avoid wild and crazy generalizations, but I want to step back from the US-centric AI craziness and ask a question, “Why is the solution to anticipated AI growth more data centers?” Data centers seem like a trivial part of the broader AI challenge to some of the venture firms, BAIT (big AI technology) companies, and some online pundits. A data center is a cheap building filled with racks of computers, some specialized gizmos, a connection to the local power company, and a handful of network engineers. Bingo. You are good to go.

But what happens if the compute is provided by Application-Specific Integrated Circuits or ASICs? When ASICs became available for crypto currency mining, individual or small-scale mining was no longer attractive. What happened is that large, industrialized crypto mining farms pushed out the individual miners or mom-and-pop data centers.

The Ghana ASIC roll out appears to have overwhelmed the person taking orders. Demand for cheap AI compute is strong. Is that person in the blue suit from Nvidia? Thanks, MidJourney. Good enough, the mark of excellence today.

Amazon, Google, and probably other BAIT outfits want to design their own AI chips. The problem is similar to moving silos of corn to a processing plant with a couple of pick up trucks. Capacity at chip fabrication facilities is constrained. Big chip ideas today may not be possible on the time scale set by the team designing NFL arena size data centers in Rhode Island- or Mississippi-type locations.

A Chinese startup founded by a former Google engineer claims to have created a new ultra-efficient and relatively low cost AI chip using older manufacturing techniques. Meanwhile, Google itself is now reportedly considering whether to make its own specialized AI chips available to buy. Together, these chips could represent the start of a new processing paradigm which could do for the AI industry what ASICs did for bitcoin mining.

What those ASICs did for crypto mining was shift calculations from individuals to large, centralized data centers. Yep, centralization is definitely better. Big is a positive as well.

The write up adds:

The Chinese startup is Zhonghao Xinying. Its Ghana chip is claimed to offer 1.5 times the performance of Nvidia’s A100 AI GPU while reducing power consumption by 75%. And it does that courtesy of a domestic Chinese chip manufacturing process that the company says is "an order of magnitude lower than that of leading overseas GPU chips." By "an order of magnitude lower," the assumption is that means well behind in technological terms given China’s home-grown chip manufacturing is probably a couple of generations behind the best that TSMC in Taiwan can offer and behind even what the likes of Intel and Samsung can offer, too.
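
A quick back-of-the-envelope check on those claimed numbers may help. The sketch below assumes both figures are measured against the same A100 baseline, which the write up does not state explicitly. The function name and parameters are hypothetical, made up for illustration, and the inputs are the vendor's claims, not measurements.

```python
def perf_per_watt_ratio(relative_performance: float, power_reduction: float) -> float:
    """Return performance per watt relative to a baseline chip.

    relative_performance: claimed speed as a multiple of the baseline (1.5 = 1.5x).
    power_reduction: claimed fractional cut in power draw (0.75 = 75% less power).
    Assumption: both claims refer to the same baseline and the same workload.
    """
    relative_power = 1.0 - power_reduction
    if relative_power <= 0:
        raise ValueError("power_reduction must be less than 1.0")
    return relative_performance / relative_power


# Claimed figures from the quoted passage: 1.5x the performance, 75% less power.
ratio = perf_per_watt_ratio(1.5, 0.75)
print(f"Implied performance per watt versus baseline: {ratio:.1f}x")
```

If the claims hold, the chip would deliver roughly six times the work per unit of power of the older Nvidia part. That is a big "if," since the figures come from the startup itself.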

The idea is that if these chips become widely available, they won’t be very good. Probably like the first Chinese BYD electric vehicles. But after some iterative engineering, the Chinese chips are likely to improve. If these improvements coincide with the turn on of the massive data centers the BAIT outfits are building, there might be rethinking required by the Silicon Valley wizards.

I will offer several observations, though probably no one other than me considers them warranted:

- China might subsidize its home grown chips. The Googler is not the only person in the Middle Kingdom trying to find a way around the US approach to smart software. Cheap wins or is disruptive until neutralized in some way.

- New data centers based on the Chinese chips might find customers interested in stepping away from dependence on a technology that most AI companies are using for “me too”, imitative AI services. Competition is good, says Silicon Valley, until it impinges on our business. At that point, tough-to-predict actions come into play.

- Nvidia and other AI-centric companies might find themselves trapped in AI strategies that are comparable to a large US aircraft carrier. These ships are impressive, but it takes time to slow them down, turn them, and steam in a new direction. If Chinese AI ASICs hit the market and improve rapidly, the captains of the US-flagged Transformer vessels will have their hands full and financial officers clamoring for the leadership’s attention.

Net net: Ponder this question: What is Ghana gonna do?

Stephen E Arnold, December 1, 2025