Technology and AI: A Good Enough and Opaque Future for Humans

August 9, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

“What Self Driving Cars Tell Us about AI Risks” provides an interesting view of smart software. I sensed two biases in the write up which I want to mention before commenting on the guts of the essay. The first bias is what I call “engineering blindspots.” The idea is that while flaws exist, technology gets better as wizards try and try again. The problem is that “good enough” may not lead to “better now” in a time measured by available funding. Therefore, the optimism engineers have for technology makes them blind to minor issues created by flawed “decisions” or “outputs.”

A technology wizard who took classes in ethics (got a gentleperson’s “C”), advanced statistics (got close enough to an “A” to remain a math major), and applied machine learning experiences a moment of minor consternation at a smart water treatment plant serving portions of New York City. The engineer looks at his monitor and says, “How did that concentration of 500 mg/L of chlorine get into the Newtown Creek Waste Water Treatment Plant?” MidJourney has a knack for capturing the nuances of an engineer’s emotions who ends up as a water treatment engineer, not an AI expert in Silicon Valley.

The second bias is that engineers understand inherent limitations. Non-engineers “lack technical comprehension,” and smart software at this time does not understand “the situation, the context, or any unobserved factors that a person would consider in a similar situation.” The idea is that techno-wizards have a superior grasp of a problem. The gap between an engineer and a user is a big one, and since comprehension gaps are not an engineering problem, that’s the techno-way.

You may disagree. That’s what makes allegedly honest horse races in which stallions don’t fall over dead or have to be terminated in order to spare the creature discomfort and the owners big fees.

Now what about the innards of the write up?

- Humans make errors. This begs the question, “Are engineers human in the sense that downstream consequences are important, require moral choices, and like the humorous medical doctor adage ‘Do no harm’?”

- AI failure is tough to predict? But predictive analytics, Monte Carlo simulations, and Fancy Dan statistical procedures like a humanoid setting a threshold because someone has to do it.

- Right now mathy stuff cannot replicate “judgment under uncertainty.” Ah, yes, uncertainty. I would suggest considering fear and doubt too. A marketing trifecta.

- Pay off that technical debt. Really? You have to be kidding. How much of the IBM mainframe’s architecture has changed in the last week, month, year, or (do I dare raise this issue) decade? How much of Google’s PageRank has been refactored to keep pace with the need to discharge advertiser paid messages as quickly as possible regardless of the user’s query? I know. Technical debt. Not an issue.

- AI raises “system level implications.” Did that Israeli smart weapon make the right decision? Did the smart robot sever a spinal nerve? Did the smart auto mistake a traffic cone for a child? Of course not. Traffic cones are not an issue for smart cars unless one puts some on the road and maybe one on the hood of a smart vehicle.

Net net: Are you ready for smart software? I know I am. At the AutoZone on Friday, two individuals were unable to replace the paper required to provide a customer with a receipt. I know. I watched for 17 minutes until one of the young professionals gave me a scrawled handwritten note with the credit card code transaction number. Good enough. Let ‘er rip.

Stephen E Arnold, August 9, 2023

Someone Is Thinking Negatively and Avoiding Responsibility

August 9, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I have no idea how old the “former journalist” who wrote “I Feel Like an Old Shoe: Workers Feel Degraded and Cast Aside Because of Ageism” is. Let’s consider a couple of snippets. Then I will offer several observations which demonstrate my lack of sympathy for individuals who want to blame their mental state on others. Spoiler: Others don’t care about anyone but themselves in my experience.

A high school student says to her teacher, “You are the reason I failed this math test. If you were a better teacher, I would have understood the procedure. But, no. You were busy focusing on the 10 year old genius who transferred into our class from Wuhan.” Baffled, the teacher says, “It is your responsibility to learn. There is plenty of help available from me, your classmates, or your tutor, Mr. Rao. You have to take responsibility and stop blaming others for what you did.” Thanks, MidJourney. Were you, by chance, one of those students who blame others for your faults?

Here’s a statement I noted:

“Employers told me individuals over 45 and particularly those over the age of 55 must be ‘exceptional’ in order to be hired. The most powerful finding for me however had to do with participants [of a survey] explaining that once they were labeled ‘old,’ they felt degraded and cast aside. One person told me, ‘I feel like an old shoe that’s of no use any more.’”

Okay, blame the senior managers, some of whom will be older, maybe old-age home grade like Warren Buffett or everyone’s favorite hero, Indiana Jones (Harrison Ford), or possibly Mr. Biden. Do these people feel old and like an old shoe? I suppose, but they put on a good show. Are these people exceptional? Sure, why not label them as such. My point is that they persevere.

Now this passage from the write up:

Over all, there are currently about the same number of younger and older workers. Nevertheless, the share of older workers has increased for almost all occupations.

These data originate from Statistics Canada. For my purposes, let’s assume that Canada data are similar to US data. If an older worker feels like an “old shoe,” perhaps a personal version of the two slit experiment is in operation. The observer alters the reality. What this means is that when the worker looks at himself or herself, the reality is fiddled. Toss in some emotional baggage, like a bad experience in kindergarten, and one can make a case for “they did this to me.”

My personal view is that some radical empiricism may be helpful to those who are old and want to blame others for their perceived status, their prospects, or their personal situation.

I am not concerned about my age. I am going to be 79 in a few weeks. I am proud to be a dinobaby, a term coined by someone at IBM I have heard to refer to the deadwood. The idea was that “old” meant high salary and often an informed view of a business or technical process. Younger folks wanted to outsource and salary, age, and being annoying in meetings were convenient excuses for cost reduction.

I am working on a project for an AI outfit. I have a new book (which is for law enforcement professionals, not the humilus genus). I have a keynote speech to deliver in October 2023. In short, I keep doing what I have been doing since I left a PhD program to work for that culturally sensitive outfit which helped provide technical services to those who would make bombs and other oddments.

If a person in my lecture comes up to me and says, “I disagree,” I listen. I don’t whine, make excuses, or dodge the comment. I deal with it to the best of my ability. I am not going to blame anything or anyone for my age or my work product. People who grouse are making clear to me that they lack the mental wiring to provide immediate and direct problem solving skills and to be spontaneously helpful.

Sorry. The write up is not focusing on the fix which is inside the consciousness of the individuals who want to blame others for their plight in life.

Stephen E Arnold, August 7, 2023

Research 2023: Is There a Methodology of Control via Mendacious Analysis

August 8, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I read “How Facebook Does (and Doesn’t) Shape Our Political Views.” I am not sure what to conclude from the analysis of four studies about Facebook (is it Zuckbook?) presumably completed with some cooperation from the social media giant itself. The message I carried away from the write up is that tainted research may be the principal result of these supervised studies.

The up and coming leader says to a research assistant, “I am telling you. Manipulate or make up the data. I want the results I wrote about supported by your analysis. If you don’t do what I say, there will be consequences.” MidJourney does know how to make a totally fake leader appear intense.

Consider the state of research in 2023. I have mentioned the problem with Stanford University’s president and his making up data. I want to link the Stanford president’s approach to research with Facebook (Meta). The university has had some effect on companies in the Silicon Valley region. And Facebook (Meta) employs a number of Stanford graduates. For me, then, it is logical to consider the approach of the university to objective research and the behavior of a company with some Stanford DNA to share certain characteristics.

“How Facebook Does (and Doesn’t) Shape Our Political Views” offers this observation based on “research”:

“… the findings are consistent with the idea that Facebook represents only one facet of the broader media ecosystem…”

The summary of the Facebook-chaperoned research cites an expert who correctly, in my opinion, identifies two challenges presented by the “research”:

- Researchers don’t know what questions to ask. I think this is part of the “don’t know what they don’t know.” I accept this idea because I have witnessed it. (Example: A reporter asking me about sources of third party data used to spy on Americans. I ignored the request for information and disconnected from the reporter’s call.)

- The research was done on Facebook’s “terms”. Yes, powerful people need control; otherwise, the risk of losing power is created. In this case, Facebook (Meta) wants to deflect criticism and try to make clear that the company’s hand was not on the control panel.

Are there parallels between what the fabricating president of Stanford did with data and what Facebook (Meta) has done with its research initiative? Shaping the truth is common to both examples.

In Stanford’s Ideal Destiny, William James said this about Stanford:

It is the quality of its men that makes the quality of a university.

What links the actions of Stanford’s soon-to-be-former president and Facebook (Meta)? My answer would be, “Creating a false version of objective data is the name of the game.” Professor James, I surmise, would not be impressed.

Stephen E Arnold, August 8, 2023

Another High School Tactic: I Am Hurt, Coach

August 7, 2023

This is a rainy Monday (August 7, 2023). From my point of view, the content flowing across my monitoring terminal is not too exciting. More security issues, 50-50 financial rumor mongering, and adult Internet users may be monitored (the world is coming to an end!). But in the midst of this semi-news was an item called “Musk Says He May Need Surgery, Will Get MRI on Back and Neck.” Wow. The ageing icon of self-driving autos which can run over dinobabies like me has dipped into his management Book of Knowledge for a tactic to avoid a “cage match” with the lovable Zuck, master of Threads and beloved US high-technology social media king thing.

“What do you mean, your neck hurts? I need you for the big game on Saturday. Win and you will be more famous than any other wizard with smart cars, rockets, and a social media service,” says the assistant coach. Thanks MidJourney, you are a sport.

You can get the information from the cited story, which points out:

The world’s richest person said he will know this week whether surgery will be required, ahead of his proposed cage fight with Meta Platforms Inc. co-founder Mark Zuckerberg. He previously said he “might need an operation to strengthen the titanium plate holding my C5/C6 vertebrae together.”

Mr. Zuckerberg allegedly is revved and ready. The write up reports:

Zuckerberg posted Sunday on Threads that he suggested Aug. 26 for the match and he’s still awaiting confirmation. “I’m ready today,” he said. “Not holding my breath.”

From my point of view, the tactic is similar to “the dog ate my homework.” This variant — I couldn’t do my homework because I was sick — comes directly from the Guide to the High School Science Club Management Method, known internationally as GHSSCMM. The information in this well-known business manual has informed outstanding decision making in personnel methods (Dr. Timnit Gebru, late of Google), executives giving themselves more money before layoffs (too many companies to identify in a blog post like this), and appearing in US Congressional hearings (Thank you for the question. I don’t know. I will have the information delivered to your office).

Health problems can be problematic. Will the cage match take place? What if Mr. Musk says, “I can fight.” Will Mr. Zuckerberg respond, “I sprained my ankle”? What does the GHSSCMM suggest in a tit-for-tat dynamic?

Perhaps we should ask both Mr. Musk’s generative AI system and the tame Zuckerberg LLAMLA? That’s “real” news.

Stephen E Arnold, August 7, 2023

MBAs, Lawyers, and Sociology Majors Lose Another Employment Avenue

August 4, 2023

Note: Dinobaby here: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid. Services are now ejecting my cute little dinosaur gif. Like my posts related to the Dark Web, the MidJourney art appears to offend someone’s sensibilities in the datasphere. If I were not 78, I might look into these interesting actions. But I am and I don’t really care.

Some days I find MBAs, lawyers, and sociology majors delightful. On others I fear for their future. One promising avenue of employment has now been cut off. What’s the job? Avocado peeler in an ethnic restaurant. Some hearty souls channeling Euell Gibbons may eat these as nature delivers them. Others prefer a toast delivery vehicle or maybe a dip to accompany a meal in an ethnic restaurant or while making a personal vlog about the stresses of modern life.

“Chipotle’s Autocado Robot Can Prep Avocados Twice as Fast as Humans” reports:

The robot is capable of peeling, seeding, and halving a case of avocados significantly faster than humans, and the company estimates it could cut its typical 50-minute guacamole prep time in half…

When an efficiency expert from a McKinsey-type firm or a second tier thinker from a mid-tier consulting firm reads this article, there is one obvious line of thought the wizard will follow: Replace some of the human avocado peelers with a robot. Projecting into the future while under the influence of spreadsheet fever, an upgrade to the robot’s software will enable it to perform other jobs in the restaurant or food preparation center; for example, taco filler or dip crafter.

Based on this actual factual write up, I have concluded that some MBAs, lawyers, and sociology majors will have to seek another pathway to their future. Yard sale organizer, pet sitter, and possibly the life of a hermit remain viable options. Oh, the hermit will have GoFundMe and BuyMeaCoffee pages. Perhaps a T shirt or a hat?

Stephen E Arnold, August 4, 2023

Google Relies on People Not Perceiving a Walled Garden

August 3, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I want to keep this brief. I have been, largely without effect, explaining Google’s foundational idea of a walled garden people will perceive as the digital world. Once in the garden, why leave?

A representation of the Hotel California’s walled garden. Why bother to leave? The walled garden is the entire digital world. I wish MidJourney would have included the neon sign spelling out “Hotel California” or “Googleplex.” But no, no, no. Smart software has guard rails.

Today (August 3, 2023), I want to point to two different write ups which explain bafflement at what Google is doing.

The first is a brief item from Mastodon labeled (I think) “A List of Recent Hostile Moves by Google’s Chrome Team.” The write up points out Google’s attempt to become the gatekeeper for content. Another is content blocking. And there is action to undermine an image format. Hacker News presents several hundred comments which say to me, “Why is Google doing this? Google is supposed to be a good company.” Imagine. Read these comments at this link. Amazing, at least to me!

The second item is from a lawyer. The article is “Google’s Plan To DRM The Web Goes Against Everything Google Once Stood For.” Please, read the write up yourself. What’s remarkable is the point of view expressed in this phrase “everything Google once stood for.” Lawyers are a fascinating branch of professional advice givers. I am fearful of this type of thinking; therefore, I try to stay as far away from attorneys as I can.

Let’s step back.

In one of my monographs about Google (research funded by commercial enterprises) which contain some of the information my clients did not deem sensitive, I depicted Sergey Brin as a magician with fire in his hand. From the beginning of Google’s monetization via advertising, misdirection and keeping the audience amazed were precepts of the company. Today’s Google is essentially running what is now a 25 year old game plan. Why not? Advertising “inspired” by Yahoo-GoTo-Overture’s pay-for-traffic model generates almost 70 percent of the company’s revenue. With advertising under pressure, the Google has to amp up its controls. Those relevant ads displayed to me for feminine-centric products reflect the efficacy of the precision ad matching, right?

Think about the walled garden metaphor. Think about the magician analogy. Now think about what Google will do to extend and influence the world around it. Exciting for young people who view the world through eyes which have only seen what the walled garden offers. If TikTok goes away, Google has its version with influencers and product placements. What’s not to like about Google News, Gmail, or Android? Most people find nothing untoward, and altering those perceptions may be difficult.

The long view is helpful when one extends control a free service at a time. And relevance of Google’s search results? The results are relevant because the objective is ad revenue and messaging on Googley things. An insect in the walled garden does not know much about other gardens and definitely nothing about an alternative.

Stephen E Arnold, August 3, 2023

Building Trust a Step at a Time the Silicon Valley Way

August 2, 2023

Note: Dinobaby here: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid. Services are now ejecting my cute little dinosaur gif. Like my posts related to the Dark Web, the MidJourney art appears to offend someone’s sensibilities in the datasphere. If I were not 78, I might look into these interesting actions. But I am and I don’t really care.

I know that most companies are not really “in” Silicon Valley. It is a figure of speech to put certain firms and their business practices in a basket. I then try to find something useful to say about the basket. Please, feel free to disagree. Just note that I am a dinobaby, and I am often reluctant to discard my perceptions. Look at the positive side of this mental orientation: If and when you are 78, you too can analyze from a perspective guaranteed to be different from the 20-somethings who are the future of lots of stuff; for example, who gets medical care. Think about that.

I read “Meta, Amazon and Microsoft team up to take down Google Maps.” The source is a newspaper which I label “estimable tabloid.” The story caught my attention because it identified what this dinobaby perceives as collusion among commercial enterprises. My hunch is that some people living outside the US might label the business approach differently. Classification debates are the most fun when held at information science trade shows. Vendors explain why their classification system is the absolute best way to put information in baskets. Exciting.

So my basket contains three outfits which want to undermine or get the biggest piece of the map action that Google controls. None of these outfits are monopolistic. If they were, the Cracker Jack US government agency charged with taking action against outfits that JP Morgan would definitely want to own would not lose its cases against a few big tech outfits.

The article explains:

The Overture Maps Foundation is an open initiative, making it a compelling alternative to Google Maps for app and software-makers. Google Maps takes a closed approach, giving Google greater control over how its map data is used and implemented. It also charges app developers for access to Google Maps, based on how many times the app’s users call on mapping info.

I wonder if this suggests that Overture Maps is different from the Google?

The article points out:

Overture Maps Foundation was established in 2022, as a partnership between Amazon AWS, Microsoft, Meta and TomTom. Its aim is “to create the smartest map on the planet,” according to TomTom VP of Engineering Mike Harrell. It also aims to make sure all your map needs in the future won’t be supplied by either Google or Apple.

I interpret this passage to mean that three big dogs want to team up to become a bigger dog. The consequence of being a bigger dog is that it can chow down on the goodies destined for the behemoths Apple and Google.

Viewed from the perspective of a consumer, I see this as an attempt for three “competitors” to team up and go after Apple and Google. Each company has a map business. Viewed as a person who worked in government entities for a number of years, I can interpret this tie up as a way to get some free meals and maybe a boondoggle from one or more lobbyists working on behalf of these firms. Viewed as someone concerned about the ineffectual nature of US regulation of the activities of big tech, I am more inclined than ever to use a paper map.

Will the Silicon Valley “way” work in other nation states? Probably not. China has taken steps to manage some of these super duper outfits within their borders. The European Union is skeptical of many big tech, Silicon Valley ideas. But at the end of the fiscal year, will objections matter?

I know what my answer is. Grab that paper map. We’re going on a road trip with location tracking and selective information whether I like it or not. I think I trust my paper map.

Stephen E Arnold, August 2, 2023

Meta Being Meta: Move Fast and Snap Threads

July 31, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

I want to admit that as a dinobaby I don’t care about [a] Barbie, [b] X [pronounced “ech” or “yech”], twit, or tweeter, [c] Zuckbook, meta-anything, or broken threads. Others seem to care deeply. The chief TWIT (Leo Laporte), who is valiantly trying to replicate the success of the non-advertising “value for value” model for podcasting, cares about the Tweeter. He can be the one and only TWIT; the Twitter is now X [pronounced “ech” or “yech”], a delightful letter which evokes a number of interesting Web sites when auto-fill is relying on click popularity for relevance. Many of those younger than I care about the tweeter; for instance, with Twitter as a tailwind, some real journalists were able to tell their publisher employers, “I am out of here.” But with the tweeter in disarray does an opportunity exist for the Zuck to cause the tweeter to eXit?

A modern god among mortals looks at the graffito on the pantheon. Anger rises. Blood lust enflames the almighty. Then digital divinity savagely snarls, “Attack now. And render the glands from every musky sheep-ox in my digital domain. Move fast, or you will spend one full day with Cleggus Bombasticus. And you know that is sheer sheol.” [Ah, alliteration. But what is “sheol”?]

Plus, I can name one outfit interested in the Musky Zucky digital cage match, the Bezos bulldozer’s “real” news machine. I read “Move Fast and Beat Musk: The Inside Story of How Meta Built Threads,” which was ground out by the spinning wheels of “real” journalists. I would have preferred a different title; for instance my idea is in italics, Move fast and zuck up! but that’s just my addled view of the world.

The WaPo write up states:

Threads drew more than 100 million users in its first five days — making it, by some estimations, the most successful social media app launch of all time. Threads’ long-term success is not assured. Weeks after its July 5 launch, analytics firms estimated that the app’s usage dropped by more than half from its early peak. And Meta has a long history of copycat products or features that have failed to gain traction…

That’s the story. Take advantage of the Musker’s outstanding management to create a business opportunity for a blue belt in an arcane fighting method. Some allegedly accurate data suggest that “Most of the 100 million people who signed up for Threads stopped using it.”

Why would usage allegedly drop?

The Bezos bulldozer “real” news system reports:

Meta’s [Seine] Kim responded, “Our industry leading integrity enforcement tools and human review are wired into Threads.”

Yes, a quote to note.

Several observations:

- Threads arrived with the baggage of Zuckbook. Early sign ups decided to not go back to pick up the big suitcases.

- The persistence of users to send IXXes (pronounced ech, a sound similar to the “etch” in retch) illustrates one of Arnold’s Rules of Online: Once an online habit is formed, changing behavior may be impossible without just pulling the plug. Digital addiction is a thing.

- Those surfing on the tweeter to build their brand are loath to admit that without the essentially free service their golden goose is queued to become one possibly poisonous Chicken McNugget.

Snapped threads? Yes, even those wrapped tightly around the Musker. Thus, I find one of my co-worker’s quips apt: “Move fast and zuck up.”

Stephen E Arnold, July 31, 2023

Google: Running the Same Old Game Plan

July 31, 2023

Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

Google has been running the same old game plan since the early 2000s. But some experts are unaware of its simplicity. In the period from 2002 to 2004, I did a number of reports for my commercial clients about Google. In 2004, I recycled some of the research and analysis into The Google Legacy. The thesis of the monograph, published in England by the now defunct Infonortics Ltd., explained that the infrastructure for search was enhanced to provide an alternative to commercial software for personal, business, and government use. The idea that a search-and-retrieval system based on precedent technology and funded in part by the National Science Foundation with a patent assigned to Stanford University could become Googzilla was a difficult idea to swallow. One of the investment banks who paid for our research got the message even though others did not. I wonder if that one group at the then world’s largest software company remembers my lecture about the threat Google posed to a certain suite of software applications? Probably not. The 20 somethings and the few suits at the lecture looked like kindergarteners waiting for recess.

I followed up The Google Legacy with Google Version 2.0: The Calculating Predator. This monograph was again based on proprietary research done for my commercial clients. I recycled some of the information, scrubbing that which was deemed inappropriate for anyone to buy for a few British pounds. In that work, I rather methodically explained that Google’s patent documents provided useful information about why the mere Web search engine was investing in what seemed like odd-ball technologies like software janitors. I reworked one diagram to show how the Google infrastructure operated like a prison cell or walled garden. The idea is that once one is in, one may have to work to get past the gatekeeper to get out. I know the image from a book does not translate to a blog post, but, truth be told, I am disinclined to recreate art. At age 78, it is often difficult to figure out why smart drawing tools are doing what they want, not what I want.

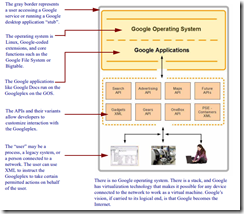

Here’s the diagram:

The prison cell or walled garden (2006) from Google Version 2.0: The Calculating Predator, published by Infonortics Ltd., 2006. And for any copyright trolls out there, I created the illustration 20 years ago, not Alamy and not Getty and no reputable publisher.

Three observations about the diagram are: [a] The box, prison cell, or walled garden contains entities, [b] once “in” there is a way out but the exit is via Google intermediated, defined, and controlled methods, and [c] anything in the walled garden perceives that the prison cell is the outside world. The idea obviously is for Google to become the digital world which people will perceive as the Internet.

I thought about my decades old research when I read “Google Tries to Defend Its Web Environment Integrity as Critics Slam It as Dangerous.” The write up explains that Google wants to make online activity better. In the comments to the article, several people point out that Google is using jargon and fuzzy misleading language to hide its actual intentions with the WEI.

The critics and the write up miss the point entirely: Look at the diagram. WEI, like the AMP initiative, is another method added to existing methods for Google to extend its hegemony over online activity. The patent, implement, and explain approach drags out over years. Attention spans, even for academics who make up data like the president of Stanford University, are not interested in anything other than personal goal achievement. Finding out something visible for years is difficult. When some interesting factoid is discovered, few accept it. Google has a great brand, and it cares about user experience and the other fog the firm generates.

MidJourney created this nice image of a Googler preparing for a presentation to the senior management of Google in 2001. In that presentation, the wizard was outlining Google’s fundamental strategy: Fake left, go right. The slogan for the company, based on my research, is “keep them fooled.” Looking the wrong way is the basic rule of being a successful Googler, strategist, or magician.

Will Google WEI win? It does not matter because Google will just whip up another acronym, toss some verbal froth around, and move forward. What is interesting to me is Google’s success. Points I have noted over the years are:

- Kindergarten colors, Google mouse pads, and talking like General Electric once did about “bringing good things” continue to work

- Google’s dominance is not just accepted; changing or blocking anything Google wants to do is sacrilegious. It has become a sacred digital cow

- The inability of regulators to see Google as it is remains a constant, like Google’s advertising revenue

- Certain government agencies could not perform their work if Google were impeded in any significant way. No, I will not elaborate on this observation in a public blog post. Don’t even ask. I may make a comment in my keynote at the Massachusetts / New York Association of Crime Analysts’ conference in early October 2023. If you can’t get in, you are out of luck getting information on Point Four.

Net net: Fire up your Chrome browser. Look for reality in the Google search results. Turn cartwheels to comply with Google’s requirements. Pay money for traffic via Google advertising. Learn how to create good blog posts from Google search engine optimization experts. Use Google Maps. Put your email in Gmail. Do the Google thing. Then ask yourself, “How do I know if the information provided by Google is ‘real’?” Just don’t get curious about synthetic data for Google smart software. Predictions about Big Brother are wrong. Google, not the government, is the digital parent whom you embraced after a good “Backrub.” Why change from high school science thought processes? If it ain’t broke, don’t fix it.

Stephen E Arnold, July 31, 2023

Digital Delphis: Predictions More Reliable Than Checking Pigeon Innards, We Think

July 28, 2023

![](http://arnoldit.com/wordpress/wp-content/uploads/2023/07/Vea4_thumb_thumb_thumb_thumb_thumb_t1_thumb-52.gif) Note: This essay is the work of a real and still-alive dinobaby. No smart software involved, just a dumb humanoid.

One of the many talents of today’s AI is apparently a bit of prophecy. Interconnected examines “Computers that Live Two Seconds in the Future.” Blogger Matt Webb pulls together three examples that, to him, represent an evolution in computing.

The AI computer, a digital Delphic oracle, gets some love from its acolytes. One engineer says, “Any idea why the system is hallucinating?” The other engineer replies, “No clue.” MidJourney shows some artistic love to hard-working, trustworthy computer experts.

His first digital soothsayer is Apple’s Vision Pro headset. This device, billed as a “spatial computing platform,” takes melding the real and virtual worlds to the next level. To make interactions as realistic as possible, the headset predicts what a user will do next by reading eye movements and pupil dilation. The Vision Pro even flashes visuals and sounds so fast as to be subliminal and interprets the eyes’ responses. Ingenious, if a tad unsettling.

The next example addresses a very practical problem: WavePredictor from Next Ocean helps with loading and unloading ships by monitoring wave movements and extrapolating the next few minutes. Very helpful for those wishing to avoid cargo sloshing into the sea.

Finally, Webb cites a development that both excites and frightens programmers: GitHub Copilot. Though some coders worry this and similar systems will put them out of a job, others see it more as a way to augment their own brilliance. Webb paints the experience as a thrilling bit of time travel:

“It feels like flying. I skip forwards across real-time when writing with Copilot. Type two lines manually, receive and get the suggestion in spectral text, tab to accept, start typing again… OR: it feels like reaching into the future and choosing what to bring back. It’s perhaps more like the latter description. Because, when you use Copilot, you never simply accept the code it gives you. You write a line or two, then like the Ghost of Christmas Future, Copilot shows you what might happen next – then you respond to that, changing your present action, or grabbing it and editing it. So maybe a better way of conceptualizing the Copilot interface is that I’m simulating possible futures with my prompt then choosing what to actualize. (Which makes me realize that I’d like an interface to show me many possible futures simultaneously – writing code would feel like flying down branching time tunnels.)”

Gnarly dude! But what does all this mean for the future of computing? Even Webb is not certain. Considering operating systems that can track a user’s focus, geographic location, communication networks, and augmented reality environments, he writes:

“The future computing OS contains of the model of the future and so all apps will be able to anticipate possible futures and pick over them, faster than real-time, and so… …? What happens when this functionality is baked into the operating system for all apps to take as a fundamental building block? I don’t even know. I can’t quite figure it out.”

Us either. Stay tuned, dear readers. Oh, let’s assume the wizards get the digital Delphic oracle outputting the correct future. You know, the future that cares about humanoids.

Cynthia Murrell, July 28, 2023