ACM: Good Defense or a Business Play?

March 8, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Professional publishers want to use the trappings of peer review, standards, tradition, and quasi academic hoo-hah to add value to their products; others want a quasi-monopoly. Think public legal filings and the stuff in a high school chemistry book. The customers of professional publishers are typically not the folks at the pizza joint on River Road in Prospect, Kentucky. The business of professional publishing is an interesting one, but in the wild and crazy world of collapsing next-gen publishing, professional publishing is often ignored. A publisher conference aimed at professional publishers is quite different from the Jazz Age South by Southwest shindig.

Yep, free. Thanks, MSFT Copilot. How’s that security today?

But professional publishers have been in the news. Examples include the dust up about academics making up data. The big time president of the much-honored Stanford University took intellectual short cuts and quit late last year. Then there was some nasty issue about data and bias at the esteemed Harvard University. Plus, a number of bookish types have guess-timated that a hefty percentage of research studies contain made-up data. Hey, you gotta publish to get tenure or get a grant, right?

But there is an intruder in the basement of the professional publishing club. The intruder positions itself in the space between the making up of some data and the professional publishing process. That intruder is ArXiv, an open-access repository of electronic preprints and postprints (known as e-prints) approved for posting after moderation, according to Wikipedia. (Wikipedia is the cancer which killed the old-school encyclopedias.) Plus, there are services which offer access to professional content without paying for the right to host the information. I won’t name these services because I have no desire to have legal eagles circle about my semi-functioning head.

Why do I present this grade-school level history? I read “CACM Is Now Open Access.” Let’s let the Association for Computing Machinery explain its action:

For almost 65 years, the contents of CACM have been exclusively accessible to ACM members and individuals affiliated with institutions that subscribe to either CACM or the ACM Digital Library. In 2020, ACM announced its intention to transition to a fully Open Access publisher within a roughly five-year timeframe (January 2026) under a financially sustainable model. The transition is going well: By the end of 2023, approximately 40% of the ~26,000 articles ACM publishes annually were being published Open Access utilizing the ACM Open model. As ACM has progressed toward this goal, it has increasingly opened large parts of the ACM Digital Library, including more than 100,000 articles published between 1951–2000. It is ACM’s plan to open its entire archive of over 600,000 articles when the transition to full Open Access is complete.

The decision was not an easy one. Money issues rarely are.

I want to step back and look at this interesting change from a different point of view:

- Getting a degree today is less of a must have than when I was a wee dinobaby. My parents told me I was going to college. Period. I learned how much effort was required to get my hands on academic journals. I was a master of knowing that Carnegie-Mellon had new but limited bound volumes of certain professional publications. I knew what journals were at the University of Pittsburgh. I used these resources when the Duquesne Library was overrun with the faithful. Now “researchers” can zip online and whip up astonishing results. Google-type researchers prefer the phrase “quantumly supreme results.” This social change is one factor influencing the ACM.

- Stabilizing revenue streams means pulling off a magic trick. Sexy conferences and special events complement professional association membership fees. Reducing costs means knocking off the now, very very expensive printing, storing, and shipping of physical journals. The ACM seems to have figured out how to keep the lights on and the computing machine types spending.

- ACM members can use ACM content the way they do a pirate library’s or the feel good ArXiv outfit. The move helps neutralize discontent among the membership, and it is good PR.

These points raise a question; to wit: In today’s world, how relevant will a professional association and its professional publications be going forward? The ACM states:

By opening CACM to the world, ACM hopes to increase engagement with the broader computer science community and encourage non-members to discover its rich resources and the benefits of joining the largest professional computer science organization. This move will also benefit CACM authors by expanding their readership to a larger and more diverse audience. Of course, the community’s continued support of ACM through membership and the ACM Open model is essential to keeping ACM and CACM strong, so it is critical that current members continue their membership and authors encourage their institutions to join the ACM Open model to keep this effort sustainable.

Yep, surviving in a world of faux expertise.

Stephen E Arnold, March 8, 2024

Engineering Trust: Will Weaponized Data Patch the Social Fabric?

March 7, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Trust is a popular word. Google wants me to trust the company. Yeah, I will jump right on that. Politicians want me to trust their attestations that citizen interests are important. I worked in Washington, DC, for too long. Nope, I just have too much first-hand exposure to the way “things work.” What about my bank? It wants me to trust it. But isn’t the institution the subject of a couple of government investigations? Oh, not important. And what about the images I see when I walk gingerly between the guard rails? I trust them, right? Ho ho ho.

In our post-Covid, pre-US national election era, the word “trust” is carrying quite a bit of freight. Whom do I trust? Not too many people. What about good old Socrates, who was an Athenian when Greece was not yet a collection of ferocious football teams and sun seekers? As you may recall, he trusted fellow residents of Athens. He ended up dead from either a lousy snack bar meal and beverage, or his friends did him in.

One of his alleged precepts in his pre-artificial intelligence world was:

“We cannot live better than in seeking to become better.” — Socrates

Got it, Soc.

Thanks MSFT Copilot and provider of PC “moments.” Good enough.

I read “Exclusive: Public Trust in AI Is Sinking across the Board.” Then I thought about Socrates being convicted for corruption of youth. See. Education does not bring unlimited benefits. Apparently Socrates asked annoying questions which opened him to charges of impiety. (Side note: Hey, Socrates, go with the flow. Just pray to the carved mythical beast, okay?)

A loss of public trust? Who knew? I thought it was common courtesy, a desire to discuss and compromise, not whip out a weapon and shoot, bludgeon, or stab someone to death. In the case of Haiti, a twist is that a victim is bound and then barbequed in a steel drum. Cute and, to me, a variation of stacking seven tires in a pile, dousing them with gasoline, inserting a person, and igniting the combo. I noted a variation in Ukraine. Elderly women make cookies laced with poison and provide them to special operation fighters. Subtle and effective due to troop attrition, I hear. Should I trust US Girl Scout cookies? No thanks.

What’s interesting about the write up, which provides statistics to back up this brilliant and innovative insight about modern life, is its focus on artificial intelligence. Let me pluck several examples from the dot point filled write up:

- “Globally, trust in AI companies has dropped to 53%, down from 61% five years ago.”

- “Trust in AI is low across political lines. Democrats trust in AI companies is 38%, independents are at 25% and Republicans at 24%.”

- “Eight years ago, technology was the leading industry in trust in 90% of the countries Edelman studies. Today, it is the most trusted in only half of countries.”

AI is trendy; crunchy click bait is highly desirable even for an estimable survivor of Silicon Valley style news reporting.

Let me offer several observations which may either be troubling or typical outputs from a dinobaby working in an underground computer facility:

- Close knit groups are more likely to have some concept of trust. The exception, of course, is the behavior of the Hatfields and McCoys.

- Outsiders are viewed with suspicion. Often for no reason, a newcomer becomes the default bad entity.

- In my lifetime, I have watched institutions take actions which erode trust on a consistent basis.

Net net: Old news. AI is not new. Hyperbole and click obsession are factors which illustrate the erosion of social cohesion. Get used to it.

Stephen E Arnold, March 7, 2024

Philosophy and Money: Adam Smith Remains Flexible

March 6, 2024

This essay is the work of a dumb dinobaby. No smart software required.

In the early twenty-first century, China was slated to overtake the United States as the world’s top economy. Unfortunately for the “sleeping dragon,” China’s economy has tanked due to many factors. The country, however, still remains a strong spot for technology development such as AI and chips. The Register explains why China is still doing well in the tech sector: “How Did China Get So Good At Chips And AI? Congressional Investigation Blames American Venture Capitalists.”

Venture capitalists are always interested in increasing their wealth and subverting anything preventing that. While the US government has choked China’s semiconductor industry and denied it the use of tools to develop AI, venture capitalists are funding those sectors. The US’s House Select Committee on the Chinese Communist Party (CCP) shared that five venture capitalists are funneling billions into these two industries: Walden International, Sequoia Capital, Qualcomm Ventures, GSR Ventures, and GGV Capital. Chinese semiconductor and AI businesses are linked to human rights abuses and the People’s Liberation Army. These five venture capitalist firms don’t appear interested in respecting human rights or preventing the spread of communism.

The House Select Committee on the CCP discovered that $1.9 million went to AI companies that support China’s mega-surveillance state and aided in the Uyghur genocide. The US blacklisted these AI-related companies. The committee also found that $1.2 billion was sent to 150 semiconductor companies.

The committee also accused the venture capitalists of sharing more than funding with China:

“The committee also called out the VCs for "intangible" contributions – including consulting, talent acquisition, and market opportunities. In one example highlighted in the report, the committee singled out Walden International chairman Lip-Bu Tan, who previously served as the CEO of Cadence Design Systems. Cadence develops electronic design automation software which Chinese corporates, like Huawei, are actively trying to replicate. The committee alleges that Tan and other partners at Walden coordinated business opportunities and provided subject-matter expertise while holding board seats at SMIC and Advanced Micro-Fabrication Equipment Co. (AMEC).”

Sharing knowledge and business connections is as bad as (if not worse than) funding China’s tech sector. It’s like providing instructions and resources on how to build a nuclear weapon. If China only had the resources, it wouldn’t be as frightening.

Whitney Grace, March 6, 2024

Synthetic Data: From Science Fiction to Functional Circumscription

March 4, 2024

This essay is the work of a dumb humanoid. No smart software required.

Synthetic data are information produced by algorithms, not by real-world events. They are created using real-world data and numerical recipes. The appeal is that the approach is easier than collecting real life information, cheaper than dealing with data from real life, and faster than fooling around with surveys, monitoring devices, and lawsuits. In theory, synthetic data is one promising way of skirting the expense of getting humans involved.

“What Is [a] Synthetic Sample – And Is It All It’s Cracked Up to Be?” tackles the subject of a synthetic sample, a topic which is one slice of the synthetic data universe. The article seeks “to uncover the truth behind artificially created qualitative and quantitative market research data.” I am going to avoid the question, “Is synthetic data useful?” because the answer is, “Yes.” Bean counters and those looking to find a way out of the pickle barrel filled with expensive brine are going to chase after the magic of algorithms producing data to do some machine learning magic.

In certain situations, fake flowers are super. Other times, the faux blooms are just creepy. Thanks, MSFT Copilot Bing thing. Good enough.

Are synthetic data better than real world data? The answer from my vantage point is, “It depends.” Fancy math can prove that for some use cases, synthetic data are “good enough”; that is, the data produce results close enough to what a “real” data set provides. Therefore, just use synthetic data. But for other applications, synthetic data might throw some sand in the well-oiled marketing collateral describing the wonders of synthetic data. (Some university research labs are quite skilled in PR speak, but the reality of their methods may not line up with the PowerPoints used to raise venture capital.)

This essay discusses a research project to figure out if a synthetic sample works or in my lingo if the synthetic sample is good enough. The idea is that as long as the synthetic data is within a specified error range, the synthetic sample can be used and may produce “reliable” or useful results. (At least one hopes this is the case.)
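The “within a specified error range” idea can be made concrete with a few lines of Python. This is only a minimal sketch under stated assumptions: the survey answers are made up, the function name `within_tolerance` is hypothetical, and comparing sample means is just one simple yardstick, not the method used in the Kantar research.

```python
from statistics import mean


def within_tolerance(real, synthetic, tolerance):
    """Return True when the synthetic mean is within `tolerance` of the real mean."""
    return abs(mean(real) - mean(synthetic)) <= tolerance


# Hypothetical survey answers: 1 = would buy the product, 0 = would not.
real_sample = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]       # 6 of 10 say yes
synthetic_sample = [1, 1, 1, 1, 0, 1, 0, 1, 1, 1]  # 8 of 10 say yes

# A tight error range rejects the synthetic sample; a loose one accepts it.
print(within_tolerance(real_sample, synthetic_sample, tolerance=0.05))
print(within_tolerance(real_sample, synthetic_sample, tolerance=0.25))
```

The same synthetic sample passes or fails depending on the tolerance picked, which is the “good enough for what?” question in code form.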

I want to focus on one portion of the cited article and invite you to read the complete Kantar explanation.

Here’s the passage which snagged my attention:

… right now, synthetic sample currently has biases, lacks variation and nuance in both qual and quant analysis. On its own, as it stands, it’s just not good enough to use as a supplement for human sample. And there are other issues to consider. For instance, it matters what subject is being discussed. General political orientation could be easy for a large language model (LLM), but the trial of a new product is hard. And fundamentally, it will always be sensitive to its training data – something entirely new that is not part of its training will be off-limits. And the nature of questioning matters – a highly ’specific’ question that might require proprietary data or modelling (e.g., volume or revenue for a particular product in response to a price change) might elicit a poor-quality response, while a response to a general attitude or broad trend might be more acceptable.

These sentences present several thorny problems in academic speak. Let’s look at them in the vernacular of rural Kentucky where I live.

First, we have the issue of bias. Training data can be unintentionally or intentionally biased. Sample radical trucker posts on Telegram, and use those messages to train a model like Reor. That output is going to express views that some people might find unpalatable. Therefore, building a synthetic data recipe which includes this type of Telegram content is going to be oriented toward truck driver views. That’s good and bad.

Second, a synthetic sample may require mixing data from a “real” sample. That’s a common sense approach which reduces some costs. But will the outputs be good enough? The question then becomes, “Good enough for what applications?” Big, general questions about how a topic is presented might be close enough for horseshoes. Other topics like those focusing on dealing with a specific technical issue might warrant more caution or outright avoidance of synthetic data. Do you want your child or wife to die because the synthetic data about a treatment regimen was close enough for horseshoes? But in today’s medical structure, that may be what the future holds.

Third, many years ago, one of the early “smart” software companies was Autonomy, founded by Mike Lynch. In the 1990s, Bayesian methods were known but some, believe it or not, were classified and, thus, not widely known. Autonomy packed up some smart software in the Autonomy black box. Users of this system learned that the smart software had to be retrained because new terms and novel ideas not in the original training set were not findable by the neuro linguistic program’s engine. Yikes, retraining requires human content curation of data sets, time to retrain the system, and the expense of redeploying the brains of the black boxes. Clients did not like this and some, to be frank, did not understand why a product did not work like an MG sports car. Synthetic data has to be trained to “know” about new terms and avoid the “certain blindness” probability based systems possess.

Fourth, the topic of “proprietary data modeling” means big bucks. The idea behind synthetic data is that it is cheaper. Building proprietary training data and keeping it current is expensive. Is it better? Yeah, maybe. Is it faster? Probably not when humans are doing the curation, cleaning, verifying, and training.

The write up states:

But it’s likely that blended models (human supplemented by synthetic sample) will become more common as LLMs get even more powerful – especially as models are finetuned on proprietary datasets.

Net net: Synthetic data warrants monitoring. Some may want to invest in synthetic data set companies like Kantar, for instance. I am a dinobaby, and I like the old-fashioned Stone Age approach to data. The fancy math embodies sufficient risk for me. Why increase risk? Remember my reference to a dead loved one? That type of risk.

Stephen E Arnold, March 4, 2024

Open Source: Free, Easy, and Fast Sort Of

February 29, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Not long ago, I spoke with an open source cheerleader. The pros outweighed the cons from this technologist’s point of view. (I would like to ID the individual, but I try to avoid having legal eagles claw their way into my modest nest in rural Kentucky. Just plug in “John Wizard Doe”, a high profile entrepreneur and graduate of a big time engineering school.)

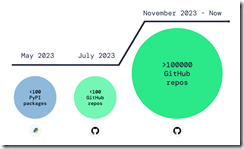

I think going up suggests a problem.

Here are highlights of my notes about the upside of open source:

- Many smart people eyeball the code and problems are spotted and fixed

- Fixes get made and deployed more rapidly than commercial software which often works on a longer “fix” cycle

- Dead end software can be given new kidneys or maybe a heart with a fork

- For most use cases, the software is free or cheaper than commercial products

- New functions become available; some of which fuel new product opportunities.

There may be a few others, but let’s look at a downside few open source cheerleaders want to talk about. I don’t want to counter the widely held belief that “many smart people eyeball the code.” The method is grab and go. The speed angle is relative. Reviving open source again and again is quite useful; bad actors do this. Most people just recycle. The “free” angle is a big deal. Everyone likes “free” because why not? New functions become available so new markets are created. Perhaps. But in the cyber crime space, innovation boils down to finding a mistake that can be exploited with good enough open source components, often with some mileage on their chassis.

But there is one point on which open source champions crank back the rah rah output: “Over 100,000 Infected Repos Found on GitHub.” I want to point out that Microsoft, the all-time champion in security, owns GitHub. If you think about Microsoft and security too much, you may come away confused. I know I do. I also get a headache.

This “Infected Repos” article from Apiiro asserts:

Our security research and data science teams detected a resurgence of a malicious repo confusion campaign that began mid-last year, this time on a much larger scale. The attack impacts more than 100,000 GitHub repositories (and presumably millions) when unsuspecting developers use repositories that resemble known and trusted ones but are, in fact, infected with malicious code.

The write up provides excellent information about how the bad repos create problems and provides a do-it-yourself recipe for this type of malware distribution. (As you know, I am not too keen on having certain information with helpful detail easily available, but I am a dinobaby, and dinobabies have crazy ideas.)
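On the defensive side, the “resemble known and trusted ones” part of the quoted passage suggests a simple check a cautious developer could run before cloning. The sketch below is an illustration only, not the method from the cited article: the repository names, the `lookalikes` helper, and the 0.85 threshold are all assumptions, and the similarity score comes from Python’s standard `difflib` module.

```python
from difflib import SequenceMatcher

# Hypothetical allow-list of repositories a team already trusts.
TRUSTED = ["example-org/data-tools", "example-org/web-kit"]


def lookalikes(candidate, trusted, threshold=0.85):
    """Return trusted names that `candidate` resembles without matching exactly."""
    hits = []
    for name in trusted:
        if candidate == name:
            return []  # exact match: this is the trusted repo itself
        score = SequenceMatcher(None, candidate.lower(), name.lower()).ratio()
        if score >= threshold:
            hits.append(name)
    return hits


# A one-character swap ("l" replaced by "1") is flagged as a possible impostor.
print(lookalikes("examp1e-org/data-tools", TRUSTED))
# The genuine name and an unrelated name produce no warnings.
print(lookalikes("example-org/data-tools", TRUSTED))
print(lookalikes("someone/unrelated", TRUSTED))
```

A name check like this catches only look-alike naming; it says nothing about whether the code inside a repository is clean.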

If we confine our thinking to the open source champion’s five benefits, I think security issues may be more important in some use cases. The better question is, “Why don’t open source supporters like Microsoft and the person with whom I spoke want to talk about open source security?” My view is that:

- Security is an after thought or a never thought facet of open source software

- Making money is Job #1, so free trumps spending money to make sure the open source software is secure

- Open source appeals to some venture capitalists. Why? RedHat, Elastic, and a handful of other “open source plays”.

Net net: Just visualize a future in which smart software ingests poisoned code, and programmers rely on smart software to make them 10X engineers. Does that create a bit of a problem? Of course not. Microsoft is the security champ, and GitHub is Microsoft.

Stephen E Arnold, February 29, 2024

The Google: A Bit of a Wobble

February 28, 2024

This essay is the work of a dumb humanoid. No smart software required.

Check out this snap from Techmeme on February 28, 2024. The folks commenting about Google Gemini’s very interesting picture generation system are confused. Some think that Gemini makes clear that the Google has lost its way. Others just find the recent image gaffes as one more indication that the company is too big to manage and the present senior management is too busy amping up the advertising pushed in front of “users.”

I wanted to take a look at what Analytics India Magazine had to say. Its article is “Aal Izz Well, Google.” The write up, from a nation state with some nifty drone technology and so-so relationships with its neighbors, offers this statement:

In recent weeks, the situation has intensified to the extent that there are calls for the resignation of Google chief Sundar Pichai. Helios Capital founder Samir Arora has suggested a likelihood of Pichai facing termination or choosing to resign soon, in the aftermath of the Gemini debacle.

The write up offers:

Google chief Sundar Pichai, too, graciously accepted the mistake. “I know that some of its responses have offended our users and shown bias – to be clear, that’s completely unacceptable and we got it wrong,” Pichai said in a memo.

The author of the Analytics India article is Siddharth Jindal. I wonder if he will talk about Sundar’s and Prabhakar’s most recent comedy sketch. The roll out of Bard in Paris was a hoot, and it too had gaffes. That was a year ago. Now it is a year later and what’s Google accomplished?

Analytics India emphasizes that “Google is not alone.” My team and I know that smart software is the next big thing. But Analytics India is particularly forgiving.

The estimable New York Post takes a slightly different approach. “Google Parent Loses $70B in Market Value after Woke AI Chatbot Disaster” reports:

Google’s parent company lost more than $70 billion in market value in a single trading day after its “woke” chatbot’s bizarre image debacle stoked renewed fears among investors about its heavily promoted AI tool. Shares of Alphabet sank 4.4% to close at $138.75 in the week’s first day of trading on Monday. The Google’s parent’s stock moved slightly higher in premarket trading on Tuesday [February 28, 2024, 941 am US Eastern time].

As I write this, I turned to Google’s nemesis, the Softies in Redmond, Washington. I asked for a dinosaur looking at a meteorite crater. Here’s what Copilot provided:

Several observations:

- This is a spectacular event. Sundar and Prabhakar will have a smooth explanation I believe. Smooth may be their core competency.

- The fact that a Code Red has become a Code Dead makes clear that communications at Google require a tune up. But if no one is in charge, blowing $70 billion will catch the attention of some folks with sharp teeth and a mean spirit.

- The adolescent attitudes of a high school science club appear to inform the management methods at Google. A big time investigative journalist told me that Google did not operate like a high school science club planning a bus trip to the state science fair. I stick by my HSSCMM or high school science club management method. I won’t repeat her phrase because it is similar to Google’s quantumly supreme smart software: Wildly off base.

Net net: I love this rationalization of management, governance, and technical failure. Everyone in the science club gets a failing grade. Hey, wizards and wizardettes, why not just stick to selling advertising?

Stephen E Arnold, February 28, 2024

10X: The Magic Factor

February 27, 2024

This essay is the work of a dumb dinobaby. No smart software required.

The 10X engineer. The 10X payout. The 10X advertising impact. The 10X factor can apply to money, people, and processes. Flip to the inverse and one can use smart software to replace the engineers who are not 10X or, more optimistically, lift those expensive digital humanoids to a higher level. It is magical: Win either way, provided you are a top dog, a one percenter. Applied to money, 10X means winner. End up with $0.10, and the hapless investor is a loser. For processes, figure out a 10X trick, and you are a winner, although one who is misunderstood. Money matters more than machine efficiency to some people.

In pursuit of a 10X payoff, will the people end up under water? Thanks, ImageFX. Good enough.

These are my 10X thoughts after I read “Groq, Gemini, and 10X Improvements.” The essay focuses on things technical. I am going to skip over what the author offers as a well-reasoned, dispassionate commentary on 10X. I want to zip to one passage which I think is quite fascinating. Here it is:

We don’t know when increasing parameters or datasets will plateau. We don’t know when we’ll discover the next breakthrough architecture akin to Transformers. And we don’t know how good GPUs, or LPUs, or whatever else we’re going to have, will become. Yet, when when you consider that Moore’s Law held for decades… suddenly Sam Altman’s goal of raising seven trillion dollars to build AI chips seems a little less crazy.

The way I read this is that unknowns exist with AI, money, and processes. For me, the unknowns are somewhat formidable. For many, charging into the unknown does not cause sleepless nights. We are talking about raising trillions of dollars, which is a large pile of silver dollars.

One must take the $7 trillion and Sam AI-Man seriously. In June 2023, Sam AI-Man met the boss of Softbank. Today (February 22, 2024) rumors about a deal related to raising the trillions required for OpenAI to build chips and fulfill its promise have reached my research team. If true, will there be a 10X payoff, which noses into spitting distance of 15 zeros? If that goes inverse, that’s going to create a bad day for someone.

Stephen E Arnold, February 27, 2024

Qualcomm: Its AI Models Pour Gasoline on a Raging Fire

February 26, 2024

This essay is the work of a dumb humanoid. No smart software required.

Qualcomm’s announcements at the Mobile World Congress pour gasoline on the raging AI fire. The chip maker aims to enable smart software on mobile devices, new gear, gym shoes, and more. Venture Beat’s “Qualcomm Unveils AI and Connectivity Chips at Mobile World Congress” does a good job of explaining the big picture. The online publication reports:

Generative AI functions in upcoming smartphones, Windows PCs, cars, and wearables will also be on display with practical applications. Generative AI is expected to have a broad impact across industries, with estimates that it could add the equivalent of $2.6 trillion to $4.4 trillion in economic benefits annually.

Qualcomm, primarily associated with chips, has pushed into what it calls “AI models.” The listing of the models appears on the Qualcomm AI Hub Web page. You can find this page at https://aihub.qualcomm.com/models. To view the available models, click on one of the four model domains, shown below:

Each domain will expand and present the name of the model. Note that the domain with the most models is computer vision. The company offers 60 models. These are grouped by function; for example, image classification, image editing, image generation, object detection, pose estimation, semantic segmentation (tagging objects), and super resolution.

The image below shows a model which analyzes data and then predicts related values. In this case, the position of the subject’s body is presented. The predictive functions of a company like Recorded Future suddenly appear to be behind the curve in my opinion.

There are two models for generative AI. These are image generation and text generation. Models are available for audio functions and for multimodal operations.

Qualcomm includes brief descriptions of each model. These descriptions include some repetitive phrases like “state of the art”, “transformer,” and “real time.”

Looking at the examples and following the links to supplemental information suggests at first glance:

- Qualcomm will become a company of interest to investors

- Competitive outfits have their marching orders to develop comparable or better functions

- Supply chain vendors may experience additional interest and uplift from investors.

Can Qualcomm deliver? Let me answer the question this way. Whether the company experiences an nVidia moment or not, other companies have to respond, innovate, cut costs, and become more forward leaning in this chip sector.

I am in my underground computer lab in rural Kentucky, and I can feel the heat from Qualcomm’s AI announcement. Those at the conference not working for Qualcomm may have had their eyebrows scorched.

Stephen E Arnold, February 26, 2024

What Techno-Optimism Seems to Suggest (Oligopolies, a Plutocracy, or Utopia)

February 23, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Science and mathematics are comparable to religion. These fields of study attract acolytes who study and revere associated knowledge and shun nonbelievers. The advancement of modern technology is its own subset of religious science and mathematics combined with philosophical doctrine. Tech Policy Press discusses the changing views on technology-based philosophy in: “Parsing The Political Project Of Techno-Optimism.”

Rich, venture capitalists Marc Andreessen and Ben Horowitz are influential in Silicon Valley. While they’ve shaped modern technology with their investments, they also tried drafting a manifesto about how technology should be handled in the future. They “creatively” labeled it the “techno-optimist manifesto.” It promotes an ideology that favors rich people increasing their wealth by investing in politicians that will help them achieve this.

Techno-optimism is not the new mantra of Silicon Valley. The manifesto was not well received. Andreessen wrote:

“Techno-Optimism is a material philosophy, not a political philosophy…We are materially focused, for a reason – to open the aperture on how we may choose to live amid material abundance.”

He also labeled this section, “the meaning of life.”

Techno-optimism is a revamped version of the Californian ideology that reigned in the 1990s. It preached that the future should be shaped by engineers, investors, and entrepreneurs without governmental influence. Techno-optimism wants venture capitalists to be untaxed with unregulated portfolios.

Horowitz added his own Silicon Valley-type titbit:

“‘…will, for the first time, get involved with politics by supporting candidates who align with our vision and values specifically for technology. (…) [W]e are non-partisan, one issue voters: if a candidate supports an optimistic technology-enabled future, we are for them. If they want to choke off important technologies, we are against them.’”

Horowitz and Andreessen are giving the world what some might describe as “a one-finger salute.” These venture capitalists want to do whatever they want wherever they want with governments in their pockets.

This isn’t a new ideology or a philosophy. It’s a rebranding of socialism and fascism and communism. There’s an even better word that describes techno-optimism: Plutocracy. I am not sure the approach will produce a Utopia. But there is a good chance that some giant techno feudal outfits will reap big rewards. But another approach might be to call techno optimism a religion and grab the benefits of a tax exemption. I wonder if someone will create a deep fake of Jim and Tammy Faye? Interesting.

Whitney Grace, February 23, 2024

Security Debt: So Just Be a Responsible User / Developer

February 15, 2024

This essay is the work of a dumb dinobaby. No smart software required.

Security appears to be one of the next big things. Smart software strapped onto cyber safeguard systems is a no-lose proposition for vendors. Does it matter that bolted on AI may not work? Nope. The important point is to ride the opportunity wave.

What’s interesting is that security is becoming a topic discussed at 75-something bridge groups and at lunch gatherings in government agencies concerned about fish and trees. Can third-party security services, grandmothers chasing a grand slam, or an expert in river fowl address security problems? I would suggest that the idea that security is the user’s responsibility is an interesting way to dodge responsibility. The estimable 23andMe tried this play, and I am not too sure that it worked.

Can security debt become the invisible hand creating opportunities for bad actors? Has the young executive reached the point of no return for a personal debt crisis? Thanks, MSFT Pilot Bing for a good enough illustration.

Who can address the security issues in the software people and organizations use today? “Why Software Security Debt Is Becoming a Serious Problem for Developers” states:

Over 70% of organizations have software containing flaws that have remained unfixed for longer than a year, constituting security debt,

Plus, the article asserts:

46% of organizations were found to have persistent, high-severity flaws that went unaddressed for over a year

Security issues exist. But the question is, “Who will address these flaws, gaps, and mistakes?”

The article cites an expert who opines:

“The further that you shift [security testing] to the developer’s desktop and have them see it as early as possible so they can fix it, the better, because number one it’s going to help them understand the issue more and [number two] it’s going to build the habits around avoiding it.”

But who is going to fix the security problems?

In-house developers may not have the expertise or access to the uncompiled code to identify and remediate flaws. Open source and other third-party software can change without notice. Why not do what’s best for those people or for the bad actors manipulating open source software and “approved” apps available from a large technology company’s online store?

The article offers a number of suggestions, but none of these strike me as practical for some or most organizations.

Here’s the problem: Security is not a priority until a problem surfaces. Then when a problem becomes known, the delay between compromise, discovery, and public announcement can be — let’s be gentle — significant. Once a cyber security vendor “discovers” the problem or learns about it from a customer who calls and asks, “What has happened?”, the PR machines grind into action.

The “fixes” are typically rush jobs for these reasons:

- The vendor and the developer who made the zero a one do not earn money by fixing old code. Another factor is that the person or team responsible for the misstep is long gone, working as an Uber driver, or sitting in a rocking chair in a warehouse for the elderly.

- The complexity of “going back” and making a fix may create other problems. These dependencies are unknown, so a fix just creates more problems. Writing a shim or wrapper code may be good enough to get the angry dogs to calm down and stop barking.

- The security flaw may be unfixable; that is, the original approach includes and may need flaws for performance, expediency, or some quite revenue-centric reason. No one wants to rebuild a Pinto that explodes in a rear end collision. Let the lawyers deal with it. When it comes to code, lawyers are definitely equipped to resolve security problems.

The write up contains a number of statistics, but it makes one major point:

Security debt is mounting.

Like a young worker who lives by moving credit card debt from vendor to vendor, getting out of the debt hole may be almost impossible. But, hey, it is that individual’s responsibility, not the system. Just be responsible. That is easy to say, and it strikes me as somewhat hollow.

Stephen E Arnold, February 15, 2024