AI Is a Winner: The Viewpoint of an AI Believer

November 13, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Bubble, bubble, bubble. This is the Silicon Valley version of epstein, epstein, epstein. If you are worn out from the doom and gloom of smart software’s penchant for burning cash and ignoring the realities of generating electric power quickly, you will want to read “AI Is Probably Not a Bubble: AI Companies Have Revenue, Demand, and Paths to Immense Value.” [Note: You may encounter a paywall when you attempt to view this article. Don’t hassle me. Contact those charming visionaries at Substack, the new new media outfit.]

The predictive impact of the analysis has been undercut by a single word in the title “Probably.” A weasel word appears because the author’s enthusiasm for AI is a bit of contrarian thinking presented in thought leader style. Probably a pig can fly somewhere at some time. Yep, confidence.

Here’s a passage I found interesting:

… unlike dot-com companies, the AI companies have reasonable unit economics absent large investments in infrastructure and do have paths to revenue. OpenAI is demonstrating actual revenue growth and product-market fit that Pets.com and Webvan never had. The question isn’t whether customers will pay for AI capabilities — they demonstrably are — but whether revenue growth can match required infrastructure investment. If AI is a bubble and it pops, it’s likely due to different fundamentals than the dot-com bust.

Ah, ha, another weasel word: “Whether.” Is this AI bubble going to expand infinitely or will it become a Pets.com?

The write up says:

Instead, if the AI bubble is a bubble, it’s more likely an infrastructure bubble.

I think the ground beneath the argument has shifted. The “AI” is a technology like “the Internet.” The “Internet” became a big deal. AI is not “infrastructure.” That’s a data center with fungible objects like machines and connections to cables. Plus, the infrastructure gets “utilized immediately upon completion.” But what if [a] demand decreases due to lousy AI value, [b] AI becomes a net inflater of ancillary costs like a Microsoft subscription to Word, or [c] electrical power is either not available or too costly to make a couple of football fields of servers to run 24×7?

I liked this statement, although I am not sure some of the millions of people who cannot find jobs will agree:

As weird as it sounds, an AI eventually automating the entire economy seems actually plausible, if current trends keep continuing and current lines keep going up.

Weird. Cost cutting is a standard operating tactic. AI is an excuse to dump expensive and hard-to-manage humans. Whether AI can do the work is another question. Shifting from AI delivering value to server infrastructure shows one weakness in the argument. Ignoring the societal impact of unhappy workers seems to me illustrative of taking finance classes, not 18th century history classes.

Okay, here’s the wind up of the analysis:

Unfortunately, forecasting is not the same as having a magic crystal ball and being a strong forecaster doesn’t give me magical insight into what the market will do. So honestly, I don’t know if AI is a bubble or not.

The statement is a combination of weasel words, crawfishing away from the thesis of the essay, and an admission that this is a marketing thought leader play. That’s okay. LinkedIn is stuffed full of essays like this big insight:

So why are industry leaders calling AI a bubble while spending hundreds of billions on infrastructure? Because they’re not actually contradicting themselves. They’re acknowledging legitimate timing risk while betting the technology fundamentals are sound and that the upside is worth the risk.

The AI giants are savvy cats, are they not?

Stephen E Arnold, November 13, 2025

US Government Procurement Changes: Like Silicon Valley, Really? I Mean For Sure?

November 12, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I learned about the US Department of War overhaul of its procurement processes by reading “The Department of War Just Shot the Accountants and Opted for Speed.” Rumblings of procurement hassles have been reaching me for years. The cherished methods of capture planning, statement of work consulting, proposal writing, and evaluating bids consume many billable hours by consultants. The processes involve thousands of government professionals: Lawyers, financial analysts, technical specialists, administrative professionals, and consultants. I can’t omit the consultants.

According to the essay written by Steve Blank (a person unfamiliar to me):

Last week the Department of War finally killed the last vestiges of Robert McNamara’s 1962 Planning, Programming, and Budgeting System (PPBS). The DoW has pivoted from optimizing cost and performance to delivering advanced weapons at speed.

The write up provides some of the history of the procurement process enshrined in such documents as FAR or the Federal Acquisition Regulations. If you want the details Mr. Blank provides, I urge you to read his essay in full.

I want to highlight what I think is an important point about the recent changes. Mr. Blank writes:

The war in Ukraine showed that even a small country could produce millions of drones a year while continually iterating on their design to match changes on the battlefield. (Something we couldn’t do.) Meanwhile, commercial technology from startups and scaleups (fueled by an immense pool of private capital) has created off-the-shelf products, many unmatched by our federal research development centers or primes, that can be delivered at a fraction of the cost/time. But the DoW acquisition system was impenetrable to startups. Our Acquisition system was paralyzed by our own impossible risk thresholds, its focus on process not outcomes, and became risk averse and immoveable.

Based on my experience, much of it working as a consultant on different US government projects, the horrific “special operation” delivered a number of important lessons about modern warfare. Reading between the lines of the passage cited above, three important items of information emerged from what I view as an illegal international event:

- Under certain conditions human creativity can blossom and then grow into major business operations. I would point to Ukraine’s innovations in the use of drones, in how the drones are deployed in battle conditions, and in how the basic “drone idea” reduces the effectiveness of certain traditional methods of warfare.

- Despite disruptions to transportation and certain third-party products, Ukraine demonstrated that just-in-time production facilities can be made operational in weeks, sometimes days.

- The combination of innovative ideas, battlefield testing, and right-sized manufacturing demonstrated that a relatively small country can become a world-class leader in modern warfighting equipment, software, and systems.

Russia, with its ponderous planning and procurement process, has become the fall guy to a president who was a stand up comedian. Who is laughing now? It is not the perpetrators of the “special operation.” The joke, as some might say, is on individuals who created the “special operation.”

Mr. Blank states about the new procurement system:

To cut through the individual acquisition silos, the services are creating Portfolio Acquisition Executives (PAEs). Each Portfolio Acquisition Executive (PAE) is responsible for the entire end-to-process of the different Acquisition functions: Capability Gaps/Requirements, System Centers, Programming, Acquisition, Testing, Contracting and Sustainment. PAEs are empowered to take calculated risks in pursuit of rapidly delivering innovative solutions.

My view of this type of streamlining is that it will become less flexible over time. I am not sure when the ossification will commence, but bureaucratic systems, no matter how well designed, morph and become traditional bureaucratic systems. I am not going to trot out the academic studies about the impact of process, auditing, and legal oversight on any efficient process. I will plainly state that the bureaucracies to which I have been exposed in the US, Europe, and Asia are fundamentally the same.

Can the smart software helping enable the Silicon Valley approach to procurement handle the load and keep the humanoids happy? Thanks, Venice.ai. Good enough.

Ukraine is an outlier when it comes to the organization of its warfighting technology. Perhaps other countries, if subjected to a similar type of “special operation,” would behave as Ukraine has. Whether I was giving lectures for the Japanese government or dealing with issues related to materials science for an entity on Clarendon Terrace, the approach, rules, regulations, special considerations, etc. were generally the same.

The question becomes, “Can a new procurement system in an environment not at risk of extinction demonstrate the speed, creativity, agility, and productivity of the Ukrainian model?”

My answer is, “No.”

Mr. Blank writes before he digs into the new organizational structure:

The DoW is being redesigned to now operate at the speed of Silicon Valley, delivering more, better, and faster. Our warfighters will benefit from the innovation and lower cost of commercial technology, and the nation will once again get a military second to none.

This is an important phrase: Silicon Valley. It is the model for making the US Department of War into a more flexible and speedy entity, particularly with regard to procurement, the use of smart software (artificial intelligence), and management methods honed since Bill Hewlett and Dave Packard sparked the garage myth.

Silicon Valley has been a model for many organizations and countries. However, who thinks much about the Silicon Fen? I sure don’t. I would wager a slice of cheese that many readers of this blog post have never, ever heard of Sophia Antipolis. Everyone wants to be a Silicon Valley and high-technology, move fast and break things outfit.

But we have but one Silicon Valley. Now the question is, “Will the US government be a successful Silicon Valley, or will it fizzle out?” Based on my experience, I want to go out on a very narrow limb and suggest:

- Cronyism was important to Silicon Valley, particularly for funding and lawyering. The “new” approach to Department of War procurement is going to follow a similar path.

- As the stakes go up, growth becomes more important than fiscal considerations. As a result, the cost of becoming bigger, faster, cheaper spikes. Costs for the majority of Silicon Valley companies kill off most start ups. The failure rate is high, and it is exacerbated by the need of the winners to continue to win.

- Silicon Valley management styles produce some negative consequences. Often overlooked are such modern management methods as [a] a lack of common sense, [b] decisions based on entitlement or short term gains, and [c] a general indifference to the social consequences of an innovation, a product, or a service.

If I look forward based on my deeply flawed understanding of this Silicon Valley revolution, I see monopolistic behavior emerging. Bureaucracies will emerge because people working for other people create rules, procedures, and processes to minimize the craziness of doing the go fast and break things activities. Workers create bureaucracies to deal with chaos, not cause chaos.

Mr. Blank’s essay strikes me as generally supportive of this reinvention of the Federal procurement process. He concludes with:

Let’s hope these changes stick.

My personal view is that they won’t. Ukraine created a wartime Silicon Valley in a real-time, shoot-and-survive conflict. The urgency is not parked in a giant building in Washington, DC, or a Silicon Valley dream world. A more pragmatic approach is to partition procurement methods. Apply Silicon Valley thinking in certain classes of procurement; modify the FAR to streamline certain processes; and leave some of the procedures unchanged.

AI is a go fast and break things technology. It also hallucinates. Drones from Silicon Valley companies don’t work in Ukraine. I know because someone with first hand information told me. What will the new methods of procurement deliver? Answer: Drones that won’t work in a modern asymmetric conflict. With decisions involving AI, I sure don’t want to find myself in a situation about which smart software makes stuff up or operates on digital mushrooms.

Stephen E Arnold, November 12, 2025

Agentic Software: Close Enough for Horse Shoes

November 11, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I read a document that I would describe as tortured. The lingo was trendy. The charts and graphs sported trendy colors. The data gathering seemed to be a mix of “interviews” and other people’s research. Plus the write up was a bit scattered. I prefer the rigidity of old-fashioned organization. Nevertheless, I did spot one chunk of information that I found interesting.

The title of the research report (sort of an MBA- or blue chip consulting firm-type of document) is “State of Agentic AI: Founder’s Edition.” I think it was issued in March 2025, but with backdating popular, who knows. I had the research report in my files, and yesterday (November 3, 2025) I was gathering some background information for a talk I am giving on November 6, 2025. The document walked through data about the use of software to replace people. Actually, the smart software agents generally do several things according to the agent vendors’ marketing collateral. The cited document restated these items this way:

- Agents are set up to reach specific goals

- Agents are used to reason which means “break down their main goal … into smaller manageable tasks and think about the next best steps.”

- Agents operate without any humans in India or Pakistan operating invisibly and behind the scenes

- Agents can consult a “memory” of previous tasks, “experiences,” work, etc.

Agents, when properly set up and trained, can perform about as well as a human. I came away from the tan and pink charts with a ball park figure of 75 to 80 percent reliability. Close enough for horseshoes? Yep.

There is a run down of pricing options. Pricing seems to be a challenge for the vendors with API usage charges and traditional software licensing used by a third of the agentic vendors.

Now here’s the most important segment from the document:

We asked founders in our survey: “What are the biggest issues you have encountered when deploying AI Agents for your customers? Please rank them in order of magnitude (e.g. Rank 1 assigned to the biggest issue)” The results of the Top 3 issues were illuminating: we’ve frequently heard that integrating with legacy tech stacks and dealing with data quality issues are painful. These issues haven’t gone away; they’ve merely been eclipsed by other major problems. Namely:

- Difficulties in integrating AI agents into existing customer/company workflows, and the human-agent interface (60% of respondents)

- Employee resistance and non-technical factors (50% of respondents)

- Data privacy and security (50% of respondents).

Here’s the chart tallying the results:

Several ideas crossed my mind as I worked through this research data:

- Getting the human-software interface right is a problem. I know from my work at places like the University of Michigan, the Modern Language Association, and Thomson-Reuters that people have idiosyncratic ways to do their jobs. Two people with similar jobs add the equivalent of extra dashboard lights and yard gnomes to the process. Agentic software at this time is not particularly skilled in the dashboard LED and concrete gnome facets of a work process. Maybe someday, but right now, that’s a common deal breaker. Employees say, “I want my concrete unicorn, thank you.”

- Humans say they are into mobile phones, smart in-car entertainment systems, and customer service systems that do not deliver any customer service whatsoever. As somebody from Harvard said in a lecture: “Change is hard.” Yeah, and it may not get any easier if the humanoid thinks he or she will be allowed to find their future pushing burritos at the El Nopal Restaurant in the near future.

- Agentic software vendors assume that licensees will allow their creations to suck up corporate data, keep company secrets, and avoid disappointing customers by presenting proprietary information to a competitor. Security in “regular” enterprise software is a bit of a challenge. Security in a new type of agentic software is likely to be the equivalent of a ride on a roller coaster which has tossed several middle school kids to their death and cut off the foot of a popular female. She survived, but now has a non-smart, non-human replacement.

Net net: Agentic software will be deployed. Most of its work will be good enough. Why will this be tolerated in personnel, customer service, loan approvals, and similar jobs? The answer is reduced headcounts. Humans cost money to manage. Humans want health care. Humans want raises. Software which is good enough seems to cost less. Therefore, welcome to the agentic future.

Stephen E Arnold, November 11, 2025

AI Dreams Are Plugged into Big Rock Candy Mountain

November 5, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

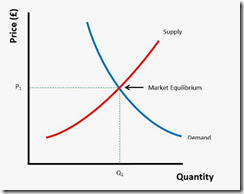

One of the niches in the power generation industry is demand forecasting. When I worked at Halliburton Nuclear, I sat in meetings. One feature of these meetings was diagrams. I don’t have any of these diagrams because there were confidentiality rules. I followed those. This is what some of the diagrams resembled:

Source: https://mavink.com/

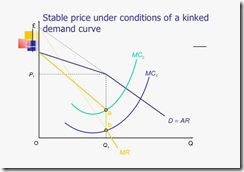

When I took a job at Booz, Allen, the firm had its own demand experts. The diagrams favored by one of the utility rate and demand experts looked like this. Note: Booz, Allen had rules, so the diagram comes from the cited source:

Source: https://vtchk.ru/photo/demand-curve/16

These curves speak volumes to the people who fund, engineer, and construct power generation facilities. The main idea for these semi-abstract curves is that balancing demand and supply is important. The price of electricity depends on figuring out the probable relationship of demand for power, the available supply, and the supply that will come on line at estimated times in the future. The price people and organizations pay for electricity depends on these types of diagrams, the reams of data analysts crunch, and what a group of people sitting in a green conference room at a plastic table agree the curves mean.

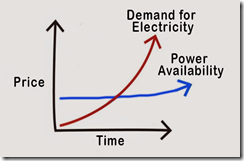

A recent report from Turner & Townsend (a UK consulting outfit) identifies some trends in the power generation sector with some emphasis on the data centers required for smart software. You can work through the report on the Turner & Townsend Web site by clicking this link. The main idea is that the huge AI-centric data centers needed to power the Googley transformer-centric approach to smart software outstrip available power.

The response to this in the big AI companies is, “We can put servers in orbit” and “We can build small nuclear reactors and park them near the data centers” and “We can buy turbines and use gas or other carbon fuels to power our data centers.” These are comments made by individuals who choose not to look at the wonky type of curves I show.

It takes time to build a conventional power generation facility. The legal process in the US has traditionally been difficult and expensive. A million dollars won’t even pay for a little environmental impact study. Lawyers can cost more than a rail car loaded with specialized materials required for nuclear reactors. The costs for the PR required to place a baby nuke in Memphis next to a big data center may be more expensive than buying some Google ads and hiring a local marketing firm. Some people may not feel comfortable with a new, unproven baby nuke in their neighborhood. Coal- and oil-fired plants invite certain types of people to mount noisy and newsworthy protests. Putting a data center in orbit poses some additional paperwork challenges and a little bit of extra engineering work.

So what does the big detailed report show? Here’s my diagram of the power, demand, and price future with those giant data centers in the US. You can work out the impact on non-US installations:

This diagram was whipped up by Stephen E Arnold.

The message in these curves reflects one of the “challenges” identified in the Turner & Townsend report: Cost.

What does this mean to those areas of the US where Big AI Boys plan to build large data centers? Answer: Their revenue streams need to be robust and their funding sources need to have open wallets.

What does this mean for the cost of electricity to consumers and run-of-the-mill organizations? Answer: Higher costs, brown outs, and fancy new meters that can adjust prices and current on the fly. Crank up the data center, and the Super Bowl broadcast may not be in some homes.

What does this mean for ubiquitous, 24×7 AI availability in software, home appliances, and mobile devices? Answer: Higher costs, brown outs, and degraded services.

How will the incredibly self aware, other centric, ethical senior managers at AI companies respond? Answer: No problem. Think thorium reactors and data centers in space.

Also, the cost of building new power generation facilities is not a problem for some Big Dogs. The time required for licensing, engineering, and construction? No problem. Just go fast, break things.

And overcoming resistance to turbines next to a school or a small thorium reactor in a subdivision? Hey, no problem. People will adapt or they can move to another city.

What about the engineering and the innovation? Answer: Not to worry. We have the smartest people in the world.

What about common sense and self awareness? Response: Yo, what do those terms mean? Are they synonyms for disco biscuits?

The next big thing lives on Big Rock Candy Mountain.

Stephen E Arnold, November 5, 2025

A Nice Way of Saying AI Will Crash and Burn

November 5, 2025

This essay is the work of a dumb dinobaby. No smart software required.

I read a write up last week. Today is October 27, 2025, and this dinobaby has a tough time keeping AI comments, analyses, and proclamations straight. The old fashioned idea of putting a date on each article or post is just not GenAI. As a dinobaby, I find this streamlining like Google dumping apostrophes from its mobile keyboard ill advised. I would say “stupid,” but one cannot doubt the wisdom of the quantumly supreme AI PR and advertising machine, can one? One cannot tell some folks that AI is a utility. It may be important, but the cost may be AI’s Achilles’ heel or the sword on which AI impales itself.

A young wizard built a wonder model aircraft. But it caught on fire and is burning. This is sort of sad. Thanks, ChatGPT. Good enough.

These and other thoughts flitted through my mind when I read “Surviving the AI Capex Boom.” The write up includes an abstract, and you can work through the 14 page document to get the inside scoop. I will assume you have read the document. Here are some observations:

- The “boom” is visible to anyone who scans technical headlines. The hype for AI is exceeded only by the money pumped into the next big thing. The problem is that the payoff from AI is underwhelming when compared to the amount of cash pumped into a sector relying on a single technical innovation or breakthrough: The “transformer” method. Fragile is not the word for the situation.

- The idea that there are cheap and expensive AI stocks is interesting. I am not sure, however, that cheap and expensive are substantively different. Google has multiple lines of revenue. If AI fails, it has advertising and some other cute businesses. Amazon has trouble with just about everything at the moment. Meta is — how shall I phrase it — struggling with organizational issues that illustrate managerial issues. So there is Google and everyone else.

- OpenAI is a case study in an entirely new class of business activities. From announcing that erotica is just the thing for ChatGPT to sort of trying to invent the next iPhone, Sam AI-Man is a heck of a fund raising machine. Only his hyperbole power works as well. His love of circular deals means that he survives, or he does some serious damage to a number of fellow travelers. I say, “No thanks, Sam.”

- The social impact of flawed AI is beginning to take shape. The consequences will be unpleasant in many ways. One example: Mental health knock ons. But, hey, this is a tiny percentage, a rounding error.

Net net: I am not convinced that investing in AI at this time is the wise move for an 81 year old dinobaby. Sorry, Kai Wu. You will survive the AI boom. You are, from my viewpoint, a banker. Bankers usually win. But others may not enjoy the benefits you do.

Stephen E Arnold, November 5, 2025

Transformers May Face a Choice: The Junk Pile or Pizza Hut

November 4, 2025

Another short essay from a real and still-alive dinobaby. If you see an image, we used AI. The dinobaby is not an artist like Grandma Moses.

I read a marketing collateral-type of write up in Venture Beat. The puffy delight carries this title “The Beginning of the End of the Transformer Era? Neuro-Symbolic AI Startup AUI Announces New Funding at $750M Valuation.” The transformer is a Googley thing. Obviously with many users of Google’s Googley AI, Google perceives itself as the Big Dog in smart software. Sorry, Sam AI-Man, Google really, really believes it is the leader; otherwise, why would Apple turn to Google for help with its AI challenges? Ah, you don’t know? Too bad, Sam, I feel for you.

Thanks, MidJourney. Good enough.

This write up makes clear that someone has $750 million reasons to fund a different approach to smart software. Contrarian brilliance or dumb move? I don’t know. The write up says:

AUI is the company behind Apollo-1, a new foundation model built for task-oriented dialog, which it describes as the "economic half" of conversational AI — distinct from the open-ended dialog handled by LLMs like ChatGPT and Gemini. The firm argues that existing LLMs lack the determinism, policy enforcement, and operational certainty required by enterprises, especially in regulated sectors.

But there’s more:

Apollo-1’s core innovation is its neuro-symbolic architecture, which separates linguistic fluency from task reasoning. Instead of using the most common technology underpinning most LLMs and conversational AI systems today — the vaunted transformer architecture described in the seminal 2017 Google paper "Attention Is All You Need" — AUI’s system integrates two layers:

Neural modules, powered by LLMs, handle perception: encoding user inputs and generating natural language responses.

A symbolic reasoning engine, developed over several years, interprets structured task elements such as intents, entities, and parameters. This symbolic state engine determines the appropriate next actions using deterministic logic.

This hybrid architecture allows Apollo-1 to maintain state continuity, enforce organizational policies, and reliably trigger tool or API calls — capabilities that transformer-only agents lack.

What’s important is that interest in an alternative to the Googley approach is growing. The idea is that maybe — just maybe — Google’s transformer is burning cash and not getting much smarter with each billion dollar camp fire. Consequently individuals with a different approach warrant a closer look.

The marketing oriented write up ends this way:

While LLMs have advanced general-purpose dialog and creativity, they remain probabilistic — a barrier to enterprise deployment in finance, healthcare, and customer service. Apollo-1 targets this gap by offering a system where policy adherence and deterministic task completion are first-class design goals.

Researchers around the world are working overtime to find a way to deliver smart software without the Mad Magazine economics of power, CPUs, and litigation associated with the Googley approach. When a practical breakthrough takes place, outfits mired in Googley methods may be working at a job their mothers did not envision for their progeny.

Stephen E Arnold, November 4, 2025

Google Is Really Cute: Push Your Content into the Jaws of Googzilla

November 4, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Google has a new, helpful, clever, and cute service just for everyone with a business Web site. “Google Labs’ Free New Experiment Creates AI-Generated Ads for Your Small Business” lays out the basics of Pomelli. (I think this word means knobs or handles.)

A Googley business process designed to extract money and data from certain customers. Thanks, Venice.ai. Good enough.

The cited article states:

Pomelli uses AI to create campaigns that are unique to your business; all you need to do is upload your business website to begin. Google says Pomelli uses your business URL to create a “Business DNA” that analyzes your website images to identify brand identity. The Business DNA profile includes tone of voice, color palettes, fonts, and pictures. Pomelli can also generate logos, taglines, and brand values.

Just imagine Google processing your Web site, its content, images, links, and entities like email addresses, phone numbers, etc. Then using its smart software to create an advertising campaign, ads, and suggestions for the amount of money you should / will / must spend via Google’s own advertising system. What a cute idea!

The write up points out:

Google says this feature eliminates the laborious process of brainstorming unique ad campaigns. If users have their own campaign ideas, they can enter them into Pomelli as a prompt. Finally, Pomelli will generate marketing assets for social media, websites, and advertisements. These assets can be edited, allowing users to change images, headers, fonts, color palettes, descriptions, and create a call to action.

How will those tireless search engine optimization consultants and Google certified ad reselling outfits react to this new and still “experimental” service? I am confident that [a] some will rationalize the wonderfulness of this service and sell advisory services about the automated replacement for marketing and creative agencies; [b] some will not understand that it is time to think about a substantive side gig because Google is automating basic business functions and plugging into the customer’s wallet with no pesky intermediary to shave off some bucks; and [c] others will watch as their own sales efforts become less and less productive and then go out of business because adaptation is hard.

Is Google’s idea original? No, Adobe has something called AI Found, according to the write up. Google is not into innovation. Need I remind you that Google advertising has some roots in the Yahoo garden in bins marked GoTo.com and Overture.com? Also, there is a bank account with some Google money from a settlement about certain intellectual property rights that Yahoo believed Google used as a source of business process inspiration.

As Google moves into automating hooks, it accrues several significant benefits which seem to stand out in Google’s push to help its users:

- Crawling costs may be reduced. The users will push content to Google. This may or may not be a significant factor, but the user who updates provides Google with timely information.

- The uploaded or pushed content can be piped into the Google AI system and used to inform the advertising and marketing confection Pomelli. Training data and ad prospects in one go.

- The automation of a core business function allows Google to penetrate more deeply into a business. What if that business uses Microsoft products? It strikes me that the Googlers will say, “Hey, switch to Google and you get advertising bonus bucks that can be used to reduce your overall costs.”

- The advertising process is a knob that Google can use to pull the user and his cash directly into the Google business process automation scheme.

As I said, cute and also clever. We love you, Google. Keep on being Googley. Pull those users’ knobs, okay.

Stephen E Arnold, November 4, 2025

Hollywood Has to Learn to Love AI. You Too, Mr. Beast

October 31, 2025

This essay is the work of a dumb dinobaby. No smart software required.


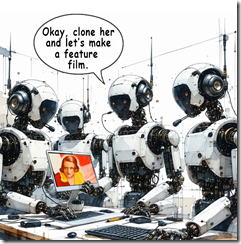

Russia’s leadership is good at talking, stalling, and doing what it wants. Is OpenAI copying this tactic? “OpenAI Cracks Down on Sora 2 Deepfakes after Pressure from Bryan Cranston, SAG-AFTRA” reports:

OpenAI announced on Monday [October 20, 2025] in a joint statement that it will be working with Bryan Cranston, SAG-AFTRA, and other actor unions to protect against deepfakes on its artificial intelligence video creation app Sora.

Talking, stalling or “negotiating,” and then doing what it wants may be within the scope of this sentence.

The write up adds via a quote from OpenAI leadership:

“OpenAI is deeply committed to protecting performers from the misappropriation of their voice and likeness,” Altman said in a statement. “We were an early supporter of the NO FAKES Act when it was introduced last year, and will always stand behind the rights of performers.”

This sounds good. I am not sure it will impress teens as much as Mr. Altman’s posture on erotic chats, but the statement sounds good. If I knew Russian, it would be interesting to translate the statement. Then one could compare the statement with some of those emitted by the Kremlin.

Producing a big budget commercial film or a Mr. Beast-type video will look very different in 18 to 24 months. Thanks, Venice.ai. Good enough.

Several observations:

- Mr. Altman has to generate cash or the appearance of cash. At some point investors will become pushy. Pushy investors can be problematic.

- OpenAI’s approach to model behavior does not give me confidence that the company can figure out how to engineer guard rails and then enforce them. Young men and women fiddling with OpenAI can be quite ingenious.

- The BBC ran a news program with the news reader as a deep fake. What does this suggest about a Hollywood producer facing financial pressure working out a deal with an AI entrepreneur facing even greater financial pressure? I think it means that humanoids are expendable first a little bit and then for the entire digital production. Gamification will be too delicious.

Net net: I think I know how this interaction will play out. Sam Altman, the big name stars, and the AI outfits know. The lawyers know. Who doesn’t know? Frankly everyone knows how digital disintermediation works. Just ask a recent college grad with a degree in art history.

Stephen E Arnold, October 31, 2025

AI Is So Hard! We Are Working Hard! Do Not Hit Me in the Head, Mom, Please

October 28, 2025

This essay is the work of a dumb dinobaby. No smart software required.


I read a pretty crazy “we are wonderful and hard working” story in the Murdoch Wall Street Journal. The story is “AI Workers Are Putting In 100-Hour Workweeks to Win the New Tech Arms Race.” (This is a paywalled article so you will have to pay or pray that the permalink has not disappeared. In either event, don’t complain to me. Tell the WSJ’s helpful customer support people or just subscribe at about $800 per year. Mr. Murdoch knows value.)

Thanks, Venice.ai. Good enough.

The story makes clear that Silicon Valley AI outfits slot themselves somewhere between the Chinese approach of 9-9-6 and the Japanese goal of karoshi. The shorthand 9-9-6 means that a Chinese professional labors 12 hours a day from 9 am to 9 pm and six days a week. No wonder some of those gadget factories have suicide nets installed on housing unit floors three and higher. And the Japanese karoshi concept is working oneself to death. At the blue chip consulting company where I labored, it was routine to see heaps of pizza boxes and some employees exiting the elevator crying from exhaustion as I was arriving for another fun day at an egomaniacal American institution.

Get this premise of a pivotal moment in the really important life of a super important suite of technologies that no one can define:

Executives and researchers at Microsoft, Anthropic, Alphabet’s Google, Meta Platforms, Apple and OpenAI have said they see their work as critical to a seminal moment in history as they duel with rivals and seek new ways to bring AI to the masses.

These fellows are inventing the fire and the wheel at the same time. Wow. That is so hard. The researchers are working even harder.

The write up includes this humble brag about those hard working AI professionals:

“Everyone is working all the time, it’s extremely intense, and there doesn’t seem to be any kind of natural stopping point,” Madhavi Sewak, a distinguished researcher at Google’s DeepMind, said in a recent interview.

And after me-too mobile apps, cloud connectors, and ho-hum devices, the Wall Street Journal story makes it clear these AI people are doing something important and they are working really hard. The proof is ordering food on Saturdays:

Corporate credit-card transaction data from the expense-management startup Ramp shows a surge in Saturday orders from San Francisco-area restaurants for delivery and takeout from noon to midnight. The uptick far exceeds previous years in San Francisco and other U.S. cities, according to Ramp.

Okay, I think you get the gist of the WSJ story. Let me offer several observations:

- Anyone who wants to work in the important field of AI will have to work hard.

- You will be involved in making the digital equivalent of fire and the wheel. You have no life because your work is important and hard.

- AI is hard.

My view is that smart software is a bundle of technologies that have narrowed to text centric activities via Google’s “transformer” system and possibly improper use of content obtained without permission from different sources. The people are working hard for three reasons. First, dumping more content into the large language model approach is not improving accuracy. Second, the pressure on the companies is a result of the burning of cash by the train car load and zero hockey stick profit from the investments. Some numbers person explained that an investment bank would get back its millions in investment by squeezing Microsoft. Yeah, and my French bulldog will sprout wings and fly. Third, the moves by OpenAI into erotic services and a Telegram-like approach to building an everything app signal that making money is hard.

What if making sustainable growth and profits from AI is even harder? What will life be like if an AI company with many very smart and hard working professionals goes out of business? Which will be harder: Getting another job in AI with one of those juicy compensation packages or working through the issues related to loss of self esteem, mental and physical exhaustion, and a mom who says, “Just shake it off”?

The WSJ doesn’t address why the pressure is piled on. I will. The companies have to produce money. Yep, cash back for investors and their puppets. Have you ever met with a wealthy garbage collection company owner who wants his multi-million investment in the digital fire or the digital wheel back? Those meetings can be hard.

Here’s a question to end this essay: What if AI cannot be made better using 45 years of technology? What’s the next breakthrough to be? Figuring that out and doing it is closer to the Manhattan Project than taking a burning stick from a lightning strike and cooking a squirrel.

Stephen E Arnold, October 28, 2025

Microsoft, by Golly, Has an Ethical Compass: It Points to Security? No. Clippy? No. Subscriptions? Yes!

October 27, 2025

This essay is the work of a dumb dinobaby. No smart software required.


The elephants are in training for a big fight. Yo, grass, watch out.

“Microsoft AI Chief Says Company Won’t Build Chatbots for Erotica” reports:

Microsoft AI CEO Mustafa Suleyman said the software giant won’t build artificial intelligence services that provide “simulated erotica,” distancing itself from longtime partner OpenAI. “That’s just not a service we’re going to provide,” Suleyman said on Thursday [October 23, 2025] at the Paley International Council Summit in Menlo Park, California. “Other companies will build that.”

My immediate question: “Will Microsoft build tools and provide services allowing others to create erotica or conduct illegal activities; for example, delivery of phishing emails from the Microsoft Cloud to Outlook users?” A quick no seems to be implicit in this report about what Microsoft itself will do. A more pragmatic yes means that Microsoft will have no easy, quick, and cheap way to restrain what a percentage of its users will either do directly or via some type of obfuscation.

Microsoft seems to step away from converting the digital Bob into an adult star or Clippy engaging with a user in a “suggestive” interaction.

The write up adds:

On Thursday, Suleyman said the creation of seemingly conscious AI is already happening, primarily with erotica-focused services. He referenced Altman’s comments as well as Elon Musk’s Grok, which in July launched its own companion features, including a female anime character. “You can already see it with some of these avatars and people leaning into the kind of sexbot erotica direction,” Suleyman said. “This is very dangerous, and I think we should be making conscious decisions to avoid those kinds of things.”

I heard that 25 percent of Internet traffic is related to erotica. That seems low based on my estimates which are now a decade old. Sex not only sells; it seems to be one of the killer applications for digital services whether the user is obfuscated, registered, or using mom’s computer.

My hunch is that the AI enhanced services will trip over [a] their own internal resources, [b] the costs of preventing abuse, sexual or criminal, and [c] leadership waffling.

There is big money in salacious content. Talking about what will and won’t happen in a rapidly evolving area of technology is little more than marketing spin. The proof will be what happens as AI becomes more unavoidable in Microsoft software and services. Those clever teenagers with Windows running on a cheap computer can do some very interesting things. Many of these will be actions that older wizards do not anticipate or simply push to the margins of their very full 9-9-6 day.

Stephen E Arnold, October 27, 2025