Losing Money? No Problem, Says OpenAI.

October 24, 2025

Losing billions? Not to worry.

Given the numbers in Tech In Asia’s article, “OpenAI’s H1 2025: $4.3b In Income, $13.5b In Loss,” I wouldn’t want to work on OpenAI’s financial team. You don’t have to be proficient in math to see that OpenAI is in the red after losing over thirteen billion dollars and only bringing in a little over four billion.

The biggest costs came from research and development, which ran to $6.7 billion. The company spent $2 billion on sales and advertising, then had $2.5 billion in stock-based compensation. Both were double last year’s expenses in these areas. Operating costs were another hit at $7.8 billion and it spent $2.5 billion in cash.

Here’s the current state of things:

“OpenAI paid Microsoft 20% of its revenue under an existing agreement.

At the end of June, the company held roughly US$17.5 billion in cash and securities, boosted by US$10 billion in new funding, and as of the end of July, was seeking an additional US$30 billion from investors.

A tender offer underway values OpenAI’s for-profit arm at about US$500 billion.”

The company isn’t doing well in the numbers but its technology is certainly in high demand and will put the company back in the black…eventually. We believe that if one thinks it, the “it” will manifest, become true, and make the world very bright.

Whitney Grace, October 24, 2025

Amazon and its Imperative to Dump Human Workers

October 22, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Everyone loves Amazon. The local merchants thank Amazon for allowing them to find their future elsewhere. The people and companies dependent on Amazon Web Services rejoiced when the AWS system failed and created an opportunity to do some troubleshooting and vendor shopping. Then there is the customer (me) who received a pair of ladies’ underwear instead of an AMD Ryzen 5750X. I enjoyed being the butt of jokes about my red, see-through microprocessor. Was I happy!

Mice discuss Amazon’s elimination of expensive humanoids. Thanks, Venice.ai. Good enough.

However, I read “Amazon Plans to Replace More Than Half a Million Jobs With Robots.” My reaction was that some employees and people in the Amazon job pipeline were not thrilled to learn that Amazon allegedly will dump humans and embrace robots. What a great idea. No health care! No paid leave! No grousing about work rules! No medical costs! No desks! Just silent, efficient, depreciable machines. Of course there will be smart software. What could go wrong? Whoops. Wrong question after taking out an estimated one third of the Internet for a day. How about this question, “Will the stakeholders be happy?” There you go.

The write up cranked out by the Gray Lady, drawing on confidential documents and other sources, says:

Amazon’s U.S. work force has more than tripled since 2018 to almost 1.2 million. But Amazon’s automation team expects the company can avoid hiring more than 160,000 people in the United States it would otherwise need by 2027. That would save about 30 cents on each item that Amazon picks, packs and delivers to customers. Executives told Amazon’s board last year that they hoped robotic automation would allow the company to continue to avoid adding to its U.S. work force in the coming years, even though they expect to sell twice as many products by 2033. That would translate to more than 600,000 people whom Amazon didn’t need to hire.

Why is Amazon dumping humans? The NYT turns to that institution that found Jeffrey Epstein a font of inspiration. I read this statement in the cited article:

“Nobody else has the same incentive as Amazon to find the way to automate,” said Daron Acemoglu, a professor at the Massachusetts Institute of Technology who studies automation and won the Nobel Prize in economic science last year. “Once they work out how to do this profitably, it will spread to others, too.” If the plans pan out, “one of the biggest employers in the United States will become a net job destroyer, not a net job creator,” Mr. Acemoglu said.

Ah, save money. Keep more money for stakeholders. Who knew? Who could have foreseen this motivation?

What jobs will Amazon provide to humans? Obviously leadership will keep leadership jobs. In my decades of professional work experience, I have never met a CEO who really believes anyone else can do his or her job. Well, the NYT has an answer about what humans will do at Amazon; to wit:

Amazon has said it has a million robots at work around the globe, and it believes the humans who take care of them will be the jobs of the future. Both hourly workers and managers will need to know more about engineering and robotics as Amazon’s facilities operate more like advanced factories.

I wish to close this essay with several observations:

- Much of the information in the write up comes from company documents. I am not comfortable with the use of this type of information. It strikes me as a short cut, a bit like Google or a self-made expert saying, “See what I did!”

- Many words were used to get one message across: Robots and by extension smart software will put people out of work. Basic income time, right? Why not say that?

- The reason Amazon wants to dump people is easy to summarize: Humans are expensive. Cut humans, costs drop (in theory). But are there social costs? Sure, but why dwell on those?

Net net: Sigh. Did anyone reviewing this story note the Amazon online collapse? Perhaps there is a relationship between cost cutting at Amazon and the company’s stability?

Stephen E Arnold, October 22, 2025

OpenAI and the Confusing Hypothetical

October 20, 2025

This essay is the work of a dumb dinobaby. No smart software required.

SAMA or Sam AI-Man Altman is probably going to ignore the Economist’s article “What If OpenAI Went Belly-Up?” I love what-if articles. These confections are hot buttons for consultants to push to get well-paid executives with impostor syndrome to sign up for a big project. Push the button and ka-ching. The cash register tallies another win for a blue chip.

Will Sam AI-Man respond to the cited article? He could fiddle the algorithms for ChatGPT to return links to AI slop. The result would be either [a] an improvement in Economist what-if articles or [b] a drop off in their ingenuity. The Economist is not a consulting firm, but it seems as if some of its professionals want to be blue chippers.

A young would-be magician struggles to master a card trick. He is worried that he will fail. Thanks, Venice.ai. Good enough.

What does the write up hypothesize? The obvious point is that OpenAI is essentially a scam. When it self-destructs, it will do immediate damage to about 150 managers of their own and other people’s money. No new BMW for a favorite grandchild. Shame at the country club when a really terrible golfer who owns an asphalt paving company says, “I heard you took a hit with that OpenAI investment. What’s going on?”

Bad.

SAMA has been doing what look like circular deals. The write up is not so much hypothetical consultant talk as it is a listing of money moving among fellow travelers like riders on wooden horses on a merry-go-round at the county fair. The Economist article states:

The ubiquity of Mr Altman and his startup, plus its convoluted links to other AI firms, is raising eyebrows. An awful lot seems to hinge on a firm forecast to lose $10bn this year on revenues of little more than that amount. D.A. Davidson, a broker, calls OpenAI “the biggest case yet of Silicon Valley’s vaunted ‘fake it ’till you make it’ ethos”.

Is Sam AI-Man a variant of Elizabeth Holmes or is he more like the dynamic duo, Sergey Brin and Larry Page? Google did not warrant this type of analysis six or seven years into its march to monopolistic behavior:

Four of OpenAI’s six big deal announcements this year were followed by a total combined net gain of $1.7trn among the 49 big companies in Bloomberg’s broad AI index plus Intel, Samsung and SoftBank (whose fate is also tied to the technology). However, the gains for most concealed losses for some—to the tune of $435bn in gross terms if you add them all up.

Frankly I am not sure about the connection the Economist expects me to make. Instead of Eureka! I offer, “What?”

Several observations:

- The word “scam” does not appear in this hypothetical. Should it? It is a bit harsh.

- Circular deals seem to be okay even if the amount of “value” exchanged seems to be similar to projections about asteroid mining.

- Has OpenAI’s ability to hoover cash affected funding of other economic investments? I used to hear about manufacturing in the US. What we seem to be manufacturing is deals with big numbers.

Net net: This hypothetical raises no new questions. The “fake it ’till you make it” approach seems to be part of the plumbing as we march toward 2026. Oh, too bad about those MBA-types who analyzed the payoff from Sam AI-Man’s story telling.

Stephen E Arnold, October 20, 2025

Ford CEO and AI: A Busy Time Ahead

October 17, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Ford’s CEO is Jim Farley. He has his work cut out for him. First, he has an aluminum problem. Second, he has an F-150 production disruption problem. Third, he has a PR problem. There’s not much he can do about the interruption of the aluminum supply chain. No parts means truck factories in Kentucky will have to go slow or shut down. But the AI issue is obviously one that is of interest to Ford stakeholders.

He [Mr. Farley] says the jobs most at risk aren’t the ones on the assembly line, but the ones behind a desk. And in his view, the workers wiring machines, operating tools, and physically building the infrastructure could turn out to be the most critical group in the economy. Farley laid it out bluntly back in June at the Aspen Ideas Festival during an interview with author Walter Isaacson. “Artificial intelligence is going to replace literally half of all white-collar workers,” he said. “AI will leave a lot of white-collar people behind.” He wasn’t speculating about a distant future either. Farley suggested the shift is already unfolding, and the implications could be sweeping.

With the disruption of the aluminum supply chain, Ford now will have to demonstrate that AI has indeed reduced white collar headcount. The write up says:

For him, it comes down to what AI can and cannot do. Office tasks — from paperwork to scheduling to some forms of analysis — can be automated with growing speed. But when it comes to factories, data centers, supply chains, or even electric vehicle production, someone still has to build, install, and maintain it…

The Ford situation is an interesting one. AI will reduce costs because half of Ford’s white collar workers will no longer be on the payroll. But with supply chain interruptions and the friction in retail and lease sales, Ford has an opportunity to demonstrate that AI will allow a traditional manufacturing company to weather the current thunderstorm and generate financial proof that AI can offset exogenous events.

How will Ford perform? This is worth watching because it will provide some useful information for firms looking for a way to cut costs, improve operations, and balance real-world business. AI is delivering one kind of financial benefit while traditional blue-collar workers are unable to produce products because of supply chain issues. Quite a balancing act for Ford leadership.

Stephen E Arnold, October 17, 2025

Hey, Pew, Wanna Bet?

October 16, 2025

This essay is the work of a dumb dinobaby. No smart software required.

My Telegram Labyrinth book is almost over the finish line. I include some discussion of online gambling in Telegram. Of particular interest to me and my research team was kiddie games. A number of these reward the young child with crypto tokens. Get enough tokens and the game provides the player with options. A couple of these options point the kiddie directly to an online casino running in Telegram Messenger. What happens next? A few players win. Others lose. The approach is structured and intentional. The goal of some of these fun games is addicting youngsters to online gambling via crypto.

Nifty. Telegram has been up and running since 2013. In the last few years, online gambling has become a part of the organization’s strategic vision. Anyone, including a child with a mobile device, can gamble online on Telegram. From Telegram’s point of view, this is freedom. For a parent who discovers a financial downside to their child’s play, this is stressful.

I read “Americans Increasingly See Legal Sports Betting As a Bad Thing for Society and Sports.” The Pew research outfit dug into online gambling. What did the number crunchers learn? Here are a handful of findings:

- More Americans view legal sports betting as bad for society and sports. (Hey, addiction is a problem. Who knew?)

- One-fifth of Americans bet online. The good news is that sports betting is not growing. (Is that why advertising for online gaming seems to be more prevalent?)

- 47 percent of men under 30 say legal sports betting is a bad thing, up from 22 percent who said this in 2022.

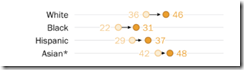

Now check out this tough-to-read graphic:

Views of online gambling vary within the demographic groups in the sample. I noted that old people (dinobabies like me) do not wager as frequently as those between the ages of 18 and 29. I wonder if the VCs pumping money into AI come from this demographic. Betting seems okay to more of them. Ask people over 65, and only 12 percent of those you query will say, “Great idea.”

I would argue that online gambling is readily available. More services are emulating the Telegram model. The Pew study seemed to ignore the target demographic for the users of the Telegram kiddie gambling games. That is a whiff to me. But will anyone care? Only the parents and it may take years for the research firms to figure out where the key change is taking place.

Stephen E Arnold, October 16, 2025

Blue Chip Consultants: Spin, Sizzle, and Fizzle with AI

October 14, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Can one quantify the payoffs from AI? Not easily. So what’s the solution? How about a “free” as in “marketing collateral” report from the blue-chip consulting firm McKinsey & Co. (You know that outfit because it figured out how to put Eastern Kentucky, Indiana, and West Virginia on the map.)

I like company reports like “Upgrading Software Business Models to Thrive in the AI Era.” These combine the weird spirit of Ezra Pound with used car sales professionals and blend in a bit of “we know more” rhetoric. Based on my experience, this is a winning combination for many professionals. This document speaks to those in the business of selling software. Today software does not come in boxes or as part of the deal when one buys a giant mainframe. Nope, software is out there. In the cloud. Companies use cloud solutions because — as consultants explained years ago — an organization can fire most technical staff and shift to pay-as-you go services. That big room that held the mainframe can become a sublease. That’s efficiency.

This particular report is the work of four — count them — four people who can help your business. Just bring money and the right attitude. McKinsey is selective. That’s how it decided to enter the pharmaceutical consulting business. Here’s a statement the happy and cooperative group of like-minded consultants presented:

while global enterprise spending on AI applications has increased eightfold over the last year to close to $5 billion, it still only represents less than 1 percent of total software application spending.

Converting this consultant speak to my style of English, the four blue chippers are trying to say that AI is not living up to the hype. Why? A software company today is having a tough time proving that AI delivers. The lack of fungible proof in the form of profits means that something is not going according to plan. Remember: The plan is to increase the revenue from software infused with AI.

Options include the exciting taxi meter approach. This means that the customers of enterprise software don’t know how much something costs upfront. Invoices deliver the cost. Surprise is not popular among some bean counters. Amazon’s AWS is in the surprise business. So is Microsoft Azure. However, surprise is not a good approach for some customers.

Licensees of enterprise software with that AI goodness mixed in could balk at paying fees for computational processes outside the control of the software licensee. This is the excitement a first year calculus student experiences when the values of variables are mysterious or unknown. Once one wrestles the variables to the ground, then one learns that the curve never reaches the x axis. It’s infinite, sport.

Pricing AI is a killer. The China-linked folks at Deepseek and its fellow travelers are into the easy, fast, and cheap approach to smart software. One can argue whether the intellectual property is original. One cannot argue that cheap is a compelling feature of some AI solutions. Cue the song: Where or When with the lines:

It seems we stood and talked like this before

We looked at each other in the same way then

But I can’t remember where or QWEN…

The problem is that enterprise software with AI is tough to price. The enterprise software company’s engineering and development costs go up. Their actual operating costs rise. The enterprise software company has to provide fungible proof that the bundle delivers value to warrant a higher price. That’s hard. AI is everywhere, and quite a few services are free, cheap, or do-it-yourself code.

McKinsey itself does not have an answer to the problem the report from four blue chip consultants has identified. The report itself is stark evidence that explaining AI pricing, operational, and use case data is a work in progress. My view is that:

- AI hype painted a picture of wonderful, easily identifiable benefits. That picture is a bit like an AI-generated video. It is momentarily engaging but not real.

- AI state of the art today is output with errors. Hey, that sounds special when one is relying on AI for a medical diagnosis for your child or grandchild or managing your retirement account.

- AI is a utility function. Software utilities get bundled into software that does something for which the user or licensee is willing to pay. At this time, AI is a work in progress, a novelty, and a cloud of unknowing. At some point, the fog will clear, but it won’t happen as quickly as the AI furnaces burn cash.

- What to sell, to whom, and pricing are problems created by AI. Asking smart software what to do is probably not going to produce a useful answer when the enterprise market is in turmoil, wallowing in uncertainty, and increasingly resistant to “surprise” pricing models.

Net net: McKinsey itself has not figured out AI. The idea is that clients will hire blue chip consultants to figure out AI. Therefore, the more studies and analyses blue chip consultants conduct, the closer these outfits will come to an answer. That’s good for the consulting business. The enterprise software companies may hire the blue chip consultants to answer the money and value questions. The bad news is that the fate of AI in enterprise software developers is in the hands of the licensees. Based on the McKinsey report, these folks are going slow. The mismatch among these players may produce friction. That will be exciting.

Stephen E Arnold, October 14, 2025

AI Embraces the Ethos of Enterprise Search

October 9, 2025

This essay is the work of a dumb dinobaby. No smart software required.

In my files, I have examples of the marketing collateral generated by enterprise search vendors. I have some clippings from trade publications and other odds and ends dumped into my enterprise search folder. One of these reports is “Fastgründer John Markus Lervik dømt til fengsel.” The article is no longer online, but you can read my 2014 summary at this Beyond Search link. The write up documents an enterprise search vendor who used some alleged accounting methods to put a shine on the company. In 2008, Microsoft purchased Fast Search & Transfer putting an end to this interesting company.

A young CPA MBA BA (with honors) is jockeying a spreadsheet. His father worked for an enterprise search vendor based in the UK. His son is using his father’s template but cannot get the numbers to show positive cash flows across six quarters. Thanks, Venice.ai. Good enough.

Why am I mentioning Fast Search & Transfer? The information in Fortune Magazine’s “‘There’s So Much Pressure to Be the Company That Went from Zero to $100 Million in X Days’: Inside the Sketchy World of ARR and Inflated AI Startup Accounting” jogged my memory about Fast Search and a couple of other interesting companies in the enterprise search sector.

Enterprise search was the alleged technology to put an organization’s information at the fingertips of employees. Enterprise search would unify silos of information. Enterprise search would unlock the value of an organization’s “hidden” or “dark” data. Enterprise search would put those hours wasted looking for information to better use. (IDC was the cheerleader for the efficiency payoff from enterprise search.)

Does this sound familiar? It should. Every vendor applying AI to an organization’s information challenges is either recycling old chestnuts from the Golden Age of Enterprise Search or wandering in the data orchard discovering these glittering generalities amidst nuggets of high value jargon.

The Fortune article states:

There’s now a massive amount of pressure on AI-focused founders, at earlier stages than ever before: If you’re not generating revenue immediately, what are you even doing? Founders—in an effort to keep up with the Joneses—are counting all sorts of things as “long-term revenue” that are, to be blunt, nothing your Accounting 101 professor would recognize as legitimate. Exacerbating the pressure is the fact that more VCs than ever are trying to funnel capital into possible winners, at a time where there’s no certainty about what evaluating success or traction even looks like.

Would AI start ups fudge numbers? Of course not. Someone at the start up or investment firm took a class in business ethics. (The pizza in those study groups was good. Great if it could be charged to another group member’s Visa without her knowledge. Ho ho ho.)

The write up pursues the idea that ARR or annual recurring revenue is a metric that may not reflect the health of an AI business. No kidding? When an outfit has zero revenue resulting from dumping investor cash into a burning dumpster fire, it is difficult for me to understand how people see a payoff from AI. The “payoff” comes from moving money around, not from getting cash from people or organizations on a consistent basis. Subscription-like business models are great until churn becomes a factor.

The real point of the write up for me is that financial tricks, not customers paying for the product or service, are the name of the game. One big enterprise search outfit used “circular” deals to boost revenue. I did some small work for this outfit, so I cannot identify it. The same method is now part of the AI revolution involving Nvidia, OpenAI, and a number of other outfits. Whose money is moving? Who gets it? What’s the payoff? These are questions not addressed in depth in the information to which I have access.

I think financial intermediaries are the folks taking home the money. Some vendors may get paid like masters of black art accounting. But investor payoff? I am not so sure. For me the good old days of enterprise search are back again, just with bigger numbers and more impactful financial consequences.

As an aside, the Fortune article uses the word “shit” twice. Freudian slip or a change in editorial standards at Fortune? That word was applied by one of my team when asked to describe the companies I profiled in the Enterprise Search Report I wrote many years ago. “Are you talking about my book or enterprise search?” I asked. My team member replied, “The enterprise search thing.”

Stephen E Arnold, October 9, 2025

Slopity Slopity Slop: Nice Work AI Leaders

October 8, 2025

Remember that article about academic and scientific publishers using AI to churn out pseudoscience and crap papers? Or how about that story relating to authors’ works being stolen to train AI algorithms? Did I mention they were stealing art too?

Techdirt literally has the dirt on AI creating more slop: “AI Slop Startup To Flood The Internet With Thousands Of AI Slop Podcasts, Calls Critics Of AI Slop ‘Luddites’.” AI is a helpful tool. It’s great to assist with mundane things of life or improve workflows. Automation, however, has become the newest sensation. Big Tech bigwigs and other corporate giants are using it to line their purses, while making lives worse for others.

Note this outstanding example of a startup that appears to be interested in slop:

“Case in point: a new startup named Inception Point AI is preparing to flood the internet with a thousands upon thousands of LLM-generated podcasts hosted by fake experts and influencers. The podcasts cost the startup a dollar or so to make, so even if just a few dozen folks subscribe they hope to break even…”

They’ll make the episodes for about a dollar each. Podcasting is already a saturated market, but Inception Point AI plans to flush it with garbage. They don’t care about the ethics. It’s going to be the Temu of podcasts. It would be great if people would flock to true human-made stuff, but they probably won’t.

Another reason we’re in a knowledge swamp with crocodiles.

Whitney Grace, October 9, 2025

Google Gets the Crypto Telegram

October 7, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Not too many people cared that Google cut a deal with Alibaba’s ANT financial services outfit. My view is that at some point down the information highway, the agreement will capture more attention. Today (September 27, 2025), I want to highlight another example of Google’s getting a telegram about crypto.

Finding inspiration? Yep. Thanks, Venice.ai. Good enough.

Navigate to what seems to be just another crypto mining news announcement: “Cipher Mining Signs 168 MW, 10-Year AI Hosting Agreement with Fluidstack.”

So what’s a Cipher Mining? This is a publicly traded outfit engaged in crypto mining. My understanding is that the company’s primary source of revenue is bitcoin mining. Some may disagree, pointing to its business as “owner, developer and operator of industrial-scale data centers.”

The news release says:

[Cipher Mining] announces a 10-year high-performance computing (HPC) colocation agreement with Fluidstack, a premier AI cloud platform that builds and operates HPC clusters for some of the world’s largest companies.

So what?

The news release also offers this information:

Google will backstop $1.4 billion of Fluidstack’s lease obligations to support project-related debt financing and will receive warrants to acquire approximately 24 million shares of Cipher common stock, equating to an approximately 5.4% pro forma equity ownership stake, subject to adjustment and a potential cash settlement under certain circumstances. Cipher plans to retain 100% ownership of the project and access the capital markets as necessary to fund a portion of the project.

Okay, three outfits: crypto, data centers, and billions of dollars. That’s quite an information cocktail.

Several observations:

- Like the Alibaba / ANT relationship, the move is aligned with facilitating crypto activities on a large scale.

- In the best tradition of moving money, Google seems to be involved but not the big dog. I think that Google may indeed be the big dog. Puzzle pieces that fit together? Seems like it to me.

- Crypto and financial services could — note I say “could” — be the hedge against future advertising revenue potholes.

Net net: Worth watching and asking, “What’s the next Google message received from Telegram?” Does this question seem cryptic? It isn’t. Like Meta, Google is following a path trod by a certain outfit now operating in Dubai. Is the path intentional or accidental? Where Google is concerned, everything is original, AI, and quantumly supreme.

Stephen E Arnold, October 7, 2025

Telegram and EU Regulatory Consolidation: Trouble Ahead

October 6, 2025

This essay is the work of a dumb dinobaby. No smart software required.

Imagine you are Pavel Durov. The value of TONcoin is problematic. France asked you to curtail some content in a country unknown to the folks who hang out at the bar at the Harrod’s Creek Inn in rural Kentucky. Competitors are announcing plans to implement Telegram-type functions in messaging apps built with artificial intelligence as steel girders. How can the day become more joyful?

Thanks, Midjourney. Good enough pair of goats. One an actual goat and the other a “Greatest of All Time” goat.

The orange newspaper has an answer to that question. “EU Watchdog Prepares to Expand Oversight of Crypto and Exchanges” reports:

Stock exchanges, cryptocurrency companies and clearing houses operating in the EU are set to come under the supervision of the bloc’s markets watchdog…

Crypto currency and some online services (possibly Telegram) operate across jurisdictions. The fragmented rules and regulations allow organizations with sporty leadership to perform some remarkable financial operations. If you poke around, you will find the names of some outfits allied with industrious operators linked to a big country in Asia. Pull some threads, and you may find an unknown Russian space force professional beavering away in the shadows of decentralized financial activities.

The write up points out:

Maria Luís Albuquerque, EU commissioner for financial services, said in a speech last month that it was “considering a proposal to transfer supervisory powers to Esma for the most significant cross-border entities” including stock exchanges, crypto companies and central counterparties.

How could these rules impact Telegram? It is nominally based in the United Arab Emirates. Its totally independent do-good Open Network Foundation works tirelessly from a rented office in Zug, Switzerland. Telegram is home free, right?

No pesky big government rules can ensnare the Messenger crowd.

Possibly. There is that pesky situation with the annoying French judiciary. (Isn’t that country with many certified cheeses collapsing?) One glitch: Pavel Durov is a French citizen. He has been arrested, charged, and questioned about a dozen heinous crimes. He is on a leash and must check in with his grumpy judicial “mom” every couple of weeks. He allegedly refused to cooperate with a request from a French government security official. He is awaiting more thrilling bureaucracy from the French judicial system. How does he cope? He criticizes France, the legal processes, and French officials asking him to do for France what Mr. Durov did for Russia earlier this year.

Now these proposed regulations may intertwine with Mr. Durov’s personal legal situation. Because he is the Big Dog of Telegram, the French affair is likely to have some repercussions for Telegram and its Silicon Valley big tech approach to rules and regulations. EU officials are indeed aware of Mr. Durov and his activities. From my perspective in nowheresville in rural Kentucky, the news in the Financial Times on October 6, 2025, is problematic for Mr. Durov. The GOAT of Messaging, his genius brother, and a close knit group of core engineers will have to do some hard thinking to figure out how to deal with these European matters. Can he do it? Does a GOAT eat what’s available?

Stephen E Arnold, October 6, 2025