How to Cut Podcast Costs and Hassles: A UK Example

November 5, 2024

Using AI to replicate a particular human is a fraught topic. Of paramount concern is the relentless issue of deepfakes. There are also legal issues of control over one’s likeness, of course, and concerns the technology could put humans out of work. It is against this backdrop, the BBC reports, that “Michael Parkinson’s Son Defends New AI Podcast.” The new podcast uses AI to recreate the late British talk show host, who will soon interview (human) guests. Son Mike acknowledges the concerns, but insists this project is different. Writer Steven McIntosh explains:

“Mike Parkinson said Deep Fusion’s co-creators Ben Field and Jamie Anderson ‘are 100% very ethical in their approach towards it, they are very aware of the legal and ethical issues, and they will not try to pass this off as real’. Recalling how the podcast was developed, Parkinson said: ‘Before he died, we [my father and I] talked about doing a podcast, and unfortunately he passed away before it came true, which is where Deep Fusion came in. ‘I came to them and said, ‘if we wanted to do this podcast with my father talking about his archive, is it possible?’, and they said ‘it’s more than possible, we think we can do something more’. He added his father ‘would have been fascinated’ by the project, although noted the broadcaster himself was a ‘technophobe’. Discussing the new AI version of his father, Parkinson said: ‘It’s extraordinary what they’ve achieved, because I didn’t really think it was going to be as accurate as that.’”

So they have the family’s buy-in, and they are making it very clear the host is remade with algorithms. The show is called “Virtually Parkinson,” after all. But there is still that replacing human talent with AI thing. Deep Fusion’s Anderson notes that, since Parkinson is deceased, he is in no danger of losing work. However, McIntosh counters, any guest that appears on this show may give one fewer interview to a show hosted by a different, living person. Good point.

One thing noteworthy about Deep Fusion’s AI on this project is its ability to not just put words in Parkinson’s mouth, but to predict how he would have actually responded. Assuming that function is accurate, we have a request: Please bring back the objective reporting of Walter Cronkite. This world sorely needs it.

Cynthia Murrell, November 5, 2024

Apple: Challenges Little and Bigly

October 28, 2024

Another post from a dinobaby. No smart software required except for the illustration.


At lunch yesterday (October 23, 2024), one of the people in the group had a text message with a long string of data. That person wanted to move the data from the text message into an email. The idea was to copy a bit of ASCII, put it in an email, and email the data to his office email account. Simple? He fiddled but could not get the iPhone to do the job. He showed me the sequence and when he went through the highlighting, the curly arrow, and the tap to copy, he was following the procedure. When he switched to email and pressed, the text was not available. A couple of people tried to make this sequence of tapping and long pressing work. Someone handed the phone to me. I fooled around with it, asked the person to restart the phone, and went through the process. It took two tries but I got the snip of ASCII to appear in the email message. Yep, that’s the Apple iPhone. Everyone loves the way it works, except when it does not. The frustration the iPhone owner demonstrated illustrates the “good enough” approach to many functions in Apple’s and other firms’ software.

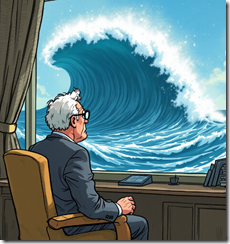

Will the normal course of events swamp this big time executive? Thanks, You.com. You were not creative, but you were good enough.

Why mention this?

Apple is a curious company. The firm has been a darling of core fans, investors, and the MBA crowd. I have noted two actions related to Apple which suggest that the company may have a sleek exterior but the interior is different. Let’s look at these two recent developments.

The first item concerns what appears to be untoward behavior by Apple and those really good folks at Goldman Sachs. The Apple credit card received a statement showing that $89 million was due. The issue appears to be fumbling the ball with customers. For a well managed company, how does this happen? My view is that getting cute was not appreciated by some government authorities. A tiny mistake? Yes. The fine is minuscule compared to the revenue represented by the outstanding enterprises paying the fine. With small fines, have the Apple and Goldman Sachs professionals learned a lesson? Yes, get out of the credit card game. Other than that, I surmise that neither of the companies will veer from their game plans.

The second item is, from my point of view, a bit more interesting than credit cuteness. Apple, if the news report in the Washington Times is close to the truth, is getting very comfortable with China. The basic idea is that Apple wants to invest in China. Is China the best friend forever of the US? I thought some American outfits were somewhat cautious with regard to their support of that nation state. Well, that does not appear to apply to Apple.

With the weird software, the credit card judgment, and the China love fest, we have three examples of a company operating in what I would describe as a fog of pragmatism. The copy paste issue makes clear that simplicity and attention to a common task on a widely used device is not important. The message for the iPhone is, “Figure out our way. Don’t even think about a meaningful, user centric change. Just upgrade and get the vapor of smart software.”

The message from the credit card judgment is, “Hey, we will do what we want. If there is a problem, send us a bill. We will continue to do what we want.” That shows me that Apple buys into the behavior pattern which makes Silicon Valley behavior the gold standard in management excellence.

My interpretation of the China-Apple BFF activity is that the policy of the US government is of little interest. Apple, like other large technology outfits, is effectively operating as a nation state. The company will do what it wants and let lawyers and PR people make the activity palatable.

I find it amusing that Apple appears to be reducing orders for its next big iPhone release. The market may be reaching a saturation point or the economic conditions in certain markets make lower cost devices more appealing. My own view is that the AI vapor spewed by Apple and other US companies is dissipating. Another utility function which does not work in a reliable way may not be enough.

Why not make copy paste more usable or is that a challenge beneath your vast aspirations?

Stephen E Arnold, October 28, 2024

Meta, Politics, and Money

October 24, 2024

Meta and its flagship product, Facebook, make money from advertising. Targeted advertising using Meta’s personalization algorithm is profitable and political views seem to turn the money spigot. Remember the January 6 Riots or how Russia allegedly influenced the 2016 presidential election? Part of the reason those happened was targeted advertising through social media like Facebook.

Gizmodo reviews how much Meta generates from political advertising in: “How Meta Brings In Millions Off Political Violence.” The Markup and CalMatters tracked how much money Meta made from Trump’s July assassination attempt via merchandise advertising. The total runs between $593,000 and $813,000. The number may understate the actual money:

“If you count all of the political ads mentioning Israel since the attack through the last week of September, organizations and individuals paid Meta between $14.8 and $22.1 million dollars for ads seen between 1.5 billion and 1.7 billion times on Meta’s platforms. Meta made much less for ads mentioning Israel during the same period the year before: between $2.4 and $4 million dollars for ads that were seen between 373 million and 445 million times. At the high end of Meta’s estimates, this was a 450 percent increase in Israel-related ad dollars for the company. (In our analysis, we converted foreign currency purchases to current U.S. dollars.)”
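The quoted "450 percent" figure can be checked with back-of-the-envelope arithmetic. The Python sketch below assumes the comparison uses the two high-end estimates from the quotation ($22.1 million versus $4 million). The function name is illustrative and is not taken from The Markup or CalMatters.

```python
def percent_increase(before: float, after: float) -> float:
    """Return the percentage increase from `before` to `after`."""
    if before == 0:
        raise ValueError("percent increase is undefined when the starting value is zero")
    return (after - before) / before * 100.0


# High-end estimates from the quotation, in millions of US dollars.
# Assumption: these are the two numbers behind the "450 percent" claim.
prior_year_high = 4.0
current_year_high = 22.1

increase = percent_increase(prior_year_high, current_year_high)
print(f"{increase:.1f} percent increase")
```

Under that assumption the result is about 452.5 percent, which is consistent with the rounded figure in the quotation.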

The organizations that funded those ads were supporters of Palestine or Israel. Meta doesn’t care who pays for ads. Tracy Clayton is a Meta spokesperson and she said that ads go through a review process to determine if they adhere to community standards. She also said that advertisers don’t run their ads during times of strife, because they don’t want their goods and services associated with violence.

That’s not what the evidence shows. The Markup and CalMatters researched the ads’ subject matter after the July assassination attempt. While they didn’t violate Meta’s guidelines, they did relate to the event. There were ads for gun holsters and merchandise about the shooting. It was a business opportunity and people ran with it with Meta holding the finish line ribbon.

Meta really has an interesting ethical framework.

Whitney Grace, October 24, 2024

Money and Open Source: Unpleasant Taste?

October 23, 2024

Open-source veteran and blogger Armin Ronacher ponders “The Inevitability of Mixing Open Source and Money.” It is lovely when developers work on open-source projects for free out of the goodness of their hearts. However, the truth is these folks can only afford to spend so much time working for free. (A major reason open source documentation is a mess, by the way.)

For his part, Ronacher helped launch Sentry’s Open Source Pledge. That initiative asks companies to pledge funding to open source projects they actively use. It is particularly focused on small projects, like xz, that have a tougher time attracting funds than the big names. He acknowledges the perils of mixing open source and money, as described by Ruby on Rails creator David Heinemeier Hansson. But he insists the blend is already baked in. He considers:

“At face value, this suggests that Open Source and money shouldn’t mix, and that the absence of monetary rewards fosters a unique creative process. There’s certainly truth to this, but in reality, Open Source and money often mix quickly. If you look under the cover of many successful Open Source projects you will find companies with their own commercial interests supporting them (eg: Linux via contributors), companies outright leading projects they are also commercializing (eg: MariaDB, redis) or companies funding Open Source projects primarily for marketing / up-sell purposes (uv, next.js, pydantic, …). Even when money doesn’t directly fund an Open Source project, others may still profit from it, yet often those are not the original creators. These dynamics create stresses and moral dilemmas.”

One example is the conflict between WordPress co-founder Matt Mullenweg and WP Engine. The tension can also cause personal stress. Ronacher shares doubts that have plagued him: to monetize or not to monetize? Would a certain project have taken off had he poured his own money into it? He has watched colleagues wrestle with similar questions that affected their health and careers. See his post for more on those issues. The write-up concludes:

“I firmly believe that the current state of Open Source and money is inadequate, and we should strive for a better one. Will the Pledge help? I hope for some projects, but WordPress has shown that we need to drive forward that conversation of money and Open Source regardless of the size of the project.”

Clearly, further discussion is warranted. New ideas from open-source enthusiasts are also needed. Can a balance be found?

Cynthia Murrell, October 23, 2024

AI: The Key to Academic Fame and Fortune

October 17, 2024

Just a humanoid processing information related to online services and information access.


Why would professors use smart software to “help” them with their scholarly papers? The question may have been answered by the Phys.org article “Analysis of Approximately 75 Million Publications Finds Those Employing AI Are More Likely to Be a ‘Hit Paper,’” which reports:

A new Northwestern University study analyzing 74.6 million publications, 7.1 million patents and 4.2 million university course syllabi finds papers that employ AI exhibit a “citation impact premium.” However, the benefits of AI do not extend equitably to women and minority researchers, and, as AI plays more important roles in accelerating science, it may exacerbate existing disparities in science, with implications for building a diverse, equitable and inclusive research workforce.

Years ago some universities had an “honor code.” I think the University of Virginia was one of those dinosaurs. Today professors are using smart software to help them crank out academic hits.

The write up continues by quoting a couple of the study’s authors (presumably without using smart software) as saying:

“These advances raise the possibility that, as AI continues to improve in accuracy, robustness and reach, it may bring even more meaningful benefits to science, propelling scientific progress across a wide range of research areas while significantly augmenting researchers’ innovation capabilities…”

What are the payoffs for the professors who probably take a dim view of their own children using AI to make life easier, faster, and smoother? Let’s look at a handful my team and I discussed:

- More money in the form of pay raises

- Better shot at grants for research

- Fame at conferences

- Groupies. I know it is hard to imagine but it happens. A lot.

- Awards

- Better committee assignments

- Consulting work

When one considers the benefits from babes to bucks, the chit chat about doing better research is of little interest to professors who see virtue in smart software.

The president of Stanford cheated. The head of the Harvard Ethics department appears to have done it. The professors in the study sample did it. The conclusion: Smart software use is normative behavior.

Stephen E Arnold, October 17, 2024

Happy AI News: Job Losses? Nope, Not a Thing

September 19, 2024

This essay is the work of a dumb humanoid. No smart software required.


I read “AI May Not Steal Many Jobs after All. It May Just Make Workers More Efficient.” Immediately two points jumped out at me. The AP (the publisher of the “real” news story) is hedging with the weasel word “may” and the hedgy phrase “after all.” Why is this important? The “real” news industry is interested in smart software to reduce costs and generate more “real” news more quickly. The days with “real” reporters disappearing for hours to confirm with a source are often associated with fiddling around. The costs of doing anything without a gusher of money pumping 24×7 are daunting. The word “efficient” sits in the headline as a digital harridan stakeholder. Who wants that?

The manager of a global news operation reports that under his watch, he has achieved peak efficiency. Thanks, MSFT Copilot. Will this work for production software development? Good enough is the new benchmark, right?

The story itself strikes me as a bit of content marketing which says, “Hey, everyone can use AI to become more efficient.” The subtext is, “Hey, don’t worry. No software robot or agentic thingy will reduce staff. Probably.”

The AP is a litigious outfit even though I worked at a newspaper which “participated” in the business process of the entity. Here’s one sentence from the “real” news write up:

Instead, the technology might turn out to be more like breakthroughs of the past — the steam engine, electricity, the internet: That is, eliminate some jobs while creating others. And probably making workers more productive in general, to the eventual benefit of themselves, their employers and the economy.

Yep, just like the steam engine and the Internet.

When technologies emerge, most go away or become componentized or dematerialized. When one of those hot technologies fails to produce revenues, quite predictable outcomes result. Executives get fired. VC firms do fancy dancing. IRS professionals squint at tax returns.

So far AI has been a “big guys win sort of because they have bundles of cash” and “little outfits lose control of their costs”. Here’s my take:

- Human-generated news is expensive and if smart software can do a good enough job, that software will be deployed. The test will be real time. If the software fails, the company may sell itself, pivot, or run a garage sale.

- When “good enough” is the benchmark, staff will be replaced with smart software. Some of the whiz kids in AI like the buzzword “agentic.” Okay, agentic systems will replace humans with good enough smart software. That will happen. Excellence is not the goal. Money saving is.

- Over time, the ideas of the current transformer-based AI systems will be enriched by other numerical procedures and maybe— just maybe — some novel methods will provide “smart software” with more capabilities. Right now, most smart software just finds a path through already-known information. No output is new, just close to what the system’s math concludes is on point. Right now, the next generation of smart software seems to be in the future. How far? It’s anyone’s guess.

My hunch is that Amazon Audible will suggest that humans will not lose their jobs. However, the company is allegedly going to replace human voices with “audibles” generated by smart software. (For more about this displacement of humans, check out the Bloomberg story.)

Net net: The “real” news story prepares the field for planting writing software in an organization. It says, “Customers will benefit and produce more jobs.” Great assertions. I think AI will be disruptive and in unpredictable ways. Why not come out and say, “If the agentic software is good enough, we will fire people”? Answer: Being upfront is not something those who are not dinobabies do.

Stephen E Arnold, September 19, 2024

Smart Software: More Novel and Exciting Than a Mere Human

September 17, 2024

This essay is the work of a dumb humanoid. No smart software required.


Idea people: What a quaint notion. Why pay for expensive blue-chip consultants or wonder youth from fancy universities? Just use smart software to generate new, novel, unique ideas. Does that sound over the top? Not according to “AIs Generate More Novel and Exciting Research Ideas Than Human Experts.” Wow, I forgot exciting. AI outputs can be exciting to the few humans left to examine the outputs.

The write up says:

Recent breakthroughs in large language models (LLMs) have excited researchers about the potential to revolutionize scientific discovery, with models like ChatGPT and Anthropic’s Claude showing an ability to autonomously generate and validate new research ideas. This, of course, was one of the many things most people assumed AIs could never take over from humans; the ability to generate new knowledge and make new scientific discoveries, as opposed to stitching together existing knowledge from their training data.

Aside from having no job and embracing couch surfing or returning to one’s parental domicile, what are the implications of this bold statement? It means that smart software is better, faster, and cheaper at producing novel and “exciting” research ideas. There is even a chart to prove that the study’s findings are allegedly reproducible. The graph has whisker lines too. I am a believer… sort of.

The magic is a Bonferroni correction, which allegedly copes with the situation in which multiple dependent or independent statistical tests are performed in one meta-calculation. Does it work? Sure, a fancy average is usually close enough for horseshoes, I have heard.
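For readers curious about the mechanics, a Bonferroni correction is less an average than a stricter threshold: with m tests, each p-value is compared against alpha / m, which is the same as multiplying each p-value by m and capping it at 1. The Python sketch below shows the idea. The p-values are made up for illustration and are not taken from the study, and the function name is hypothetical.

```python
def bonferroni(p_values: list[float], alpha: float = 0.05) -> list[tuple[float, bool]]:
    """Apply a Bonferroni correction to a list of raw p-values.

    Returns one (adjusted_p, is_significant) pair per input p-value.
    """
    m = len(p_values)
    results = []
    for p in p_values:
        # Multiply by the number of tests and cap at 1.0, since a
        # probability cannot exceed 1.
        adjusted = min(p * m, 1.0)
        results.append((adjusted, adjusted <= alpha))
    return results


# Hypothetical p-values from three separate tests (illustrative only).
example_p_values = [0.001, 0.02, 0.04]

for raw, (adjusted, significant) in zip(example_p_values, bonferroni(example_p_values)):
    print(f"raw p = {raw:.3f}, adjusted p = {adjusted:.3f}, significant: {significant}")
```

In this made-up case all three raw p-values fall below 0.05, but only the first survives the correction. That is the point of the procedure: the more tests are run, the harder it becomes for any single one to count as a finding.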

Just keep in mind that human judgments are tossed into the results. That adds some of that delightful subjective spice. The proof of the “novelty” creation process, according to the write up, comes from Google. The article says:

…we can’t understate AI’s potential to radically accelerate progress in certain areas – as evidenced by Deepmind’s GNoME system, which knocked off about 800 years’ worth of materials discovery in a matter of months, and spat out recipes for about 380,000 new inorganic crystals that could have revolutionary potential in all sorts of areas. This is the fastest-developing technology humanity has ever seen; it’s reasonable to expect that many of its flaws will be patched up and painted over within the next few years. Many AI researchers believe we’re approaching general superintelligence – the point at which generalist AIs will overtake expert knowledge in more or less all fields.

Flaws? Hallucinations? Hey, not to worry. These will be resolved as the AI sector moves with the arrow of technology. Too bad if some humanoids are pierced by the arrow and die on the shoulder of the uncaring Information Superhighway. What about those who say AI will not take jobs? Have those people talked with an accountant responsible for cost control?

Stephen E Arnold, September 17, 2024

Pay Up Time for Low Glow Apple

September 16, 2024

![green-dino](https://arnoldit.com/wordpress/wp-content/uploads/2024/09/green-dino_thumb_thumb_thumb_thumb_t1_thumb.gif) This essay is the work of a dumb dinobaby. No smart software required.


Who noticed the flip side of Apple’s big AI event? CNBC did. “Apple Loses EU Court Battle over 13 Billion Euro Tax Bill in Ireland” makes clear that the EU regulators were not awed by snappy colors, “to be” AI, and Apple’s push to be the big noise in hearing aids. Nope. The write up reported:

Europe’s top court on Tuesday ruled against Apple in the tech giant’s 10-year court battle over its tax affairs in Ireland. The pronouncement from the European Court of Justice comes hours after Apple unveiled a swathe of new product offerings, looking to revitalize its iPhone, Apple Watch and Air Pod line-ups.

Those new products will need to generate some additional revenue. The monetary penalty ascends to $14 billion. With the penalty packaged as illegal tax benefits, Apple will go through the appeal drill, the PR drill, and the apology drill. The drills may not stop the EU’s desire to scrutinize the behaviors of US high technology companies. It seems that the EU is on a crusade to hold the Big Dogs by their collars, slip on choke chains, and bring the beasts to heel.

An EU official hits a big rock and finds money inside. Thanks, MSFT Copilot. Good enough.

I have worked in a couple of EU countries. I recall numerous comments from my clients and colleagues in Europe who suggested US companies were operating as if they were countries. From these individuals’ points of view, their observations about US high technology outfits were understandable. The US, according to some, refused to hold these firms accountable for what some perceived as ignoring user privacy and outright illegal behavior of one sort or another.

What does the decision suggest?

- Big fines, recoveries, and judgments are likely to become more common

- Regulations to create market space for European start ups and technologies are likely to be forthcoming

- The Wild West behavior, tolerated by US regulators, will not be tolerated.

There is one other possible consequence of this $14 billion number. The penalty is big, even for a high tech money machine like Apple. The size of the number may encourage other countries’ regulators to think big as well. It is conceivable that after years of inaction, even US regulators may be tempted to jump into the big money when judgments go against the high technology outfits.

With Google on the spot for alleged monopolistic activities in the online advertising market, those YouTube ads are going to become more plentiful. Some Googlers may have an opportunity to find their future elsewhere as Xooglers (former Google employees). Freebies may be further curtailed in the Great Chain of Being hierarchy which guides Google’s organizational set up.

I found the timing of the news about the $14 billion number interesting. As the US quivered from the excitement of more AI in candy bar devices in rainbow colors, the EU was looking under the rock. The EU found nerve and a possible pile of money.

Stephen E Arnold, September 16, 2024

Online Gambling Has a Downside, Says Captain Obvious

September 13, 2024

People love gambling, especially when they’re betting on the results of sports. Online access has made sports betting very easy and fun. Unfortunately some people who bet on sports are addicted to the activity. Business Insider reveals the underbelly of online gambling and paints a familiar picture of addiction: “It’s Official: Legalized Sports Betting Is Destroying Young Men’s Financial Futures.” The University of California, Los Angeles shared a working paper about the negative effects of legalized sports gambling:

“…takes a look at what’s happened to consumer financial health in the 38 states that have greenlighted sports betting since the Supreme Court in 2018 struck down a federal law prohibiting it. The findings are, well, rough. The researchers found that the average credit score in states that legalized any form of sports gambling decreased by 0.3% after about four years and that the negative impact was stronger where online sports gambling is allowed, with credit scores dipping in those areas by 1%. They also found an 8% increase in debt-collection amounts and a 28% increase in bankruptcies where online sports betting was given the go-ahead. By their estimation, that translates to about 100,000 extra bankruptcies each year in the states that have legalized sports betting. The number of people who fell dangerously behind on their car loans went up, too. Oddly enough, credit-card delinquencies fell, but the researchers believe that’s because banks wind up lowering credit limits to try to compensate for the rise in risky consumer behavior.”

The researchers discovered that legalized gambling leads to more gambling addictions. They also found if a person lives near a casino or is from a poor region, they’ll be more prone to gambling. This isn’t anything new! The paper restates information people have known for centuries about gambling and other addictions: they hurt finances and lead to destroyed relationships, job loss, increases in illegal activities, etc.

A good idea is to teach people restraint. The sports betting Web sites can program limits and even assist their users to manage their money without going bankrupt. It’s better for people to be taught restraint so they can roll the dice one more time.

Whitney Grace, September 13, 2024

More Push Back Against US Wild West Tech

September 12, 2024

I spotted another example of a European nation state expressing some concern with American high-technology companies. There is no wind-blown corral on Leidsestraat. No Sergio Leone music creeps out the observers. What dominates the scene is a judicial judgment firing a US$35 million fine at Clearview AI. The company has a database of faces, and the information is licensed to law enforcement agencies. What’s interesting is that Clearview does not do business in the Netherlands; nevertheless, the European Union’s data protection act, according to Dutch authorities, has been violated. Ergo: Pay up.

“The Dutch Are Having None of Clearview AI Harvesting Your Photos” reports:

“Following investigation, the DPA confirmed that photos of Dutch citizens are included in the database. It also found that Clearview is accountable for two GDPR breaches. The first is the collection and use of photos….The second is the lack of transparency. According to the DPA, the startup doesn’t offer sufficient information to individuals whose photos are used, nor does it provide access to which data the company has about them.”

Clearview is apparently unhappy with the judgment.

Several observations:

First, the decision is part of what might be called US technology pushback. The Wild West approach to user privacy has to get out of Dodge.

Second, Clearview may be on the receiving end of more fines. The charges may appear to be inappropriate because Clearview does not operate in the Netherlands. Other countries may decide to go after the company too.

Third, the Dutch action may be the first of several actions against US high-technology companies.

Net net: If the US won’t curtail the Wild West activities of its technology-centric companies, the Dutch will.

Stephen E Arnold, September 12, 2024