Big Thoughts On How AI Will Affect The Job Market

March 4, 2025

Every time technology advances, people fear they won’t be able to earn a living. Some jobs disappeared, others emerged, and humans adapted to the changes. We’ll continue to adapt as AI becomes more integral to society. How will we handle the changes?

Anthropic, a big player in the AI field, launched the Anthropic Economic Index to understand AI’s effects on labor markets and the economy. Anthropic claims it is gathering “first-of-its-kind” data from anonymized Claude.ai conversations. This data demonstrates how AI is being incorporated into the economy. The organization is also building an open source dataset that researchers can use to build on its findings. Anthropic surmises that this data will help shape policy on employment and productivity.

Anthropic reported its findings in its first paper:

• “Today, usage is concentrated in software development and technical writing tasks. Over one-third of occupations (roughly 36%) see AI use in at least a quarter of their associated tasks, while approximately 4% of occupations use it across three-quarters of their associated tasks.

• AI use leans more toward augmentation (57%), where AI collaborates with and enhances human capabilities, compared to automation (43%), where AI directly performs tasks.

• AI use is more prevalent for tasks associated with mid-to-high wage occupations like computer programmers and data scientists, but is lower for both the lowest- and highest-paid roles. This likely reflects both the limits of current AI capabilities, as well as practical barriers to using the technology.”

The Register put the Anthropic report in layman’s terms in the article “Only 4 Percent Of Jobs Rely Heavily On AI, With Peak Use In Mid-Wage Roles.” Only 4% of jobs rely heavily on AI, using it for at least 75% of their tasks. Overall, roughly 36% of jobs use AI for at least a quarter of their tasks. Most of these jobs are in software engineering, media, and education/library fields. Physical jobs use AI less. Anthropic also found that 57% of AI use augments human work, while 43% automates tasks.

These numbers make sense based on AI’s advancements and limitations. It’s also common sense that mid-tier wage roles will be affected and not physical or highly skilled labor. The top tier will surf on money; the water molecules are not so lucky.

Whitney Grace, March 4, 2025

Dear New York Times, Your Online System Does Not Work

March 3, 2025

The work of a real, live dinobaby. Sorry, no smart software involved. Whuff, whuff. That’s the sound of my swishing dino tail. Whuff.

I gave up on the print edition of the New York Times because the delivery was terrible. I did not buy the online version because I could get individual articles via the local library. I received a somewhat desperate email last week. The message was, “Subscribe for $4 per month for two years.” I thought, “Yeah, okay. How bad could it be?”

Let me tell you it was bad, very bad.

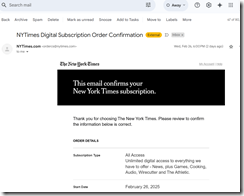

I signed up, spit out my credit card and received this in my email:

The subscription was confirmed on February 26, 2025. I tried to log in on the 27th. The system said, “Click here to receive an access code.” I did. In fact, I clicked for the code three times. No code on the 27th.

Today is the 28th. I tried again. I entered my email and saw the “click here” prompt for the access code. No code. I clicked four times. No code sent.

Dispirited, I called the customer service number. I spoke to two people. Both professionals told me they were sending the codes to my email. No codes arrived.

Guess what? I gave up and cancelled my subscription. I learned that I had to pay $4 for the privilege of being told my email was not working.

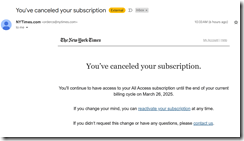

That was baloney. How do I know? Look at this screenshot:

The estimable newspaper was able to send me a notice that I cancelled.

How screwed up is the New York Times’ customer service? Answer: A lot. Two different support professionals told me I was not logged into my email. Therefore, I was not receiving the codes.

How screwed up are the computer systems at the New York Times? Answer: A lot, no, a whole lot.

I don’t think anyone at the New York Times knows about this issue. I don’t think anyone cares. I wonder how many people like me tried to buy a subscription and found that cancellation was the only viable option to escape automated billing for a service the buyer could not access.

Is this intentional cyber fraud? Probably not. I think it is indicative of poor management, cost cutting, and information technology that is just good enough. By the way, how can you send a confirmation and a cancellation to my email and NOT send me the access code? Answer: Ineptitude in action.

Well, hasta la vista.

Stephen E Arnold, March 3, 2025

Curricula Ideas That Will Go Nowhere Fast

February 28, 2025

No smart software. Just a dinobaby doing his thing.

I read “Stuff You Should Have Been Taught in College But Weren’t.” The essay reveals a young person who has some dinobaby notions. Good for Casey Handmer, PhD. Despite his brush with Hyperloop, he has retained an ability to think clearly about education. Caltech and the JPL have shielded him from some intellectual cubby holes.

So why am I mentioning the “Stuff You Should Have…” essay and the author? I found the write up in line with thoughts my colleagues and I have shared. Let me highlight a few of Dr. Handmer’s “Should haves” despite my dislike for “woulda coulda shoulda” as a mental bookshelf.

The write up says:

in the sorts of jobs you want to have, no-one should have to spell anything out for you.

I want to point out that the essay may not be appropriate for a person who seeks a job washing dishes at the El Nopal restaurant on Goose Creek Road. The observation strikes me as appropriate for an individual who seeks employment at a high-performing organization or an aspiring “performant” outfit. (I love the coinage “performant”; it is very with it.)

What are other dinobaby-in-the-making observations in the write up? I have rephrased some of the comments, and I urge you to read the original essay. Here goes:

- Do something tangible to demonstrate your competence. Doom scrolling and watching TikTok-type videos may not do the job.

- Offer proof you deliver value in whatever you do. I am referring to “good” actors, not “bad” actors selling Telegram and WhatsApp hacking services on the Dark Web. “Proof” is verifiable facts, a reference from an individual of repute, or demonstrating a bit of software posted on GitHub or licensed from you.

- Watch, learn, and act in a way that benefits the organization, your colleagues, and your manager.

- Change jobs to grow and demonstrate your capabilities.

- Suck it up, buttercup. Life is a series of challenges. Meet them. Deliver value.

I want to acknowledge that not all dinobabies exhibit these traits as they toddle toward the holding tank for the soon-to-be-dead. However, for an individual who wants to contribute and grow, the ideas in this essay are good ones to consider and then implement.

I do have several observations:

- The percentage of a cohort who can consistently do and deliver is very small. Excellence is not for everyone. This has significant career implications unless you have a lot of money, family connections, or a Hollywood glow.

- Most of the young people with whom I interact say they have these or similar qualities. Then their own actions prove they don’t. Here’s an example: I met a business school dean. I offered to share some ideas relevant to the job market. I gave him my card because he forgot his cards. He never emailed me. I contacted him and said politely, “What’s up?” He double talked and wanted to meet up in the spring. What does that tell me about this person’s work ethic? Answer: Loser.

- Universities and other formal training programs struggle even when the course material and the teacher are on point. The “problem” begins before the student shows up in class. The impact of family stress on a person creates a hothouse of sorts. What grows in the hortorium? Species with an inability to concentrate, a pollen that cannot connect with an ovule, and a baked-in confusion of “I will do it” and “doing it.”

Net net: This dinobaby is happy to say that Dr. Handmer will make a very good dinobaby some day.

Stephen E Arnold, February 28, 2025

Tales of Silicon Valley Management Method: Perceived Cruelty

February 21, 2025

A dinobaby post. No smart software involved.

I read an interesting write up. Is it representative? A social media confection? A suggestion that one of the 21st century’s masters of the universe harbors a Vlad the Impaler behavior? I don’t know. But the article “Laid-Off Meta Employees Blast Zuckerberg for Running the Cruelest Tech Company Out There As Some Claim They Were Blindsided after Parental Leave” caught my attention. Note: This is a paywalled write up and you have to pay up.

Straight away I want to point out:

- AI does not have organic carbon based babies — at least not yet

- AI does not require health care — routine maintenance but the down time should be less than a year

- AI does not complain on social media about its gradient descents and Bayesian drift — hey, some do like the new “I remember” AI from Google.

Now back to the write up. I noted this passage:

Over on Blind, an anonymous app for verified employees often used in the tech space, employees are noting that an unseasonable chill has come over Silicon Valley. Besides allegations of the company misusing the low-performer label, some also claimed that Meta laid them off while they were taking approved leave.

Yep, a social media business story.

There are other tech giants in the story, but one is cited as a source of an anonymous post:

A Microsoft employee wrote on Blind that a friend from Meta was told to “find someone” to let go even though everyone was performing at or above expectations. “All of these layoffs this year are payback for 2021–2022,” they wrote. “Execs were terrified of the power workers had [at] that time and saw the offers and pay at that time [are] unsustainable. Best way to stop that is put the fear of god back in the workers.”

I think that a big time, mainstream business publication has found a new source of business news: Employee complaint forums.

In the 1970s I worked with a fellow who was a big time reporter for Fortune. He ended up at the blue chip consulting firm helping partners communicate. He communicated with me. He explained how he tracked down humans, interviewed them, and followed up with experts to crank out enjoyable fact-based feature stories. He seemed troubled that the approach at a big time consulting firm was different from that of a big time magazine in Manhattan. He had an attitude, and he liked spending months working on a business story.

I recall him because he liked explaining his process.

I am not sure the story about the cruel Zuckster would have been one that he would have written. What’s changed? I suppose I could answer the question if I prowled social media employee grousing sites. But we are working on a monograph about Telegram, and we are taking a different approach. I suppose my method is closer to what my former colleague did in his Fortune days, reduced like a French sauce by the approach I learned at the blue chip consulting firm.

Maybe I should give social media research, anonymous sources, and something snappy like cruelty a try to enliven our work? Nah, probably not.

Stephen E Arnold, February 21, 2025

Now I Get It: Duct Tape Jobs Are the Problem

February 19, 2025

A dinobaby post. No smart software involved.

“Is Ops a Bullsh&t Job?” appears to address the odd world of fix-it people who work on systems of one sort or another. The focus in the write up is on software, but I think the essay reveals broader insight into work today. First, let’s look at a couple of statements in this very good essay, and, second, turn our attention briefly to the non-software programming sector.

I noted this passage attributed to an entity allegedly named Pablo:

Basically, we have two kinds of jobs. One kind involves working on core technologies, solving hard and challenging problems, etc. The other one is taking a bunch of core technologies and applying some duct tape to make them work together. The former is generally seen as useful. The latter is often seen as less useful or even useless, but, in any case, much less gratifying than the first kind. The feeling is probably based on the observation that if core technologies were done properly, there would be little or no need for duct tape.

The distinction strikes me as important. The really good programmers work on the “core” part of a system. A number of companies embrace this stratification of the allegedly most talented programmers and developers. This is a spin on what my seventh grade teacher called a “caste system.” I do remember thinking, “It is very important to get to the top of the pyramid; otherwise, life will be a chore.”

Another passage warranted a blue circle:

A “duct taper” is a role that only exists to solve a problem that ought not to exist in the first place.

The essay then provides some examples. Here are three from the essay:

- “My job was to transfer information about the state’s oil wells into a different set of notebooks than they were currently in.”

- “My day consisted of photocopying veterans’ health records for seven and a half hours a day. Workers were told time and again that it was too costly to buy the machines for digitizing.”

- “I was given one responsibility: watching an in-box that received emails in a certain form from employees in the company asking for tech help, and copy and paste it into a different form.”

Good stuff.

With that as background, here’s what I think the essay suggests.

The reason so many gratuitous changes, lousy basic services, and fights at youth baseball games are evident is a lack of meaningful work. Undertaking a project that the person and everyone around him or her know is meaningless creates a persistent sense of unease.

How is this internal agitation manifested? Let me identify several examples from my experiences this week. None is directly “technical,” but lurking in the background is the application of information to a function. When that information is distorted by the duct tape wrapped around a sensitive area, this is what happens in real life.

First, I had to get a tax sticker for my license plate. The number of people at the state agency was limited. More people entered than left. The cycle time for a sticker issuing professional was about 75 minutes. When I reached the desk of the SIP, I presented my documents. I learned that my proof of insurance was a one-page summary of the policy I had on my auto. I learned, “We can only accept insurance cards. This is a sheet of paper, not a card. You come back when you have the card. Next.” Nifty. Duct tape wrapped around a procedure that required a policy number and the name of the insurance provider.

Second, I bought three plastic wrapped packages of bottled water. I picked up a quart of milk. I put a package of raisins in my basket. I went through the self check out because no humans worked at the check out jobs at the time I visited. I scanned my items and placed them on the “Put purchases here” area. I inserted my credit card and the system honked and displayed, “Stay here a manager is coming.” Okay, I stayed there and noted that the other three self check outs had similar messages and honks coming from those self check out systems. I watched as a harried young person tried to determine if each of the four customers had stolen items. The fix he implemented was to have the four of us rescan the items. My system honked. My milk was not in the store’s system as a valid product. He asked me to step aside, and he entered the product number manually. Success for him. Utter failure for the grocery store.

Third, I picked up two shirts from the cleaners. I like my shirts with heavy starch. The two shirts had no starch. The young person had no idea what to do. I said, “Send the shirts through the process again and have your colleagues dip them in starch.” The young worker told me, “We can’t do that. You have to pay the bill and then I will create a new work order.” Sorry. I paid the bill and went to another company’s store.

I am not sure these are duct tape jobs. If I needed money, I would certainly do the work and try to do my best. The message in the essay is that there are duct tape jobs. I disagree. The worker sees the job as beneath him or her and does not put physical, emotional, or intellectual effort in providing value to the employer or the customer.

Instead we get silly interface changes in Windows. We get truly stupid explanations about why a policy number cannot be entered from a sheet of paper, not a “card.” We get non-functioning check out systems and employees who don’t say, “Come to the register. I will get these processed and you out of here as fast as I can.”

Duct tape in the essay is about software. I think duct tape is a mind set issue. Use duct tape to make something better.

Stephen E Arnold, February 19, 2025

IBM Faces DOGE Questions?

February 17, 2025

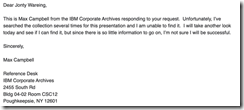

Simon Willison reminded us of the famous IBM internal training document that reads: “A Computer Can Never Be Held Accountable.” The document is also relevant for AI algorithms. Unfortunately the document has a mysterious history and the IBM Corporate Archives don’t have a copy of the presentation. A Twitter user with the name @bumblebike posted the original image. He said he found it when he went through his father’s papers. Unfortunately, the presentation with the legendary statement was destroyed in a 2019 flood.

I believe the image was first shared online in this tweet by @bumblebike in February 2017. Here’s where they confirm it was from 1979 internal training.

Here’s another tweet from @bumblebike from December 2021 about the flood:

“Unfortunately destroyed by flood in 2019 with most of my things. Inquired at the retirees club zoom last week, but there’s almost no one the right age left. Not sure where else to ask.”

We don’t need the actual IBM document to know that IBM hasn’t done well when it comes to search. IBM, like most firms, tried and sort of fizzled. (Remember Data Fountain or CLEVER?) IBM also moved into content management. Yep, the semi-Xerox, semi-information thing. But the good news is that a time sharing solution called Watson is doing pretty well. It’s not winning Jeopardy!, but it is chugging along.

Now IBM professionals in DC have to answer the DOGE nerd squad’s questions? Why not give OpenAI a whirl? The old Jeopardy! winner is kicking back. DOGE wants to know.

Whitney Grace, February 17, 2025

The Thought Process May Be a Problem: Microsoft and Copilot Fees

February 4, 2025

Yep, a dinobaby wrote this blog post. Replace me with a subscription service or a contract worker from Fiverr. See if I care.

Here’s a connection to consider. On one hand, we have the remarkable attack surface of Microsoft software. Think SolarWinds. Think about the US government’s note to fix security. Think about the flood of bug fixes to make Microsoft software secure. Think about the happy bad actors gleefully taking advantage of what is the equivalent of a piece of chocolate cake left on a picnic table in Iowa in July.

Now think about the marketing blast that kicked off the “smart software” revolution. Google flashed its weird yellow and red warning lights. Sam AI-Man began thinking in terms of trillions of dollars. Venture firms wrote checks like it was 1999 again. Even grade school students are using smart software to learn about George Washington crossing the Delaware.

And where are we? ZDNet published an interesting article which may have the immediate effect of generating some negative vibes about Microsoft. But, to ZDNet’s credit, it ran the write up “The Microsoft 365 Copilot Launch Was a Total Disaster.” I want to share some comments from the write up before I return to the broader notion that the “thought process” is THE Microsoft problem.

I noted this passage:

Shortly after the New Year, someone in Redmond pushed a button that raised the price of its popular (84 million paid subscribers worldwide!) Microsoft 365 product. You know, the one that used to be called Microsoft Office? Yeah, well, now the app is called Microsoft 365 Copilot, and you’re going to be paying at least 30% more for that subscription starting with your next bill.

How about this statement:

No one wants to pay for AI

Some people do, but these individuals do not seem to be the majority of computing device users. Furthermore there are some brave souls suggesting that today’s approach to AI is not improving as the costs of delivering AI continue to rise. Remember those Sam AI-Man trillions?

Microsoft is not too good with numbers either. The article walks through the pricing and cancellation functions. Here’s the key statement after explaining the failure to get the information consistent across the Microsoft empire:

It could be worse, I suppose. Just ask the French and Spanish subscribers who got a similar pop-up message telling them their price had gone from €10 a month to €13,000. (Those pesky decimals.)

Yep, details. Let’s go back to the attack surface idea. Microsoft’s corporate thought process creates problems. I think the security and Copilot examples make clear that something is amiss at Microsoft. The engineering of software and the details of that engineering are not a priority.

That is the problem. And, to me, it sure seems as though Microsoft’s worst characteristics are becoming the dominant features of the company. Furthermore, I believe that the organization cannot remediate itself. That is very concerning. Not only have users lost control, but the firm is unconsciously creating a greater set of problems for many people and organizations.

Not good. In fact, really bad.

Stephen E Arnold, February 4, 2025

Two Rules for Software. All Software If You Can Believe It

January 31, 2025

Did you know that there are two rules that dictate how all software is written? No, we didn’t either. FJ van Wingerde from the Ask The User blog states and explains the rules in his post “The Two Rules Of Software Creation From Which Every Problem Derives.” After a bunch of jib jab about the failures of different codes, van Wingerde states the rules:

“It’s the two rules that actually are behind every statement in the agile manifesto. The manifesto unfortunately doesn’t name them really; the people behind it were so steeped in the problems of software delivery—and what they thought would fix it—that they posited their statements without saying why each of these things are necessary to deliver good software. (Unfortunately, necessary but not enough for success, but that we found out in the next decades.) They are [1] Humans cannot accurately describe what they want out of a software system until it exists. and [2] Humans cannot accurately predict how long any software effort will take beyond four weeks. And after 2 weeks it is already dicey.”

The first rule holds for many human activities, though perhaps not the part about being unable to describe the problem accurately. That part may be true for software, however. Humans know they have a problem, but they don’t have a solution to fix it. The smart humans figure out how to solve the problem and learn how to describe it with greater accuracy.

As for number two, are project management and weekly software maintenance all lucky guesses, then? Perhaps effort changes daily, and that justifies paying software developers. Then again, someone needs to keep the systems running. Tech people are what keep businesses running, not to mention the entire world.

If software development only has these two rules, we now know why developers cannot provide time estimates or provide assurances that their software works as leadership trained as accountants and lawyers expect. Rest easy. Software is hopefully good enough, and advertising can cover the costs.

Whitney Grace, January 31, 2025

Happy New Year the Google Way

January 31, 2025

We don’t expect Alphabet Inc. to release anything but positive news these days. Business Standard reports another revealing headline, especially for Googlers in the story: “Google Layoffs: Sundar Pichai Announced 10% Job Cuts In Managerial Roles.” After a huge push in the wake of wokeness to hire underrepresented groups, aka DEI hires, Google has slowly been getting rid of its deadweight employees. That is what Alphabet Inc. probably calls them.

DEI hires were the first to go. Now, in the last vestiges of Google’s 2024 push for efficiency, 10% of its managerial positions are going bye-bye. Among those positions are directors and vice presidents. CEO Sundar Pichai says the push for downsizing also comes from bigger competition from AI companies, such as OpenAI. These companies are challenging Google’s dominance in the tech industry.

Pichai started the efficiency push in 2022 when people were starting to push back against the ineffectiveness of DEI hires, especially when their budgets were shrunk by inflation. In January 2023, 12,000 employees were laid off. Pichai is also changing the meaning of “Googleyness”:

“At the same meeting, Pichai introduced a refined vision for ‘Googleyness’, a term that once broadly defined the traits of an ideal Google employee but had grown too ambiguous. Pichai reimagined it with a sharper focus on mission-driven work, innovation, and teamwork. He emphasized the importance of creating helpful products, taking bold risks, fostering a scrappy attitude, and collaborating effectively. “Updating modern Google,” as Pichai described it, is now central to the company’s ethos.”

The new spin on being Googley. Enervating. A month into the bright new year, let me ask a non-Googley question: “How are those job searches, bills, and self esteem coming along?”

Whitney Grace, January 31, 2025

The Joust of the Month: Microsoft Versus Salesforce

January 29, 2025

These folks don’t seem to see eye to eye: Windows Central tells us, “Microsoft Claps Back at Salesforce—Claims ‘100,000 Organizations’ Had Used Copilot Studio to Create AI Agents by October 2024.” Microsoft’s assertion is in response to jabs from Salesforce CEO Marc Benioff, who declares, “Microsoft has disappointed everybody with how they’ve approached this AI world.” To support this allegation, Benioff points to lines from a recent MarketWatch post. A post which, coincidentally, also lauds his company’s success with AI agents. The smug CEO also insists he is receiving complaints about his giant competitor’s AI tools. Writer Kevin Okemwa elaborates:

“Benioff has shared interesting consumer feedback about Copilot’s user experience, claiming customers aren’t finding themselves transformed while leveraging the tool’s capabilities. He added that customers barely use the tool, ‘and that’s when they don’t have a ChatGPT license or something like that in front of them.’ Last year, Salesforce’s CEO claimed Microsoft’s AI efforts are a ‘tremendous disservice’ to the industry while referring to Copilot as the new Microsoft Clippy because it reportedly doesn’t work or deliver value. As the AI agent race becomes more fierce, Microsoft has seemingly positioned itself in a unique position to compete on a level playing field with key players like Salesforce Agentforce, especially after launching autonomous agents and integrating them into Copilot Studio. Microsoft claims over 100,000 organizations had used Copilot Studio to create agents by October 2024. However, Benioff claimed Microsoft’s Copilot agents illustrated panic mode, majorly due to the stiff competition in the category.”

One notable example, writes Okemwa, is Zuckerberg’s vision of replacing Meta’s software engineers with AI agents. Oh, goodie. This anti-human stance may have inspired Benioff, who is second-guessing plans to hire live software engineers in 2025. At least Microsoft still appears to be interested in hiring people. For now. Will that antiquated attitude hold the firm back, supporting Benioff’s accusations?

Mount your steeds. Fight!

Cynthia Murrell, January 29, 2025